Computer Networking

Shortcut to this page: ntrllog.netlify.app/networking

Info provided by Computer Networking: A Top-Down Approach and Professor Polly Huang

What is the Internet?

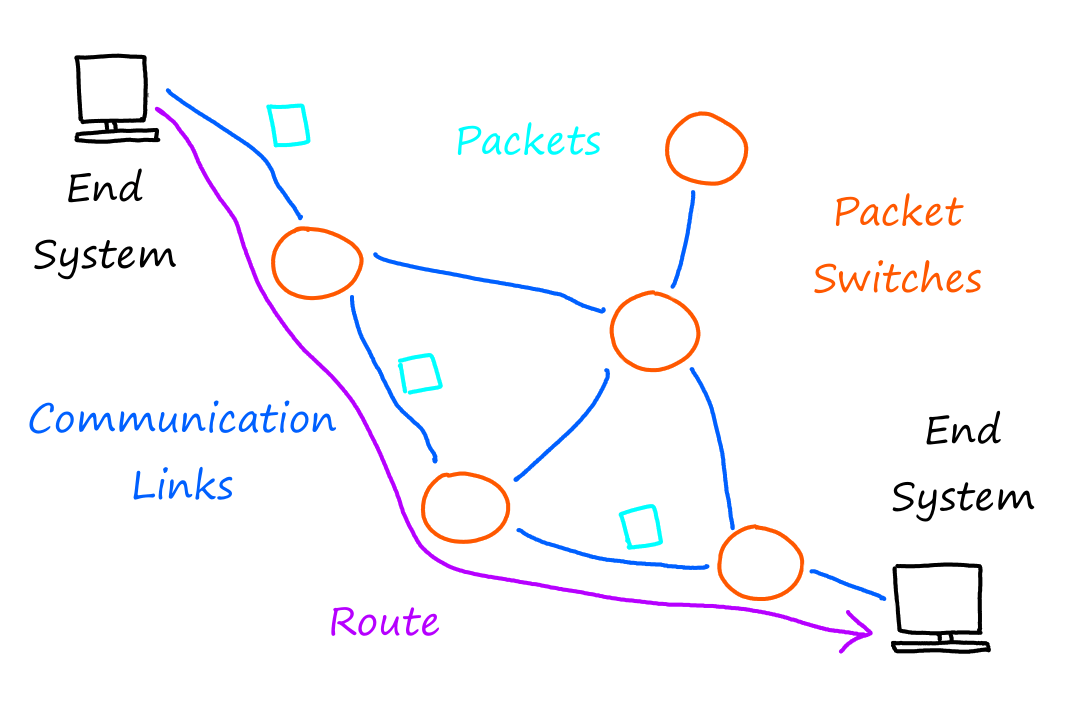

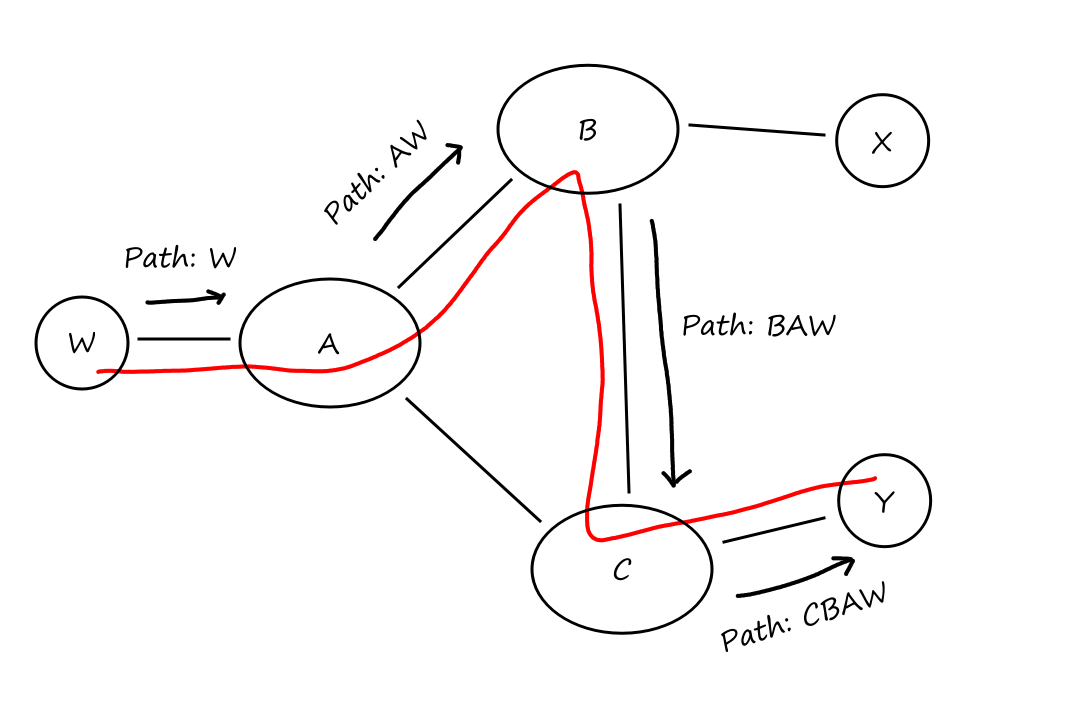

Computers. Laptops. Smartphones. TVs. Watches. And everything else that has the word "smart" in front of it. All of these things that can connect to the Internet are called hosts or end systems. End systems send and receive data through a system of communcation links and packet switches. The data travels through this system as little pieces called packets and they are reassembled when they reach their destination. Packet switches are machines that help transfer these packets. The whole path that a packet takes is called a route or path.

A router is a type of packet switch. The two terms may be used interchangeably (by me).

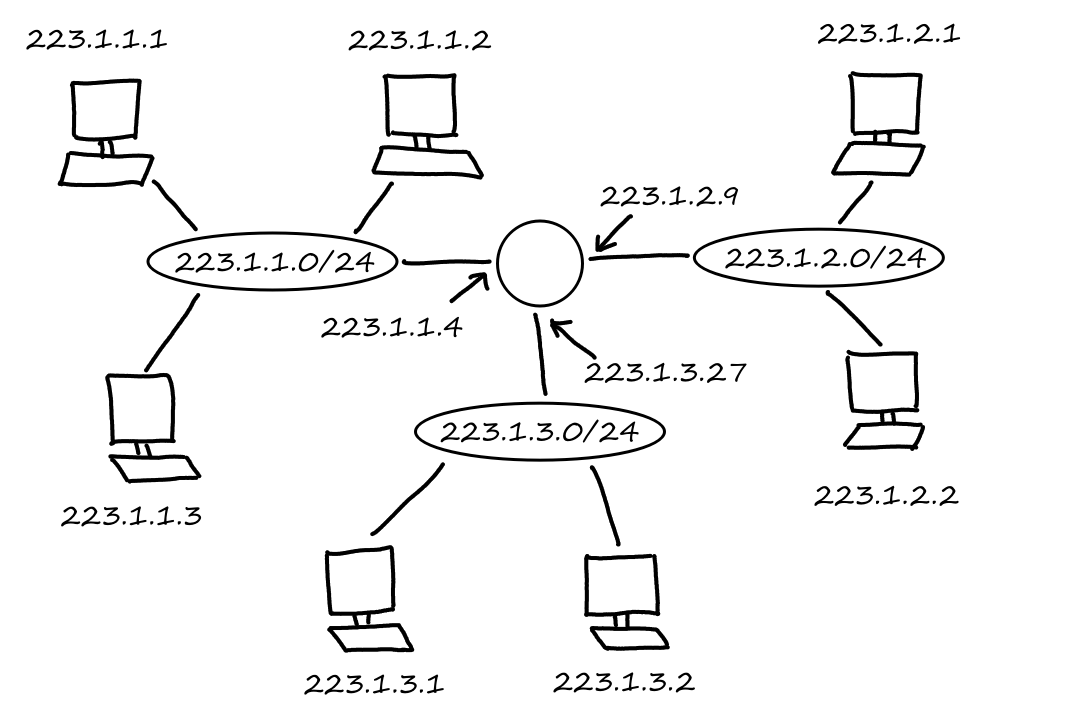

I believe the shape I'll be using for all packet switches is a circle/oval. And links will be just a line connecting the circles.

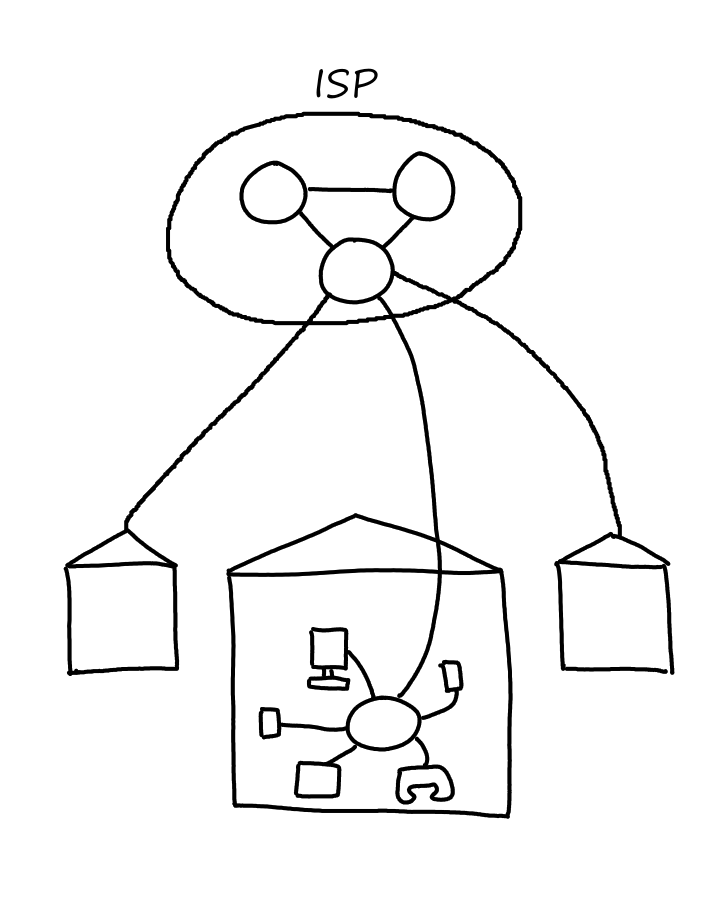

Access to the Internet is provided by Internet Service Providers (ISPs). Think ATT and Spectrum for example. ISPs themselves are a network of packet switches and communication links.

What is a Protocol?

All the devices involved with sending and receiving packets run protocols, which are a set of rules defining:

- how messages should be formatted

- the order of the messages exchanged

- the actions that can be performed when the messages are received or transmitted

The protocols are defined by Internet standards, which are developed by the Internet Engineering Task Force (IETF). The actual documents are called requests for comments (RFCs).

When we go to a URL on our browser, the actions that occur are a result of following a protocol (the TCP protocol).

- Computer sends a request to connect to the Web server and waits for a reply

- Web server receives the connection request and returns a "connection okay" message

- Computer sends the name of the Web page we want

- Web server sends it to our computer

Livin' on the Edge

The word "end system" is used to describe devices that connect to the Internet because they exist at the "edge" of the Internet.

Access Networks

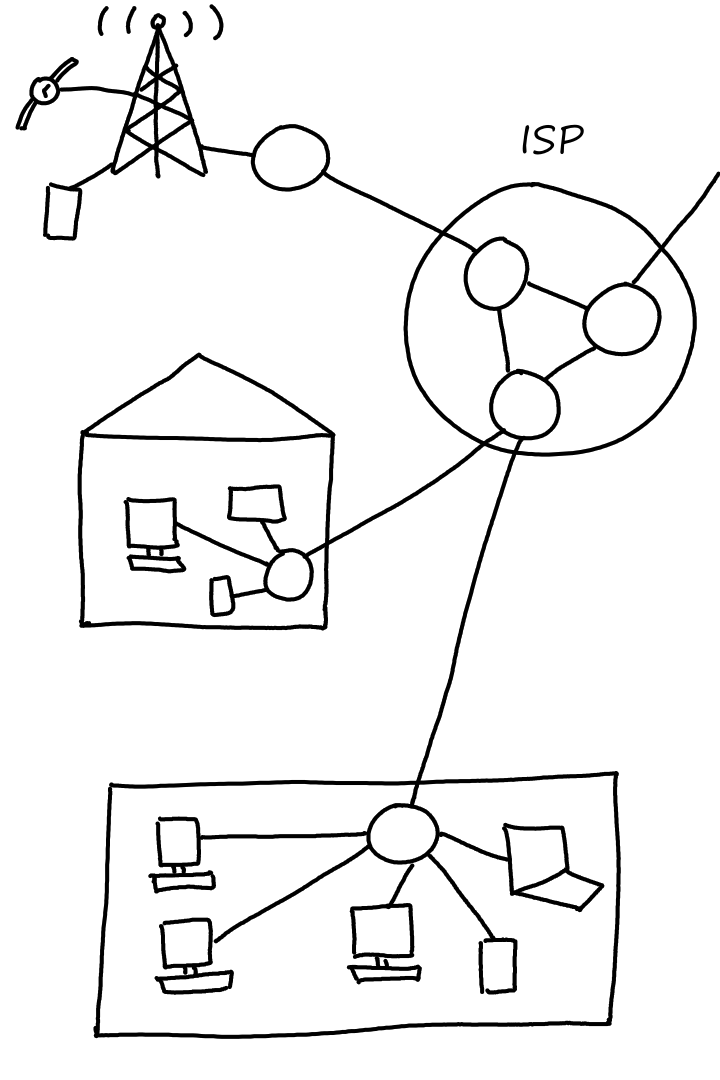

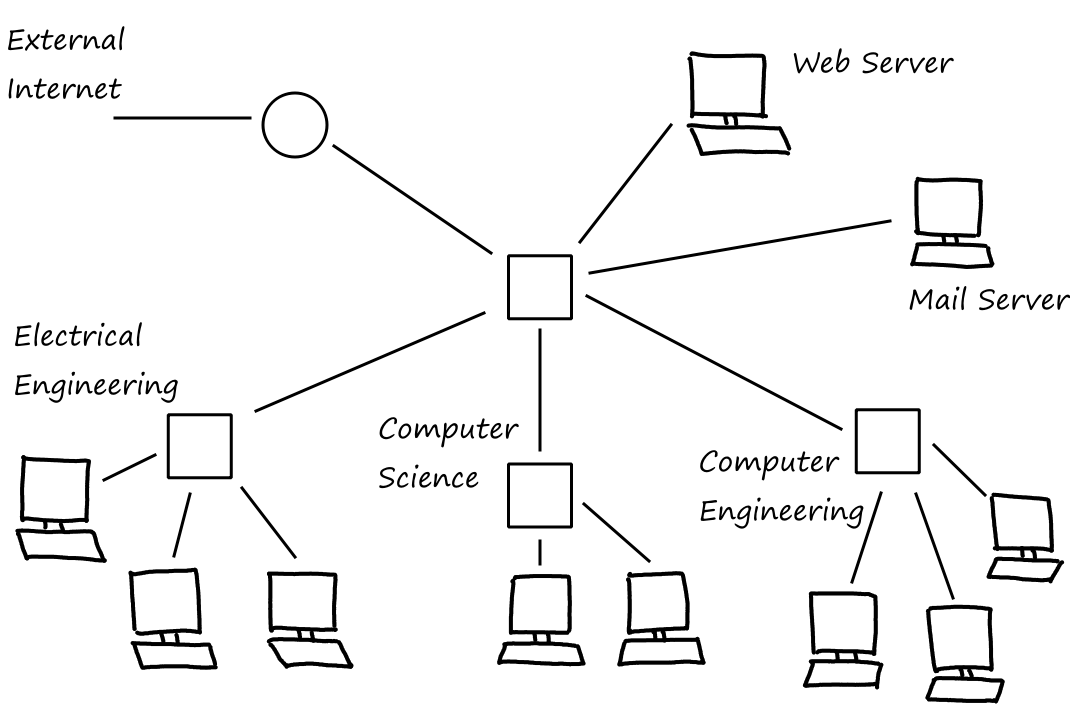

The access network is the network where end systems connect to the first router in the path to the ISP. (There may be many routers in between the ISP and a home. The first router is the one that's actually in the home.) Basically, an access network is a network on the edge of the Internet.

Home Access: What's the WiFi Password?

The home network will probably be the easiest for everyone to relate to. All our phones, laptops, tablets, computers, and everything else that connect to the WiFi in our homes form a home network.

Two of the most popular ways to get Internet access are by a digital subscriber line (DSL) (think telephone lines and telephone companies) and cable.

Wide-Area Wireless Access: Unlimited Talk, Text, and Web For Only $ Per Month!

When our phones aren't connected to WiFi, it uses mobile data, which is Internet provided by cellular network providers through base stations (cell towers?). This is where the terms 3G, 4G, 5G, and LTE come into play. (6G doesn't exist yet at the time of this writing.)

The Network Core

The network core is, well, the part of the Internet that is not at the edge. This is the part of the Internet that our router connects to — out to where our Internet access comes from.

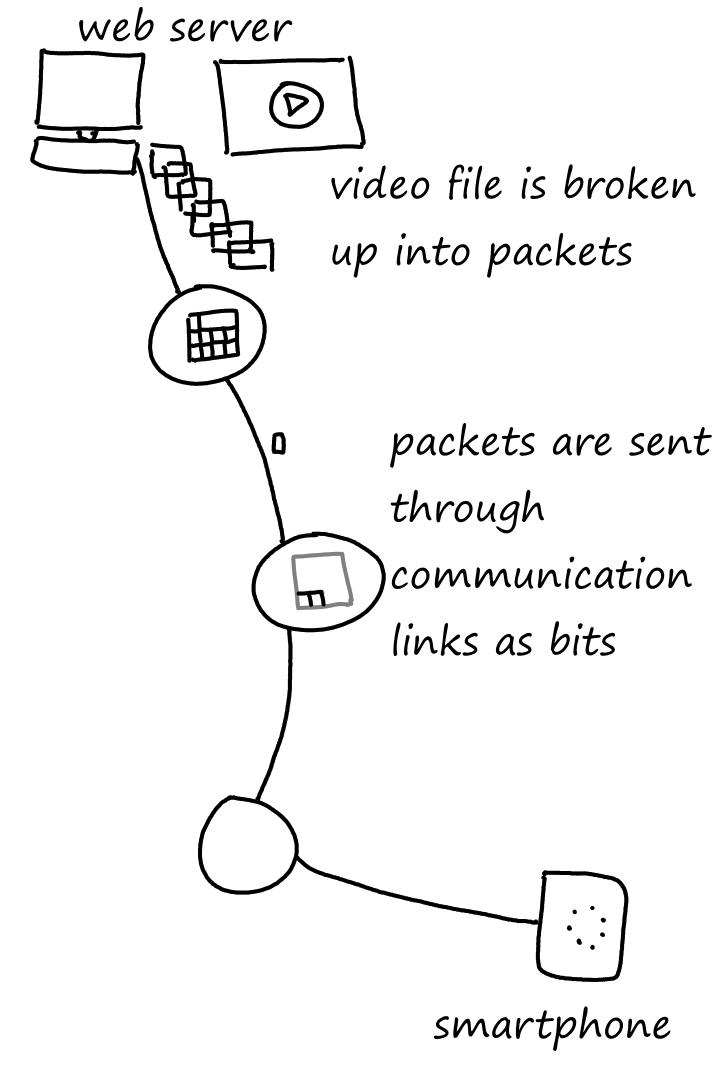

Packet Switching

The data that the end systems send and receive are called messages. Messages can be any type of data, such as emails, pictures, or audio files. When going from their source to their destination, messages are broken up into packets, which are transferred from packet switch to packet switch. From packet switch to packet switch, each packet is broken up into bits and sent across the communication link connecting the two packet switches.

No Packet Left Behind

Most packet switches use store-and-forward transmission. This means that the packet switch waits until it receives the entire packet before it starts sending the first bit of the packet to the next location.

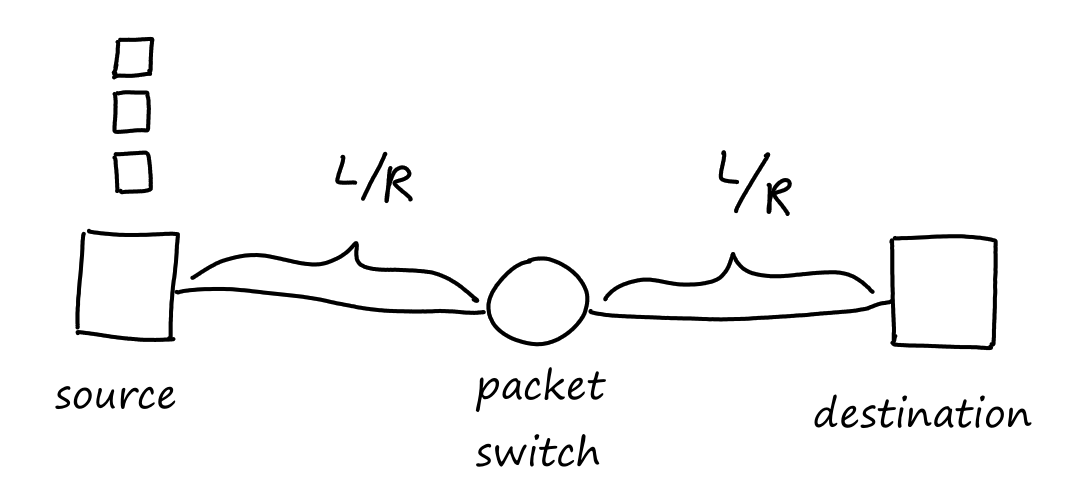

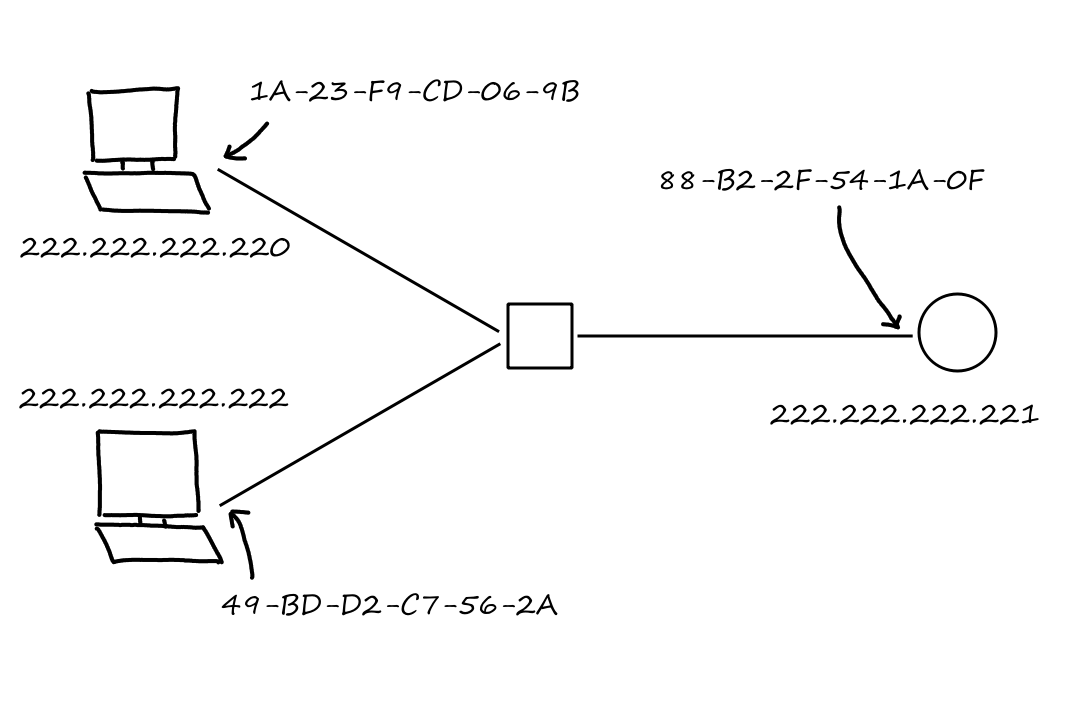

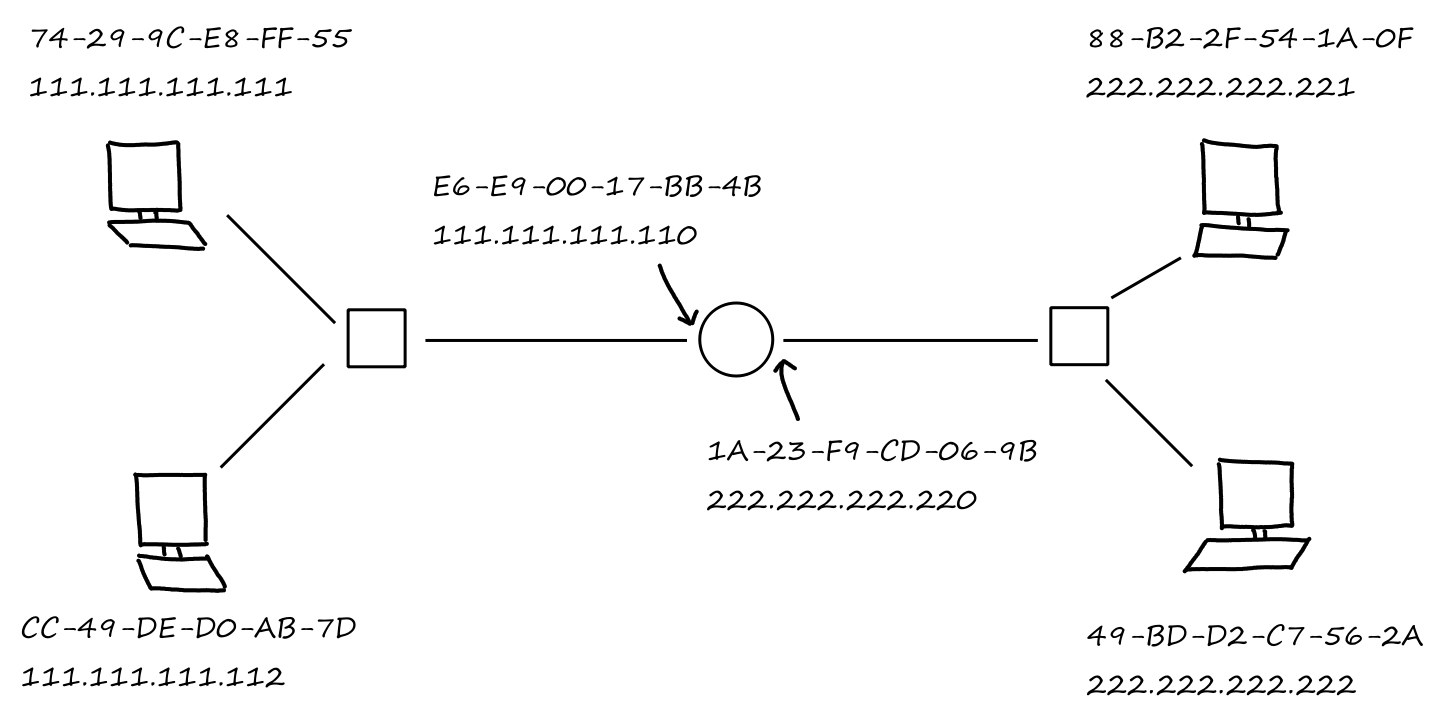

Consider a simple network of two end systems and one packet switch. The source has three packets to send to the destination.

Let's say each packet has bits and that each communication link can transfer bits per second. Then the total time it takes to transfer one packet across one communication link is seconds.

So the time it takes to transfer the packet from source to destination is .

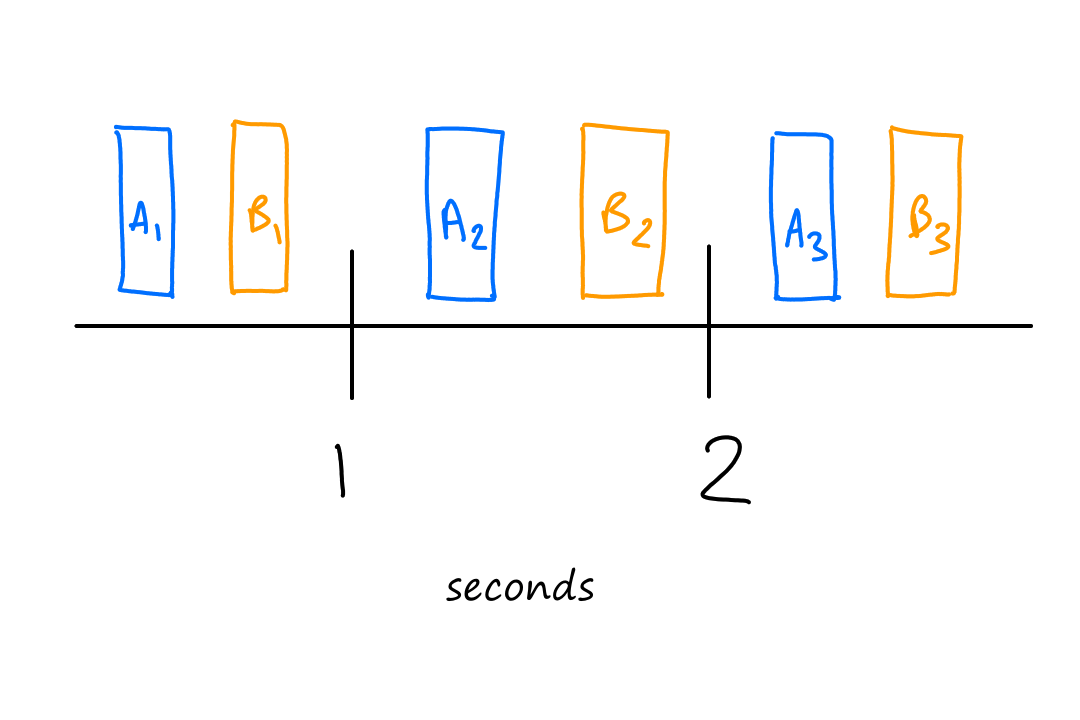

How long does it take to transfer all three packets from source to destination? At seconds, the packet switch receives all of the first packet. At this time, while the packet switch starts sending bits of the first packet to the destination, it also starts receiving bits of the second packet from the source.

At seconds, the first packet arrives at the destination and the second packet arrives at the packet switch.

At seconds, the second packet arrives at the destination and the third packet arrives at the packet switch.

At seconds, the third packet arrives at the destination.

Let's generalize this for communication links and packets.

It's helpful to think about this from the point of view of the last packet.

First, the last packet has to wait for the packets ahead of it to be transmitted; this takes time.

Then it will be transmitted onto the link, which takes time. Since there are links, the total time the last packet will travel is .

So the total time it takes packets to travel across communication links is .

Besides store-and-forward, there's also cut-through switching, in which the switch starts sending bits before the whole packet is received.

Queuing Delays

Realistically, a packet switch has more than two links. For each link in the packet switch, there is an output buffer (a.k.a. output queue) where the packets wait if the link is busy. This can happen if the transmission rate of the link is slower than the arrival rate of the packets, i.e., the packets are coming in faster than the packet switch can send them out.

Okay, Some Packets Left Behind

Since the buffer can only be so big, if there isn't enough room for more incoming packets, some packets will be dropped, resulting in packet loss. Depending on the implementation, either the arriving packet or one of the packets in the buffer is dropped.

Forwarding Tables

Again, packet switches usually have multiple links. So how does the packet switch know which link to send the packet to? The answer is that each packet switch has a forwarding table that lists the destination of each link. When a packet arrives, the packet switch examines the packet to see its destination and searches it up in the packet's forwarding table. The table tells the packet switch which link to use to get to the destination.

Circuit Switching

With packet switching, the packets are sent and if there is a lot of traffic, then oh well. Maybe the data will take a long time to send — or worse — get dropped. To avoid this, we can reserve the resources ahead of time. This way, one connection won't be competing with other connections, i.e., the connection will have the links to itself. (Imagine being the only one on the road and no other cars are allowed to be on the road with you!)

With circuit switching, each link has a number of circuits. One circuit per link is required for communication between two end systems. So a link with, for example, four circuits can support four different connections at the same time. However, each connection only gets a fraction of the link's transmission rate.

Suppose we have a link with four circuits and a transmission rate of Mbps. Then each circuit can only transfer data at a rate of Mbps, which is kbps.

Frequency-Division Multiplexing

Multiplexing is sending multiple signals as one big signal.

Multiple end systems can all use one link to transfer data if there are enough circuits. Each end system will be transferring different data, but they all get transferred over as "one big data" through the link. The way to differentiate which data came from which end system is to divide the link into different frequencies, such as in the example above.

Radio uses FDM. We can tune in to a specific frequency to listen to the station we want.

Time-Division Multiplexing

With time-division multiplexing, the end systems get to use all of the frequency to transfer data, but only for a limited amount of time.

Suppose there are two end systems ( and ) communicating with two other end systems using the same link. Let's say they're only allowed -second intervals to send their data. So from to seconds, part of the data from and part of the data from gets sent. From to seconds, part of the data from and part of the data from gets sent. And this repeats for every second.

More formally, time is divided into frames, and each frame is divided into slots. Once a connection between two end systems is established, one slot in every frame is reserved just for that connection.

If a link transmits frames per second and each slot consists of bits, then the transmission rate of each circuit is bits per second kbps. The transmission rate of the whole link is (I think).

Suppose that all links in a network use TDM with slots and can transfer data at a rate of Mbps. Then each circuit has a transmission rate of kbps.

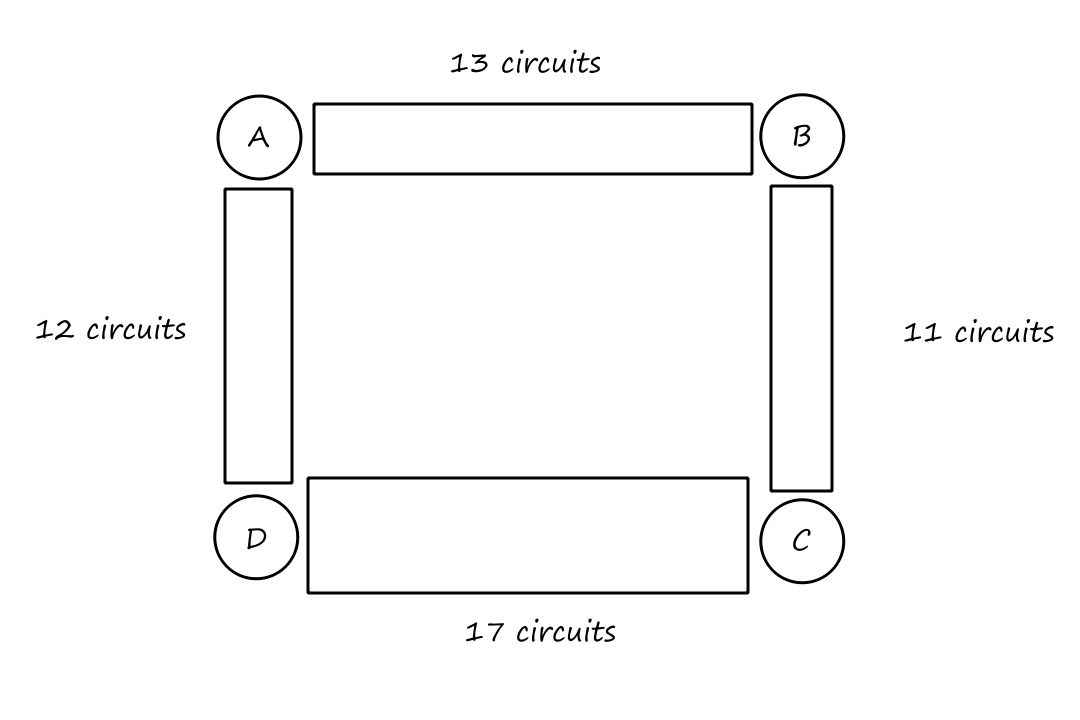

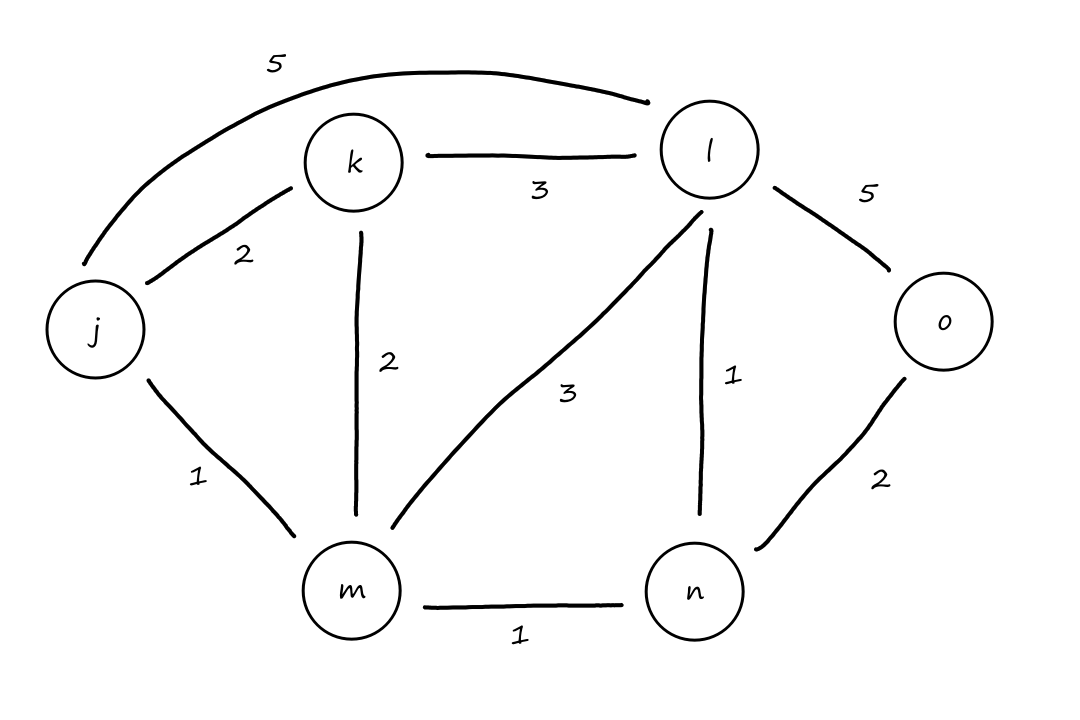

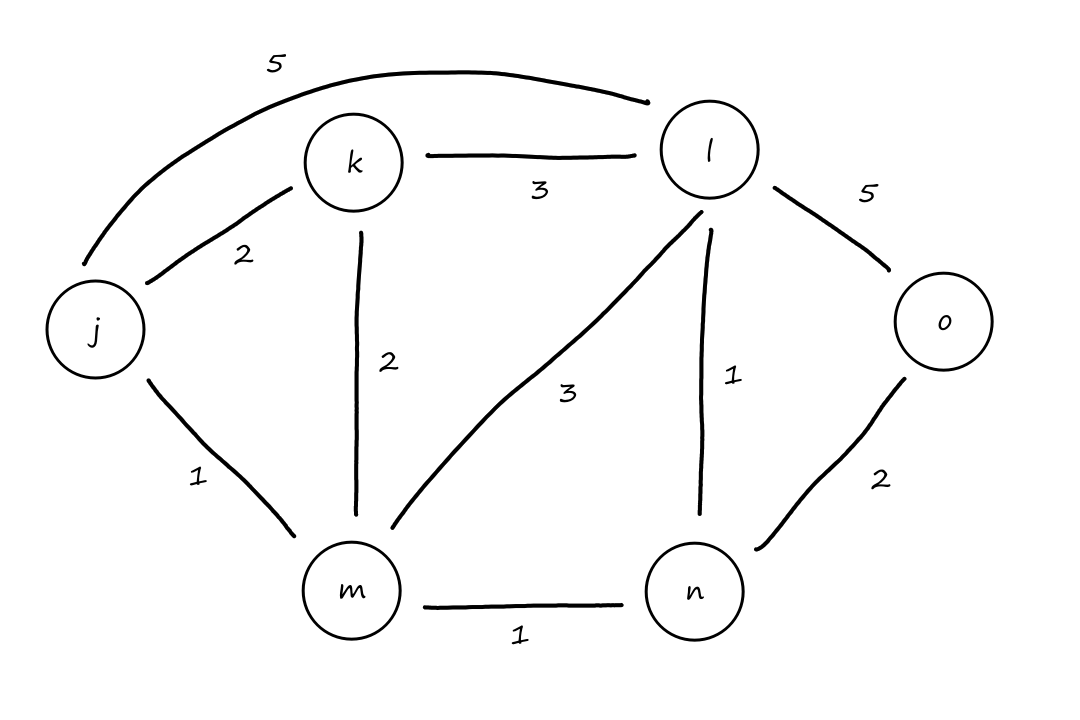

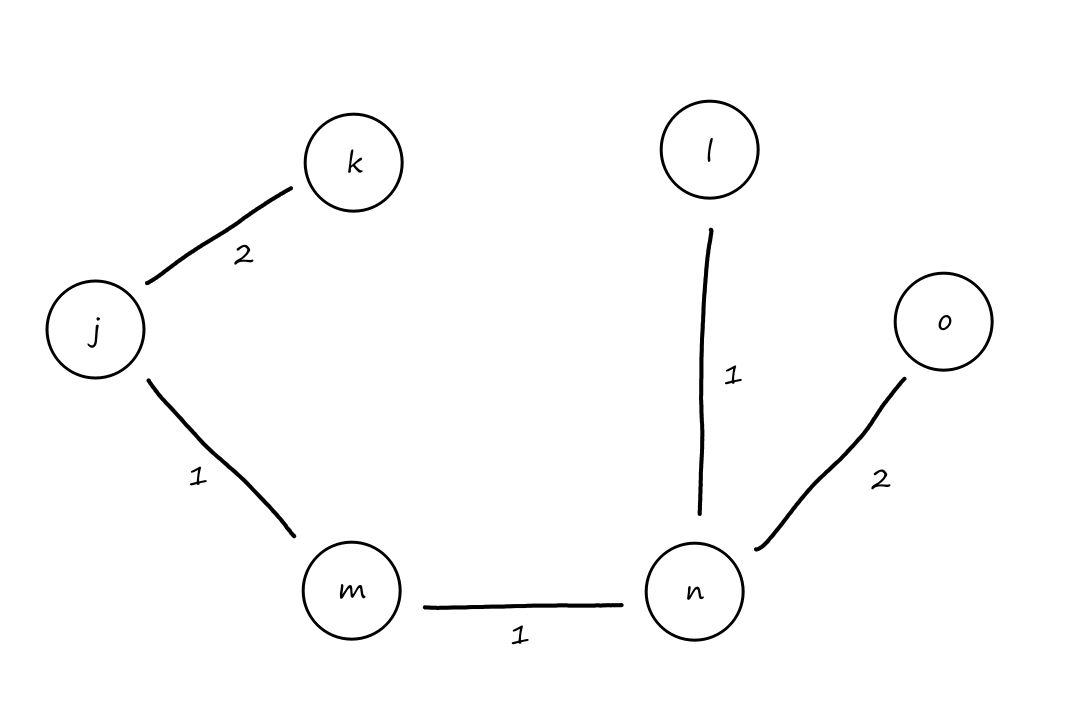

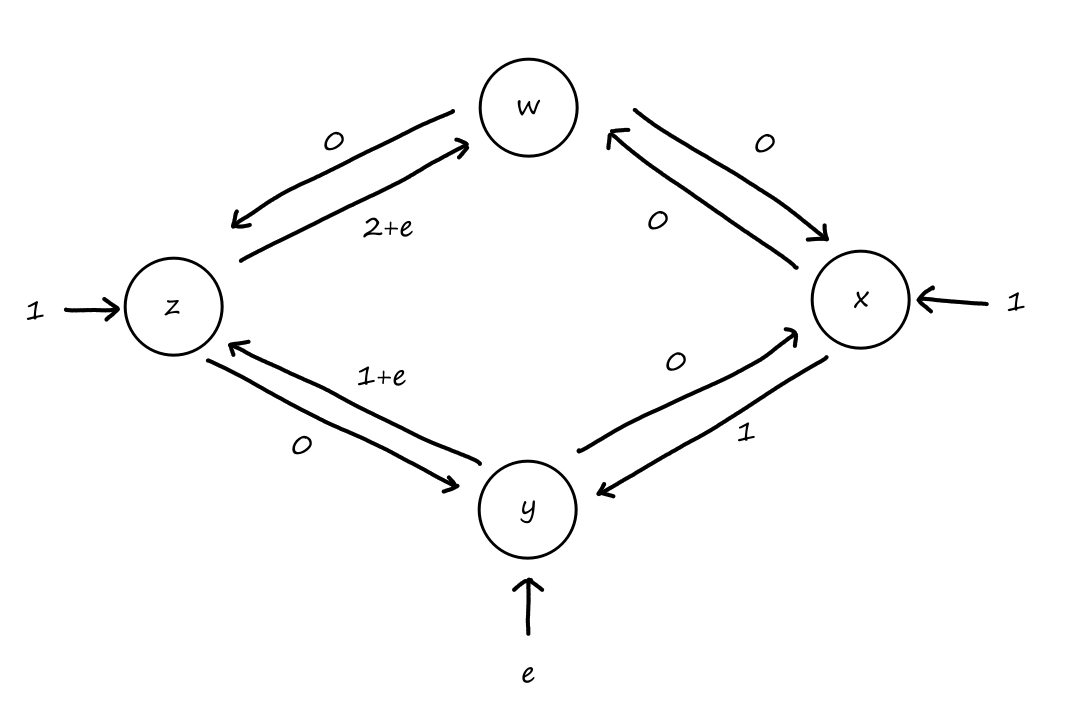

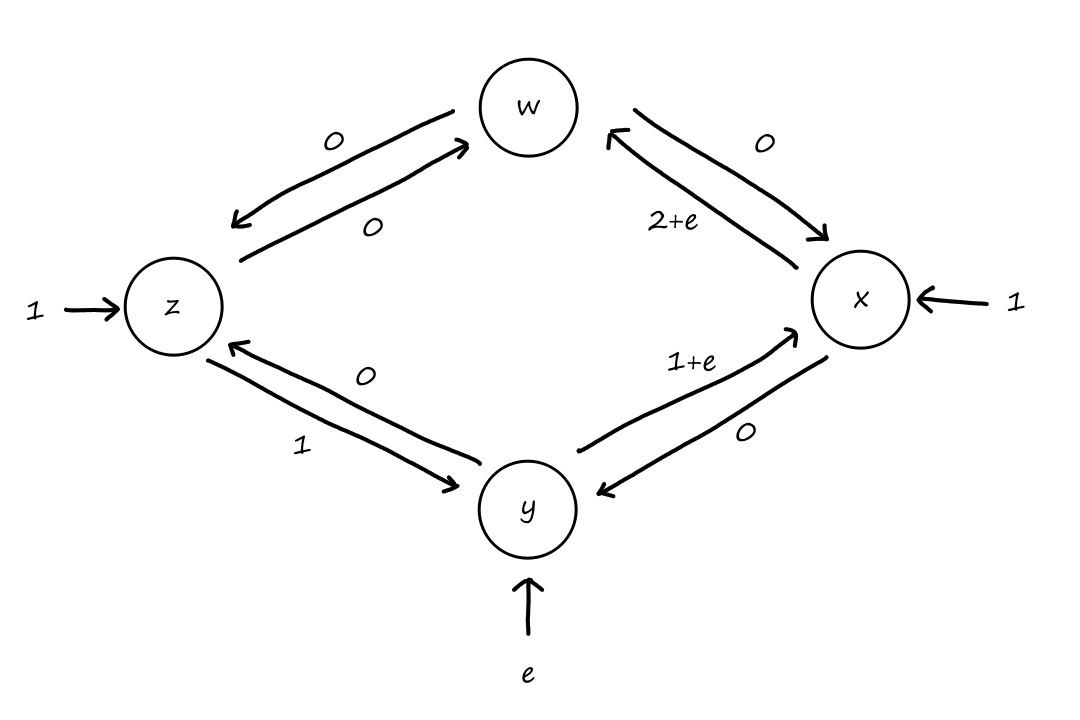

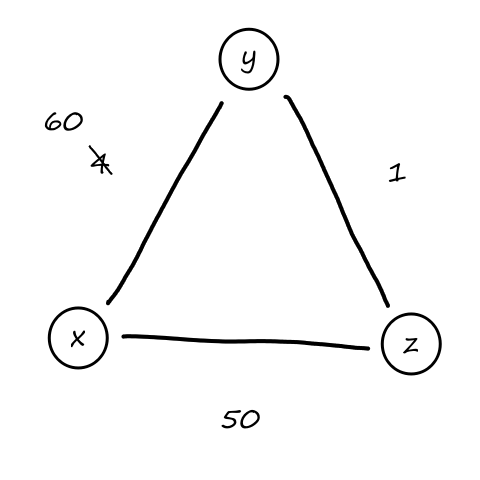

Suppose there is a circuit-switched network with four circuit switches , , , and .

What is the maximum number of connections that can be ongoing in the network at any time?

There can be connections from to , connections from to , connections from to , and connections from to . So the maximum number of connections possible is .

Note that while it's possible to have a connection from to , for example, that wouldn't maximize the number of connections because two circuits would have to be reserved for one connection, as opposed to the answer above where only one circuit has to be reserved for one connection.

Suppose that every connection requires two consecutive hops, and calls are connected clockwise. For example, a connection can go from to , from to , from to , and from to . What is the maximum number of connections that can be ongoing in the network at any time?

: Since there are only circuits from to , the number of connections possible for is .

: Since all circuits are being used for , there are no circuits available for .

: Since there are only circuits from to , the number of connections possible for is .

: Since all circuits are being used for , there are no circuits available for .

So the maximum number of connections possible is .

Suppose that connections are needed from to , and connections are needed from to . Can we route these calls through the four links to accommodate all connections?

For , we can use the circuits for and . only supports connections, so the remaining connections will need to use of the circuits in .

For , we can't use the circuits for because they are already being used for . So the only circuits available are for . There are only circuits available for since of them are being used for . So there are only circuits available for , which is not enough for the connections that are needed for .

So the answer is no.

It doesn't matter how we choose to divide up the connections. Notice that connections are needed from to , but any path from to , whether it's or , will have all the circuits used up on that path. So the connections from to can only travel in the other direction, and neither direction can support connections on its own.

(Also, the answer can be obtained by looking at the answer to the second question, but that's boring.)

Switching Teams

Packet switching is not good for things that require a continuous connection, like video calls. However, packet switching is, in general, more efficient than circuit switching.

Suppose several users share a Mbps link. But each user is not using the connection all of the time, i.e., there are periods of activity and inactivity. Let's say that a user is actively using the connection 10% of the time, and generates data at kbps when they are active. With circuit switching, this means the link can support users at once. The circuit must be reserved for all users as long as they are connected, even if they're not using it all the time.

With packet switching, resources are not reserved, so any number of users can use the link. If there are users, the probability that users are actively using the connection at the same time is ~, which means there is a ~ chance that there are simultaneous users at any time. As long as there are not more than users at a time, there will be no queuing delay (if there are more than users, there will be more data than the Mbps link can handle). So packet switching performs just as well as circuit switching while allowing for more users at the same time.

Sticking with the same Mbps link, suppose there are users. One of the users suddenly generates bits of data, but the other users are inactive. Suppose the link has slots per frame. If all slots are utilized, then the link can send bits per second ( Mbps). However, under the rules of circuit switching, only one of the slots per frame will be used for that user. As a result, the link will only transfer bits per second for that user's connection. So it will take seconds to transfer all the data.

With packet switching, since no other users are active, the one user gets to send all bits of data in second since there will be no queuing delays.

Suppose users share a Mbps link. Also suppose each user transmits continuously at Mbps when transmitting, but each user transmits only of the time.

When circuit switching is used, how many users can be supported?

Only users can be supported since each user transmits at Mbps.

Suppose packet switching is used from now on.

Why will there be no queuing delay before the link if users transmit at the same time?

If only user is transmitting, then there will be traffic at a rate of Mbps, which the link can handle. If users are transmitting, then there will be traffic at a rate of Mbps, which the link can also handle.

Why will there be a queuing delay if users transmit at the same time?

If users are transmitting, then there will be traffic at a rate of Mbps. But since the link's transmission rate is only Mbps, packets will be coming in faster than they can be transmitted.

What is the probability that a given user is transmitting?

Suppose there are users. What is the probability that at any given time, all users are transmitting at the same time?

The probability of user transmitting is . So the probability of users transmitting is .

A Network of Networks

We get our Internet access from an ISP. Of course, all of us don't get Internet access from the same ISP. There are different ISPs out there providing Internet access to different networks. But then how do our end systems communicate with other end systems? How do we communicate with servers and visit websites from different parts of the world for example? The answer is that the ISPs themselves are connected with each other. But what exactly does that look like?

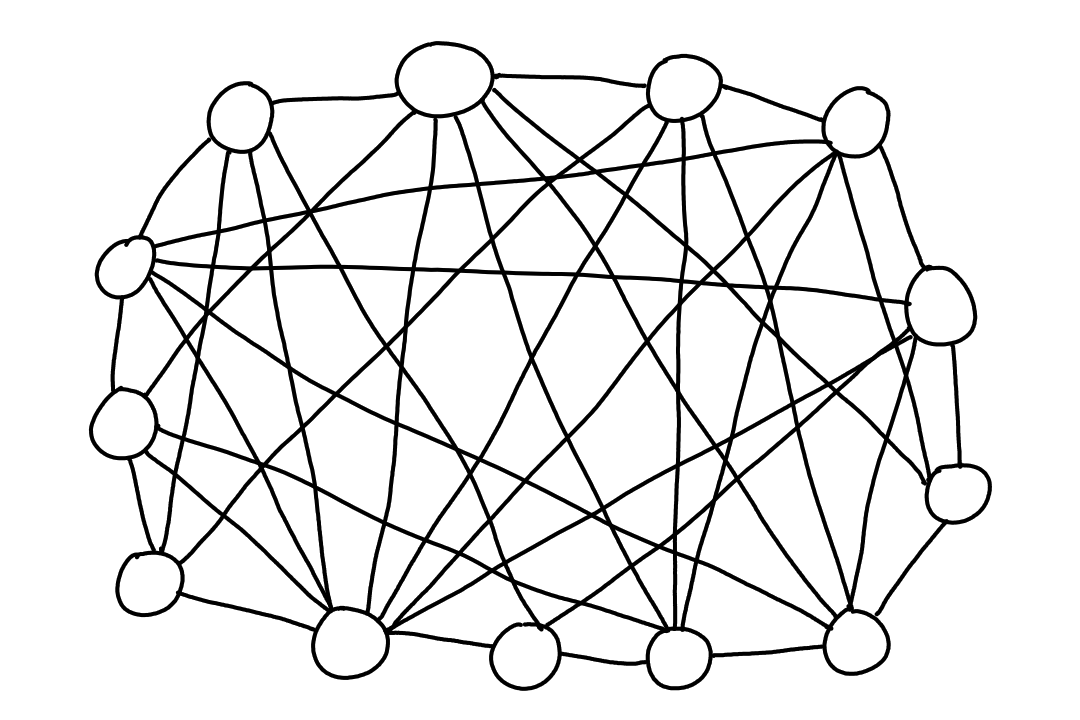

One idea is that we connect every ISP with each other directly.

The problem with this is that it is too costly, because there would be way too many links (too many for me to draw). In computer science terms, there would be connections.

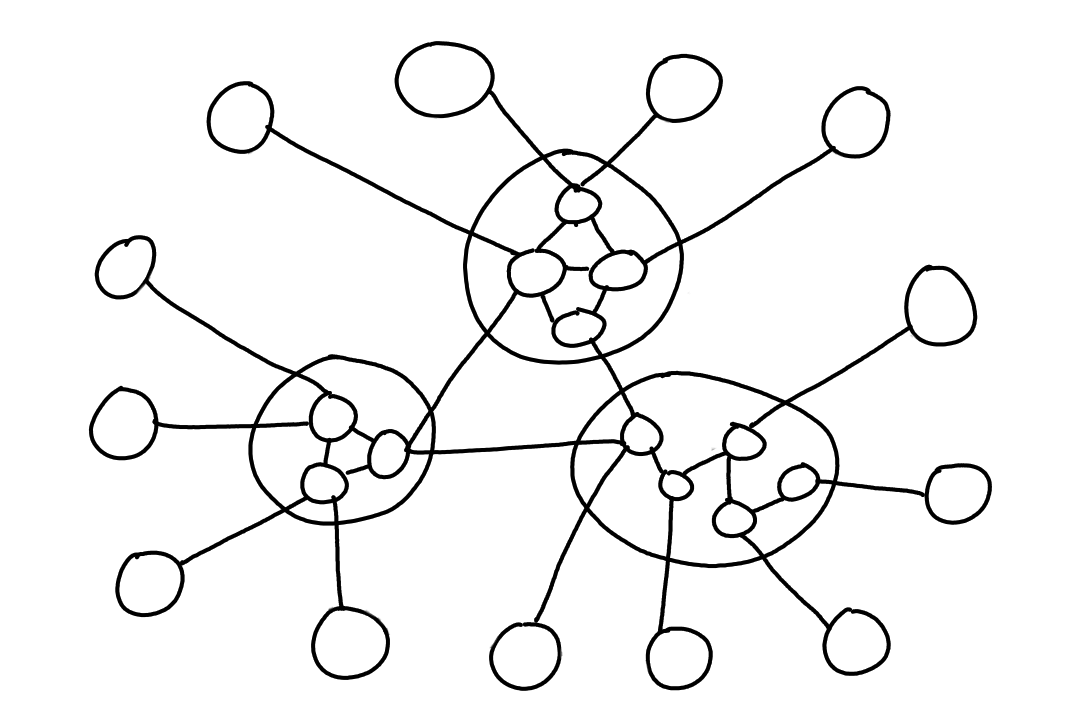

To minimize the number of connections, another idea is that there is a global ISP and all the other ISPs connect to that global one.

While this is also costly, the global ISP would offset the cost by charging the other ISPs (we'll call them access ISPs from this point on) money to connect to the global ISP.

And if one global ISP becomes profitable, naturally there will be other global ISPs wishing to be profitable too.

This structure is good for the access ISPs because they can choose which global ISP they want to connect to by comparing prices and service quality. So what we have so far is a two-tier hierarchy where global ISPs are at the top and access ISPs are at the bottom.

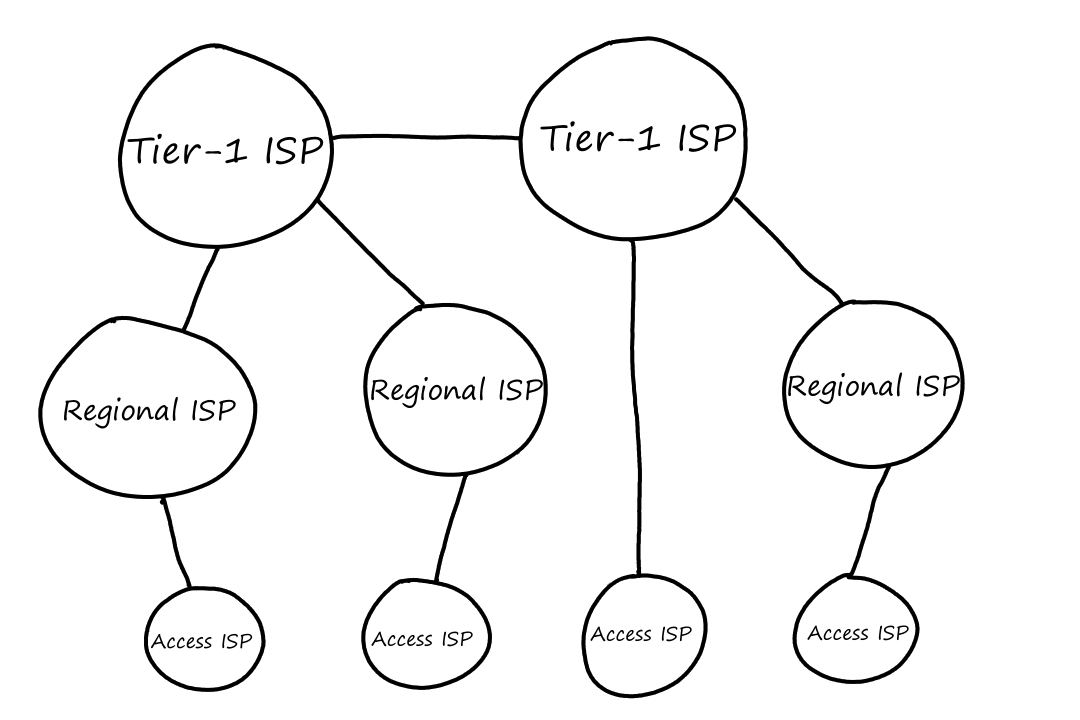

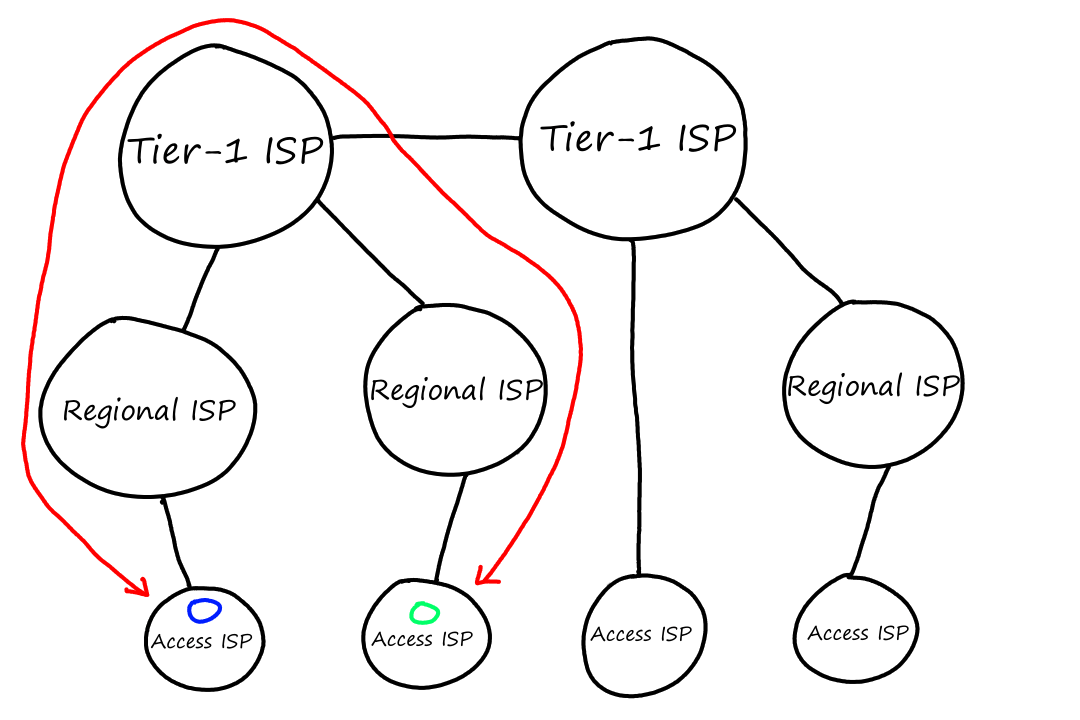

The reality is that these global ISPs can't exist in every city in the world. What happens instead is that the access ISPs connect to a regional ISP, which then connects to a global ISP. (We'll now be calling global ISPs by the more correct term tier-1 ISP.) Some examples of tier-1 ISPs are AT&T, Verizon, and T-Mobile.

Sometimes, an access ISP can connect directly to a tier-1 ISP. In that case, the access ISP would pay the tier-1 ISP.

In some regions, there can be a larger regional ISP consisting of smaller regional ISPs.

Access ISPs and regional ISPs can choose to multi-home. That is, they can connect to more than one provider ISP at the same time, getting Internet access from multiple ISPs. While they have to pay each ISP they're connected to, the multi-homed ISPs can achieve better reliability in case one of the provider ISPs has a failure.

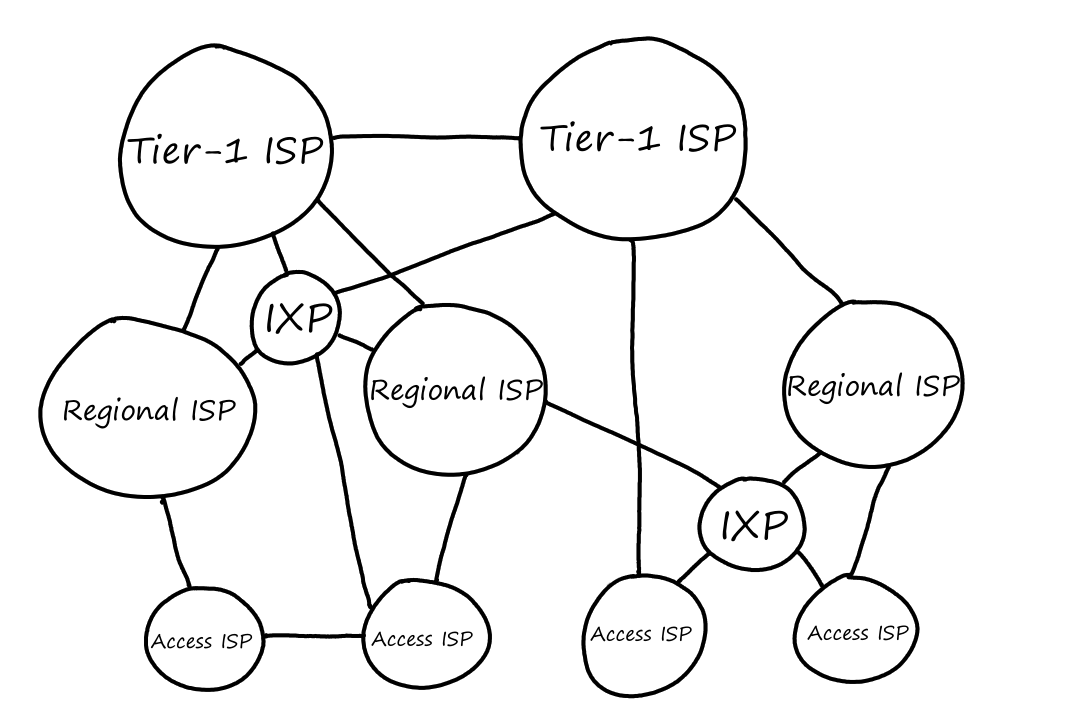

This multi-tier hierarchy is closer to the structure of today's Internet, but there are still a few pieces missing.

Lower-tier ISPs pay higher-tier ISPs based on how much traffic goes through their connection. To avoid sending traffic to the higher-tier ISPs, the lower-tier ISPs can peer. This means that they connect with each other so that the traffic goes between them instead of up to the higher-tier ISP.

For example, suppose that a computer connected to the green ISP wants to communicate with a computer connected to the blue ISP. Without peering, the traffic would have to go all the way up to the tier-1 ISP and back down from there.

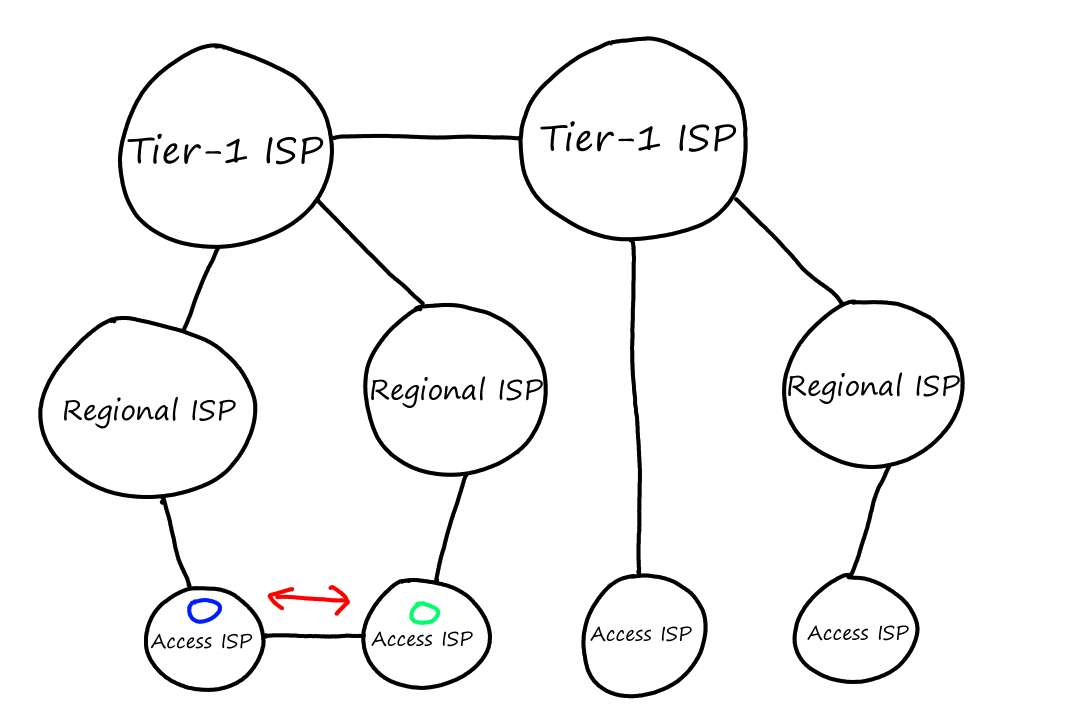

But with peering, they can avoid the cost of going through the regional and tier-1 ISPs by connecting with each other directly. Usually when ISPs peer with each other, they agree to not pay each other for the traffic that comes from peering.

To facilitate this peering, third-party companies can create an Internet Exchange Point (IXP) which ISPs connect to in order to peer with other ISPs.

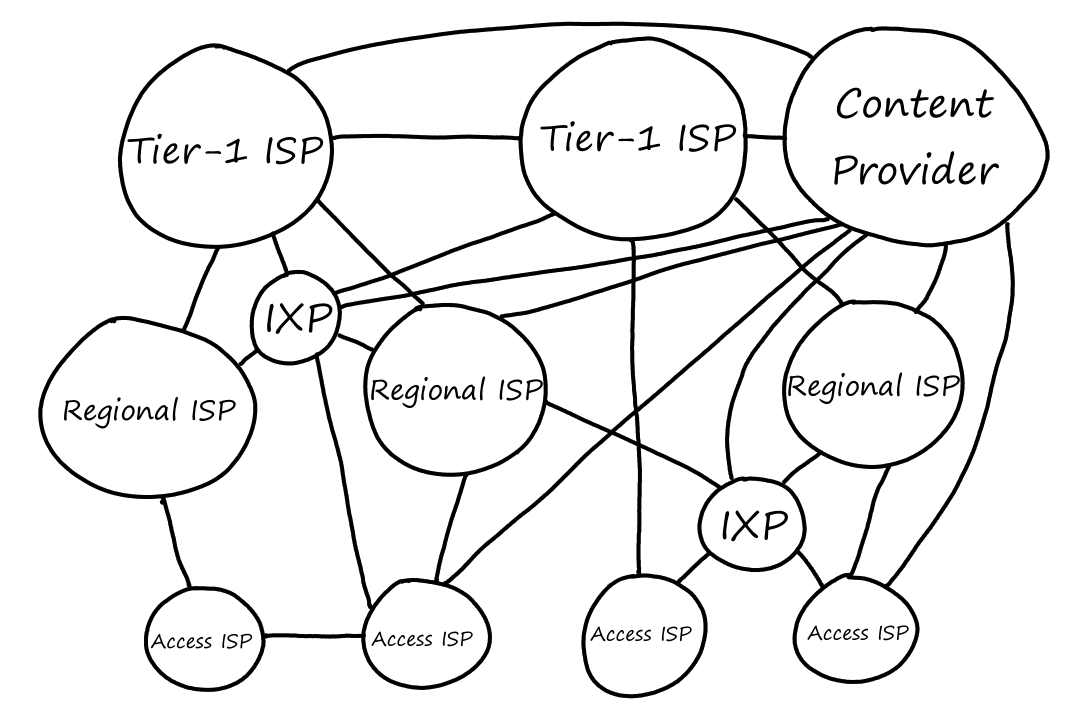

There's one more piece: content-provider networks. Content providers are companies such as Google, Amazon, and Microsoft.

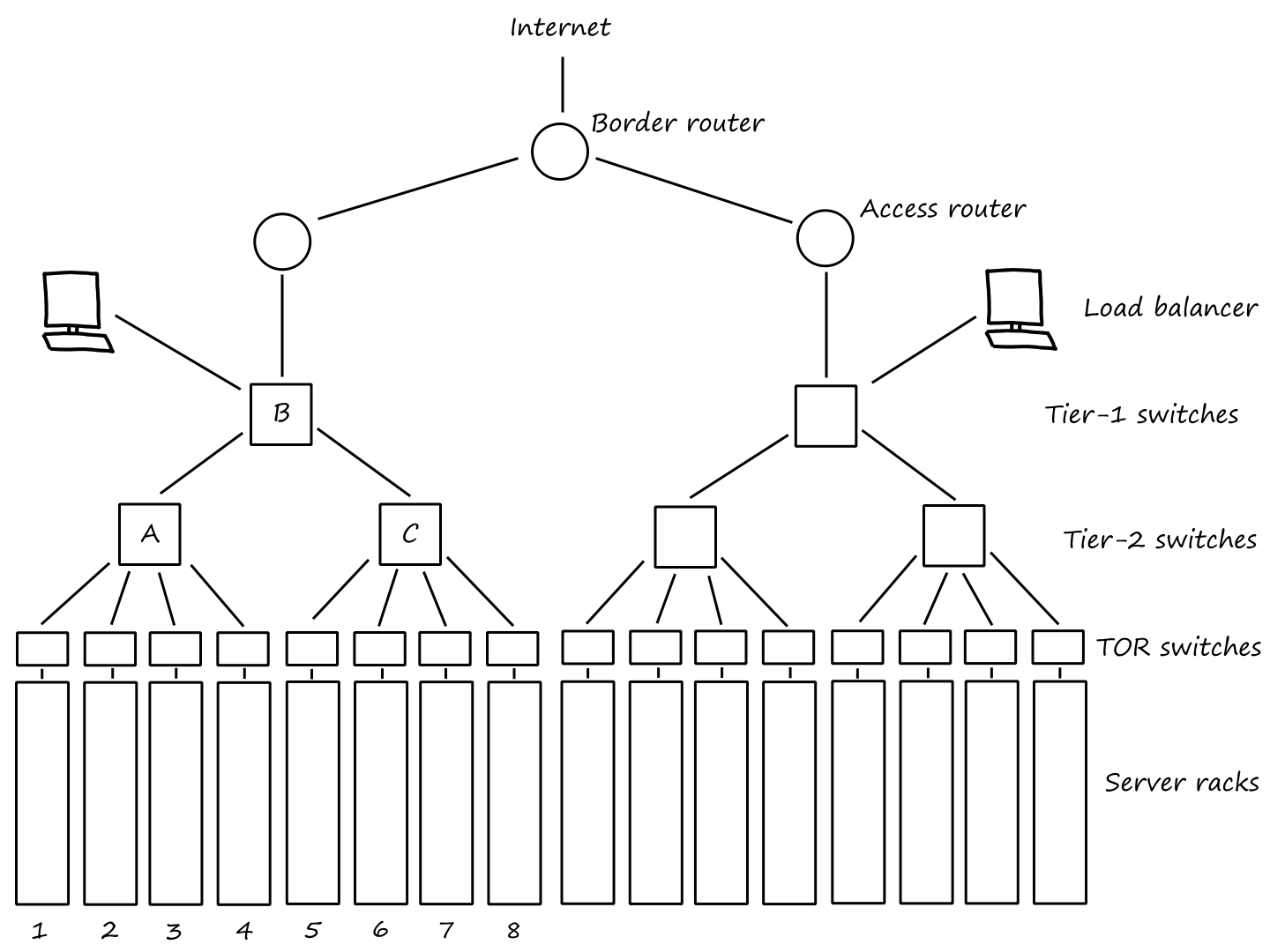

They run their own private networks so that they can bring their services and content closer to users. They do this by having data centers distributed everywhere across the world. Each data center has tons of servers that are all connected to each other.

And this is why the Internet is a network of networks. (Recall that ISPs themselves are networks of packet switches and links. There's also the edge network from the previous section, and each network in the edge network is a network of routers and links.)

Delay, Loss, and Throughput

In a perfect world, data would travel through the Internet instantaneously without any limitations. But, we know things aren't perfect.

What's the Holdup?

As packets travel along their route, they experience nodal processing delay, queuing delay, transmission delay, and propagation delay. All these delays combined is called total nodal delay.

Processing Delay

When a packet arrives at a router, the router looks at the packet's header to see which link it should go on. It also checks for any bit-level errors in the packet. The time it takes to do this (microseconds), is the processing delay.

Queuing Delay

Once the outbound link has been determined, the packet goes to the queue for that link. The time it waits in the queue (microseconds to milliseconds), is the queuing delay.

Transmission Delay

Once the packet reaches the front of the queue, it is ready to be transferred across the link. Recall that packets travel through links as bits. The time it takes to push all of the bits into the link (microseconds to milliseconds) is the transmission delay. If a packet has bits and the link can transfer bits per second, then the transmission delay is .

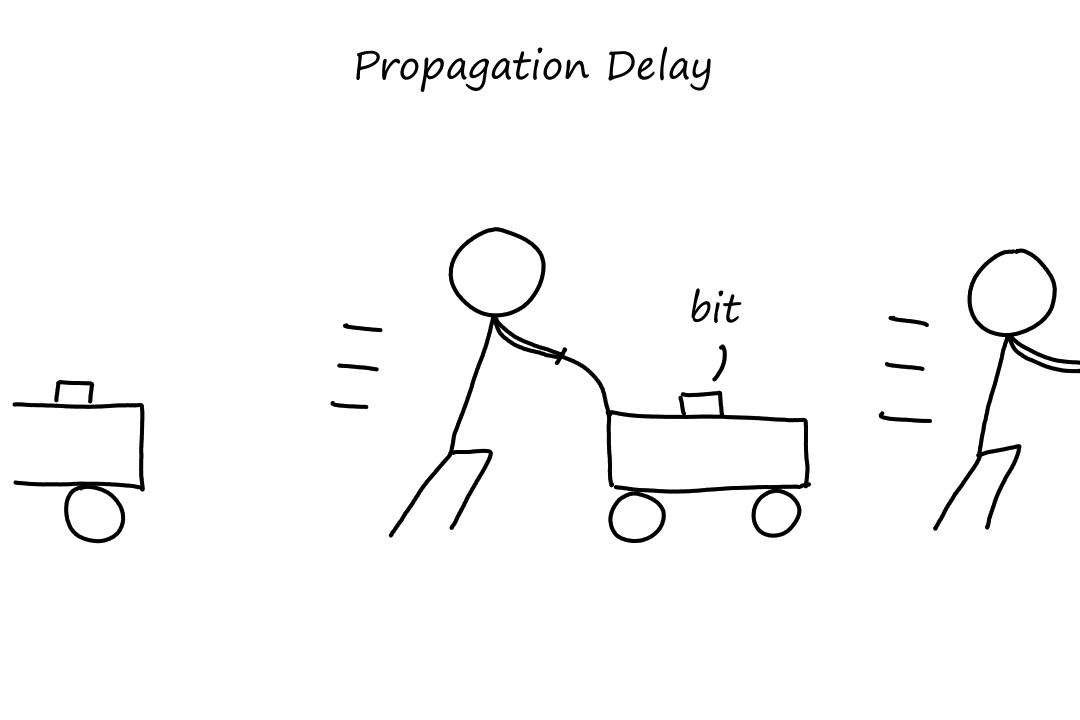

Propagation Delay

Now that the bits are on the link, they must get to the other end. The time it takes to get from the beginning of the link to the end of the link (milliseconds) is the propagation delay. This is determined by two things: how fast the bits are able to move and how far they have to go. How fast the bits can move depends on the physical characteristics of the link (e.g., whether it's fiber optics or twisted-pair copper wire) and is somewhere near the speed of light.

If is the distance the bit has to travel, i.e., the length of the link, and is the propagation speed of the link, then is the propagation delay.

Transmission delay and propagation delay sound very similar to each other. So here are some pictures illustrating the difference.

Yeah, somehow the bits go from being on a conveyer belt to being pushed in needlessly large carts. There's no delay from belt to cart though. That part happens magically.

Consider a packet of length which begins at end system and travels over three links to a destination end system. These three links are connected by two packet switches. For , let , be the length of each link, be the propagation speed of each link, and be the transmission rate of each link. The packet switch delays each packet by . Assume there are no queuing delays.

In terms of , , , and , what is the total end-to-end delay for the packet?

The total end-to-end delay is the sum of all the delays: processing delay, queuing delay, transmission delay, and propagation delay.

The processing delay is given to us as . There are two packet switches, so there will be two processing delays.

We are also given that there are no queuing delays.

The transmission delay is .

The propagation delay is .

So the total end-to-end delay is .

Suppose now the packet is bytes, the propagation speed on all three links is m/s, the transmission rates of all three links are Mbps, the packet switch processing delay is ms, the length of the first link is km, the length of the second link is km, and the length of the third link is km. What is the end-to-end delay?

From the first question, we know that the end-to-end delay is , so now we'll just calculate and plug in those values.

We're given that ms.

To calculate transmission delay, we'll first convert everything to bits. There are bits in a byte, so the packet length is bits. For the transmission rate, Mbps = bits per second. Since all three links have the same transmission rate, . So seconds ms.

For the propagation delay, we'll first convert everything to meters. The lengths of the links are m, m, and m. Since all three links have the same propagation speed, . So seconds ms, seconds ms, and seconds ms.

So the total-end-to-end delay is ms.

Consider a highway that has a tollbooth every km. It takes seconds for a tollbooth to service a car. Suppose a caravan of cars are on the highway with each car traveling km/hour. When going from tollbooth to tollbooth, the first car waits at the tollbooth until the the other nine cars arrive. To analogize, the tollbooth is a router, the highway is a link, the cars are bits, and the caravan is a packet.

How long does it take the caravan to travel from one tollbooth to the next?

Since the tollbooth takes seconds to service a car and there are cars, it takes a total of seconds (or minutes) to service all the cars.

Since the distance between every tollbooth is km and the cars are moving at km/hour, it takes hour (or minutes) to reach the next tollbooth.

Since the cars in the caravan travel together, everything depends on the last car. So we'll look at this from the last car's point of view.

The last car has to wait minutes before it can be serviced by the tollbooth. Once this is done, it takes minutes for it to reach the next tollbooth. So it takes the last car minutes to reach the next tollbooth.

Here's some animation to visualize:

At seconds all cars have exited the first tollbooth and are on the highway to the next tollbooth.

The first car will take minutes to enter the next tollbooth (get off the animation line), but since it waited seconds for the previous tollbooth servicing, it will enter the next tollbooth at .

To analogize some more, the time it takes the tollbooth to service a car is the transmission delay. The time it takes the car to move to the next tollbooth is the propagation delay.

Now suppose that the cars travel at km/hour and the tollbooth takes minute to service a car. Will cars arrive at the second tollbooth before all the cars are serviced at the first tollbooth? That is, will there still be cars at the first tollbooth when the first car arrives at the second tollbooth?

Now it takes minutes to service all cars.

The first car will wait minute for the tollbooth to service it. Then it will take minutes () to reach the next tollbooth. So it will take a total of minutes for the first car to go from one tollbooth to the next.

But in minutes, the first tollbooth will have only serviced cars. So there will be cars still at the first tollbooth when the first car arrives at the second tollbooth.

Queuing Delay and Packet Loss

Queuing delay is the most complicated delay since it varies from packet to packet (as implied, processing delay, transmission delay, and propagation delay are the same for every packet). For example, if packets arrive at an empty queue, the first packet will have no queuing delay, but the last packet will have to wait for the first packets to leave the queue.

The factors that determine queuing delay are the rate at which traffic arrives, the transmission rate of the link, and the type of traffic that arrives (e.g., periodically or in bursts).

Let be the average rate (in packets per second) at which packets arrive. If a packet has bits, then is the average rate (in bits per second) at which bits arrive. is the transmission rate of the link. So is the ratio of bits coming in vs bits going out; this ratio is called traffic intensity.

means that bits are arriving at the queue faster than they are leaving. In this case, the queuing delay will approach infinity.

If , then the type of traffic that arrives has an effect on the queuing delay. For example, if one packet arrives every seconds, then the packet will always arrive at an empty queue, so there will be no queuing delay.

However, if packets arrive in bursts every seconds, then the first packet will experience no queuing delay. But the second packet will experience a queuing delay of seconds. The third packet will experience a queuing delay of seconds. Generally, each packet will have a queuing delay of seconds.

Regardless of the type of traffic, as traffic intensity gets closer to , the queuing delay gets closer to approaching infinity.

Average queuing delay grows exponentially with traffic intensity, i.e., a small increase in traffic intensity results in a large increase in average queuing delay.

Packet Loss

If packets are coming in faster than they are leaving the queue, then the queue will eventually be full and not be able to store any more packets. In this case, some packets will be dropped or lost. As traffic intensity increases, so does the size of the queue, which means the number of packets lost also increases.

End-to-End Delay

End-to-end delay is the total delay that a packet experiences from source to destination.

Suppose there are routers between the source and destination. Then there are links. Also suppose that the network is uncongested so there are practically no queuing delays.

Then the end-to-end delay is .

Traceroute

Traceroute is a program that allows us to see how packets move and how long they take. We specify the destination we want to send some packets to and the program will print out a list of all the routers the packets went through and how long it took to reach that router.

Suppose there are routers between the source and destination. Traceroute will send special packets into the network, one for each router and one for the destination. When the nth router receives the nth packet, it sends a message back to the source. When it receives a message, the source records the time it took to receive the message and where the message came from. Traceroute performs these steps 3 times.

Throughput

Throughput is the rate at which bits are transferred between the source and destination. The instantaneous throughput is the rate at any point during the transfer. The average throughput is the rate over a period of time.

If a file has bits and the transfer takes seconds, then the average throughput of the file transfer is bits per second.

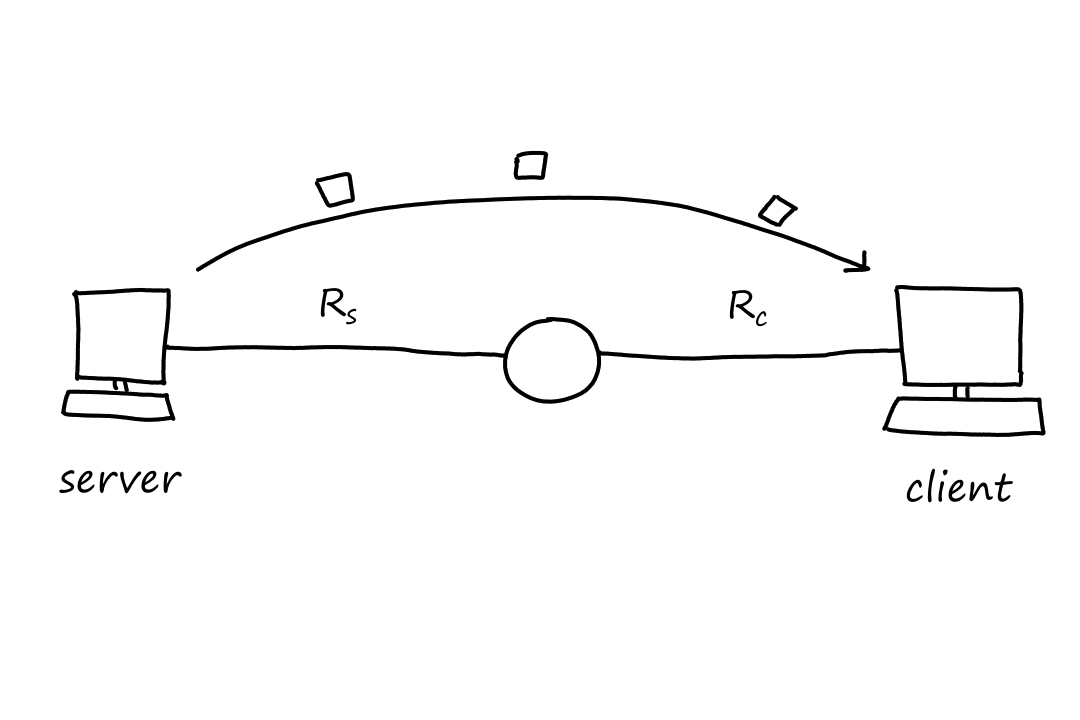

Suppose we have two end systems connected by two links and one router. Let , be the rates of the two links.

Suppose bits, Mbps, and Mbps.

- After second, bits will move from the server to the router.

- After seconds, bits will move from the server to the router and bits will move from the router to the client.

- After seconds, bits will move from the server to the router and bits will move from the router to the client.

- After seconds, bits will move from the server to the router and bits will move from the router to the client.

The file transfer is complete when the client receives bits, which means bits will have to move from the router to the client times. Since it takes second to do so, it will take seconds for the file transfer to complete.

Notice how it didn't matter how fast the bits moved from the server to the router. The time the file took to reach the client only depended on the rate from the router to the client. This doesn't mean the time it takes to reach the client is always equal to the rate from router to client though. Let's flip the numbers around to prove this point.

Suppose bits, Mbps, and Mbps.

- After second, bits will move from the server to the router.

- After seconds, bits will move from the server to the router and bits will move from the router to the client.

- After seconds, bits will move from the server to the router and bits will move from the router to the client.

- After seconds, bits will move from the server to the router and bits will move from the router to the client.

Wait, but isn't the rate from router to client bits per second? Why aren't bits moving from router to client every second? That's because there are only bits entering the router every second.

From this, we can see that the throughput of a file transfer depends on the link that is transferring bits at the lowest rate. This link is called the bottleneck link.

Mathematically, the throughput is .

The time it takes to transfer the file is .

Generally, the throughput for a network with links is .

The bottleneck link is not always the link with the lowest transmission rate. We'll look at another example to show this.

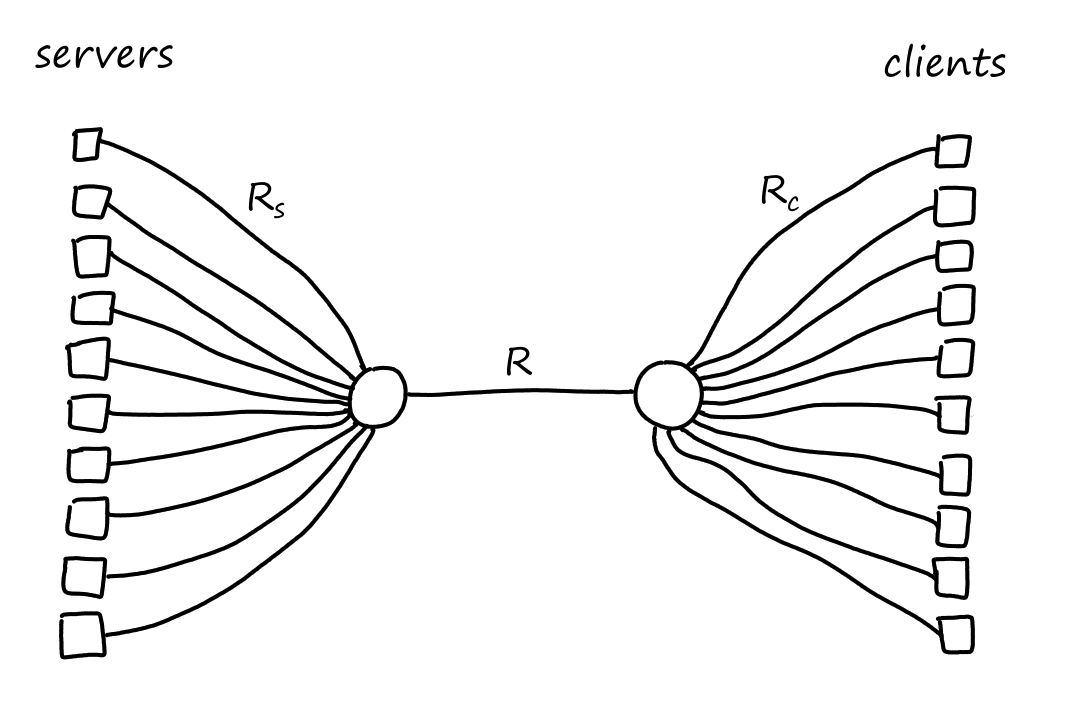

Suppose there are clients downloading data from servers (each client is connected to a unique server). Suppose they all share a common link with transmission rate somewhere in the network core. We'll denote the transmission rates of the server links as and the transmission rates of the client links as .

(I think sharing a common link means all the server links eventually connect to one router and all the client links eventually connect to a different router. And the link between these two routers are the same.)

Let's assign actual values to these transmission rates. So let Mbps, Mbps, and Mbps. Since the common link is being used for simultaneous downloads, the link divides its transmission rate equally among the connections. So the common link is transferring bits at a rate of kbps per connection. Which means the throughput for each download is kbps. Even though the common link had the highest transmission rate, it ended up being the bottleneck link.

Links in the network core are almost never the bottleneck link because they are usually over-provisioned with high speed links. Typically, the bottleneck links are in the access network.

Protocol Layers

There are many pieces to the Internet, such as routers, links, applications ("apps"), protocols, and end systems. These pieces are organized into layers.

Layered Architecture: The Internet Is Like an Onion

The airline system can be seen as an organization of layers.

| Layer | Action (Leaving) | Action (Arriving) |

|---|---|---|

| Ticketing | Purchase | Complain |

| Baggage | Check | Claim |

| Gates | Enter | Exit |

| Runway | Takeoff | Land |

| Airplane | Routing | Routing |

Every time we board and leave an airplane, we perform the same steps in a certain order. And the action we perform depends on the layer we're at since each layer is responsible for one thing and one thing only. (I.e., each layer provides a different service.) Also, each layer depends on the layer before it. For example, we can only check in our baggage if we have a ticket, or we can only enter the gate once we have checked in our baggage.

This layering makes it easier to distinguish the different components of an airline system. It also makes it easier to change the implementation of a layer's service without affecting the rest of the layers. For example, the current implementation of the gate layer is to board people by ticket priority and check-in time. But the implementation can be changed to board people by height. This change doesn't affect any of the other layers, and the function of this layer remains the same: getting people on the airplane.

Protocol Layering

The Internet is made up of 5 layers.

| Layer |

|---|

| 5. Application |

| 4. Transport |

| 3. Network |

| 2. Link |

| 1. Physical |

Recall that a protocol is a set of rules that define how things should work. Each protocol belongs to one of the layers.

Application Layer

The application layer includes all the network applications (basically apps or programs that connect to the Internet).

| Protocol | Details |

|---|---|

| HTTP | sending and receiving Web documents |

| SMTP | sending emails |

| FTP | transferring files |

Applications communicate with each other by sending messages to each other.

Transport Layer

The transport layer is responsible for delivering the application's message to the network core.

| Protocol | Details |

|---|---|

| TCP |

connection oriented guaranteed delivery |

| UDP |

connectionless no reliability, no flow control, no congestion control |

In the transport layer, the packets of information are called segments.

Network Layer

The network layer is responsible for moving the transport layer's segments from router to router.

| Protocol | Details |

|---|---|

| IP |

defines how packets must be formatted defines the actions that must be taken when sending or receiving packets |

In the network layer, the packets are called datagrams.

Link Layer

The link layer is responsible for moving the network layer's datagrams across links.

| Protocol |

|---|

| Ethernet |

| WiFi |

| DOCSIS |

In the link layer, the packets are called frames.

Physical Layer

The physical layer is responsible for moving the individual bits of the link layer's frames across links.

OSI Model

In the late 1970s (before the Internet became public), there was another model that was proposed by the International Organization for Standardization (ISO) on how to organize computer networks. This model was called the Open Systems Interconnection (OSI) model and it had 7 layers:

| Layer |

|---|

| 7. Application |

| 6. Presentation |

| 5. Session |

| 4. Transport |

| 3. Network |

| 2. Link |

| 1. Physical |

The layers that also appear in the Internet model work roughly the same way. So we'll just go over the ones that are new.

The presentation layer makes sure the data is readable and usable. It provides data compression, data encryption, and data description. I guess it's sorta like a translator.

The session layer makes sure the data goes where it needs to go. It provides data synchronization, checkpointing, and data recovery.

Encapsulation

Whenever we moved across the different layers, we called the packets of information by a different name. That is because the packets change as they move from layer to layer.

- Application layer sends message

- Transport layer adds transport-layer header information to the message, forming a segment

- Network layer adds network-layer header information to the segment, forming a datagram

- Link layer adds link-layer header information to the datagram, forming a frame

The header information is needed so that the thing (e.g., router, link, end system) receiving the packet knows what it is and what to do with it. Here are some animations to illustrate:

End systems implement all 5 layers while routers usually implement only the bottom 3 layers (network, link, physical). (And some packet switches may only implement the bottom 2 layers, link and physical.)

Notice that when the packet left the end system, the last header added was the link-layer header, which is the first thing that gets processed when entering the router. When the packet reaches the link layer, the link-layer headers are extracted away. So now the top-most header is the network-layer header, which is what the network layer then processes.

After going through the network layer, the router determines that the packet has to go on another link again. So now the packet has to travel down the layers. When it goes from the network layer down to the link layer, a new link-layer header is added, so that the next thing (router or end system) can process it.

Now that the packet has reached the end system, it goes up the layers one last time. Again, at each layer the headers are removed until only the message remains, which the application understands.

From this point on, we'll go into more detail for each layer, starting with the application layer.

Principles of Network Applications

A network application is a set of apps or programs that connect to and communicate with the Internet. These apps are made by our local software developers using languages such as C, Java, or Python.

Typically, network applications involve multiple programs running on different end systems. For example, we can download Spotify on our phone, tablet, computer, or watch. And Spotify will have a different program running on their servers to provide functionality to the app.

Network Application Architectures

There are 2 types of network application architectures: client-server and peer-to-peer (P2P).

In the client-server architecture, there is a server that is always on and running. Whenever clients want data, they make a request to the server, which then provides the clients with what they requested. In addition to always being on, the server has a fixed IP address, so clients can make requests to the server at any time.

Big companies, like Google, need more than one server to handle all the requests, otherwise that one server would get overwhelmed. So they set up data centers, which house multiple servers. And then they go and set up multiple data centers.

In peer-to-peer architecture, there are no servers (and thus no clients). Instead, devices communicate directly with other devices — called peers — to transfer data.

Let's say you wanted to download a large file (perhaps an online textbook 🤫). You could go online and download it (this would be client-server), but let's say the file is so large, that it takes too long. Suppose that I happen to have the complete file already downloaded. Using the right application, I could transfer my file directly to your computer. Suppose that someone else also has the file. Then you can receive the file from both of us. And the more people that have the file, the more people you can connect to to receive the file, making the download faster.

The effectiveness of peer-to-peer relies on the number of peers. The more peers there are, the more computing power there is. This is referred to as self-scalability.

Processes Communicating

We call them programs. Operating systems call them processes.

Client and Server Processes

A network application consists of pairs of processes that send messages to each other. A Web broswer (a process) communicates with a Web server (another process). Spotify, the app (a process), communicates with Spotify's servers (another set of processes). The process that is requesting data is the client process and the process that is providing the data is the server process. Despite the naming, the terms "client" and "server" processes apply for both client-server architecture and peer-to-peer architecture.

More formally (and perhaps more accurately), client processes are the ones that initiate communication and server processes are the ones that wait to be contacted.

The Interface Between the Process and the Computer Network

As we saw before, a process in the application layer sends a message to the transport layer. In order to do so, it opens a socket, which is the application layer's door to the transport layer. Sockets are also used to receive messages from the transport layer.

For us developers, sockets are also referred to as the Application Programming Interface (API) between the application and the network. We don't have control over how the socket is implemented; that's handled by the OS.

Addressing Processes

In order for the message to go to the correct place, the sending process needs to specify where the message needs to be delivered to. First, it needs to specify which end system is receiving the message. This can be done by providing the IP address of the end system. Just knowing which end system is not enough though because an end system is running multiple applications while it's on, so we also need to know which application needs the message. Whenever processes open a socket, they must choose a number to "label" the socket. This number is called a port number. So by specifying the IP address and the port number, the message can be delivered to the correct application on the correct end system.

Transport Services Available to Applications

On the other side of the socket is a transport-layer protocol that delivers the message to the socket of the receiving process. There are several transport-layer protocols an application can choose from. When deciding which one to use, there are several things to consider.

Reliable Data Transfer

As we saw earlier, packets can get lost. For some situations, like transferring financial documents, packet loss is undesirable. But even though packets can get lost, a protocol can still guarantee that the file will be delivered correctly. If a protocol can provide this guarantee, then that protocol provides reliable data transfer.

In other situations, like video calls, some packet loss is acceptable. ("I'm sorry, can you repeat that? You cut out for a few seconds.") Those applications are referred to as loss-tolerant applications.

Throughput

Throughput is the rate at which bits are transferred between the source and destination (Hmm, I feel like I've said these exact words before 🤔). A transport-layer protocol can guarantee a specified throughput of some amount of bits per second. This would be useful for voice calls, which need a certain throughput in order for the voices to be heard clearly. Applications that have throughput requirements are bandwidth-sensitive applications.

Applications that don't are elastic applications. File transfers don't need a certain throughput. If more bits can be transferred, then the download will be faster. If fewer bits can be transferred, then the download will be slower.

Timing

Whereas throughput is the number of bits going through, timing is the delay between each bit. One timing guarantee might be that bits go to the receiving process every 100 milliseconds. For real-time applications, such as video calls and online games, it is ideal to have low delay.

Security

Before transferring the data, a transport protocol can encrypt it.

Transport Services Provided by the Internet

There are 2 transport protocols an application can use: TCP and UDP.

TCP

TCP is a connection-oriented protocol. The client and server have to confirm a connection (TCP connection) with each other first before messages can be transferred. And once the application is done sending messages, it must close the connection.

TCP also provides reliable data transfer. This means data is sent without error and in the proper order — there are no missing or duplicate bytes. Part of this is achieved by implementing congestion control. If the network is congested, the sending process will be throttled.

UDP

UDP is like the opposite of TCP. It's connectionless. The data transfer is unreliable — there's no guarantee all the messages will arrive and if it does arrive, there's no guarantee that the messages will arrive in order. And there's no congestion control. All of these things sound bad, so why does UDP even exist in the first place?

Well, no congestion control means that UDP can send bits at any rate with no limits*. This is good for voice call applications because they want data to be sent as much as possible and as fast as possible.

*However, there will be actual limitations to this rate, including link transmission rate and network congestion.

For some reason, most firewalls are configured to block most types of UDP traffic. So applications that use UDP have to be prepared to use TCP as a backup.

| Application | Application-Layer Protocol | Transport-Layer Protocol |

|---|---|---|

| SMTP | TCP | |

| Remote terminal access | SSH | TCP |

| Web | HTTP | TCP |

| File transfer | FTP | TCP |

| Streaming | HTTP | TCP |

| Internet telephony | SIP, RTP, etc. | TCP or UDP |

TCP and UDP both don't guarantee:

- throughput

- timing

- security*

Despite this, time-sensitive applications still work. This is because they are designed with these lack of guarantees in mind.

*TCP can be enchanced with Transport Layer Security (TLS) to provide encryption. TLS is not a protocol; it's an add-on to TCP.

Application-Layer Protocols

Application-layer protocols define:

- the types of messages exchanged (e.g., request and response messages)

- the format of the messages

- what fields can go in a message

- the meaning of the information in the fields

- rules for determining when and how processes send and respond to messages

Some protocols are defined in RFCs and are therefore public. While other protocols, such as Skype's, are proprietary.

The Web and HTTP

The Internet became big in the 1990s with the arrival of the World Wide Web.

Some Dry Facts About HTTP

A Web page is a document with a bunch of objects, which can be images, videos, JavaScript files, CSS style sheets, etc. (More formally, an object is a file that is addressable by a single URL.) A Web page is usually an HTML file that includes references to these objects. Web browsers request and display Web objects while Web servers store Web objects.

HTTP stands for HyperText Transfer Protocol, and it is the Web's application-layer protocol. When browsers request a Web page, they send HTTP request messages to the server and the server responds with HTTP response messages that contain the objects.

HTTP uses TCP as its transport protocol. Port 80 is reserved for HTTP, so when browsers and servers initialize their sockets, they use port 80.

HTTP is a stateless protocol. This means that the server does not store any information about the exchanges the server has with clients. For example, the server will not know if a particular client has asked for a Web page before or if it's the client's first time asking for the Web page.

The original version of HTTP is called HTTP/1.0. Then there was HTTP/1.1 (1997), HTTP/2 (2015), and HTTP/3 (2022).

Non-Persistent and Persistent Connections

Each time a browser makes a request for a Web page, the browser actually has to make a request for the base HTML file and then each object in the file. In non-persistent connections, the browser opens a TCP connection for one object, then closes the connection after the object is sent. So a TCP connection has to be opened for each object. In persistent connections, the browser opens one TCP connection and requests/receives all the objects using that one connection. After one object is sent, the connection remains open for some period of time, allowing other objects to be sent using the same connection if needed. HTTP/1.1 and later use persistent connections by default.

HTTP with Non-Persistent Connections

- Browser starts a TCP connection to the server on port 80

- Browser sends an HTTP request message to the server

- Server receives the request, gets the base file, puts it in an HTTP response message, and sends the message

- Server closes the TCP connection

- Browser receives the response.

And these steps repeat for each object in the Web page. So if a Web page has objects, there will be connections made ( for the Web page and for each object). If this seems tedious, it's because it is. That's why browsers can be configured to open multiple parallel connections at the same time instead of waiting for one connection to be done before opening another.

Let's look at one request in action:

As we can see, it takes some time for the client to receive the file after making a request.

The round-trip time (RTT) is the time it takes for a packet to travel from client to server. It includes queuing delay, processing delay, and propagation delay.

Notice that the definition of RTT is the time it takes for a packet — not an object — to travel from client to server and then back to the client. That is why transmission delay is not included in the definition of RTT.

RTT is a measure of how long it takes for the server to start getting back to the client, not how long it takes to completely get back to the client.

So the total response time is RTTs plus the transmission time of the file. RTT is for establishing the TCP connection and RTT is for receiving the file.

Besides the delay for sending each object, there is also the burden of resource management. For each TCP connection, buffers and variables must be allocated; this can take up a lot of resources on the server if there are a bunch of connections to manage.

HTTP with Persistent Connections

Instead of closing the TCP connection after sending the requested object, the server keeps the connection open for a certain period of time so that multiple objects can be sent through the connection.

For both non-persistent and persistent connections, they can also be non-parallel or parallel. In non-parallel connections, the client waits until it receives an object before requesting another one. So each object requires RTT. In parallel connections, the client requests an object as soon as it needs it, even if a previous request for an object has not been completed yet. This means if there are objects, it could request for all objects at once by opening parallel connections. So it could take as little as RTT to get all the objects. (Note that the RTT is just for the objects in the base file. The browser still needs RTT to get the base file itself first.)

Persistent parallel is the default in HTTP/1.1 (and later) and the example below will show why.

Note that parallel connections does not mean multiple links between client and server. If there is only one link between client and server and multiple objects are requested in parallel, I believe what happens is that the link transmits the objects in an alternating fashion.

Suppose there is a -kilobit page that contains -kilobit images, all of which are stored on a server. Suppose the round-trip time from the server to a client's browser is milliseconds. For simplicity, we'll assume there is only one Mbps link between the client and server; the time it takes to transmit a GET request onto the link is zero. Note that based on the assumptions, the link has a millisecond one-way propagation delay as well as a transmission delay (for the page and images).

For non-persistent, non-parallel connections, how long is the response time? (The time from when the user requests the URL to the time when the page and its images are displayed?)

Let's start with the base file. It takes RTT to establish the TCP connection and another RTT to start getting the base file. So there are a total of RTTs for the base file. We are given that one RTT is milliseconds, so the RTT is milliseconds.

The transmission delay for the base file is seconds millisecond.

So the total time it takes to get the base file is milliseconds.

Now for the objects. Since it is non-persistent, the TCP connection for the base file will be closed and new ones ( each) will be needed for the objects. Since it is non-parallel, the previous TCP connection has to close before a new one opens.

For one object, it takes RTT to establish the TCP connection, and RTT to start getting the object. So RTTs for one object. For objects, this is a total of RTTs. In terms of milliseconds, this is milliseconds.

The transmission delay for one object is the same for the base file. Then for objects, the transmission delay is milliseconds.

So the total time it takes to get all of the objects is milliseconds.

Bringing the total time for the whole Web page to milliseconds seconds.

What about for non-persistent, parallel connections?

Again, we'll start with the base file. It takes RTT to establish the TCP connection and another RTT to start getting the base file. So RTTs. Same as before, milliseconds for the RTTs for the base file.

The transmission delay is also the same at millisecond.

So the total time it takes to get the base file is milliseconds.

For the objects, since the connections are parallel, all TCP connections will be opened in parallel in RTT and all objects will start to be retrieved at the same time in RTT. So RTTs for all objects. This means it takes milliseconds for the RTTs of all objects.

Even though the connections are parallel, the objects are not transmitted onto the link in parallel. As we saw before, it takes millisecond to transmit object. So for objects, milliseconds.

So the total time it takes to get all of the objects is milliseconds.

Bringing the total time for the whole Web page to milliseconds seconds.

What about for persistent, non-parallel connections?

We open the TCP connection and wait for the base file to transmit and propagate, which takes milliseconds.

For the objects, since the connection is persistent, we don't need to open any more TCP connections.

But since it is non-parallel, we need to get the objects one at a time. RTT for each object means RTTs for all objects, which means milliseconds for the RTTs.

And milliseconds to transmit.

So the total time it takes to get all of the objects is milliseconds.

Bringing the total time for the whole Web page to milliseconds seconds.

What about for persistent, parallel connections?

The base file takes milliseconds to transmit and propagate.

We don't need to open any more TCP connections, and all objects will start to be retrieved at once in RTT. So milliseconds.

milliseconds to transmit.

So the total time it takes to get all of the objects is milliseconds.

Bringing the total time for the whole Web page to milliseconds seconds.

HTTP/2

If we use only one TCP connection, we run into a problem called Head of Line (HOL) blocking. Let's say the base file has a large video clip near the top and many small objects below the video. Being near the top, the video will be requested first. Especially if the bottleneck link is bad, the video will take some time to transfer, causing the objects to have to wait for the video. The video at the head of the line blocks the objects behind it. Using parallel connections is one way to solve this problem, but multiple connections means the server has to open and maintain multiple sockets, which is not good if it can be avoided.

Standardized in 2015, HTTP/2 introduced a way to allow for using one TCP connection without experiencing HOL blocking.

HTTP/2 Framing

The solution is to break each object into small frames and interleave them. That way, each response will contain parts of each object.

Let's say the base file has large video clip and small objects. Suppose the video clip is broken up into frames and each of the small objects is broken up into frames. The first response can contain frames: frame from the video and the first frame of each of the small objects. The second response can also contain frames: frame from the video and the last frame of each of the small objects. In just frames, all of the small objects will have been sent. If the frames weren't interleaved, the small objects would be sent after frames.

(For some reason, interloven sounds right.)

Server Pushing

Another feature HTTP/2 introduced was server pushing, where the server can analyze the base file, see what objects there are, and send them to the client without needing to wait for the client to ask for them.

HTTP Message Format

There are two types of HTTP messages: request messages and response messages.

Request Messages: Give Me What I Want!

Here's an example of what a request message might look like:

GET /projects/page.html HTTP/1.1

Host: www.myschool.edu

Connection: close

User-agent: Mozilla/5.0

Accept-language: fr

The first line is the request line. There are three fields: the method field, the URL field, and the HTTP version field. As we can infer, the method is GET, which is the method for requesting objects (because, you know, we want to "get" them).

The lines below the request line are the header lines. Connection: close means "close the connection after giving me what I requested", i.e., use a non-persistent connection. Connection: keep-alive would be for a persistent connection. The user agent specifies what type of browser is making the request. And Accept-language: fr means get the French version if it exists.

It may look like the header line specifying the host is unnecessary, because the browser needed to know the host in order to establish the TCP connection in the first place. It turns out that it is needed by Web proxy caches, which we'll see later.

There is also an entity body that follows the header lines. It wasn't included in the example above because the entity body is usually empty for GET requests. The entity body is usually used for POST requests, which are used to submit information, like user inputs from a form. The information that needs to be submitted are included in the entity body.

This is a little bit unconventional, but GET requests can also be used to submit information. Instead of going in the entity body, the inputted data is included in the URL with a question mark, like google.com/search?q=what+is+http, where q is the name of the input and the stuff after the '=' is the value of the input.

Response Messages: Here You Go, Now Leave Me Alone!

Here's an example of what a response message might look like:

HTTP/1.1 200 OK

Connection: close

Date: Sat, 16 Sep 2023 19:23:09 GMT

Server: Apache/2.2.3 (CentOS)

Last-Modified: Sat, 16 Sep 2023 18:34:09 GMT

Content-Length: 6821

Content-Type: text/html

(the requested data...)

The first line is the status line. There are three fields: the protocol version, the status code, and the status message.

The lines below are the header lines. The Date: header line specifies when the HTTP response was created and sent by the server. The Server: header line specifies the type of the Web server. Last-Modified: is when the object was created or last modified. Content-Length: specifies the object's size in bytes.

The entity body contains the actual object (the requested data).

Cookies! 🍪

HTTP is stateless, so the server doesn't store information about the requests that the clients are making. However, servers sometimes want a way to identify users so that they can restrict access or provide user-specific information when responding to the client. This is where cookies come in. Cookies allow sites to keep track of users*.

*Technically, cookies keep track of browsers. But users usually use one browser, and that browser is usually used by only one user (unless it's a shared computer). So tracking a browsing session is essentially tracking a user.

Let's say we go to our favorite shopping site on our favorite browser. The site will create a unique ID (let's say 9876) for this request, store the ID in its database, and send the ID back in the response. The response will include a Set-cookie: header; this tells the browser to create and store a cookie file. For example, the header will look like Set-cookie: 9876, so the browser will store 9876 in a file. Now, as we navigate through our shopping site, the site can ask the browser to include this cookie in every request. Then the site will query its database for this ID to identify the user. If the ID exists in the database, the site will know the user has visited before, and if the ID isn't in the database, the site will consider this a new user visiting for the first time.

When we say that the site can identify a user, the site doesn't actually know who we are, like our name and stuff. Until we enter that information ourselves. When we enter our information to buy something or to fill out a form, the site can store that information and associate that with the ID in the cookie. So the next time we visit that site, it can look at the ID and the information linked to that ID.

Web Caching

A Web cache is a server that stores Web pages and objects that have been recently requested.

When a browser requests an object, it sends the request to a Web cache. If the Web cache has a copy of the requested object, the Web cache sends it to the browser. If it doesn't, the Web cache requests it from the origin server, which sends the object to the Web cache. The Web cache stores a copy of it in its storage and sends that copy to the browser.

Having a Web cache can significantly speed things up. If the server is physically located far away from the client or if the bottleneck link between the server and client is really bad, then using a Web cache can reduce response times, assuming the Web cache is physically closer to the client or the bottleneck link between the Web cache and client is faster than the bottleneck link between the server and client.

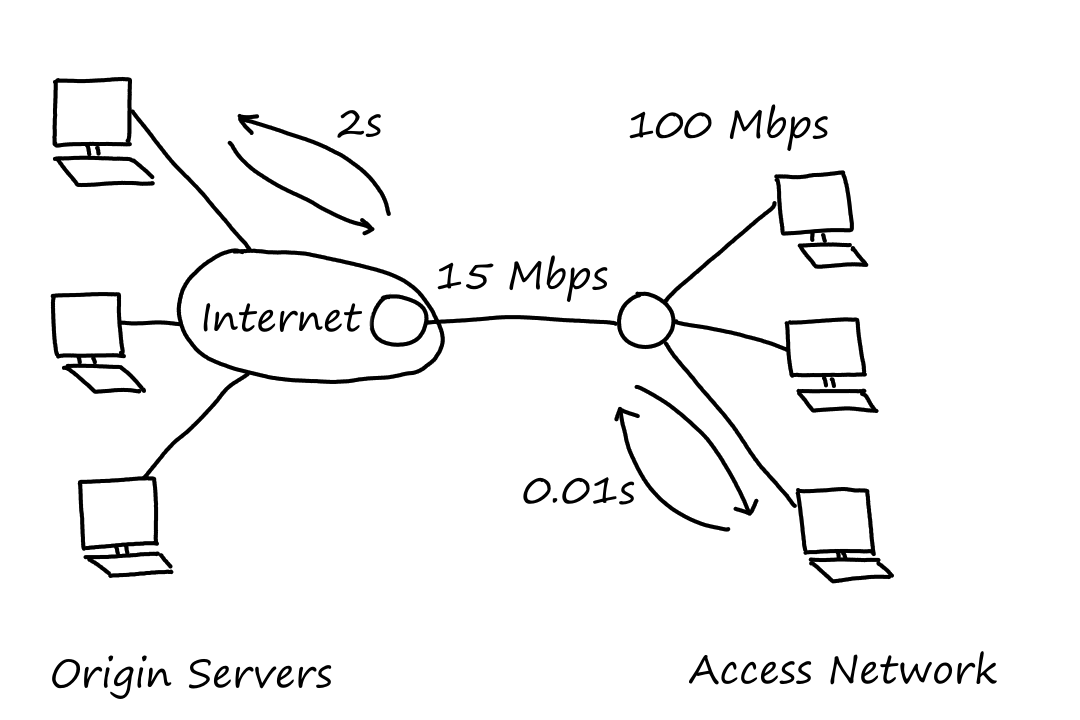

Let's look at a hypothetical access network connected to the Internet. The router in the access network is connected to the router in the Internet by a Mbps link. The links in the access network have a transmission rate of Mbps. Suppose the average object size is Mbits and that the average request rate is requests per second. Also suppose that it takes seconds on average for data to travel between the router in the Internet and the origin servers (we'll informally call this "Internet delay"). And that it takes seconds on average for data to travel in the access network.

Recall that traffic intensity is where is the average rate at which bits are traveling and is the transmission rate of the link.

On the access network, requests per second and Mbits per request means there are Mbits traveling per second. So the traffic intensity is

which isn't too bad. In fact, the delay on the access network is negligible for a traffic intensity of .

On the access link, the traffic intensity is

which is really bad. The average response time could be in minutes. (Recall that queuing delay increases as traffic intensity approaches .)

One solution is to upgrade the access link, but that's costly.

Instead, let's consider placing a Web cache in the access network. Suppose that there is a chance that the cache can satisfy a request (i.e., the hit rate is ). This means only of the requests are going through the access link, so the traffic intensity is .

The average delay is the sum of the access network delay, access link delay, and Internet delay. The access network delay is seconds; the access link delay is negligible with a traffic intensity of ; and the Internet delay is seconds. Since of the requests stay in the access network and of the request go to the Internet, the average delay is

seconds

If we had upgraded the access link instead of using a Web cache, the average delay would be at least seconds from the Internet delay alone. So we can see that using a Web cache provides faster response times.

Conditional GET

The problem with using a Web cache is that objects in the Web cache can get outdated since the Web cache only stores a copy of the object while the actual object sits at the server and can be modified. The simple fix is for the Web cache to send a request to the server to check the last time the object was modified.

Let's say the browser sends a request to the Web cache. Suppose the Web cache doesn't have it, so it will send a request to the server. The server will send the object to the Web cache:

HTTP/1.1 200 OK

Date: Sun, 17 Sep 2023 19:10:09

Server: Apache/1.3.0 (Unix)

Last-Modified: Sun, 17 Sep 2023 18:30:09

Content-Type: image/gif

(the requested data...)

Now, the Web cache will send the object to the browser and make a copy of the object. Let's say the browser requests the same object one week later. The Web cache has a copy of the object this time, but it will send a conditional GET to the server first to check if there have been any changes:

GET /fruit/kiwi.gif HTTP/1.1

Host: www.exotiquecuisine.com

If-modified-since: Sun, 17 Sep 2023 18:30:09

Let's say the object hasn't been modified since then. The server will then send this to the Web cache:

HTTP/1.1 304 Not Modified

Date: Sun, 24 Sep 2023 15:39:09

Server: Apache/1.3.0 (Unix)

The entity body will be empty since it is pointless to send the object. However, if the object had been modified, the object would have been sent in the entity body.

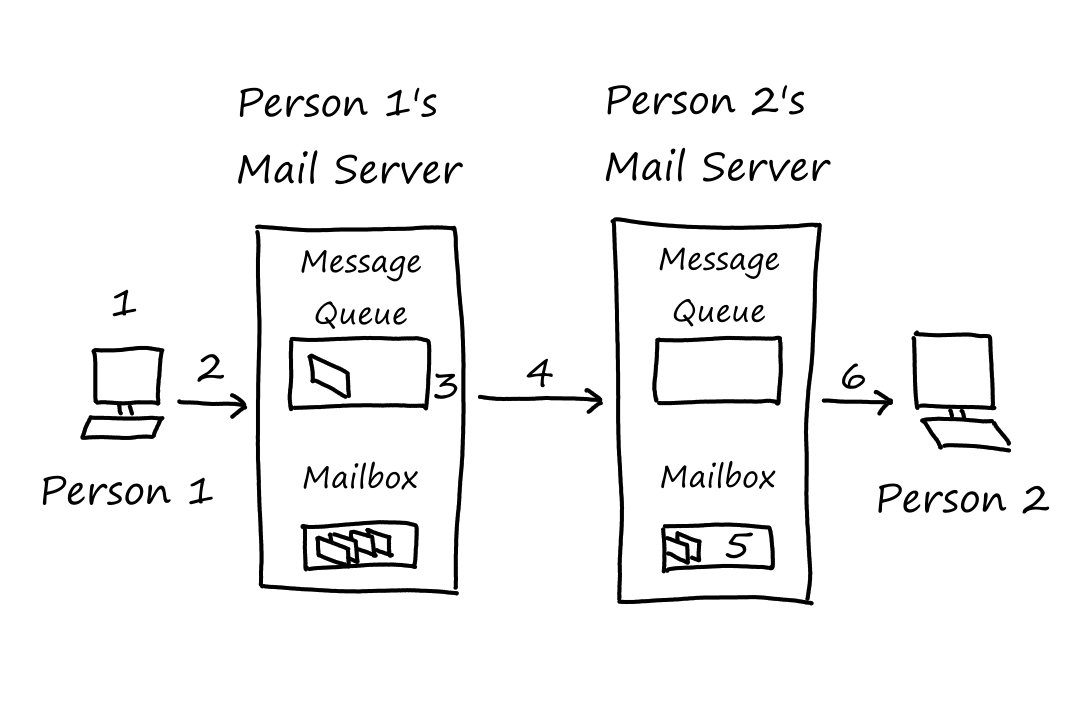

The Internet's mail system has three components: user agents, mail servers, and the Simple Mail Transfer Protocol (SMTP). User agents are applications, such as Gmail and Outlook, that allow us to read and write emails. Mail servers store emails in mailboxes and send emails to other peoples' mailboxes. Before being sent, emails wait in the sender's mail server's message queue.

We, as individuals, have our own mail servers (in a sense). This may sound a little weird (what? I'm running a server?). When we create an email account on, say, Google, Google manages a mail server for us. This mail server is shared with other Google users. (Note, the mail server is shared, not the mailbox.)

SMTP

SMTP is the application-layer protocol for email applications. Specifically, it is the protocol for sending emails (not receiving). It uses TCP as the underlying transport-layer protocol (which is probably not surprising). When a mail server sends mail, it is an SMTP client. When a mail server receives mail, it is an SMTP server.

SMTP requires emails to be encoded using 7-bit ASCII. This restriction is a result of SMTP being around since the 1980s, when people weren't sending a lot of emails, much less emails with large attachments like images and videos.

- Person 1 uses their user agent to write and send an email

- Person 1's user agent sends the email to Person 1's mail server, which places the email in its message queue

- Person 1's mail server opens a TCP connection to Person 2's mail server

- After TCP's whole handshaking thing, Person 1's mail server sends the email

- Person 2's mail server receives the email and puts it in Person 2's mailbox

- Person 2 uses their user agent to read the email

Person 1's user agent sends emails to Person 1's mail server, which then sends emails to Person 2's mail server. It may seem more efficient for Person 1's user agent to send emails directly to Person 2's mail server. This won't work though because user agents don't have a way to deal with Person 2's mail server being unreachable for whatever reason. Mail servers, which are always on and always connected to the Internet, keep retrying every minutes until it works.

SMTP uses persistent connections. So if a mail server has multiple emails to send to the same mail server, then it can use the same TCP connection to send all the emails.

In HTTP, each object is sent in its own response message. In SMTP, all of the objects are placed into one email.

After the TCP handshake, there is another SMTP handshake that takes place between the mail servers before they can send and receive emails.

Mail Message Formats

An email consists of a header and a body. The header is kinda like the metadata of the email. An example:

From: me@gmail.com

To: you@gmail.com

Subject: Thanks for reading this

And, of course, the body contains the message.

Mail Access Protocols

As mentioned eariler, SMTP is used only for sending emails. For receiving emails, there are two main protocols. Web-based applications, like Google's Gmail website and app, use HTTP. Some other clients, like Outlook, use Internet Mail Access Protocol (IMAP).

Both of these protocols also allow us to manage our emails. For example, we can move emails into folders, delete emails, and mark emails as important.

Mail servers only use SMTP to send emails. User agents on the other hand, can use SMTP or HTTP to send emails to the mail server.

DNS

As we probably know, computers like to work with numbers. So naturally, servers are uniquely identified by a set of numbers (IP address). However, we don't type a bunch of numbers if we want to go to Google — we type "google.com" (does anyone actually type this to Google something?). "google.com" is what's known as a hostname, which is a human-readable name for a server.

Services Provided by DNS

The Internet's domain name system (DNS) is what allows us to type human-readable names to go to websites. It converts human-readable hostnames to IP addresses.

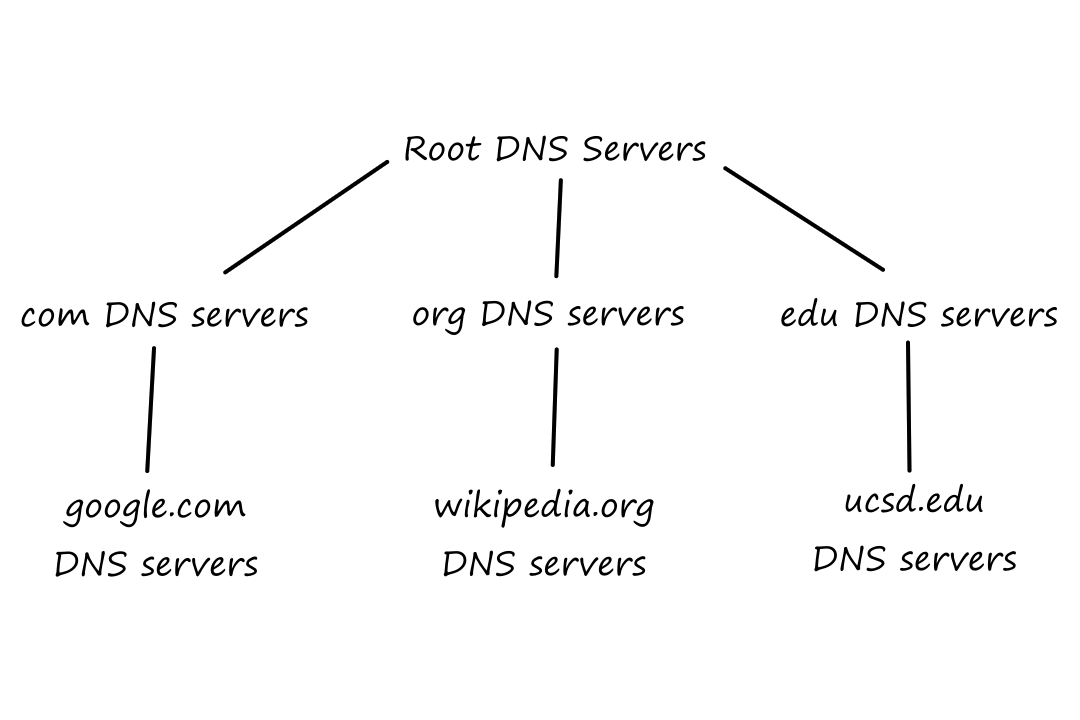

DNS is a distributed database deployed on a bunch of DNS servers.

It is also an application-layer protocol that allows hosts to query the database. It uses UDP.

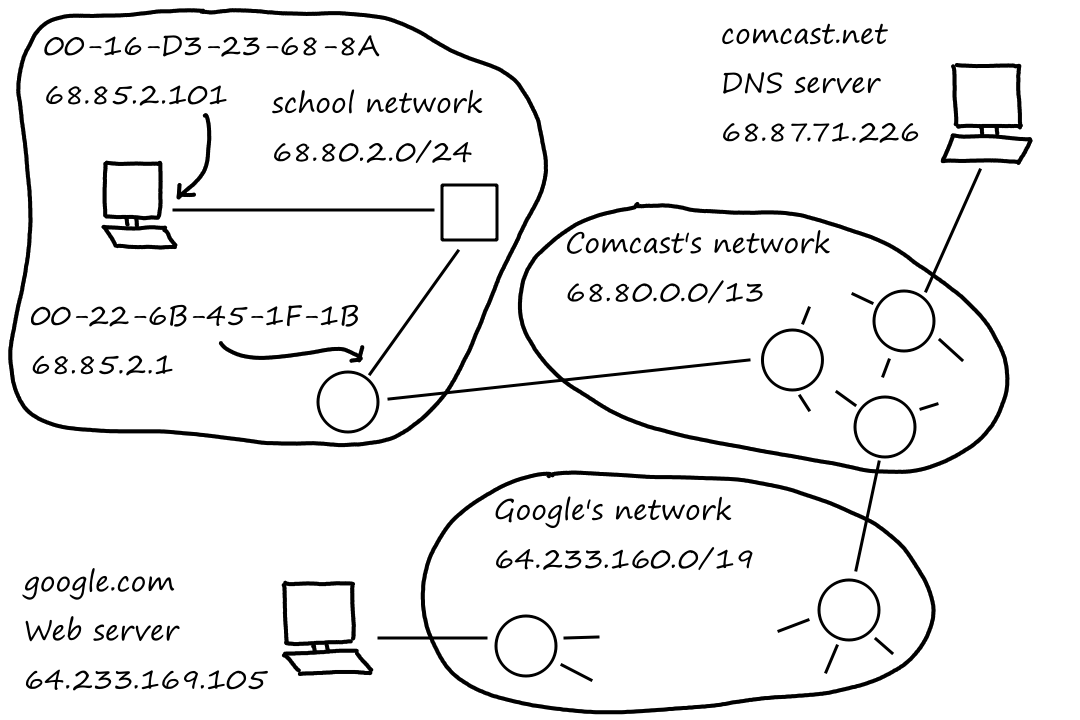

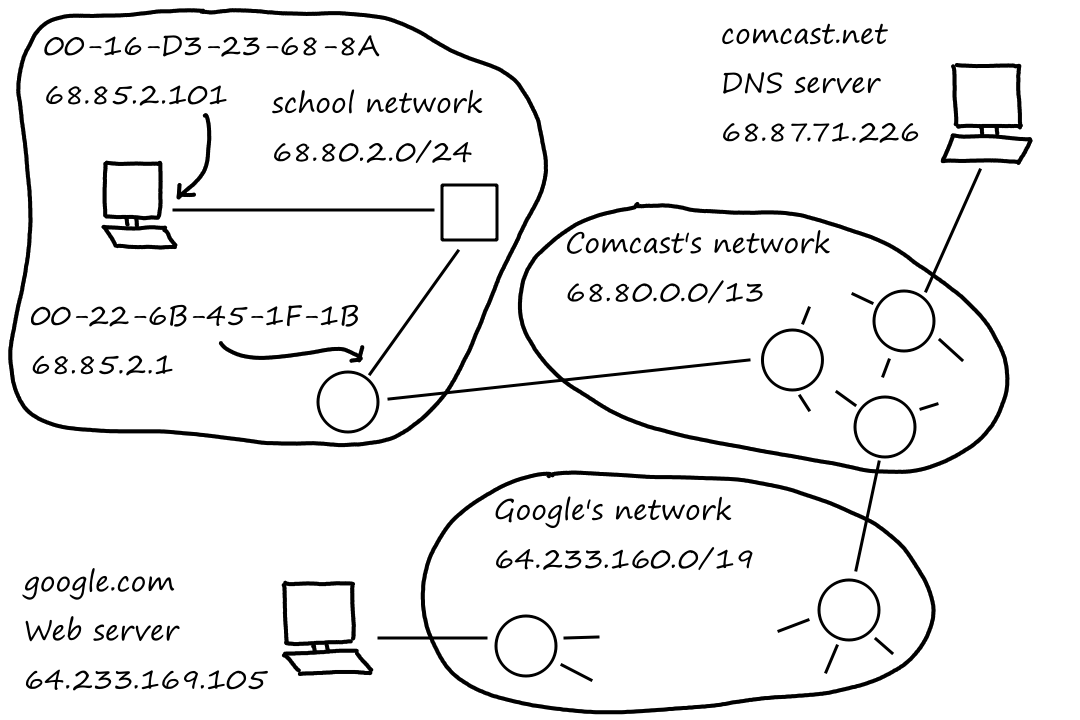

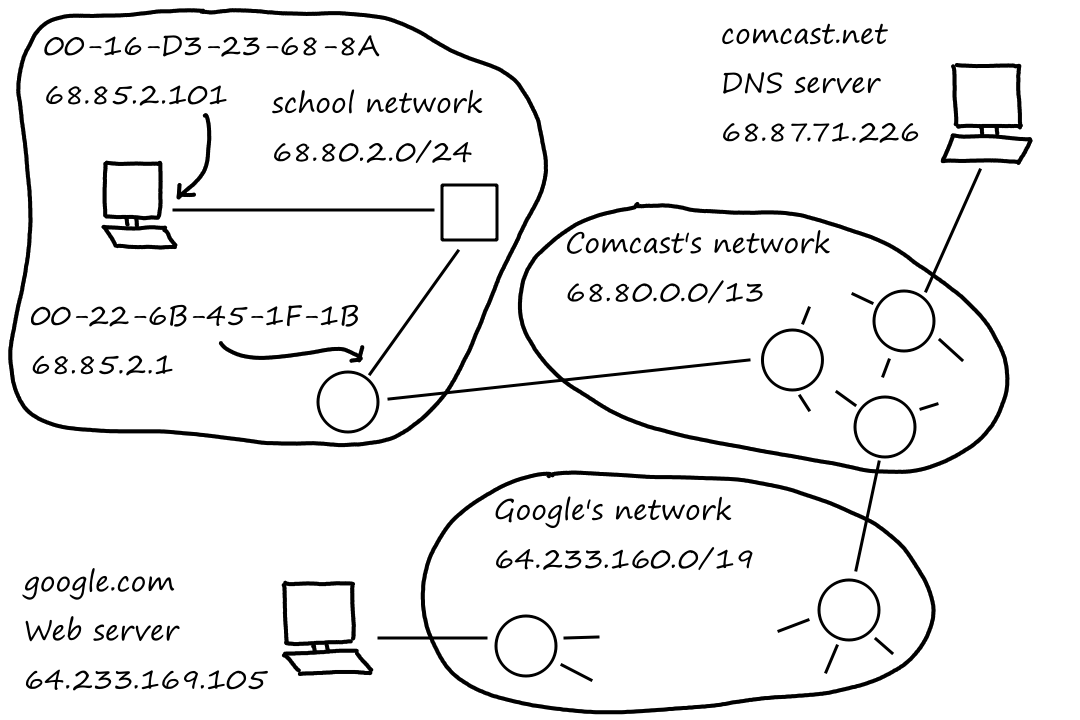

Here's how it works:

- There is a DNS application running on our phone/computer

- The browser sends the hostname to the DNS application

- The DNS application uses the hostname to query a DNS server for the IP address

- The DNS application receives the IP address and sends it to the browser

- The browser uses the IP address to open a TCP connection

From this, we can see yet another source of delay: translating the hostname to an IP address.

DNS also provides additional related services. Sometimes, a hostname can be complicated, like "relay1.west-coast.enterprise.com". An alias can be set up so that we can type "enterprise.com" instead of that long hostname. The long (real) hostname is called the canonical hostname. This is called host aliasing. Something similar is available for mail servers too. "gmail.com" is more likely an alias than an actual hostname for a mail server (which could be something like "relay1.west-coast.gmail.com"). This is mail server aliasing.

Companies often have several servers running their website. Each server has its own IP address, but those IP addresses should all point to the same website. The DNS database stores this set of IP addresses and rotates between the IP addresses so that traffic is distributed evenly across all servers. So DNS also provides load distribution.

How DNS Works

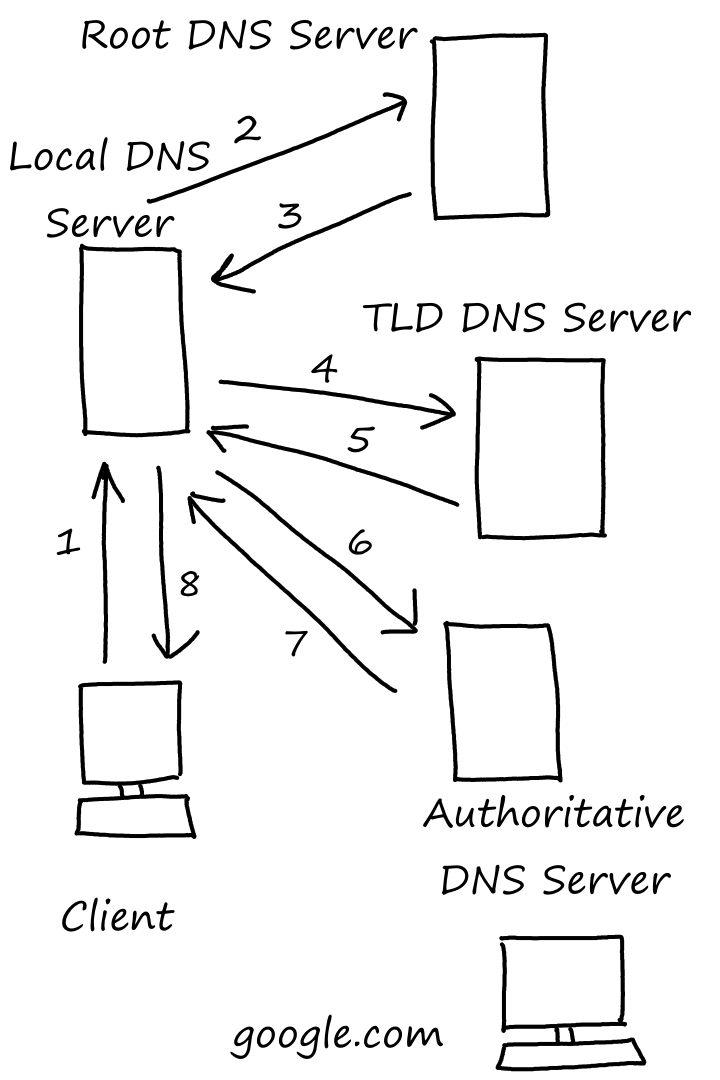

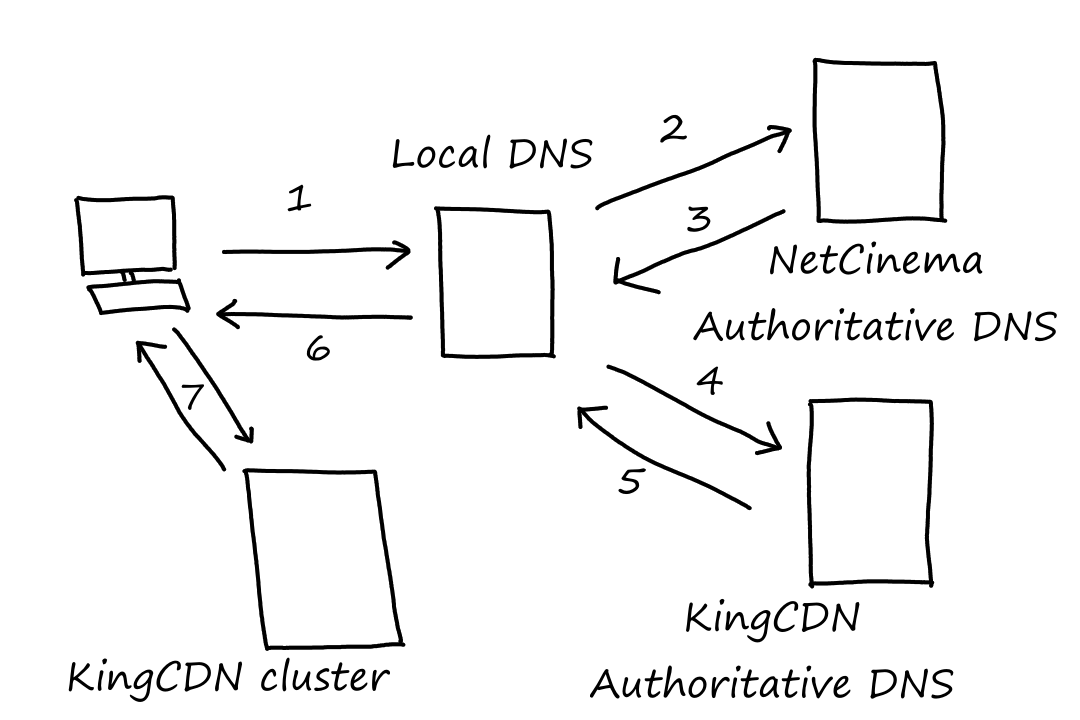

DNS is a distributed database organized in a hierarchy. At the top are root DNS servers. This is where the translation starts. The DNS application queries the root DNS server, which then looks at the top-level domain (e.g., com, org, edu, gov, net). It does this because there are dedicated DNS servers for each top-level domain (i.e., there are DNS servers for all .com websites, DNS servers for all .edu websites, etc.). Appropriately, they're called top-level domain (TLD) servers. So the root server will tell the DNS application which TLD server to contact. The TLD server will send back to the DNS application the IP address of the authoritative DNS server, which is where the actual IP address of the website sits. So finally, the DNS application will contact the authoritative DNS server for the IP address of the website.

There's also a local DNS server, which aren't part of the hierarchy for some reason. The local DNS server is the entry point into the DNS hierarchy; the DNS application first contacts the local DNS server, which then contacts the root DNS server. Local DNS servers are managed by ISPs.

1. Local DNS! What is google.com's IP address?

2. Root DNS! What is google.com's IP address?

3. Go ask a com TLD server. Here's the IP address of one.

4. TLD DNS! What is google.com's IP address?

5. Go ask one of Google's authoritative servers. Here's the IP address of one.

6. Authoritative DNS! What is google.com's IP address?

7. The IP address is 127.0.0.1.

8. I finally know google.com's IP address. It's 127.0.0.1.

In the example above, the queries from the local DNS server (2, 4, and 6) are iterative queries. This means that the responsibility of asking the next query is placed on the server that asked the query.

There are also recursive queries, in which the responsibility of asking the next query is placed on the server who got asked. In the example above, 1 is a recursive query.

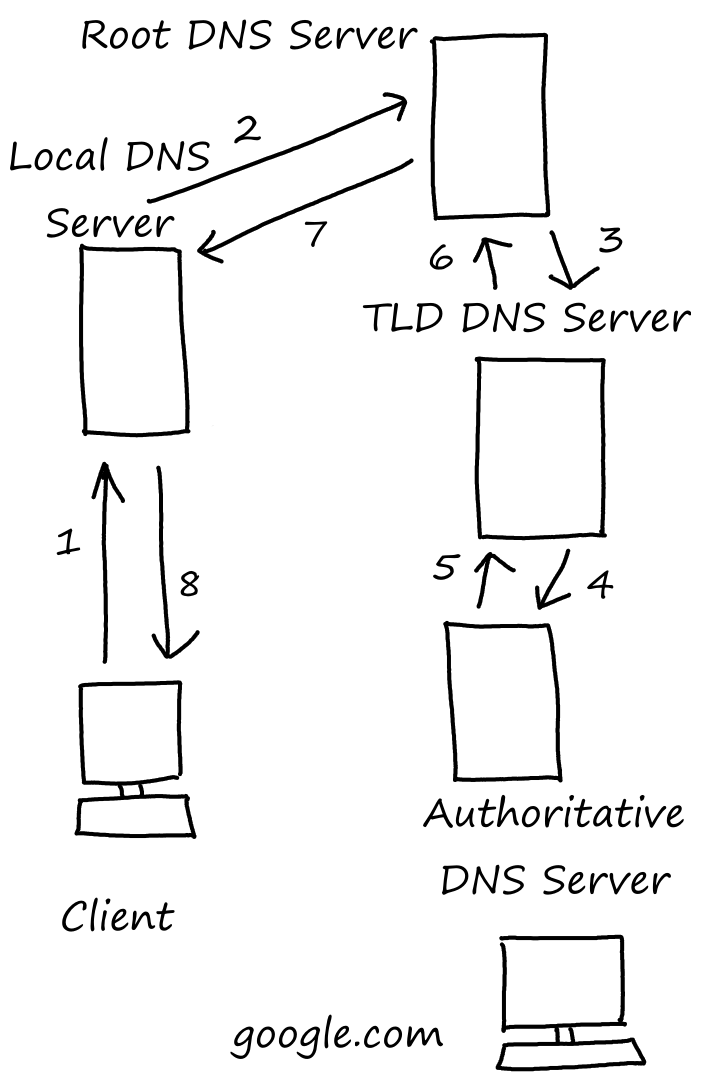

Here's an example where all the queries are recursive.

1. Local DNS! What is google.com's IP address?

2. Root DNS! What is google.com's IP address?

3. TLD DNS! What is google.com's IP address?

4. Authoritative DNS! What is google.com's IP address?

5. TLD DNS! The IP address is 127.0.0.1.

6. Root DNS! The IP address is 127.0.0.1.

7. Local DNS! The IP address is 127.0.0.1.

8. I finally know google.com's IP address. It's 127.0.0.1.

In practice though, most queries are iterative.

DNS Caching

As we saw earlier, there are a lot of queries that get made when going through the hierarchy. This traversal can be skipped if the DNS server stores the IP address of whatever was queried. For example, a local DNS server can store the IP address of a website, so that if another host wants to visit the same website, the local DNS server doesn't have to ask all those other DNS servers; it just returns the IP address it stored. This cache isn't good for very long though (typically 2 days).

Especially for .com websites, root DNS servers are often bypassed since the local DNS server should typically have the IP address of the appropriate TLD server in its cache. How many .com websites do we (as a whole) go to in a day?

DNS Records and Messages

The entries in the database are called resource records. A resource record is a four-tuple that looks like this: (Name, Value, Type, TTL). TTL stands for "time to live", which indicates when the record should be removed from a cache. The name and value depend on what the type is. (We'll ignore the TTL in the examples below.)

Type A records are hostname-to-IP mappings. (relay1.abc.def.com, 145.37.93.126, A). The name is the hostname and the value is the IP address for that hostname.

Type NS records are domain-to-DNS-server mappings. (abc.com, dns.abc.com, NS). The name is a domain and the value is the hostname of a DNS server.

CNAME records are alias-to-canonical-hostname mappings. (abc.com, relay1.abc.com, CNAME). The name is an alias and the value is the canonical hostname.

MX records are the equivalent of CNAME records but for mail servers. (abc.com, mail.abc.com, MX).

Type AAAA records are also hostname-to-IP mappings, but for IPv6.

Inserting Records into the DNS Database

If we want to register a domain name, say "ourwebsite.com", we do so through a registrar. A registrar makes sure the domain is unique and inserts the appropriate records into the DNS database.

The registrar also needs the names and IP addresses of the primary and secondary authoritative DNS servers. Once that is provided, the registrar inserts a Type NS record and a Type A record into the appropriate TLD server. For example, (ourwebsite.com, dns1.ourwebsite.com, NS) and (dns1.ourwebsite.com, 199.199.199.199, A) are inserted into the TLD servers for .com websites. So when a (local) DNS server contacts a TLD server, the TLD server will return both the Type NS record and the Type A record.

nslookup is a tool for performing DNS queries.

Here's a sample output for nslookup google.com:

Server: dns-cac-lb-01.rr.comAddress: 3002:1997:f01:1::1Non-authoritative answer:Name: google.comAddresses: 2607:f8b0:4007:80f::200e172.217.14.110

The Server is the DNS server to which the query is sent. If no DNS server is specified in the command, then it is the default DNS server, which is usually the local DNS server.

Non-authoritative answer means the answer is not from an authoritative DNS server. What happened was that the local DNS server queried a non-authoritative server that had the answer in its cache.

Here, there happened to be two answers returned. 2607:f8b0:4007:80f::200e and 172.217.14.110 are two separate servers for google.com.

Here's a sample output for nslookup -type=NS google.com:

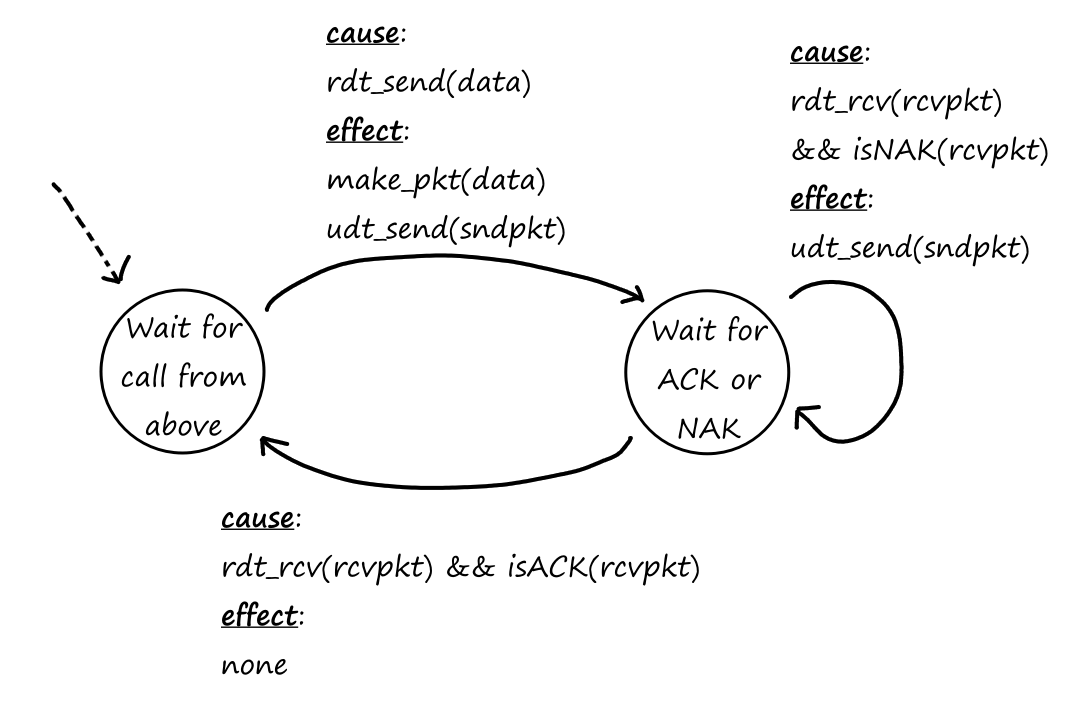

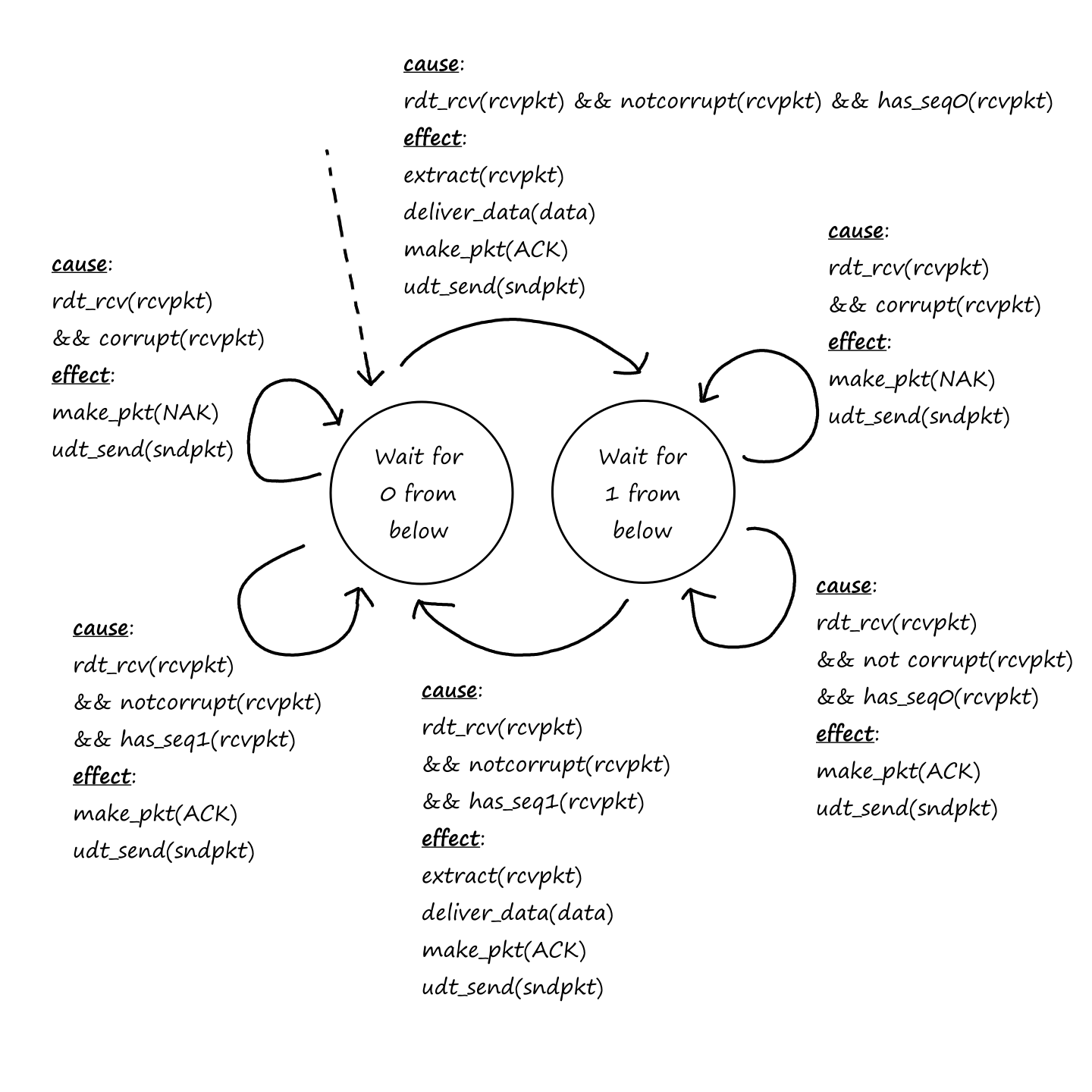

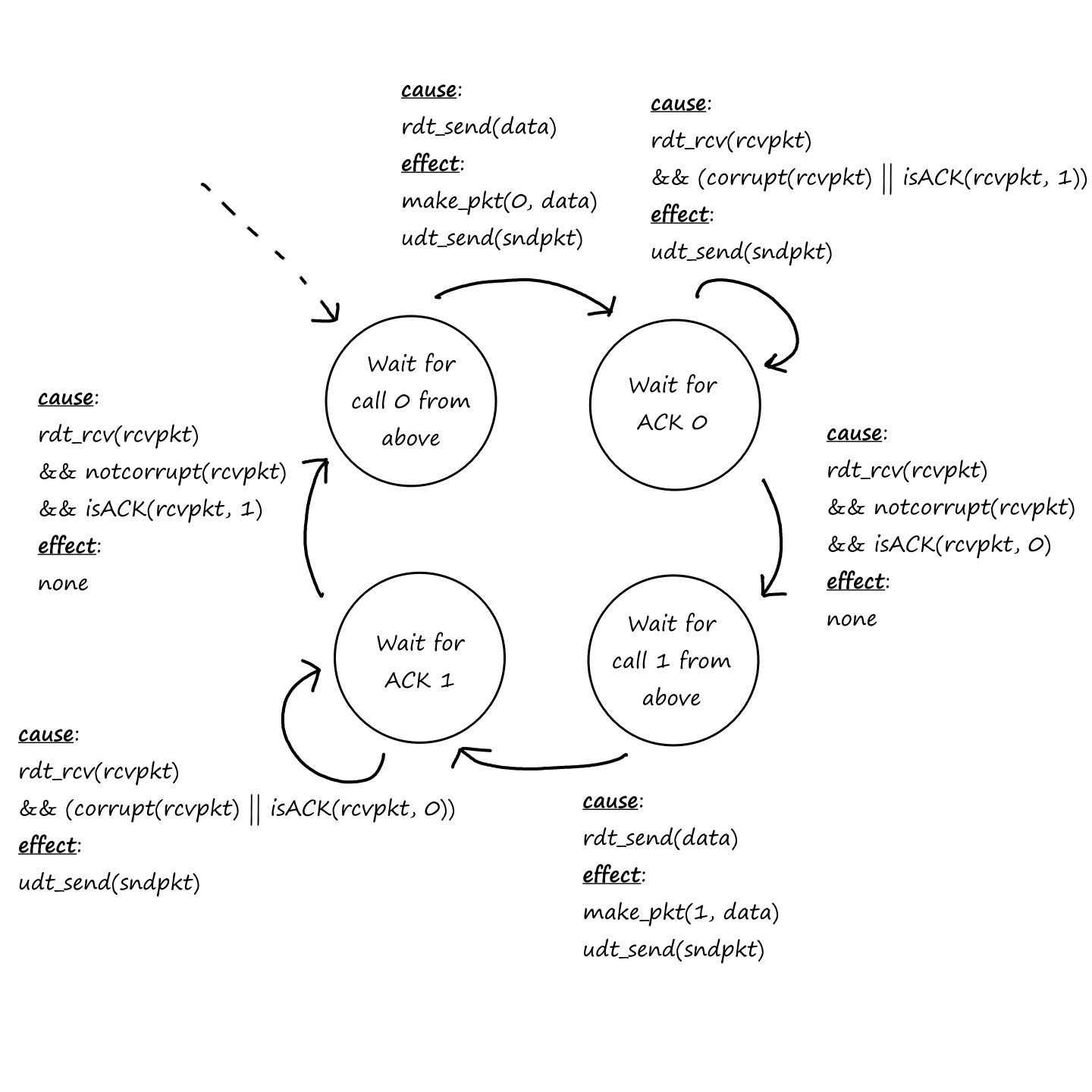

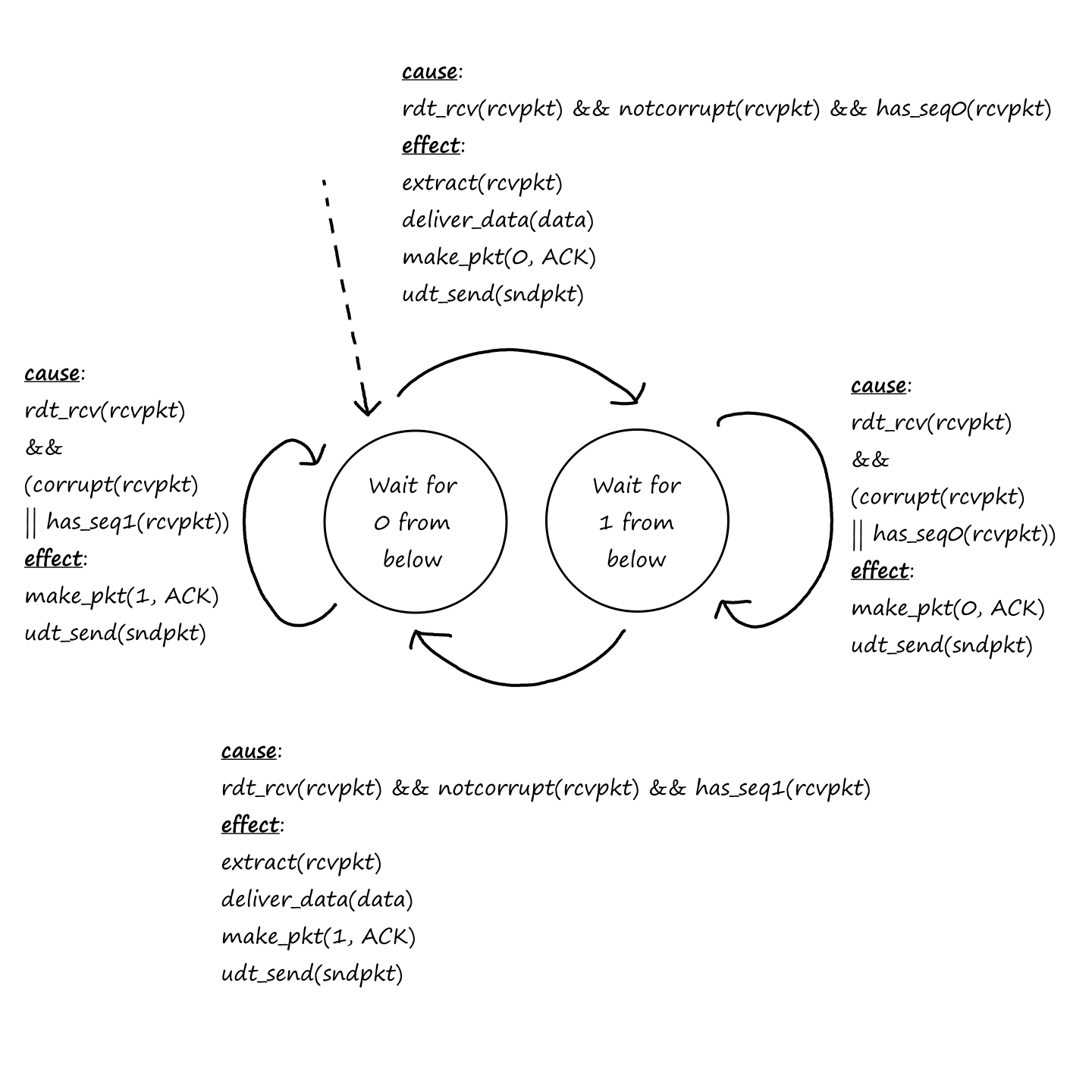

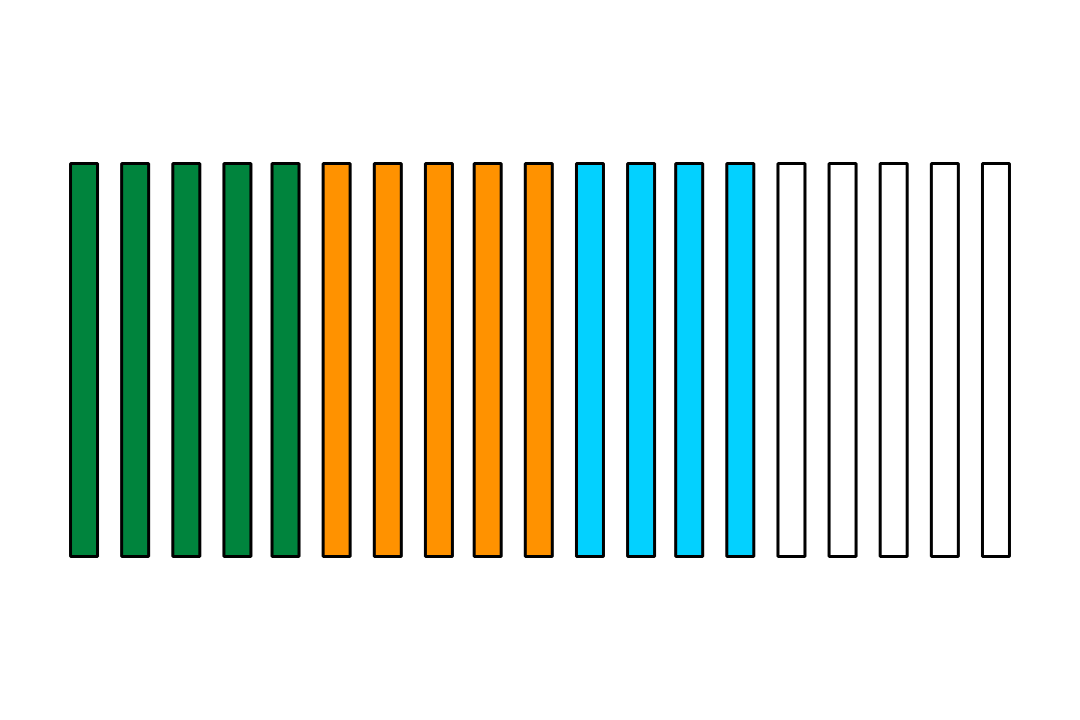

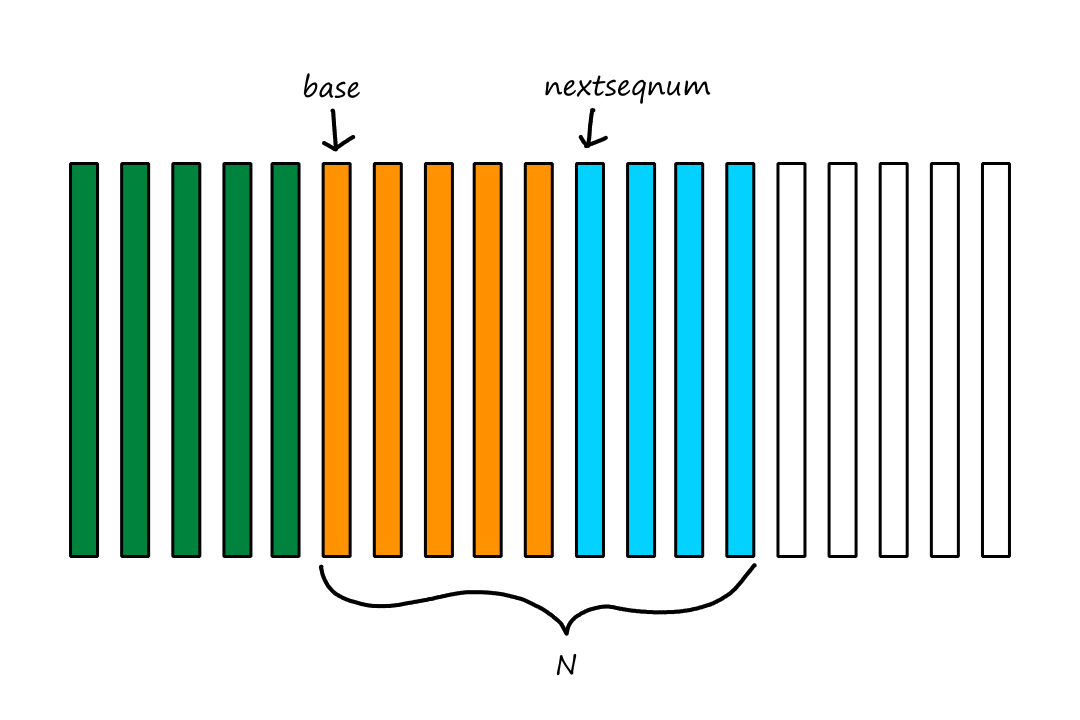

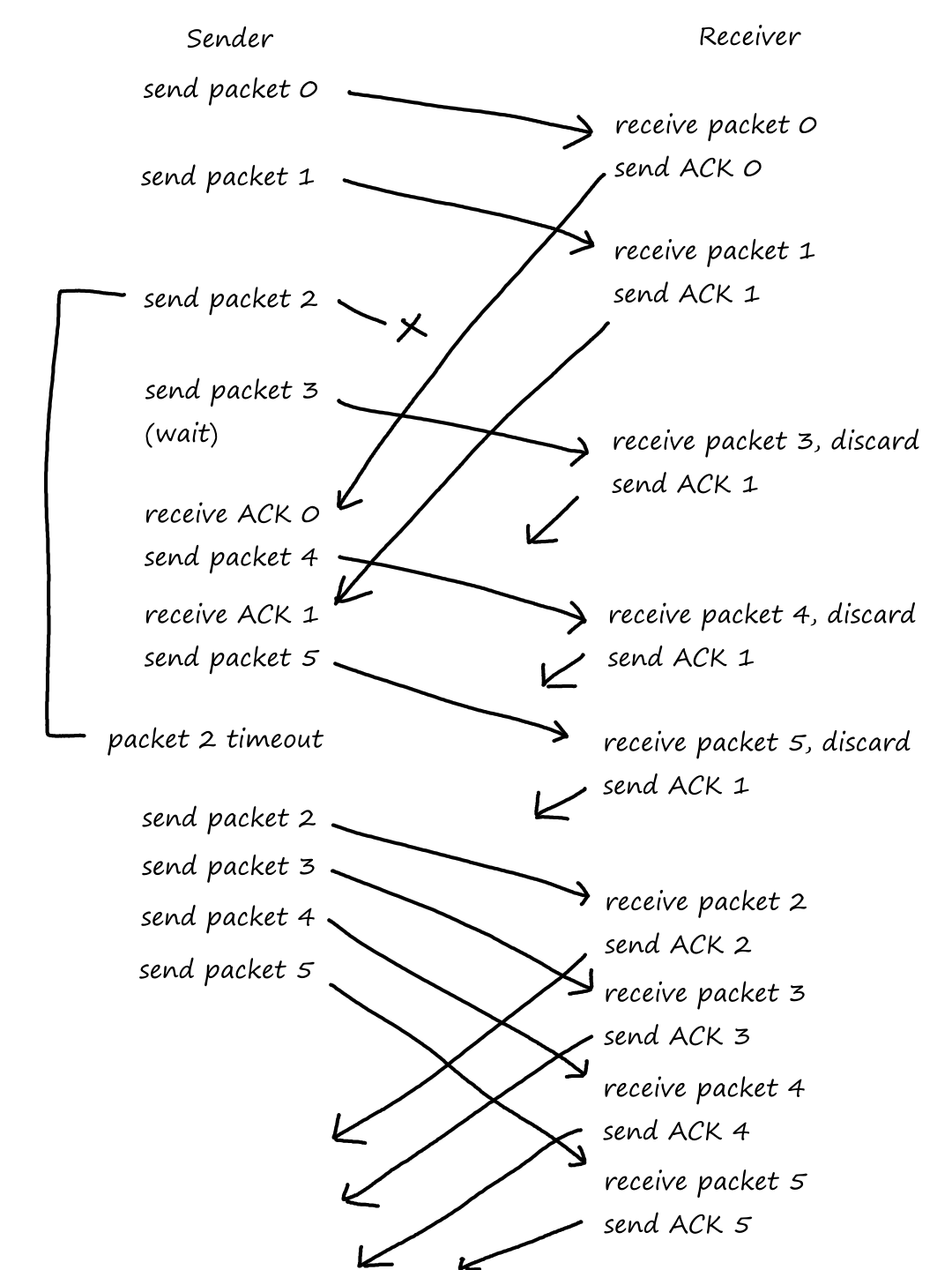

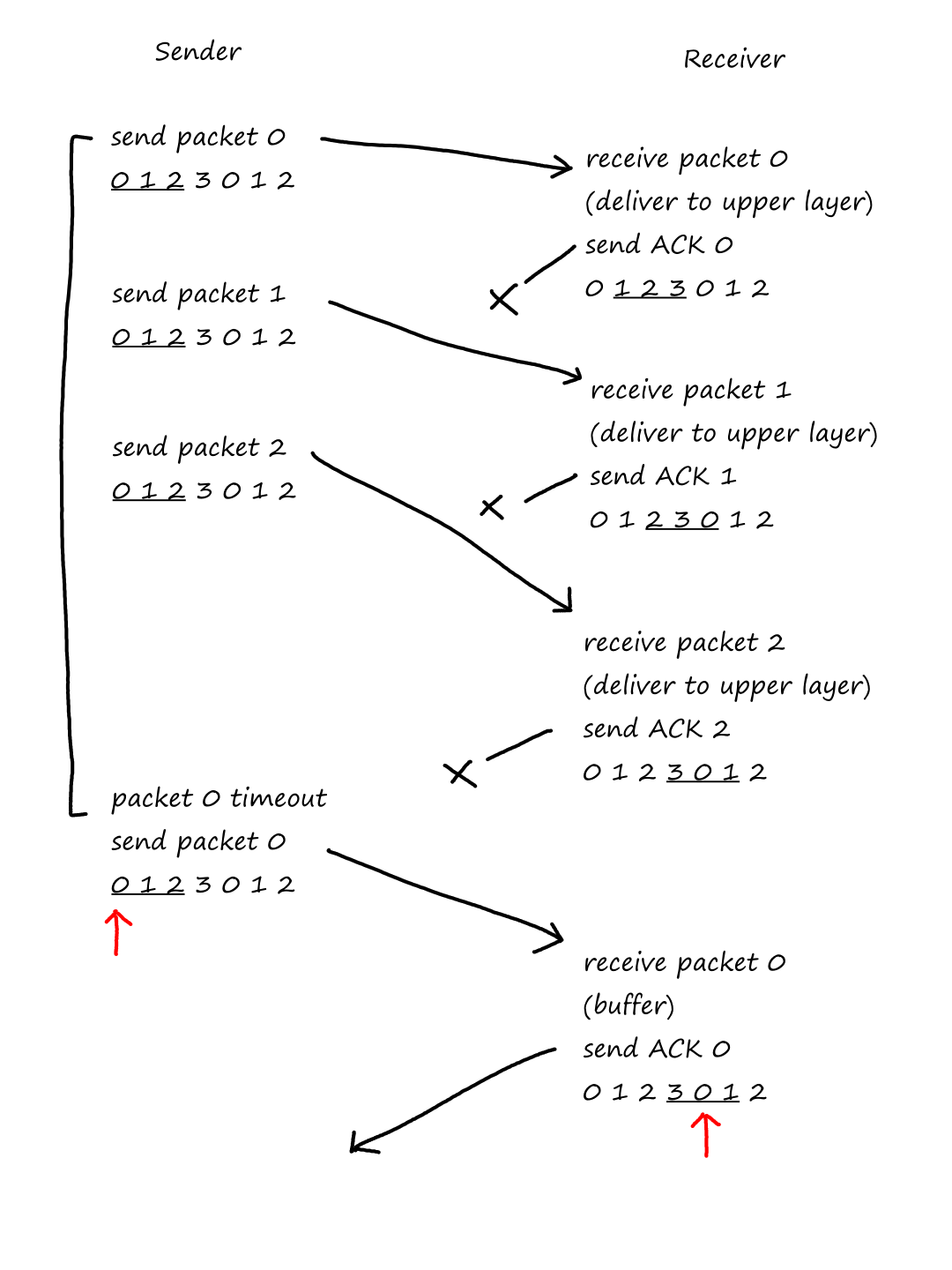

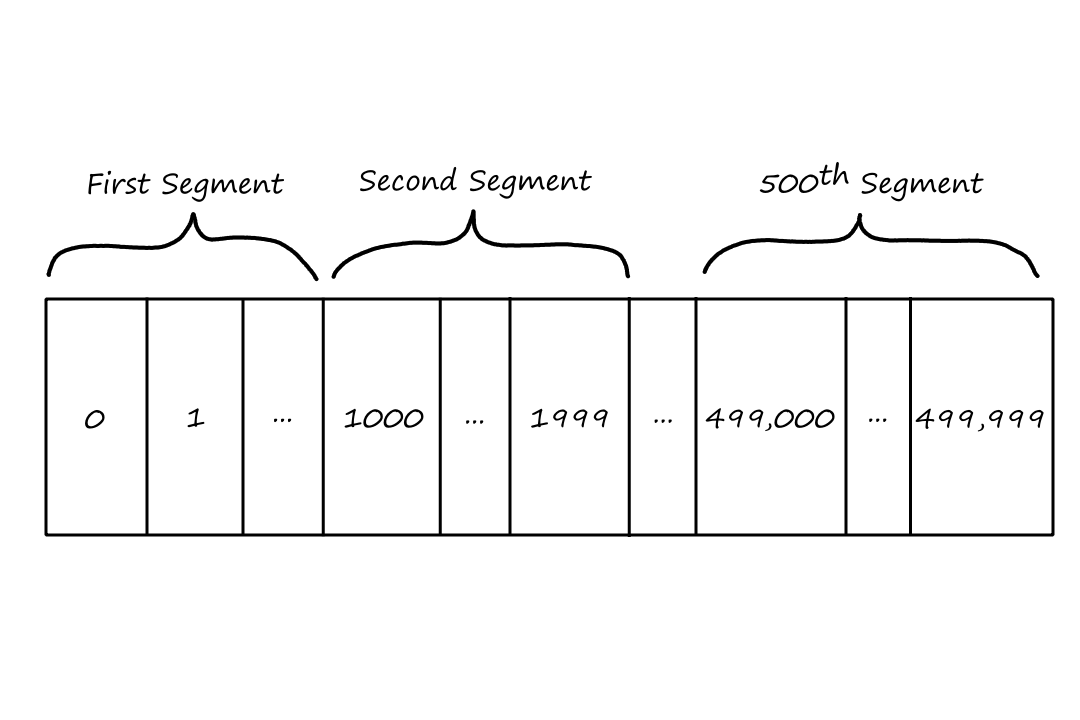

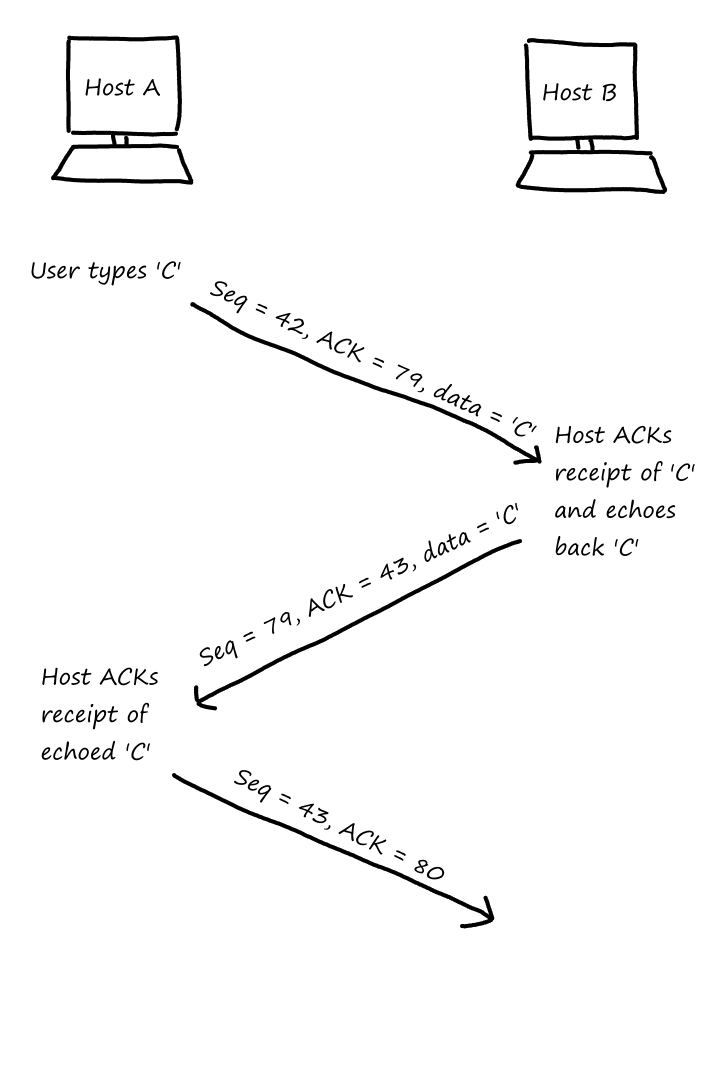

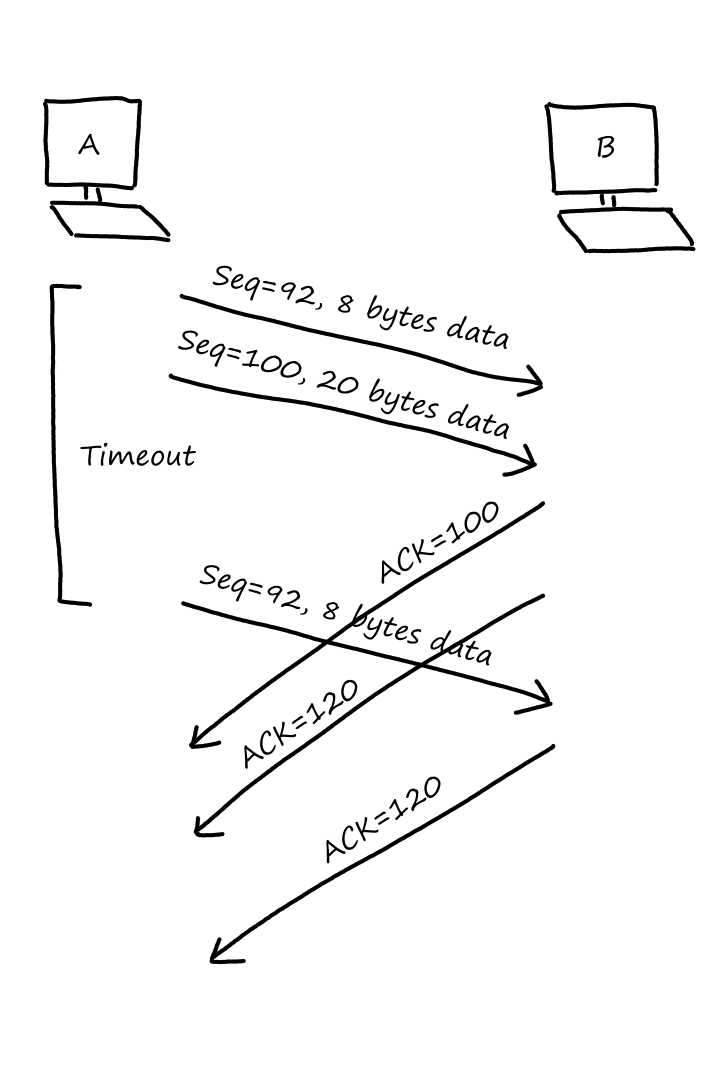

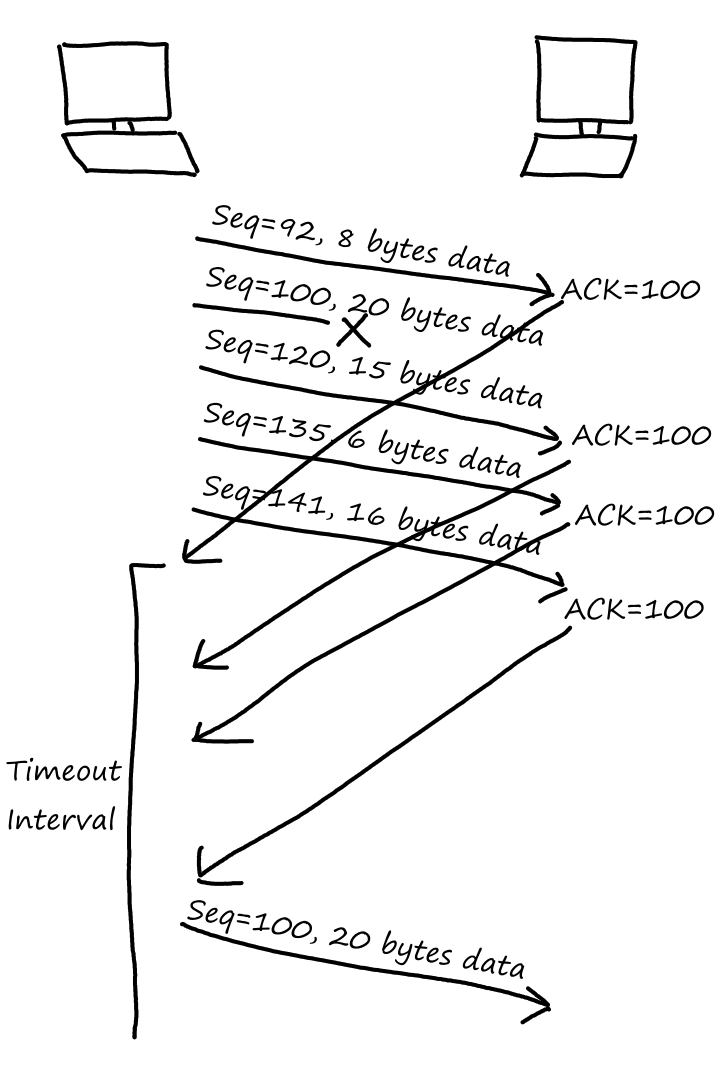

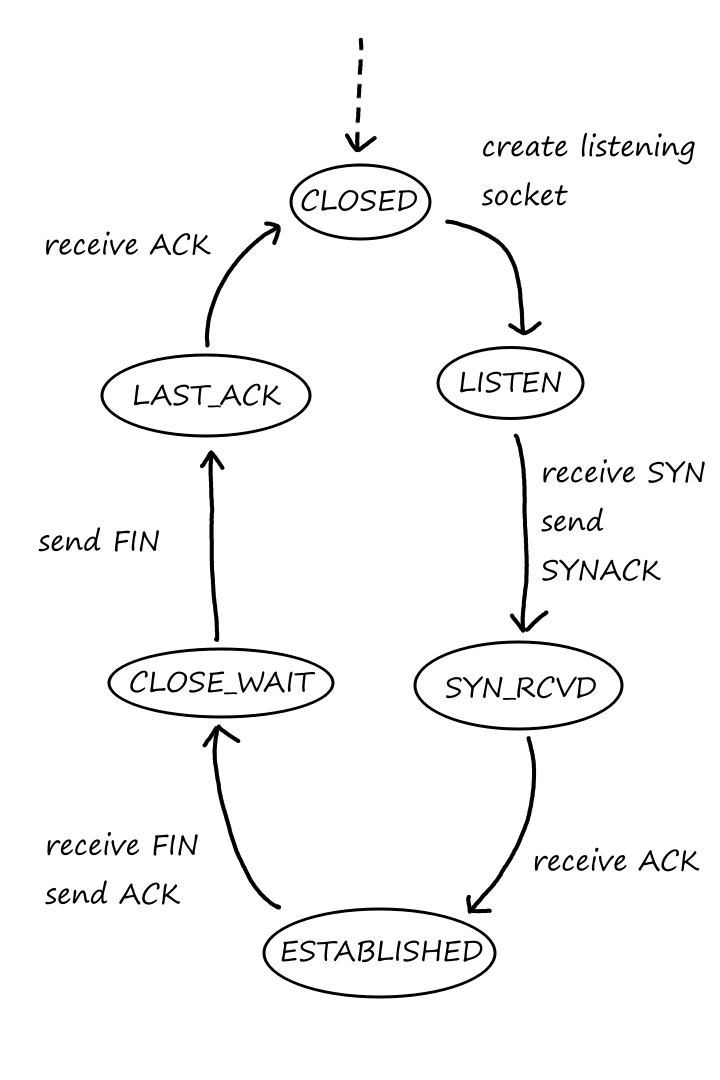

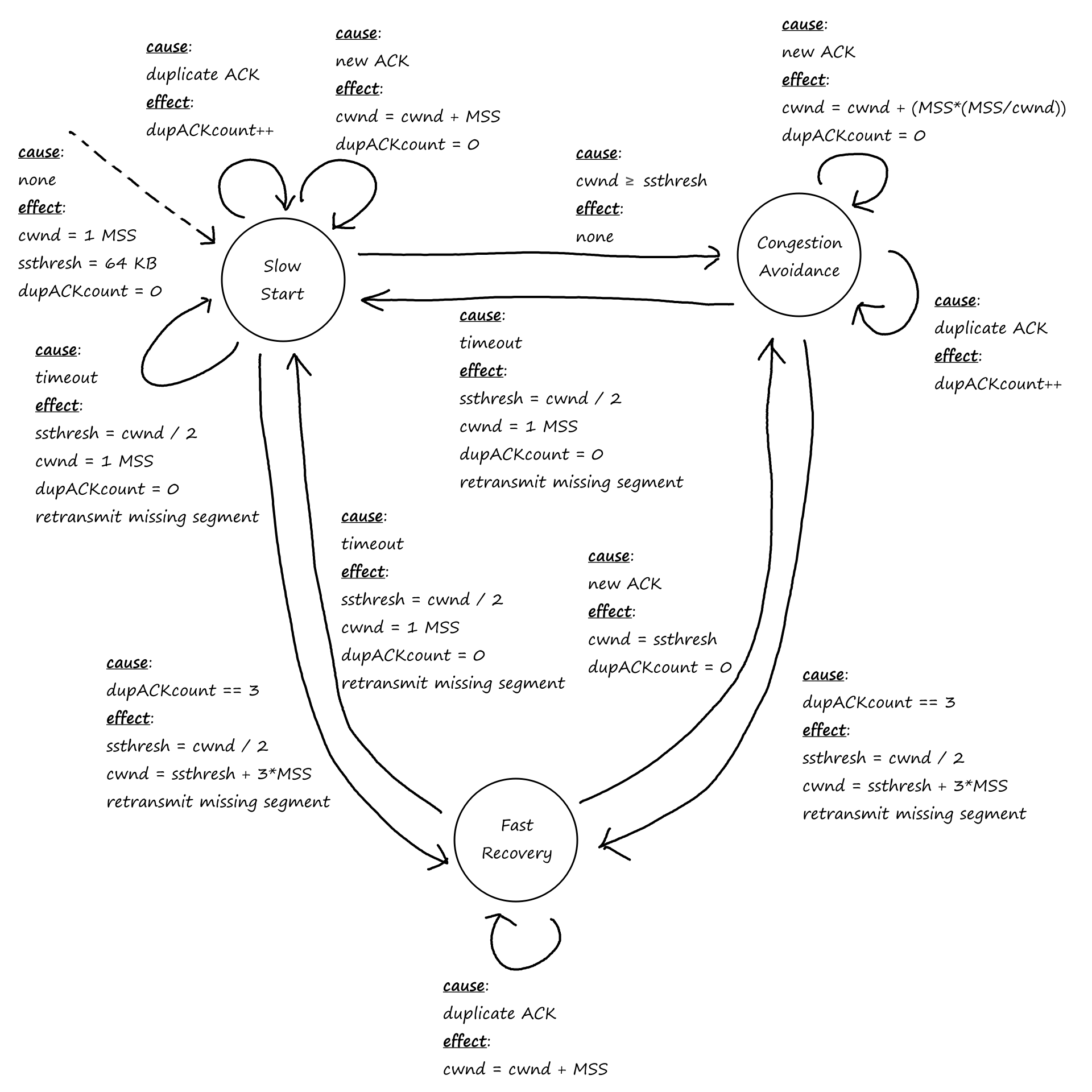

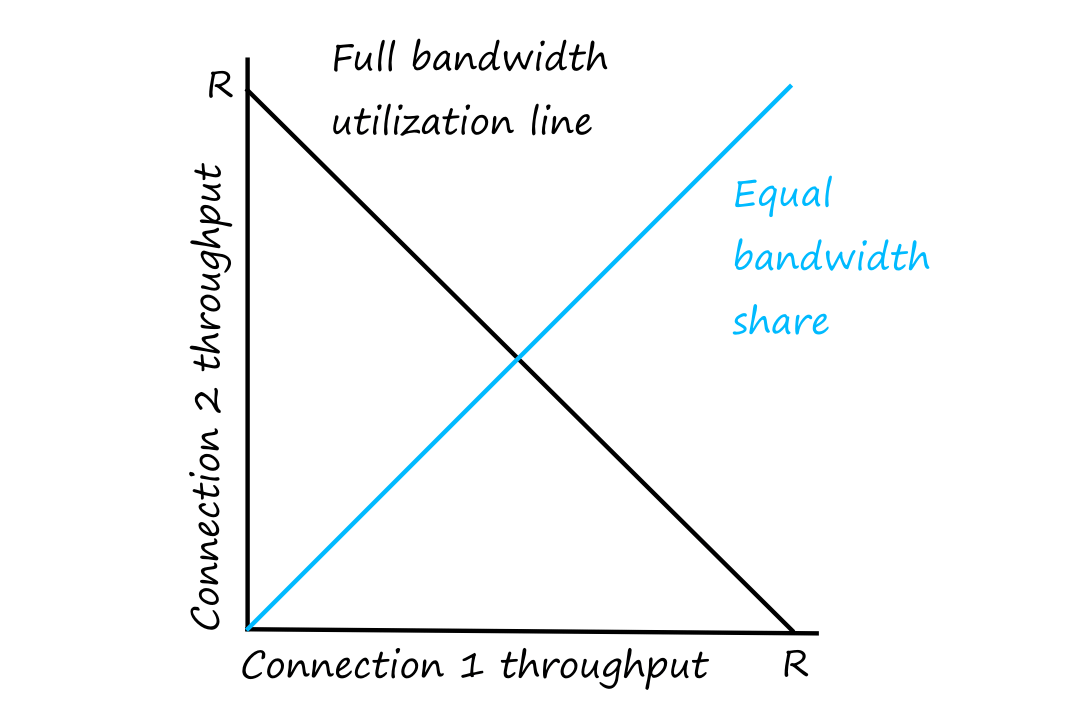

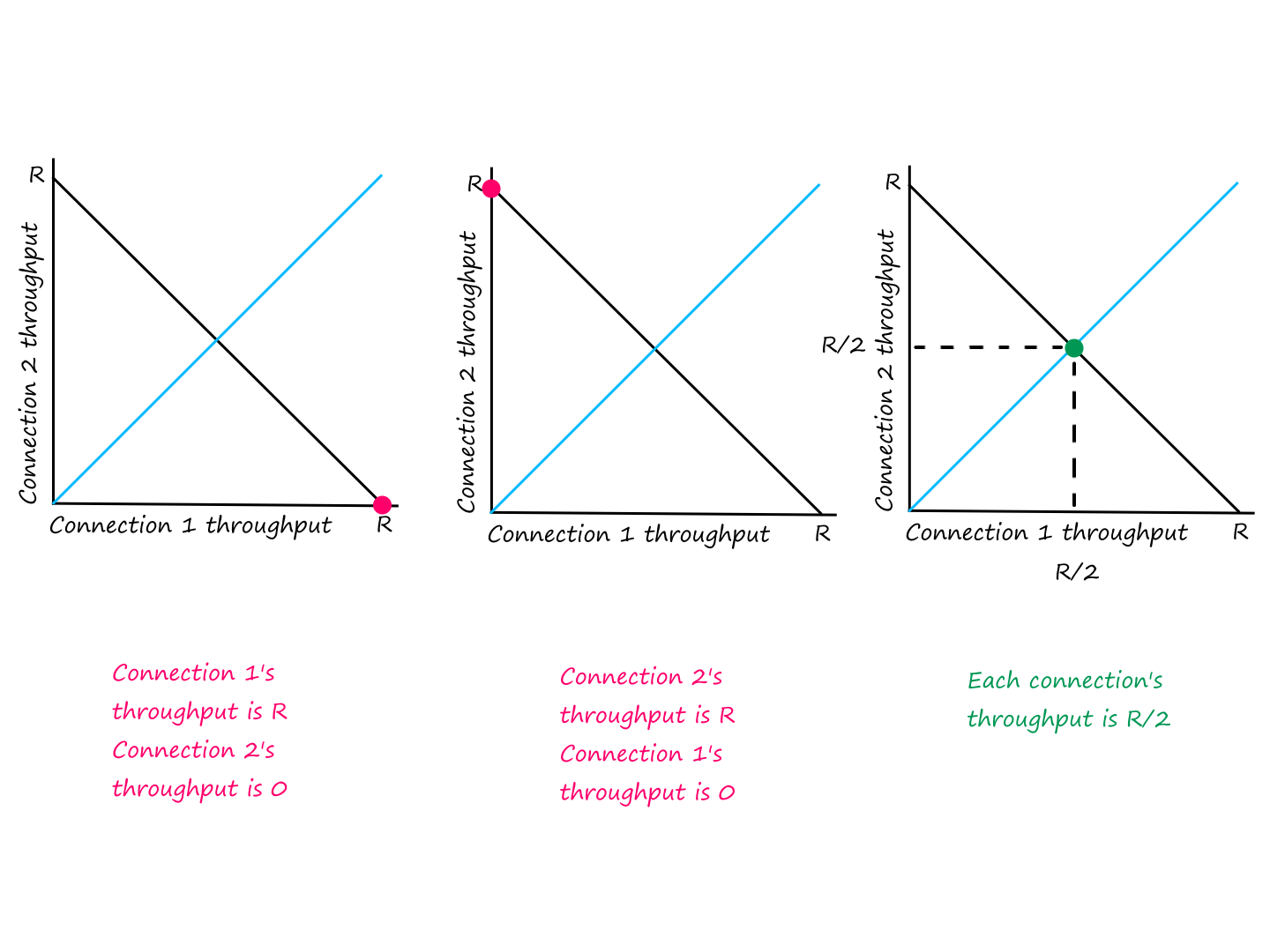

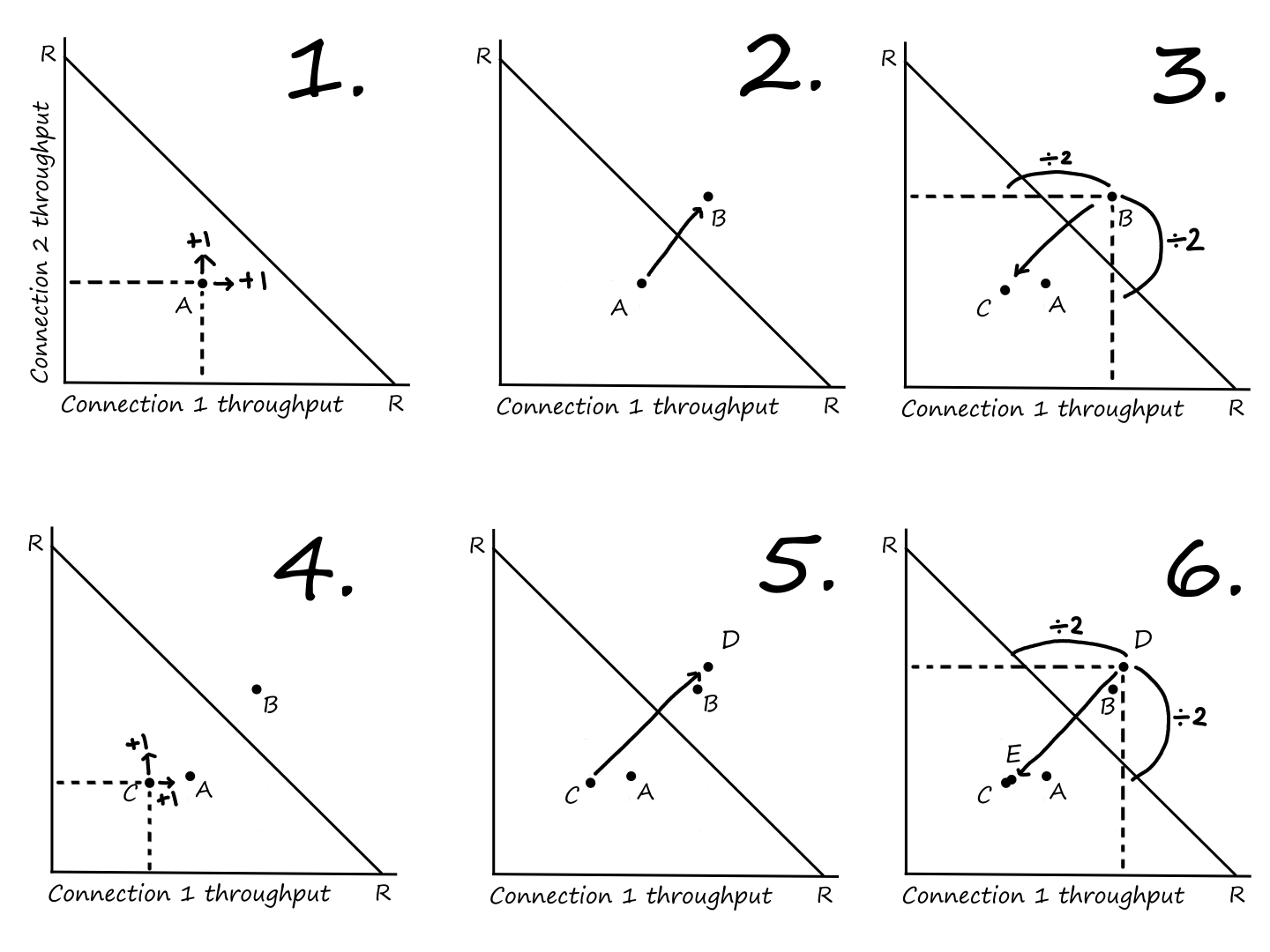

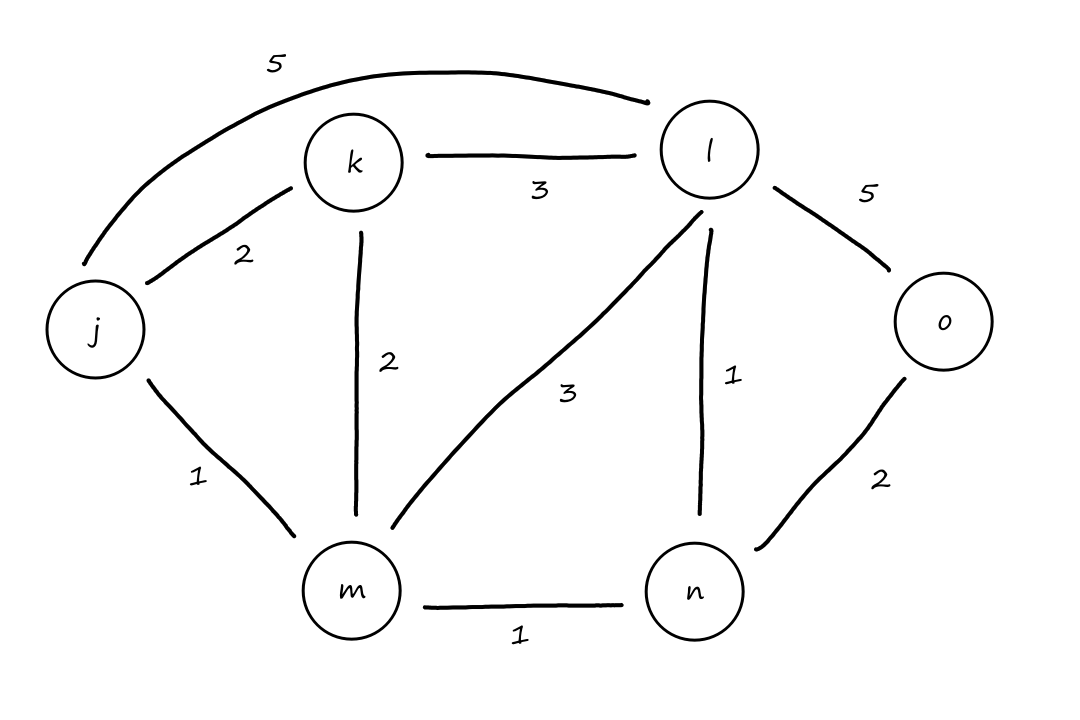

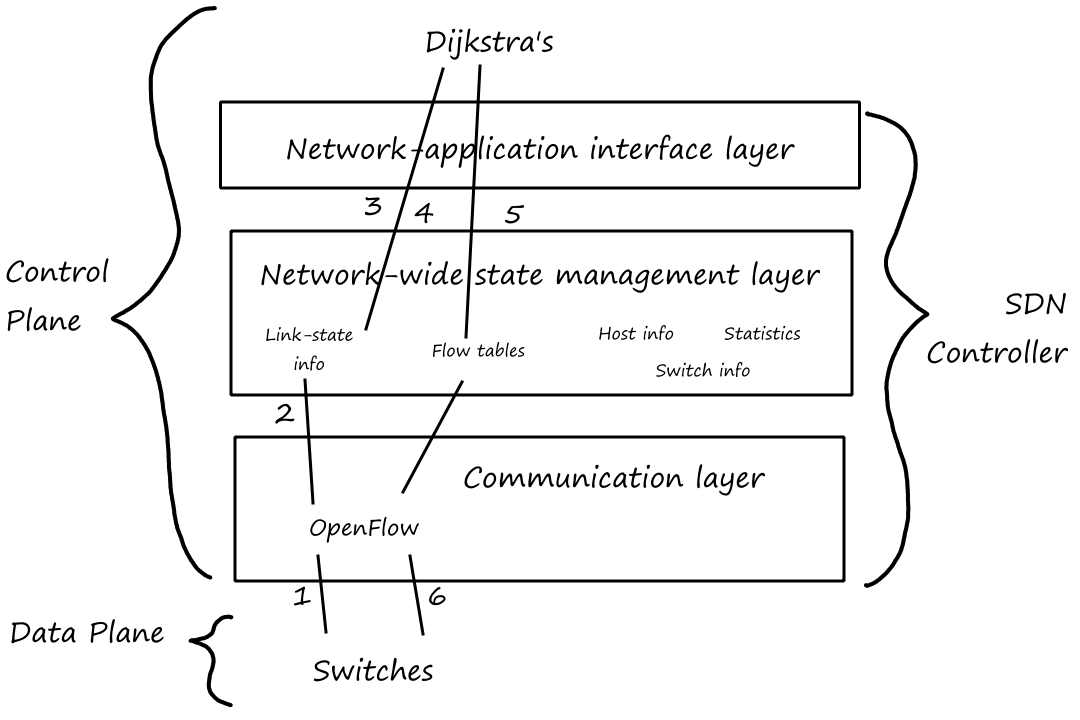

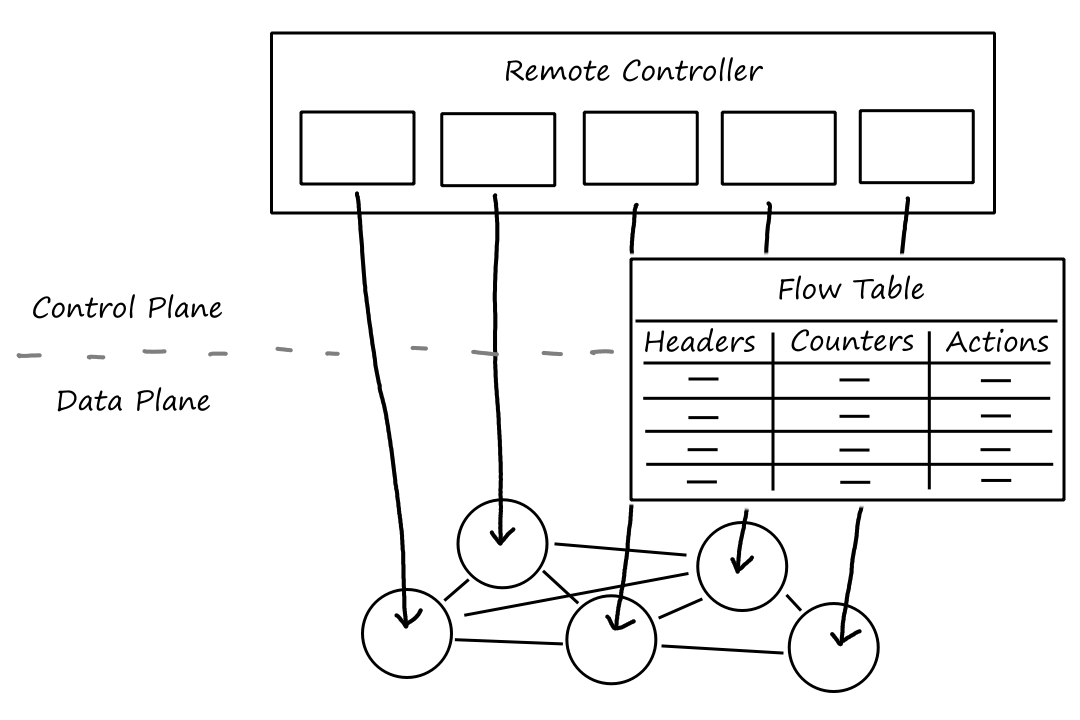

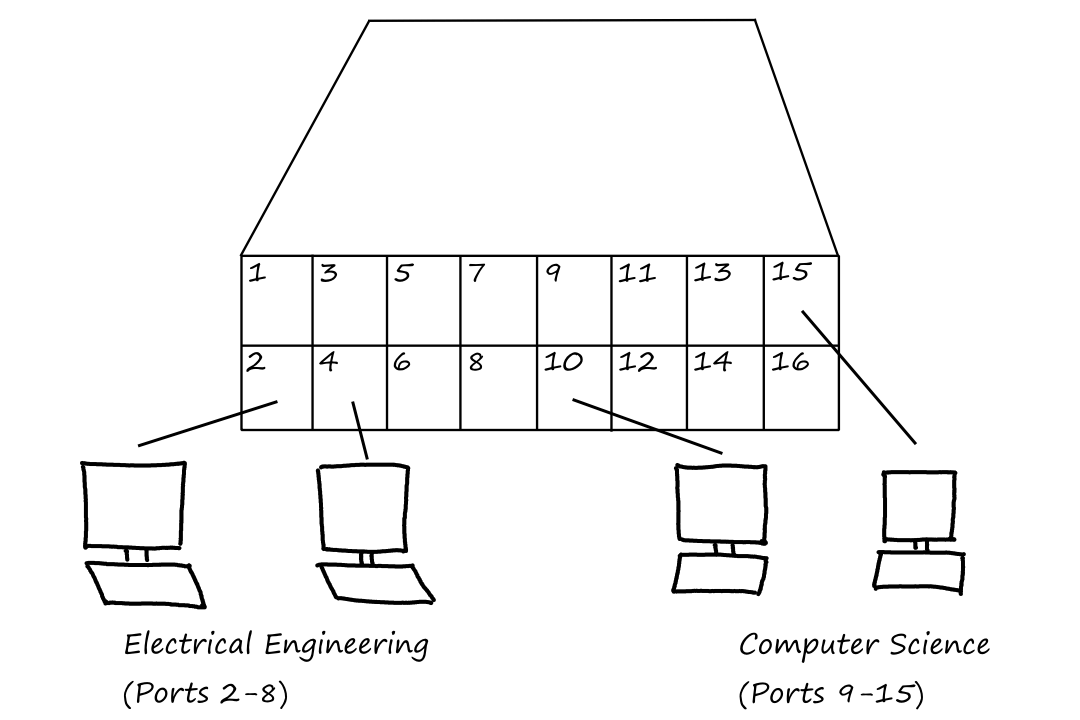

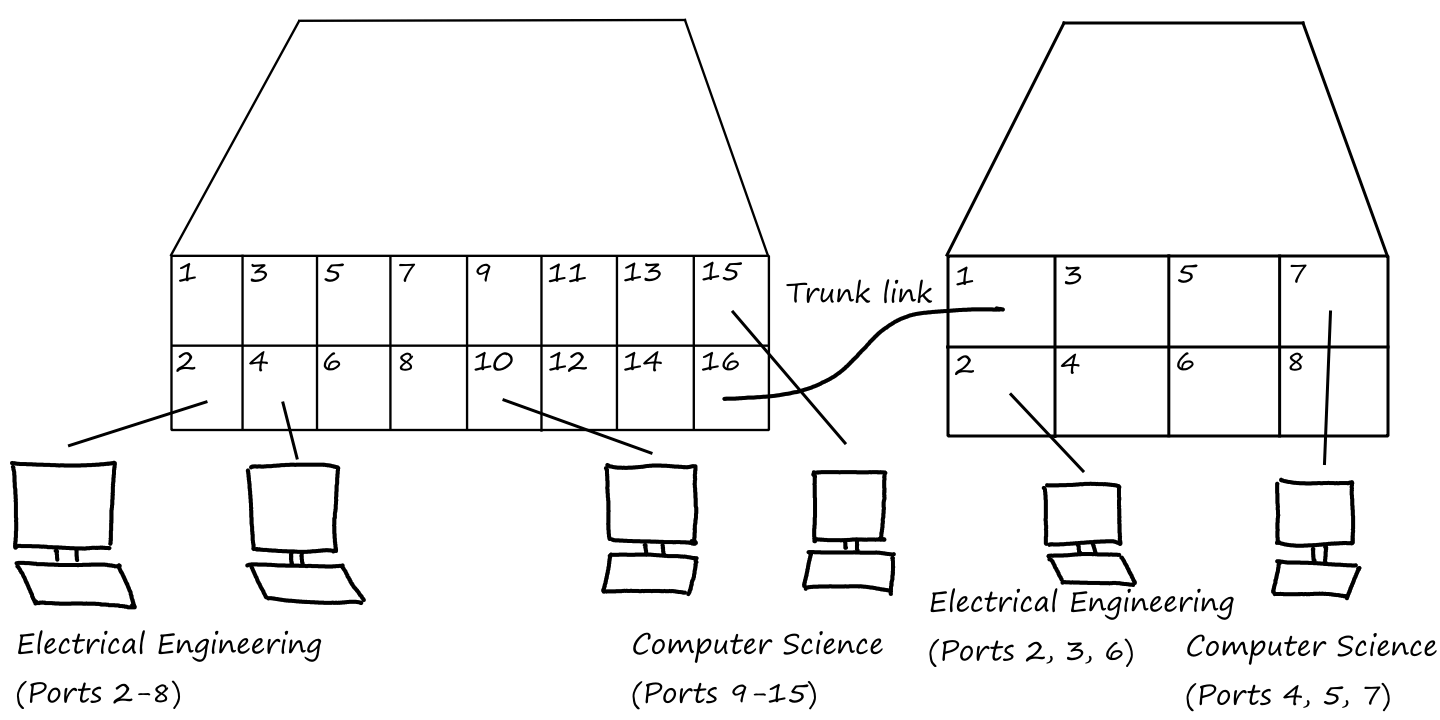

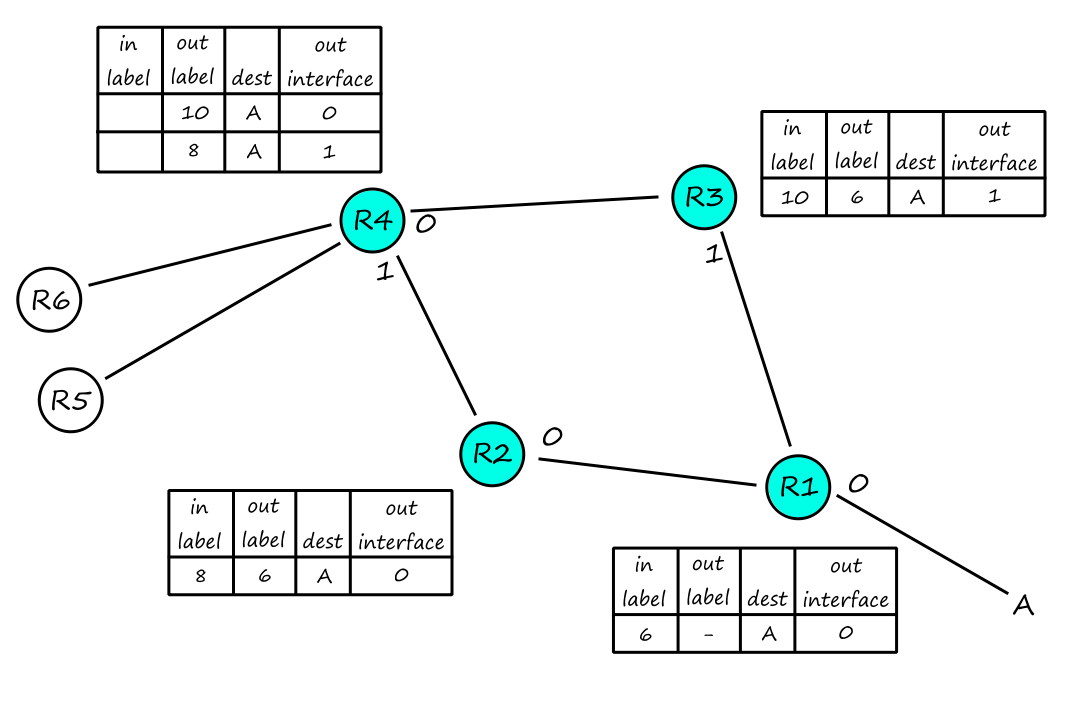

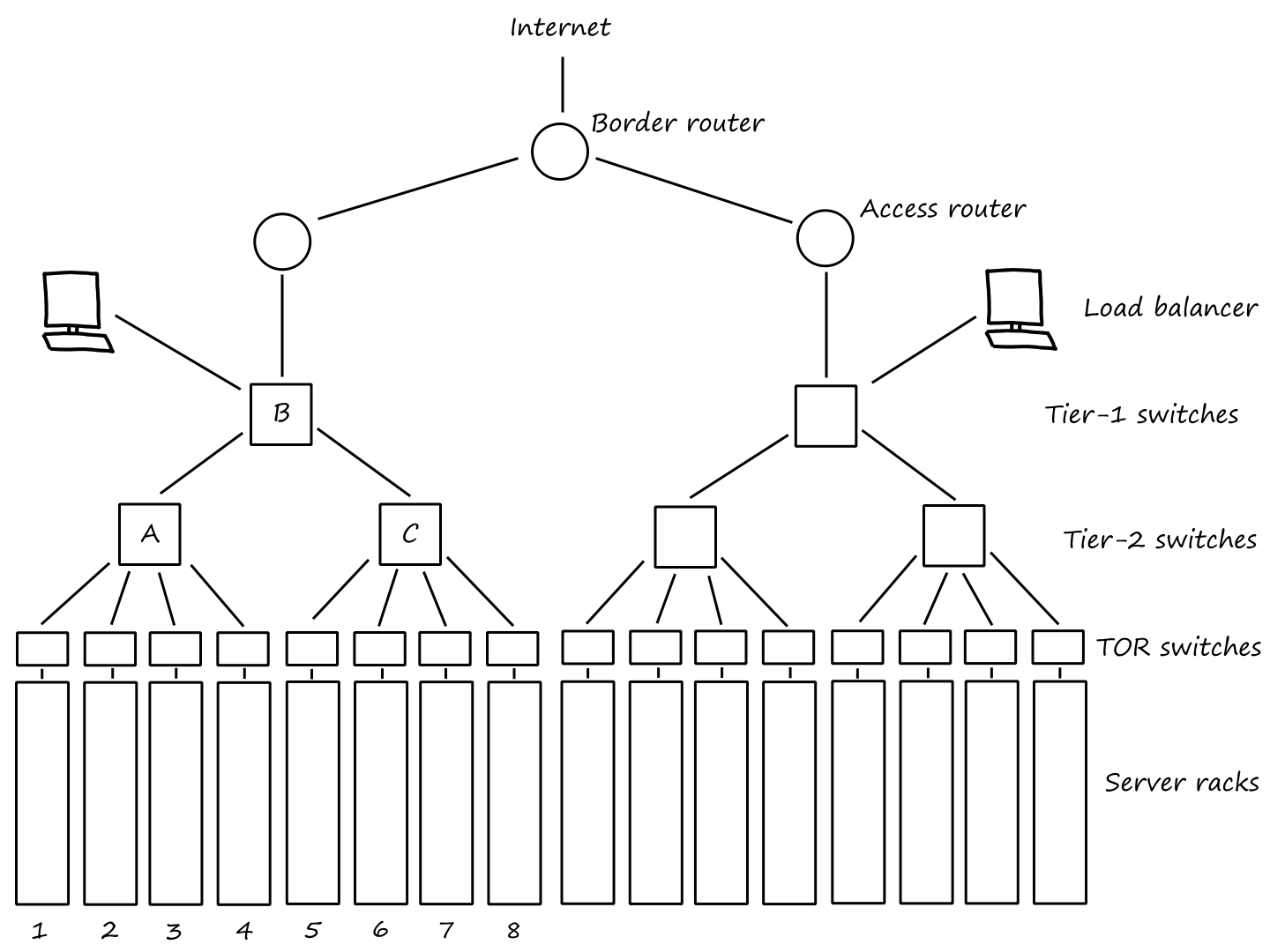

Server: dns-cac-lb-01.rr.comAddress: 3002:1997:f01:1::1Non-authoritative answer:google.com nameserver = ns2.google.comgoogle.com nameserver = ns1.google.comns1.google.com internet address = 216.239.32.10ns2.google.com internet address = 216.239.34.10