Artificial Intelligence

Shortcut to this page: ntrllog.netlify.app/ai

Info provided by Artificial Intelligence: A Modern Approach and Professor Francisco Guzman (CSULA)

What is AI?

Well, it kinda depends on what we think makes a machine "intelligent". Is it the ability for the machine to act like a human? Or is it the ability for the machine to always do the mathematically-optimal thing? And is intelligence a way of thinking or a way of behaving? These are the four main dimensions that researchers are using to think about AI.

Acting humanly: The Turing test approach

Let's say we ask a question to a computer and some people and get back their responses. If we can't tell whether the responses came from a person or from a computer, then the computer passes the Turing test.

In order to pass the Turing test, a computer would need:

- natural language processing: communicate in a human language

- knowledge representation: store what it knows or hears

- automated reasoning: answer questions and draw new conclusions

- machine learning: adapt to new circumstances and detect and extrapolate patterns

There's also a total Turing test, which tests if a computer can physically behave like a human. To pass the total test, a computer would also need:

- computer vision and speech recognition

- robotics: manipulate objects and move around

Thinking humanly: The cognitive modeling approach

While the Turing test is just focused on the computer's results, some people are more interested in the computer's process. When performing a task, does a computer go through the same thought process as a human would?

If the input-output behavior matches human behavior, then that could give us some insight into how our brains work.

We can learn about how humans think by:

- introspection: thinking about our thoughts as they go by

- psychological experiments: observing a person in action

- brain imaging: observing the brain in action

Cognitive science combines computer models and experimental techniques from psychology to study the human mind.

Thinking rationally: The "laws of thought" approach

The world of logic is pretty much, "if these statements are true, then this has to be true". If we give a computer some assumptions, can it reason its way to a correct conclusion?

In things like chess and math, all the rules are known, so it's relatively easy to make logical choices and statements. But in the real world, there's a lot of uncertainty. Interestingly, the theory of probability allows for rigorous reasoning with uncertain information. With this, we can use raw perceptual information to understand how the world works and make predictions.

Acting rationally: The rational agent approach

A computer agent is defined to be something that can operate autonomously, perceive their environment, persist over a prolonged time period, adapt to change, and create and pursue goals. A rational agent acts to achieve the best outcome.

There's a subtle distinction between "thinking" rationally and "acting" rationally. Thinking rationally is about making correct inferences. (What's implied here is that there is some (slow) deliberate reasoning process involved.) But it's possible to act rationally without having to make inferences. For example, recoiling from a hot stove, which is more of a reflex action than something you come to a conclusion about.

The rational agent approach is how most researchers approach studying AI. This is because rationality (as opposed to humanity) is easier to approach scientifically, and the rational agent approach kinda encapsulates all the other approaches. For example, in order to act rationally, a computer needs the skills that is required for it to act like a human.

So AI is the science of making machines act rationally, a.k.a. doing the right thing. The "right" thing is specified by us to the agent as an objective that it should complete. This way of thinking about AI is considered the standard model.

Beneficial machines

But the problem with the standard model is that it only works when the objective is fully specified. In the real world, it's hard to specify a goal that is complete and correct. For example, if the primary goal of a self-driving car is safety, then, of course, it's safest to just not leave the house at all, but that's not helpful; there needs to be some tradeoff. Inherently, there is a value alignment problem: the values put into the machine must be aligned with our values. That is, the machine's job shouldn't be to just complete its objective; it should also consider the costs, consequences, and benefits that would affect us.

Agents and Environments

A little more formally, an agent perceives its environment through sensors and acts upon that environment through actuators. Its percept sequence is the complete history of everything it has ever perceived. The agent looks at its percept sequence (and its built-in knowledge) to determine what action to take.

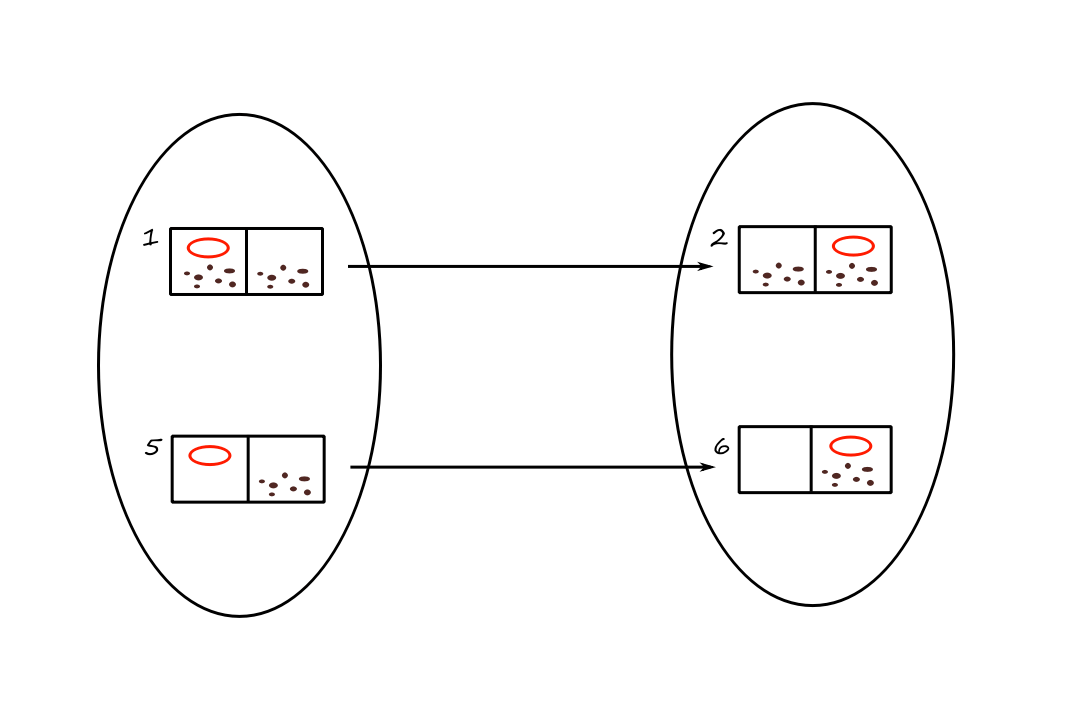

Mathematically, an agent's behavior is defined by an agent function, which maps a percept sequence to an action.

Let's take a look at a robotic vacuum cleaner. Suppose the floor is divided into two squares `A` and `B`, and that the vacuum cleaner is on square `A`. We can create a table that (theoretically) lists all the percept sequences and the actions that (we think) should be taken for each one.

| Percept sequence | Action |

|---|---|

| Square `A` is clean | Move right |

| Square `A` is dirty | Clean |

| Square `B` is clean | Move left |

| Square `B` is dirty | Clean |

| Square `A` is clean, Square `A` is clean | Move right |

| Square `A` is clean, Square `A` is dirty | Clean |

| `vdots` | `vdots` |

| Square `A` is clean, Square `A` is clean, Square `A` is clean | Move right |

| Square `A` is clean, Square `A` is clean, Square `A` is dirty | Clean |

| `vdots` | `vdots` |

In this very simplified scenario, it's pretty easy to see what the right actions should be. But in general, it's a bit harder to know what the correct action should be.

Good Behavior: The Concept of Rationality

So how can we know what the "right" action should be? How do we know that an action is "right"?

Performance Measures

Generally, we assess how good an agent is by the consequences of its actions (consequentialism). If the resulting environment is desirable, then the agent is good. (good bot)

A performance measure is a way to evaluate the agent's behavior in an environment. We have to be a little bit thoughtful about how we come up with a performance measure though. For the vacuum cleaner, it might sound like a good idea to measure performance by how much dirt is cleaned up in an eight-hour shift. The problem with this is that a rational agent would maximize performance by repeatedly cleaning up the dirt and dumping it on the floor. A better performance measure would be to reward the agent for a clean floor. For example, we could give it one point for each clean square, incentivizing it to maximize the number of points (and thus the number of clean squares).

As we can see, it's better to come up with performance measures based on what we want to be achieved in the environment, rather than what we think the agent should do.

Rationality

Formally, for each possible percept sequence, a rational agent should use the evidence provided by the percept sequence and its built-in knowledge to select an action that maximizes its performance measure (definition of a rational agent). We can assess whether an agent is rational by looking at four things:

- the performance measure that defines success

- the agent's prior knowledge of the environment

- the actions that the agent can perform

- the agent's percept sequence to date

For our vacuum cleaner:

- the performance measure awards one point for each clean square

- the "geography" of the environment is known (but not the dirt distribution)

- the actions are moving right, moving left, and cleaning

- the agent's percept sequence consists of what square it's at and whether it's clean

Under these circumstances, we can expect our vacuum cleaner to do at least as well as any other agent.

Suppose that we change the performance measure to deduct one point for each movement. Once all the squares are clean, the vacuum cleaner will just keep moving back and forth (we never defined "stopping" as an action). Then it would be irrational.

Omniscience, learning, and autonomy

One thing that's been sorta glossed over is that the agent doesn't know what will actually happen if it performs an action, i.e., it's not omniscient. So it's more correct to say that a rational agent tries to maximize expected performance.

In order to be able to maximize expected performance, the agent needs to be able to gather information and learn.

Crossing a street when it's empty is rational. It's expected that you'll reach the other side. But if you get hit by a car because you didn't look before crossing... well... then that's not rational.

If an agent only relies on its built-in knowledge, then it lacks autonomy. In order to be autonomous, the agent should build on its existing knowledge by learning. By being able to learn, a rational agent can be expected to succeed in a variety of environments.

The Nature of Environments

A task environment is the problem that we are trying to solve by using a rational agent.

Specifying the task environment

We can specify a task environment by specifying the performance measure, the environment, and the agent's actuators and sensors (PEAS). Yes, these are the four things we listed out when defining how an agent can be rational. The vacuum cleaner example was fairly simple though, so we can look at something more complicated, like an automated taxi.

- performance measure: correct destination, minimize fuel consumption, minimize time/cost, minimize impact on other drivers, maximize passenger safety and comfort, maximize profits

- environment: roads, traffic, obstacles, police, pedestrians, passengers, weather

- actuators: steering, accelerator, brake, signal, horn, display screen, speech

- sensors: cameras, speedometer, GPS, accelerometer, radar, microphone, touchscreen

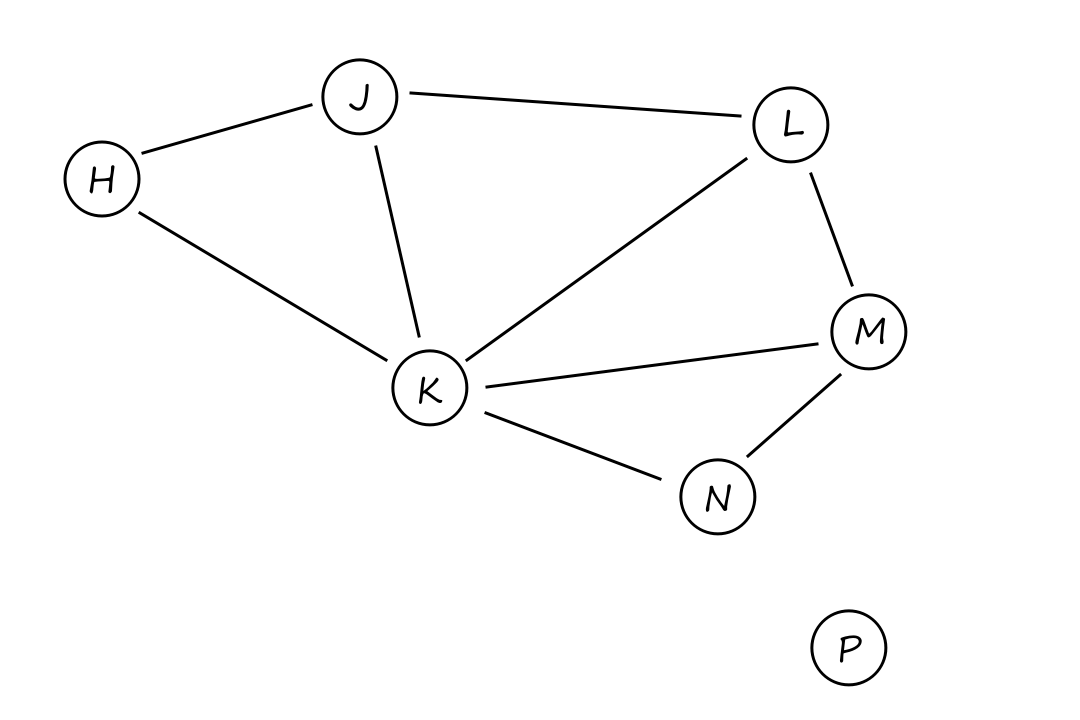

Properties of task environments

A task environment can be fully observable or partially observable. If the agent's sensors give it access to the complete state of the environment, then the task environment is fully observable. Noisy and inaccurate sensors would make it partially observable. Or maybe the capability simply isn't available. For example, a vacuum with a local sensor can't tell whether there is dirt in other squares and an automated taxi can't know what other drivers are thinking. Basically, if the agent has to make a guess, then the environment is partially observable.

An environment can also be unobservable if the agent has no sensors.

A task environment can be single-agent or multiagent. This one's pretty self-explanatory.

Though there is a small subtlety. Does an automated taxi have to view another car as another agent or can it think of it as just another "object"? The answer depends on whether the "object's" performance measure is affected by the automated taxi. If it is, then it must be viewed as an agent.

A multiagent environment can be competitive or cooperative. In a competitive environment, maximizing/improving one agent's performance measure minimizes/worsens another agent's performance measure. In a cooperative environment, all agents' performance measures can be maximized.

A task environment can be deterministic or nondeterministic. If the next state of the environment is completely determined by the current state and the agent's action, then it is deterministic. If something unpredictable can happen, then it is nondeterministic. For example, driving is nondeterministic because traffic cannot be predicted exactly. But if you want to argue that traffic is predictable, then some more reasons that driving is nondeterministic are that the tires may blow out unexpectedly or that the engine may seize up unexpectedly.

A stochastic environment is a nondeterministic environment that deals with probabilities. "There's a `25%` chance of rain" is stochastic, while "There's a chance of rain" is nondeterministic.

A task environment can be episodic or sequential. Classifying images is episodic because classifying the current image doesn't depend on the decisions for past images nor does it affect the decisions of future images. Chess and driving are sequential because the available moves depend on past moves, and the next move affects future moves.

A task environment can be static or dynamic. A static environment doesn't change while the agent is deciding on its next action, whereas a dynamic one does. Driving is dynamic because the other cars are always moving. Chess is static. As time passes, if the environment doesn't change, but the agent's performance score does, then the environment is semidynamic. Timed chess is semidynamic.

A task environment can be discrete or continuous. This refers to the state of the environment, the way time is handled, and the percepts and actions of the agent. Chess has a finite number of distinct states, percepts, and actions, which means it is discrete. Whereas in driving, speed and location range over continuous values.

A task environment can be known or unknown. (Well, strictly speaking, not really.) This is not referring to the environment itself, but to the agent's knowledge about the rules of its environment. In a known environment, the outcomes (or outcome probabilities) for all actions are given.

Known vs unknown is not the same as fully vs partially observable. A known environment can be partially observable, like solitaire, where we know the rules, but we can't see the cards that are turned over. An unknown environment can be fully observable, like a new video game, where the screen shows the entire game state, but we don't know what the buttons do yet until we try them.

The hardest case is partially observable, multiagent, nondeterministic, sequential, dynamic, continuous, and unknown. Driving is all of these, except the environment is known.

The Structure of Agents

Now we get to go over the more technical aspects of how agents work.

Recall that an agent function maps percept sequences to actions. An agent program is code that implements the agent function. This program runs on some sort of computing device with sensors and actuators, referred to as the agent architecture.

agent = architecture + program

In general, the architecture makes the percepts from the sensors available to the program, runs the program, and sends the program's action choices to the actuators.

Agent programs

A simple way to design agent programs is to have them take the current percept as input and return an action. Here is some sample pseudocode for an agent program:

function TABLE-DRIVEN-AGENT(percept)

append percept to the end of percepts

action = LOOKUP(percepts, table)

return action

percepts: a sequence, initially empty

table: a table of actions, indexed by percept sequences, initially fully specified

The table is the same table that we drew out for the vacuum cleaner (in the "Agents and Environments" section). Using a table to list out all the percepts and actions is a way to represent the agent function, but it is typically not practical to build such a table because it must contain every possible percept sequence. Here's why.

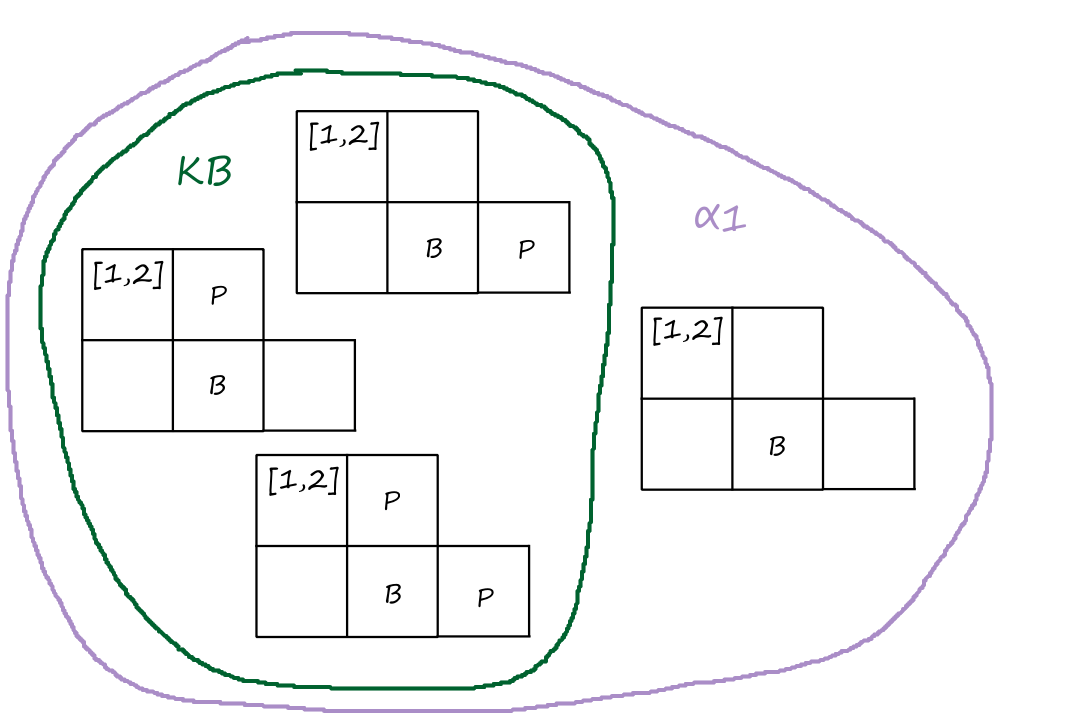

Let `P` be the set of possible percepts and let `T` be the total number of percepts the agent will receive.

If the agent receives `1` percept, then there are `|P|` possible percepts that it could be.

If the agent receives `2` percepts, then there are `|P|^2` possible percepts that they could be.

If the agent receives `3` percepts, then there are `|P|^3` possible percepts that they could be.

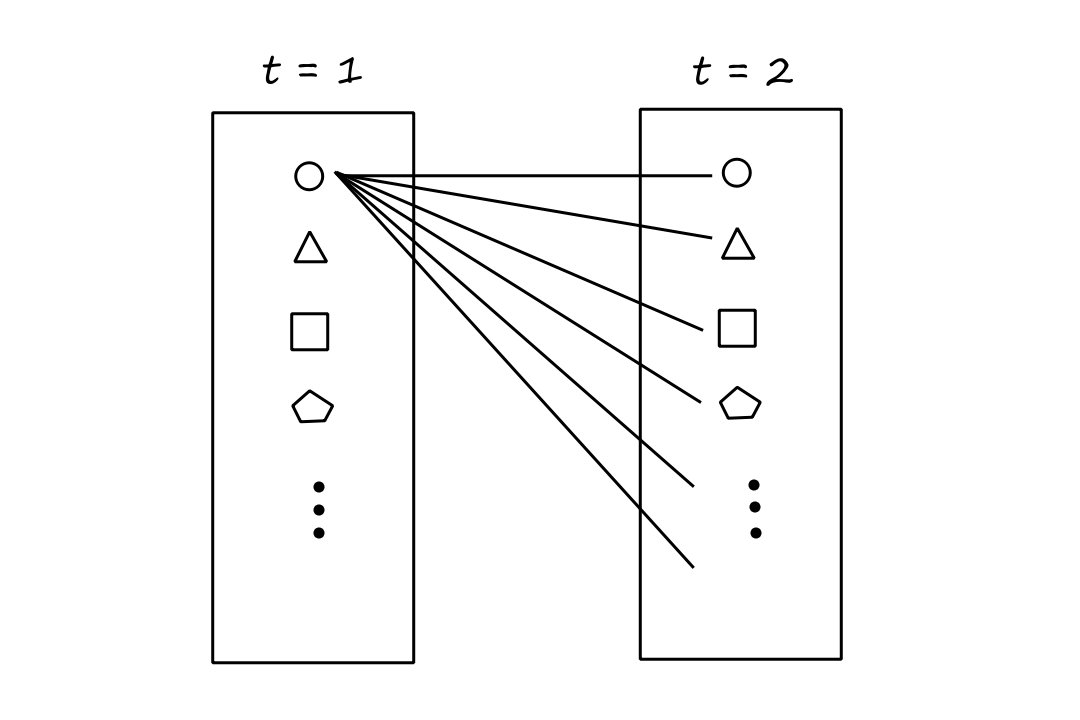

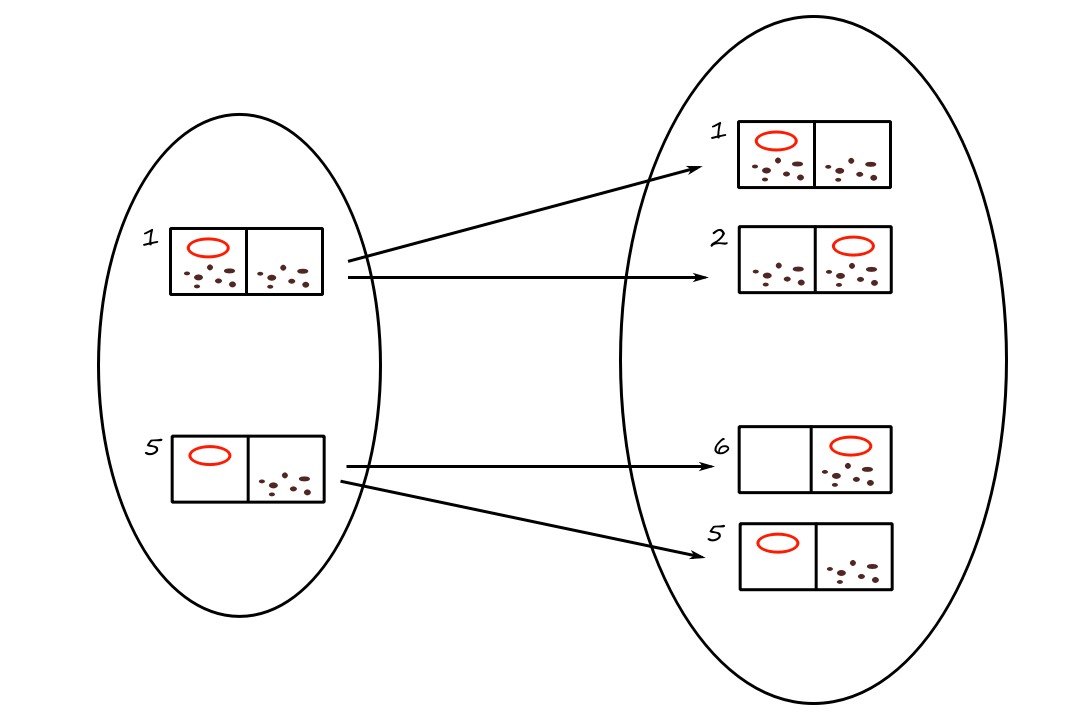

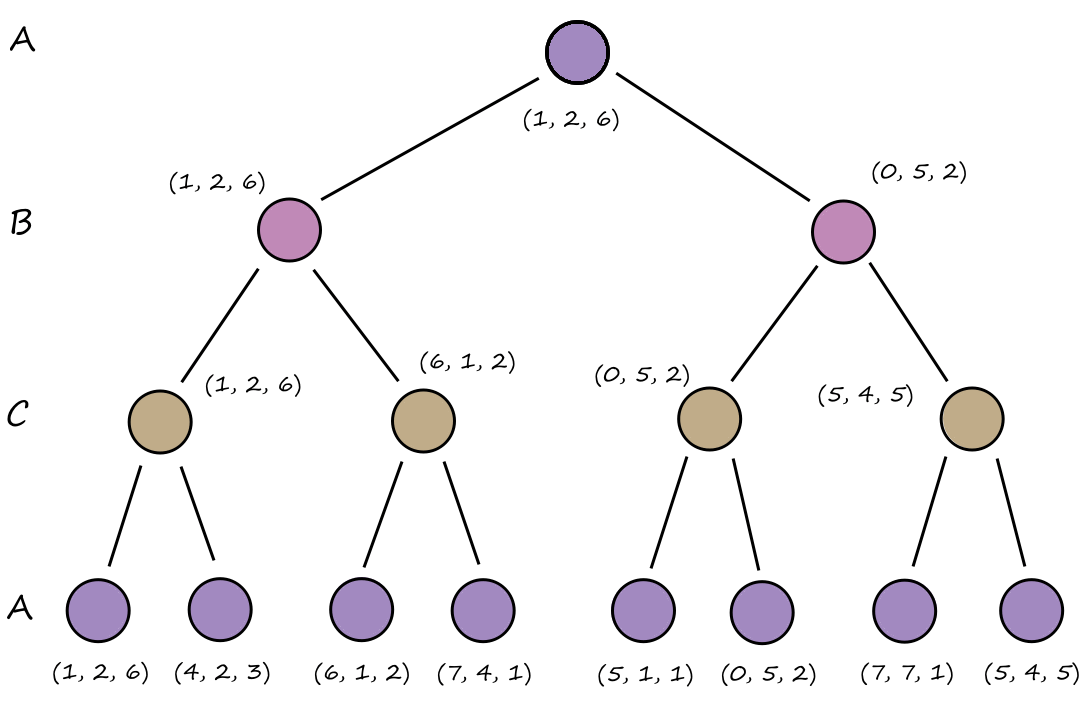

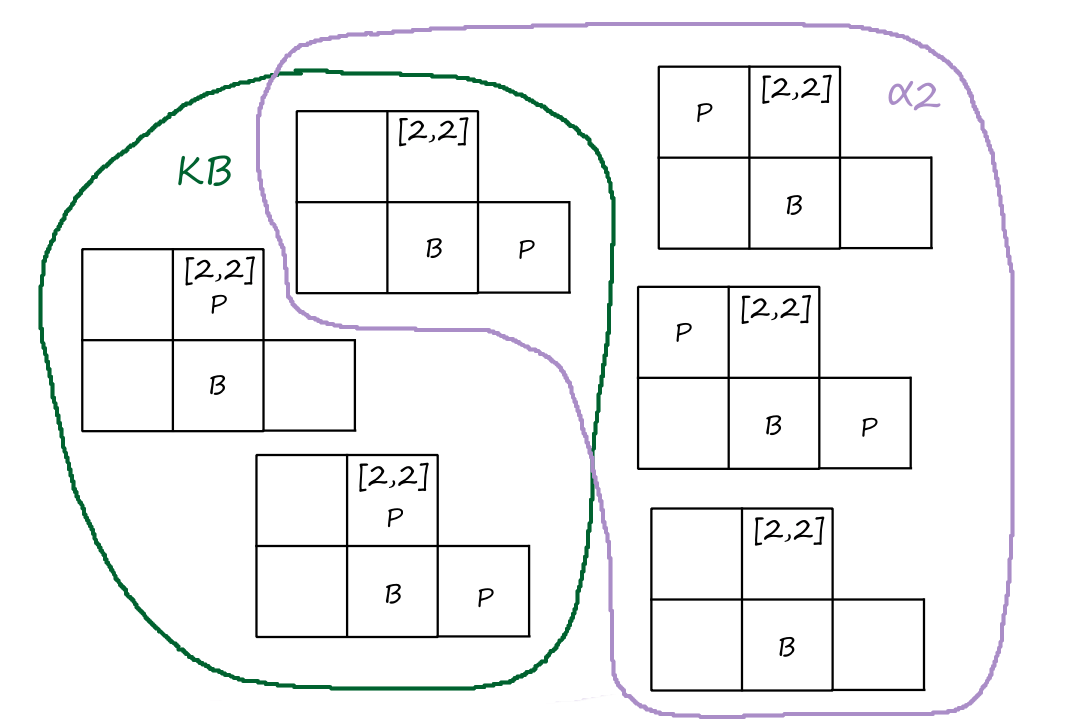

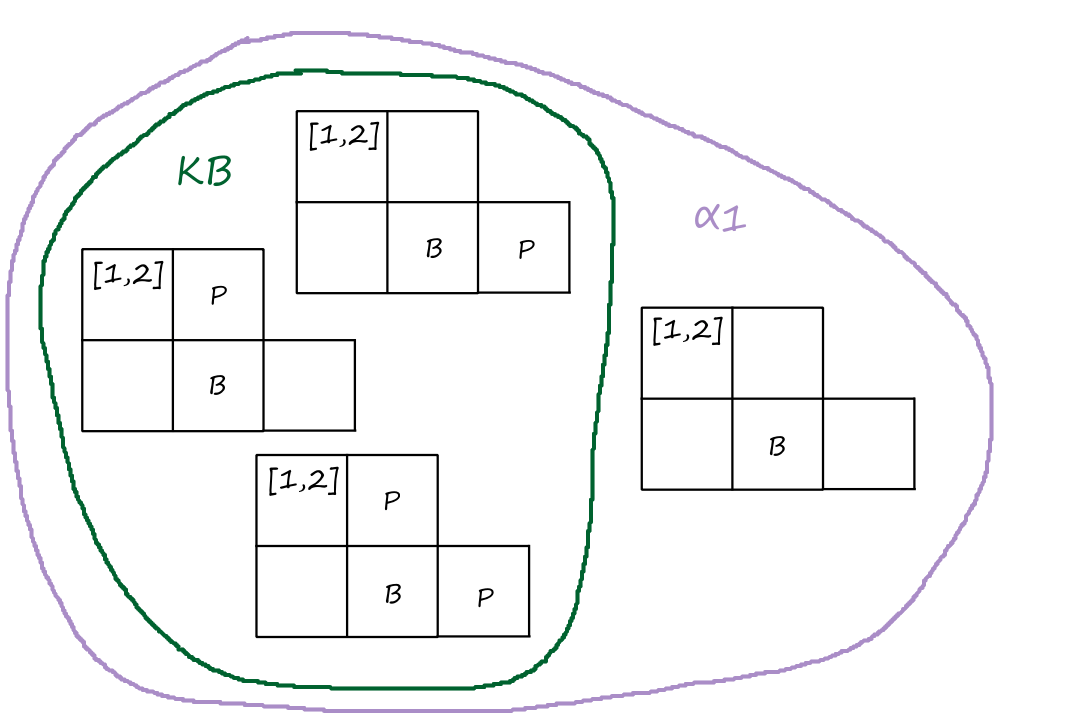

Suppose each shape is a percept. For each percept at `t=1`, there are `|P|` percepts at `t=2` that could be paired with that percept. So `|P|*|P|=|P|^2` total percepts.

Extending that reasoning, for each of the `|P|^2` percept pairs at `t=1,2`, there are `|P|` percepts at `t=3` that could be paired with that percept pair. So `|P|^2*|P|=|P|^3` total percepts.

In general, there are `sum_(t=1)^T |P|^t` possible percept sequences, meaning that the table will have this many entries. An hour's worth of visual input from a single camera can result in at least `10^(600,000,000,000)` percept sequences. Even for chess, there are at least `10^(150)` percept sequences.

So yeah, building such a table is not really possible.

Theoretically though, TABLE-DRIVEN-AGENT does do what we want, which is to implement the agent function. The challenge is to write programs that do this without using a table.

Before the 1970s, people were apparently using huge tables of square roots to do their math. Now there are calculators that run a five-line program implementing Newton's method to calculate square roots.

Can AI do this for general intelligent behavior?

Simple reflex agents

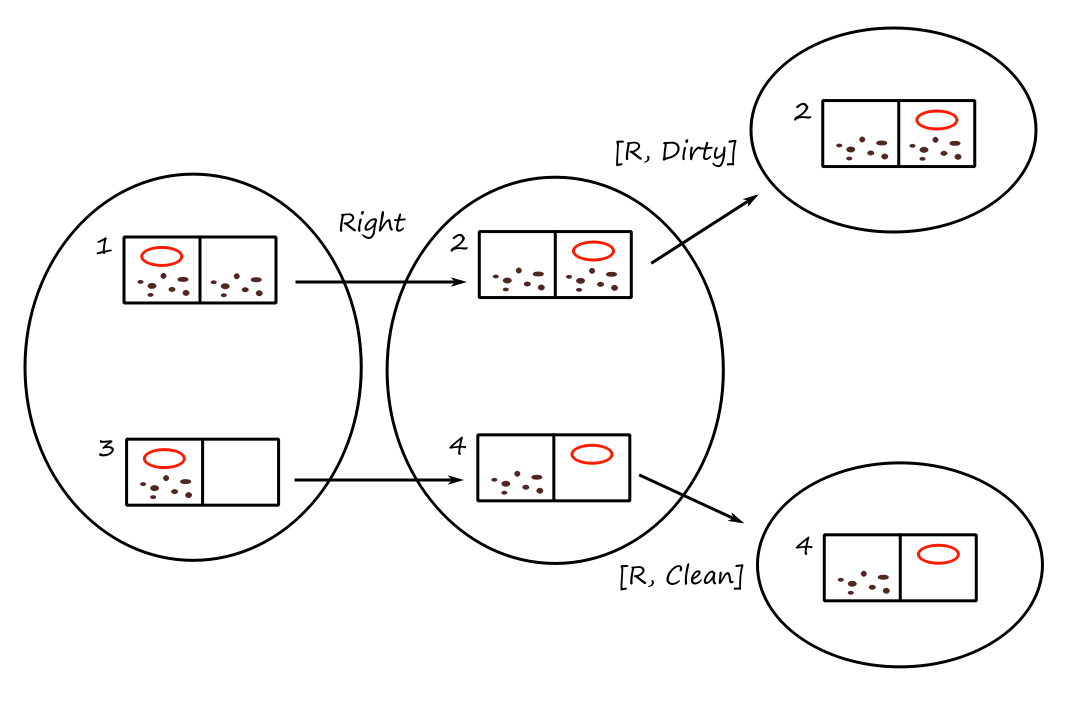

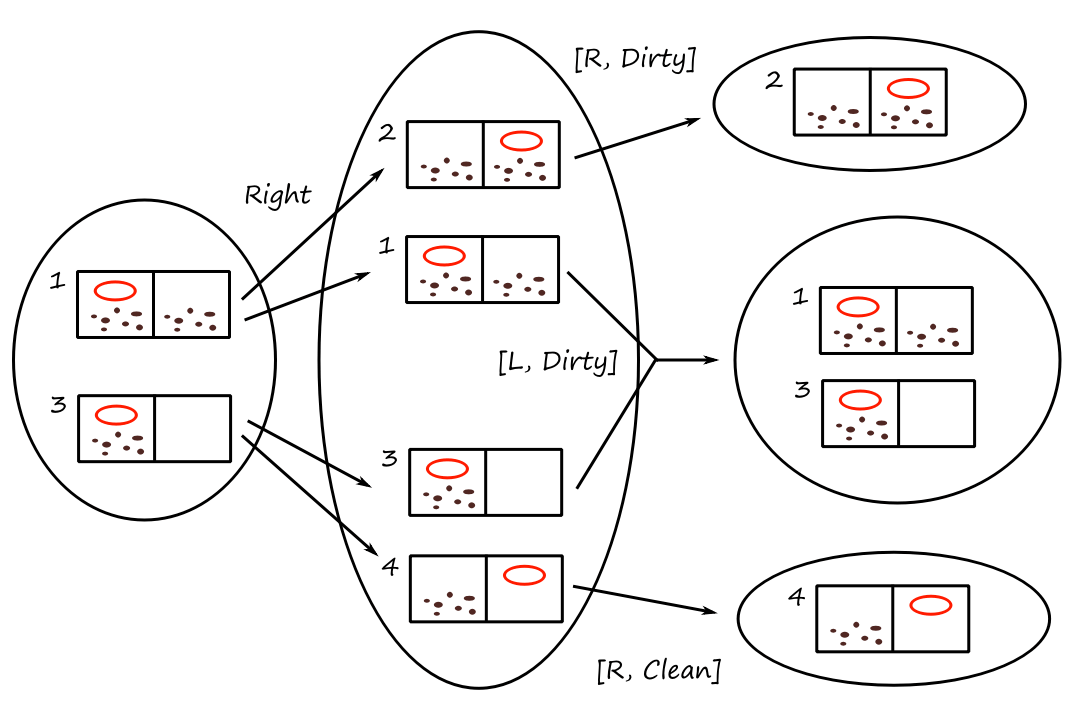

This is the simplest kind of agent. A simple reflex agent selects actions based only on the current percept; it ignores the rest of the percept history. Here's an agent program that implements the agent function for our vacuum:

function REFLEX-VACUUM-AGENT([location, status])

if status == Dirty then return Clean

else if location == A then return Move right

else if location == B then return Move left

Note that since the agent is ignoring the percept history, there are only `4` total possible percepts (not `4^T`) at all times. Square `A` is clean, square `A` is dirty, square `B` is clean, and square `B` is dirty.

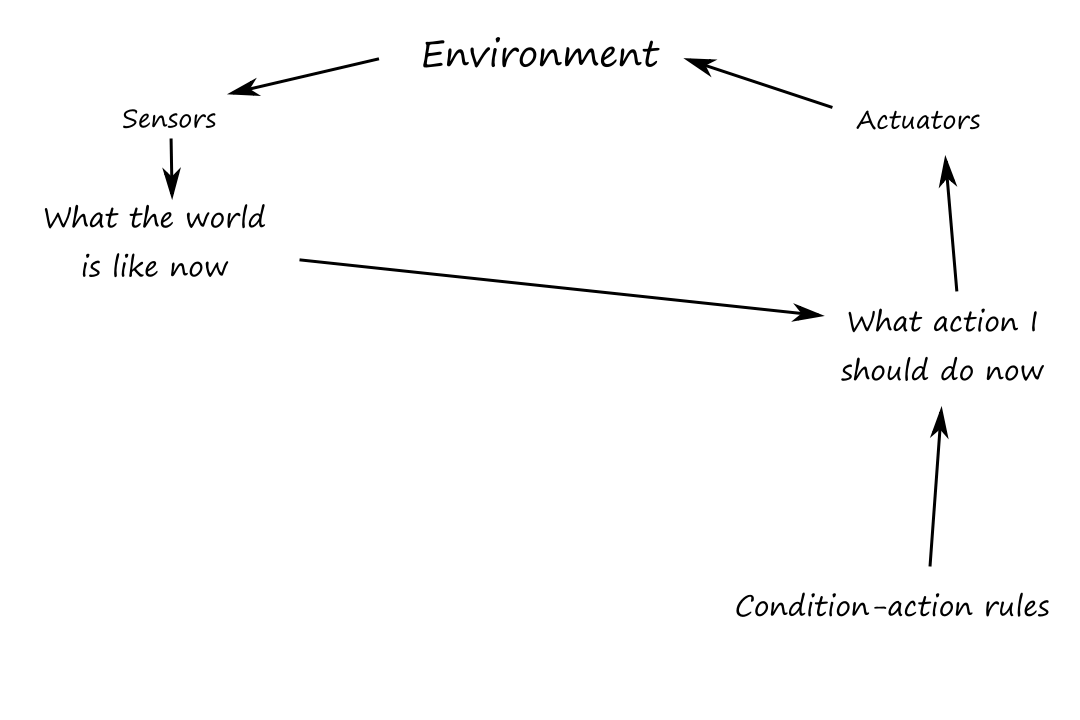

Simple reflex behaviors can be modeled as "if-then" statements. If the car in front is braking, then initiate braking. Because of their structure, they are called condition-action rules (or situation-action rules or if-then rules).

REFLEX-VACUUM-AGENT is specific to the world of just two squares. Here's a more general and flexible way of writing agent programs:

function SIMPLE-REFLEX-AGENT(percept)

state = INTERPRET-INPUT(percept)

rule = RULE-MATCH(state, rules)

action = rule.ACTION

return action

rules: a set of condition-action rules

This approach involves designing a general-purpose interpreter that can convert a percept to a state. Then we provide the rules for specific states.

While simple reflex agents are, well, simple, they only work well in fully observable environments. The state of the environment has to be easily identifiable, otherwise the wrong rule and action will be applied. For example, it may be hard for the agent to tell if a car is braking or if it's just the taillights that are on.

For our vacuum example, suppose the vacuum didn't have a location sensor. Then it won't know which square it's on. If it starts at square `A` and chooses to move left, it will fail forever (and likewise if it starts at square `B` and chooses to move right).

Infinite loops like these often happen in partially observable environments and can be avoided by having the agent randomize its actions. Randomization can be rational in some multiagent environments, but it is usually not rational in single-agent environments.

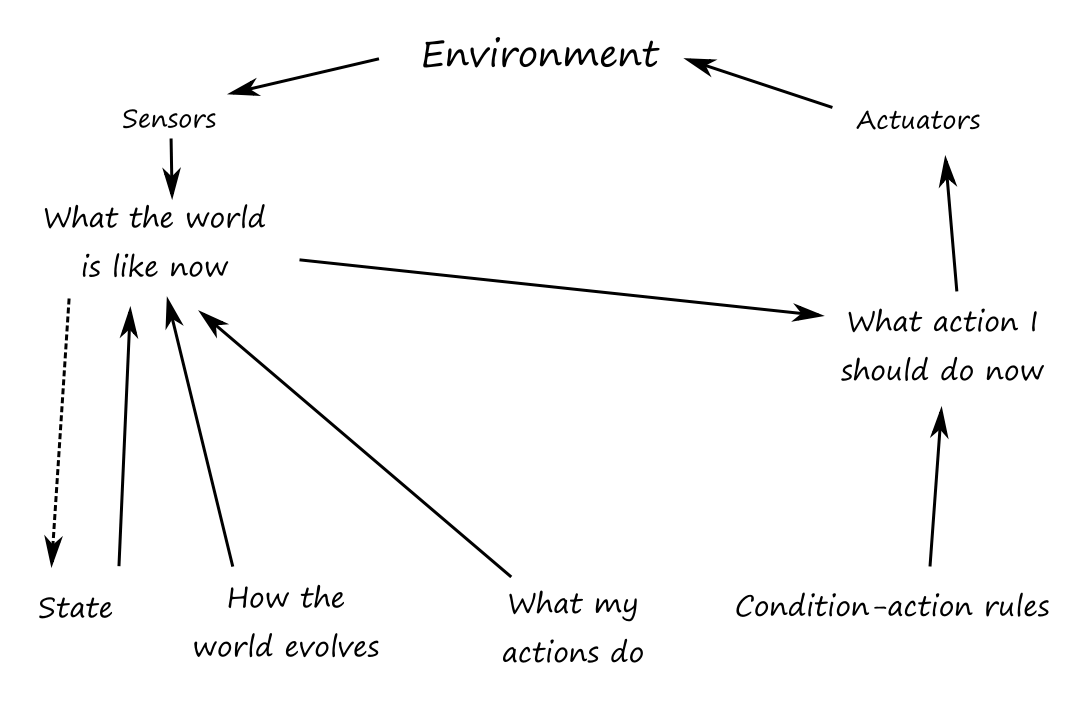

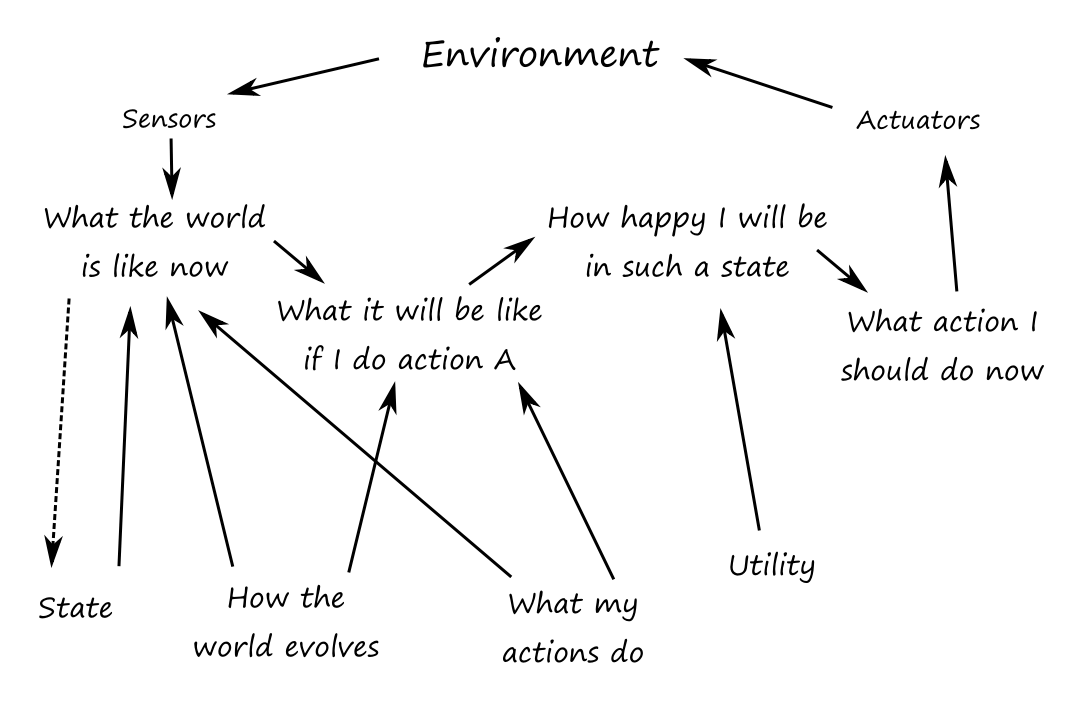

Model-based reflex agents

To deal with partial observability, the agent can keep track of the part of the world it can't see now. In order to do this, the agent needs to maintain some sort of internal state that depends on the percept history. For a car changing lanes, the agent needs to keep track of where the other cars are if it can't see them all at once.

In order to update its internal state, the agent needs to know "how the world works". When the agent turns the steering wheel clockwise, the car turns to the right (effect of agent action). When it's raining, the car's camera can get wet (how the world evolves independent of agent's actions). This is a transition model of the world.

The agent also needs to know how to interpret the information it gets from its percepts to understand the current state of the world. When the car in front of the agent brakes, there will be several red regions in the forward-facing camera image. When the camera gets wet, there will be droplet-shaped objects in the image. This knowledge is called a sensor model.

A model-based agent uses the transition model and sensor model to keep track of the state of the world.

"What the world is like now" is really the agent's best guess.

function MODEL-BASED-REFLEX-AGENT(percept)

state = UPDATE-STATE(state, action, percept, transition_model, sensor_model)

rule = RULE-MATCH(state, rules)

action = rule.ACTION

return action

state: the agent's current conception of the world state

transition_model: a description of how the next state depends on the current state and action

sensor_model: a description of how the current world state is reflected in the agent's percepts

rules: a set of condition-action rules

action: the agent's most recent action, initially none

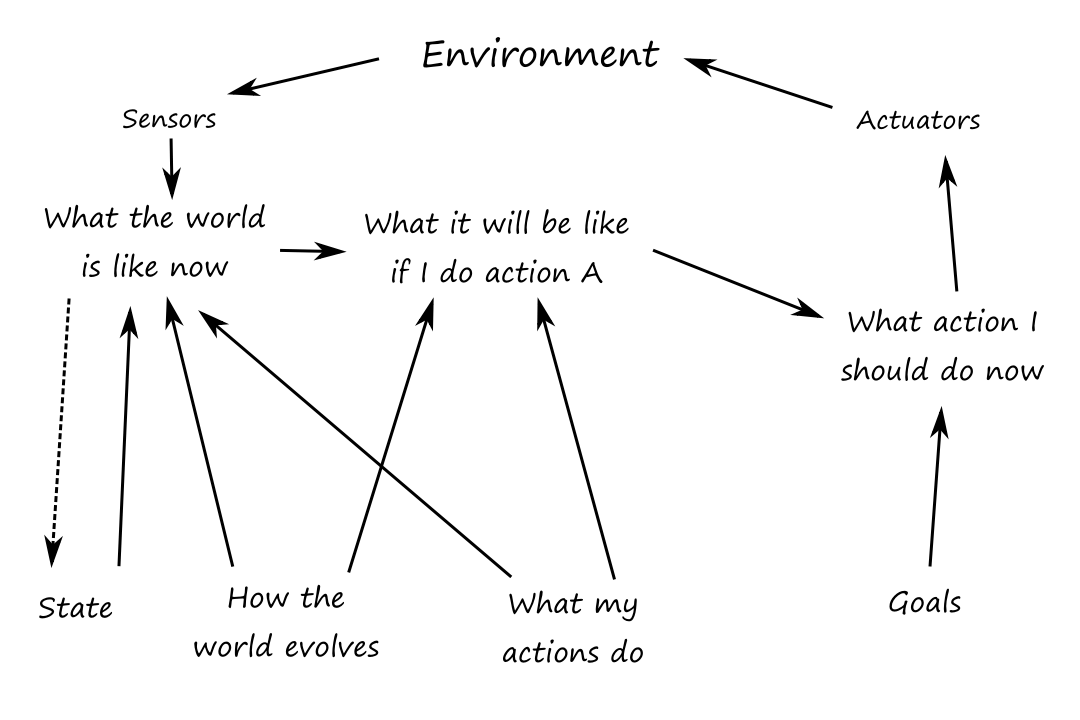

Goal-based agents

Sometimes, having information about the current state of the environment is not enough to decide what to do. For example, knowing that there's a fork in the road is not enough; the agent needs to know whether it should go left or right. The correct decision depends on the goal.

Goal-based decision making is different from following condition-action rules because it involves thinking about the future. A reflex agent brakes when it sees brake lights because that's specified in the rules. But a goal-based agent brakes when it sees brake lights because that's how it thinks it will avoid hitting other cars.

Goal-based agents are also more flexible than reflex agents. If we want a goal-based agent to drive to a different destination, then we just have to update the goal. But if we want a reflex agent to go to a different destination, we have to update all the rules so that they go to the new destination.

Utility-based agents

While achieving the goal is what we want the agent to do, there are many different actions that can be taken to get there. And those different actions can affect how "happy" or "unhappy" the agent will be. This level of "happiness" is referred to as utility.

For example, a quicker/safer/more reliable/cheaper route makes the agent "happier" because it optimizes its performance measure.

A utility function maps environment states to a desirability score. If there are conflicting goals (e.g., speed and safety), the utility function specifies the appropriate tradeoff.

An agent won't know exactly how "happy" it will be if it takes an action. So it's more correct to say that a rational utility-based agent chooses the action that maximizes expected utility (as opposed to just utility).

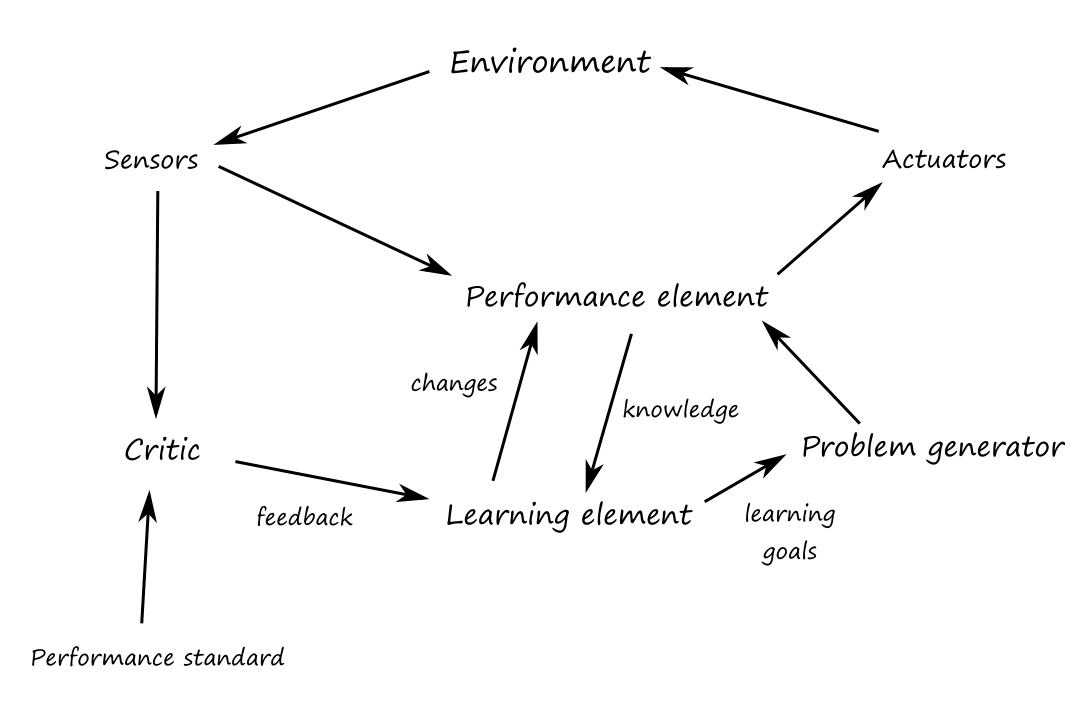

Learning agents

The preferred way to build agents (model-based, goal-based, utility-based, etc.) is to build them as a learning agent and then teach them.

A learning agent can be divided into four conceptual components: the learning element, the performance element, the critic, and the problem generator. The learning element is responsible for making improvements. The performance element is responsible for selecting actions. The critic provides feedback on how the agent is doing and determines how the performance element should be modified to do better.

The problem generator is responsible for suggesting actions that will lead to new and informative experiences. This allows the agent to explore some suboptimal actions in the short run that may become much better actions in the long run.

The performance standard can be seen as a mapping from a percept to a reward or penalty. This allows the critic to learn what is good and bad.

The performance standard is a fixed external thing that is not a part of the agent.

For now, we'll assume that the environments are episodic, single agent, fully observable, deterministic, static, discrete, and known.

Problem-Solving Agents

If the agent doesn't know what the correct action to take should be, it should do some problem solving:

- Goal formulation: figure out what the goal is to limit the number of actions that need to be considered

- Problem formulation: describe the states and actions (come up with an abstract model of the world)

- Search: try different sequences of actions to find one (the solution) that achieves the goal

- Execution: just do it

In a fully observable, deterministic, known environment, the solution to any problem is a fixed sequence of actions. For example, traveling from one place to another.

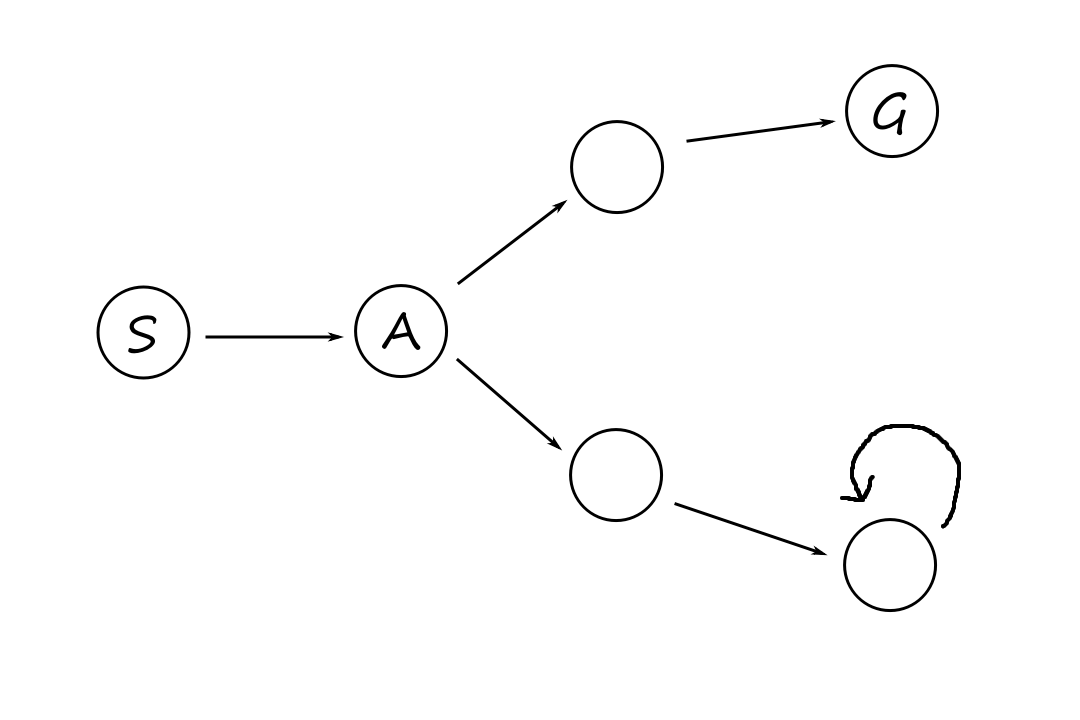

In a partially observable or nondeterministic environment, a solution would be a branching strategy (if/else) that recommends different future actions depending on what percepts the agent sees. For example, the agent might come across a sign that says the road is closed, so it would need a backup plan.

Search problems and solutions

Formally, a search problem can be defined by specifying:

- the state space: the set of possible states that the environment can be in

- the initial state that the agent starts in

- a set of one or more goal states

- the actions available to the agent

- a transition model that describes what each action does

- an action cost function that describes the cost of performing an action

- the cost should reflect the agent's performance measure, such as distance, time, or monetary cost

A sequence of actions forms a path, and a solution is a path from the initial state to a goal state. The cost of a path is the sum of the costs of each action on that path, so an optimal solution is the path with the lowest cost among all solutions. Everything can be represented as a graph where the vertices are the states and the edges are the actions.

Example Problems

Standardized problems

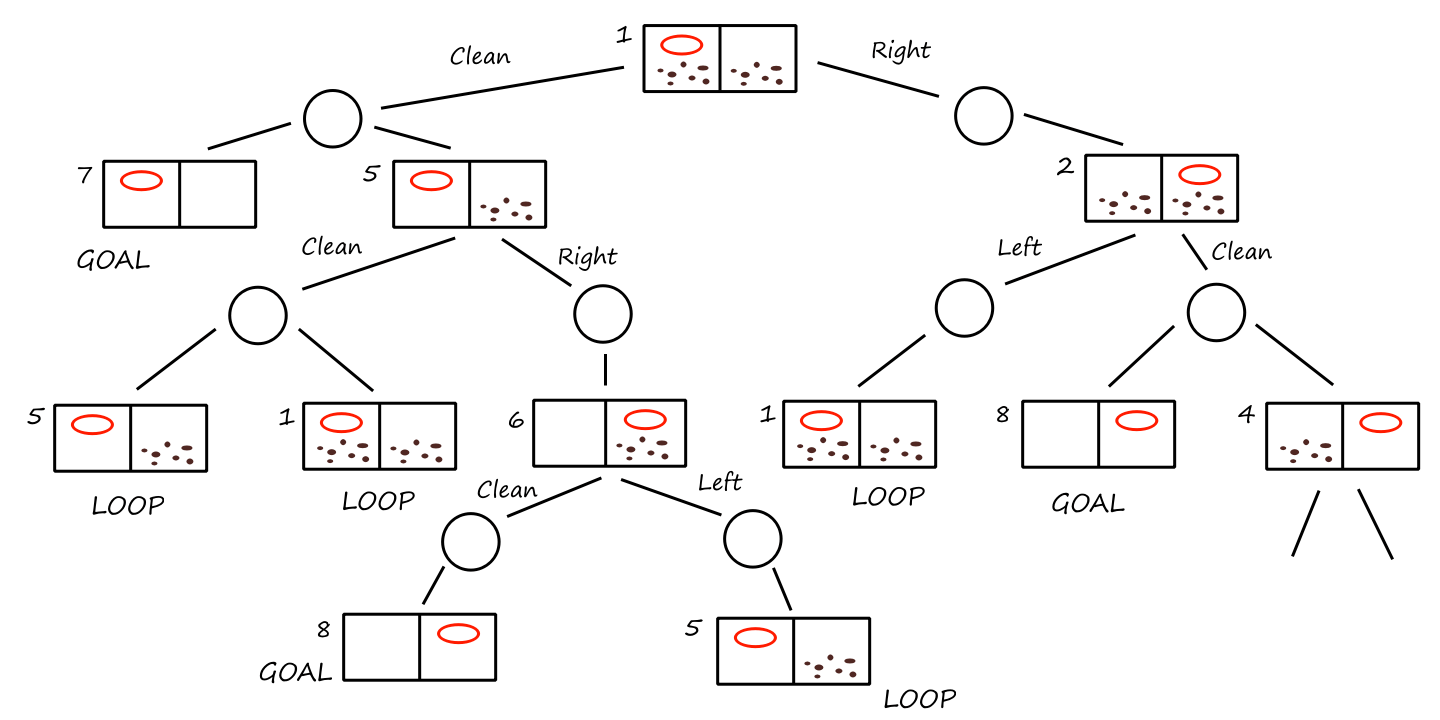

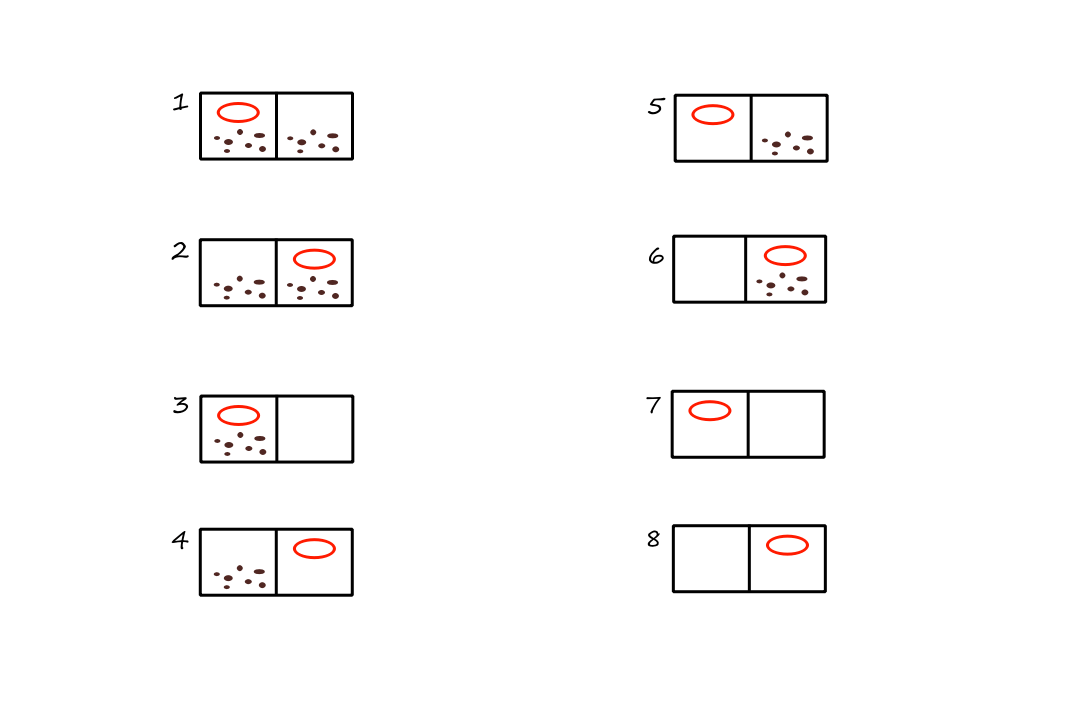

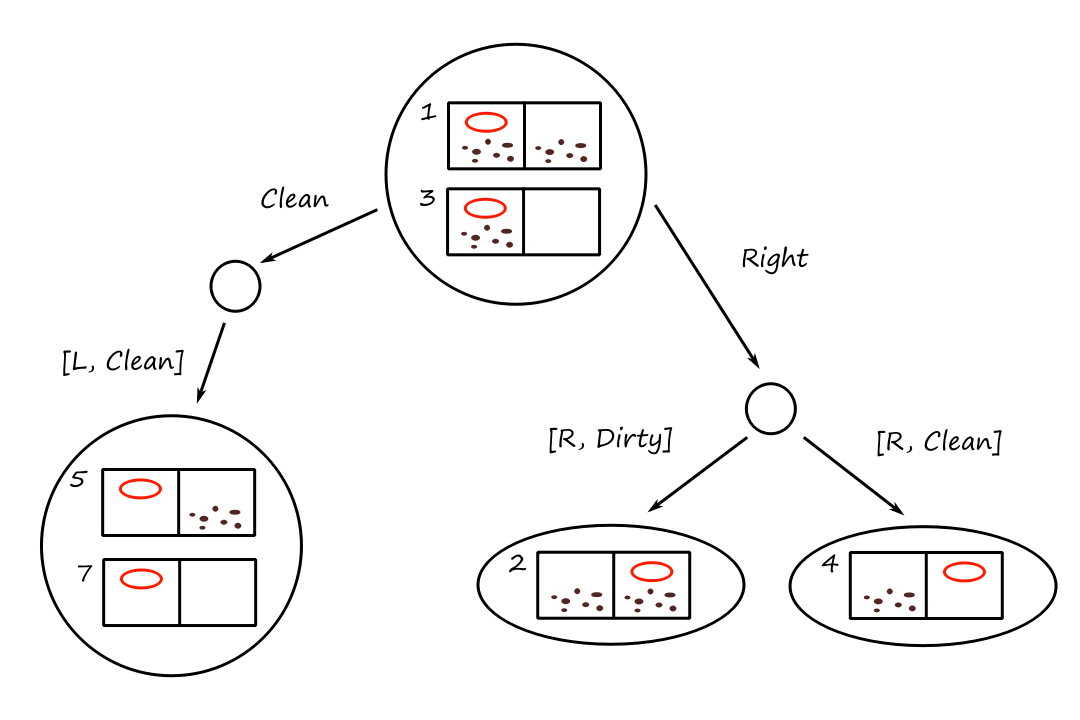

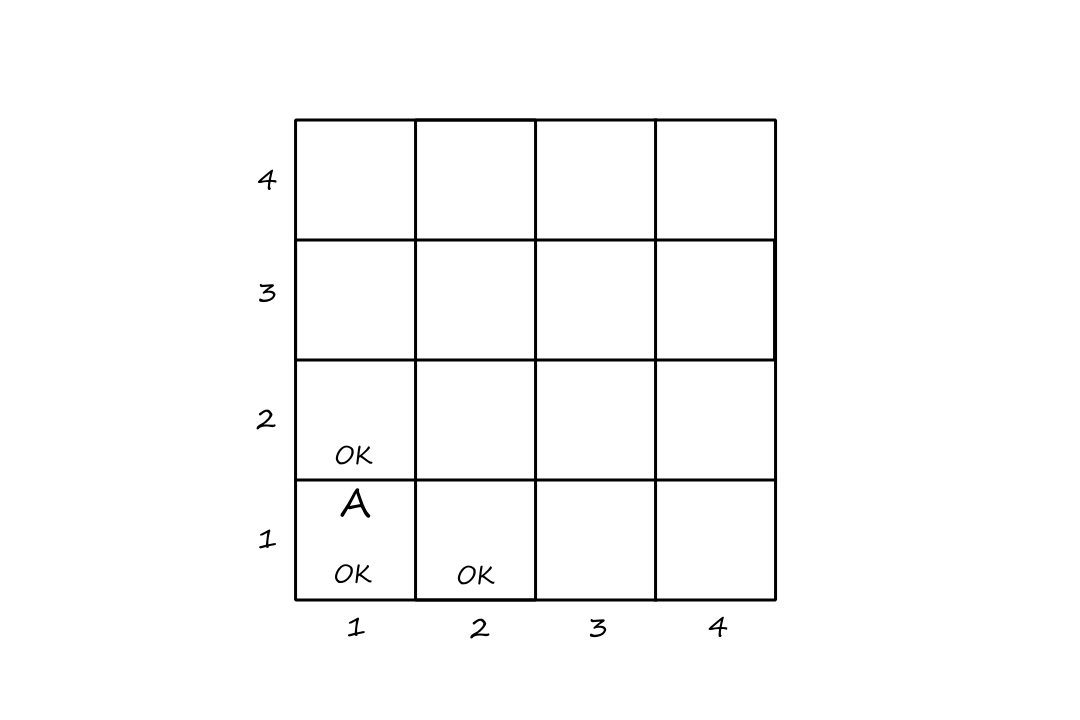

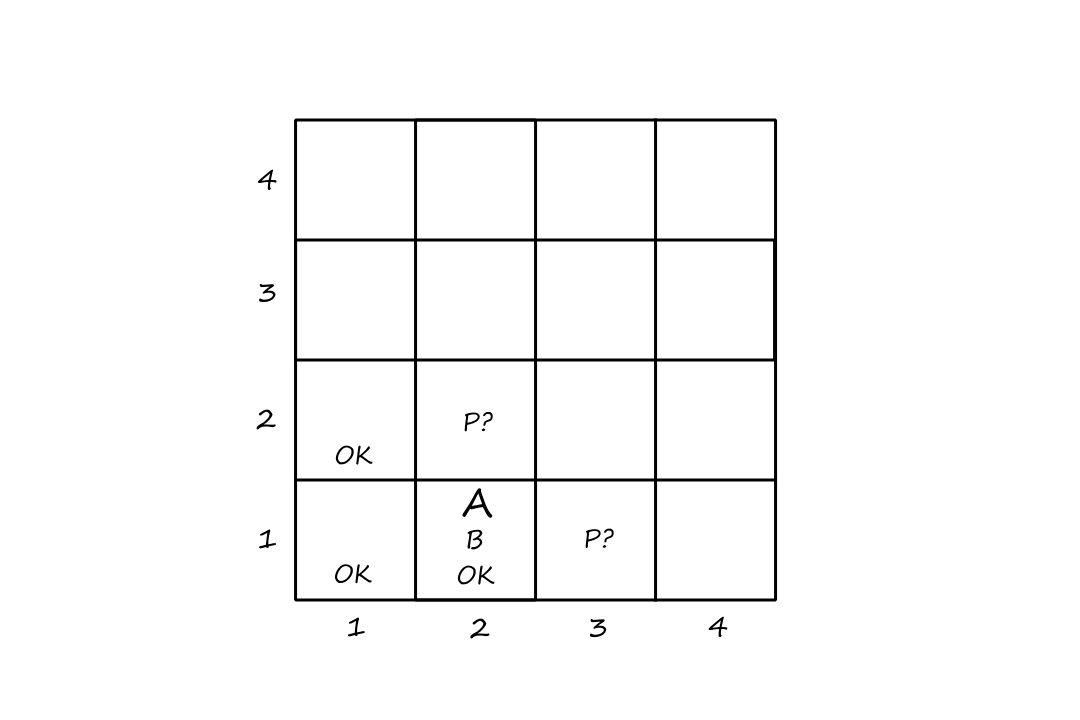

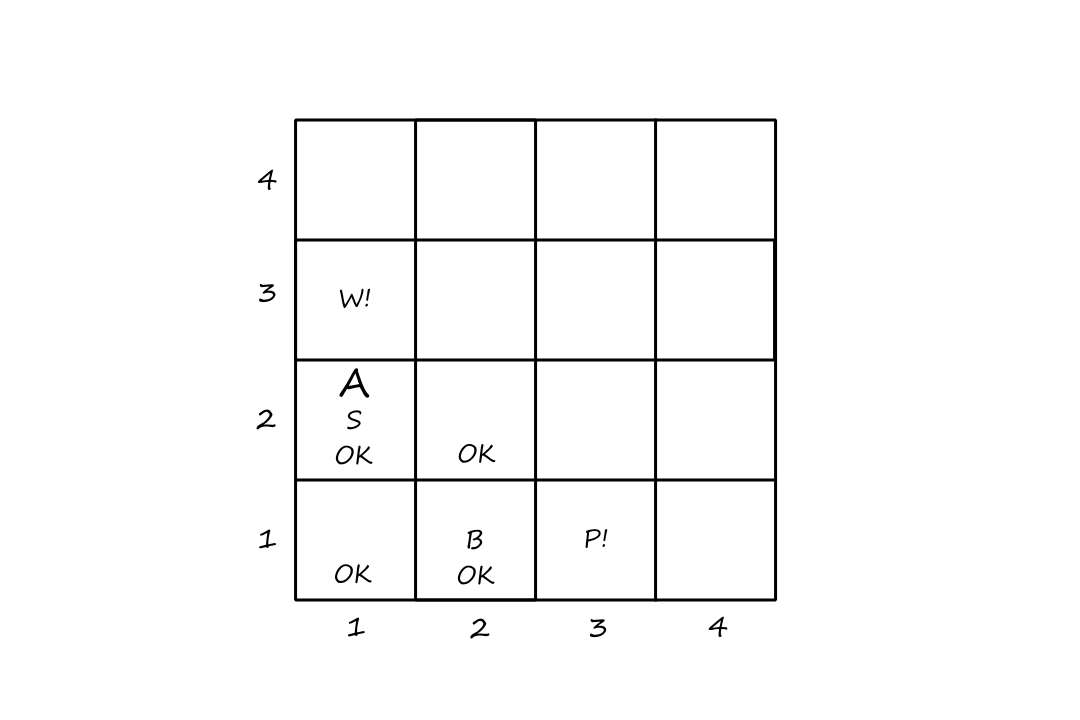

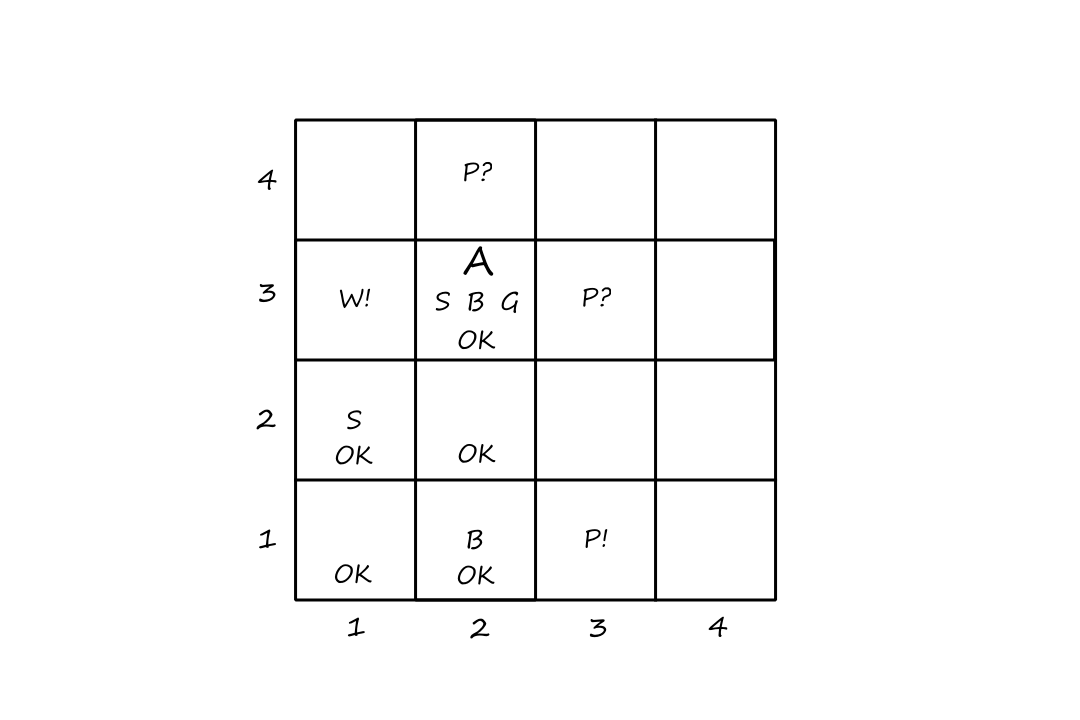

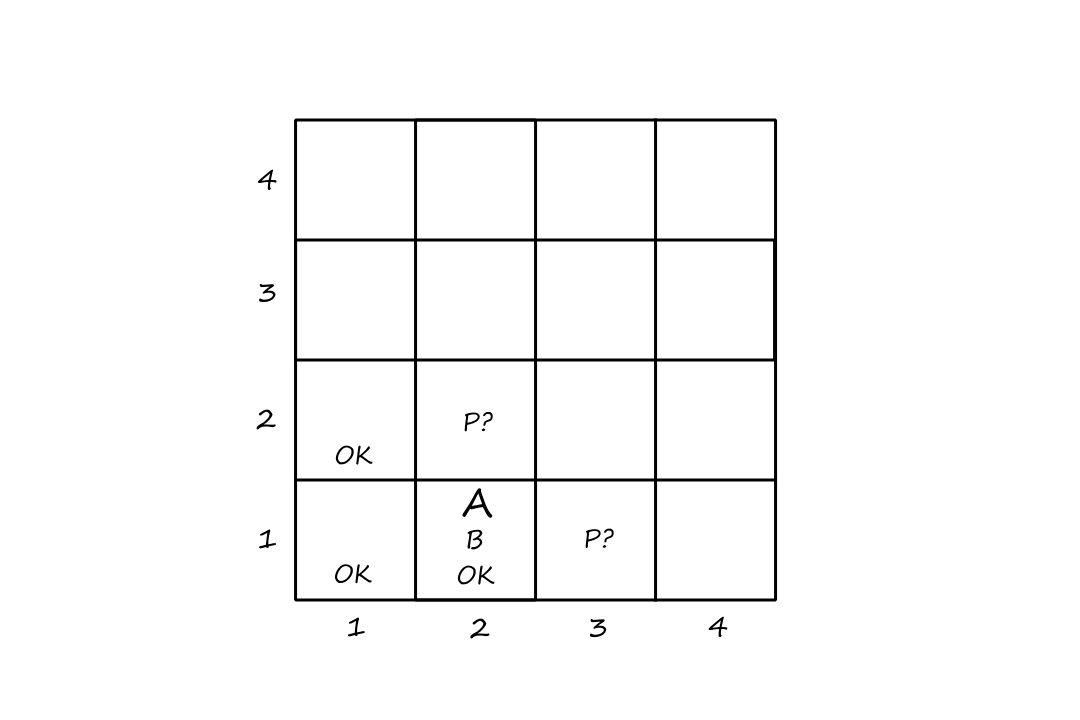

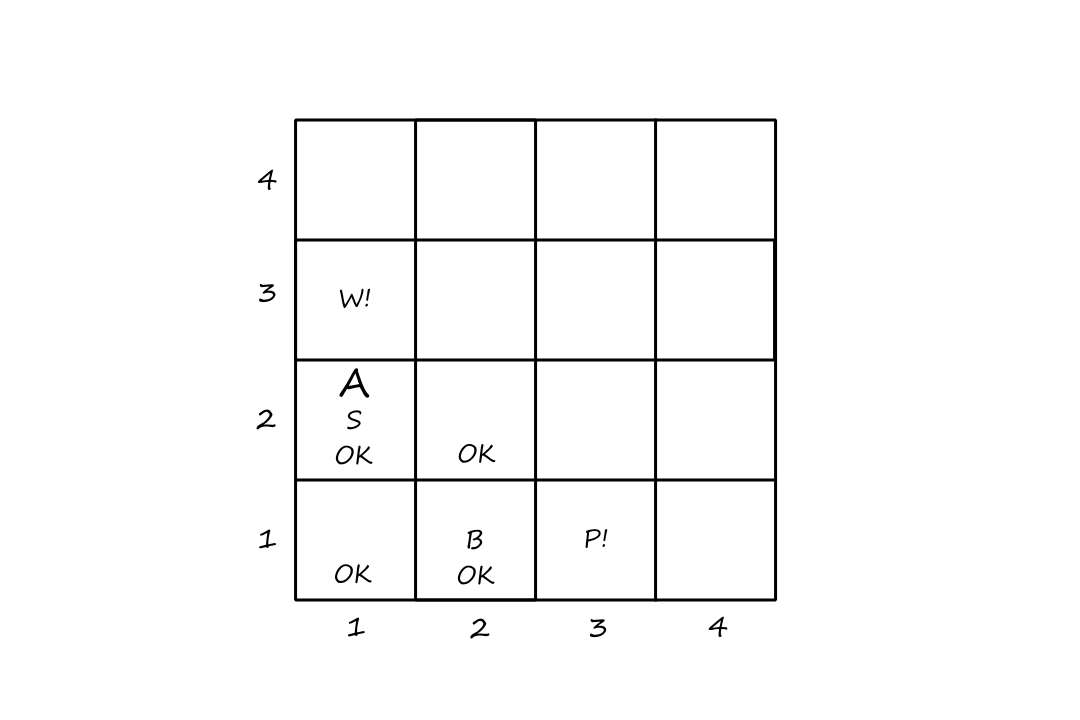

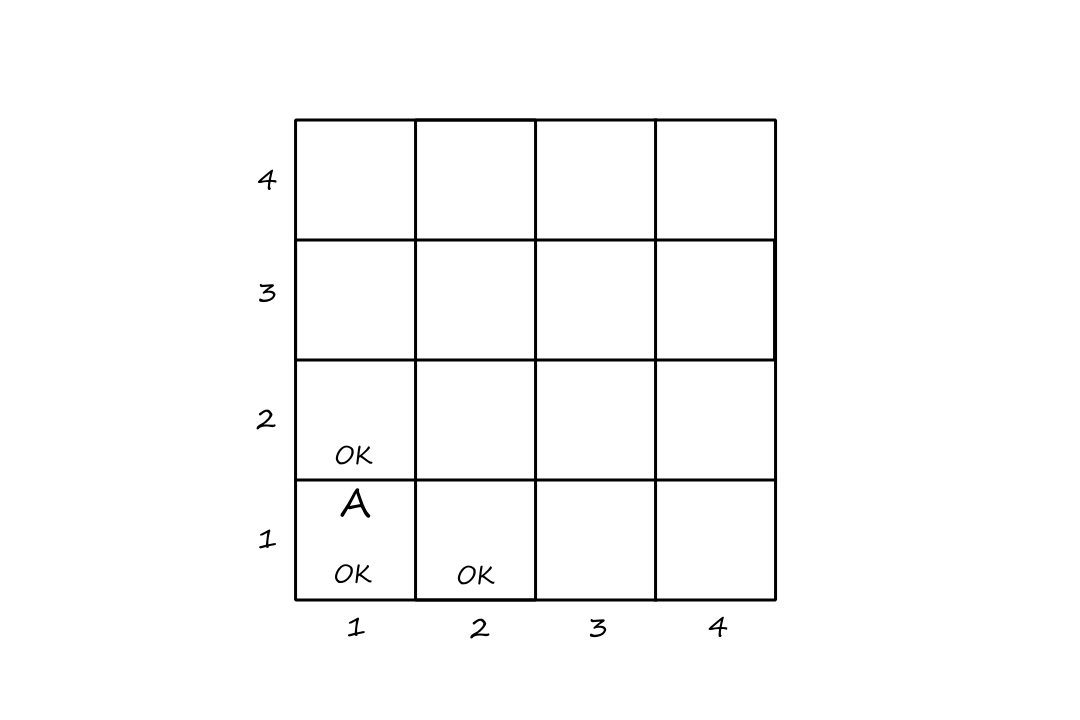

A grid world problem is an array of cells where agents can move from cell to cell and each cell can contain objects. Our little vacuum example is an example of a grid world problem.

- states: there are `8` different states depending on which objects are in which cells

- for each of the two cells, it can contain the vacuum or not and it can contain dirt or not (`2*2*2=8`)

- initial state: any state can be chosen as the initial state

- actions: move left, move right, clean

- transition model: cleaning removes dirt from the agent's cell and move left/right are self-explanatory

- goal states: all the states where every cell is clean

- both cells are clean and the vacuum ends up in the left cell

- both cells are clean and the vacuum ends up in the right cell

- action cost: each action costs `1`

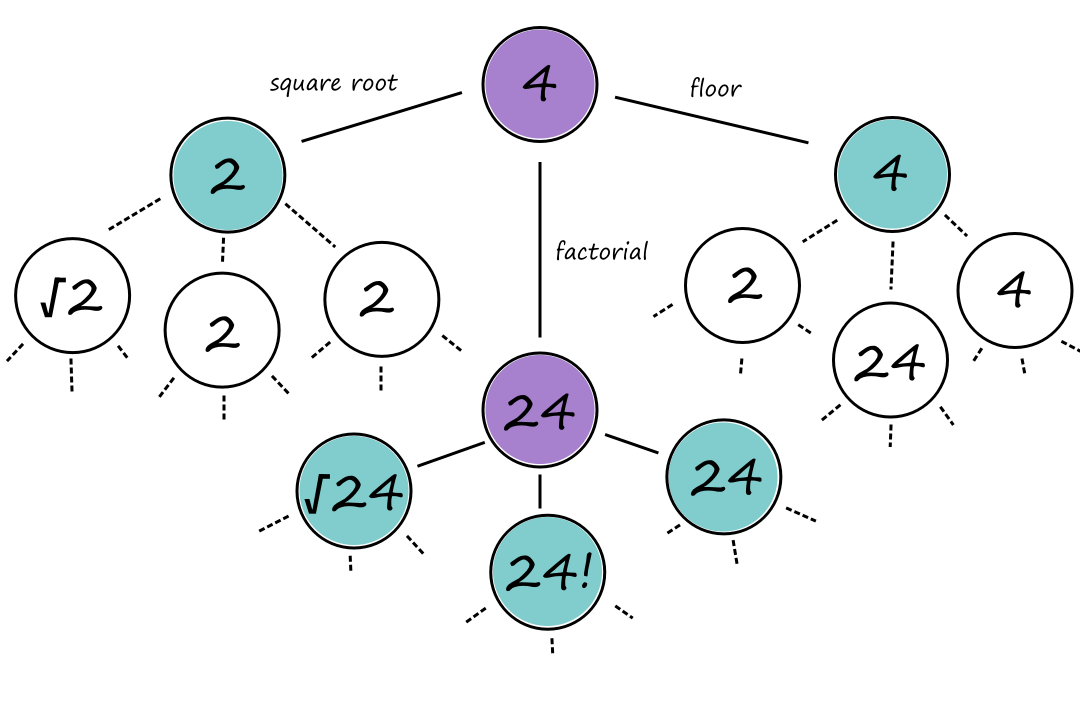

This one's interesting. Donald Knuth conjectured that a sequence of square root, floor, and factorial operations can be applied to the number `4` to reach any positive integer. For example, we can reach `5` by applying eight operations:

`lfloorsqrt(sqrt(sqrt(sqrt(sqrt((4!)!)))))rfloor=5`

- states: positive real numbers

- initial state: `4`

- actions: apply square root, floor, or factorial operation

- transition model: the effect of applying the operations are mathematically defined

- goal state: the desired positive integer

- action cost: each action costs `1`

Real-world problems

A common type of real-world problem is the route-finding problem (finding an optimal path from point A to point B). For example, airline travel:

- states: location and current time

- initial state: our home airport

- actions: take any flight from the current location

- transition model: the flight's destination will become our new current location and the flight's arrival time will become our new current time

- goal state: our destination

- the goal can also be "arrive at the destination on a nonstop flight"

- action cost: combination of monetary cost, waiting time, flight time, seat quality, time of day, type of airplane, frequent-flyer reward points, etc.

Touring problems is an extension of the route-finding problem where multiple locations must be visited instead of just one destination. The traveling salesperson problem is a popular example.

Search Algorithms

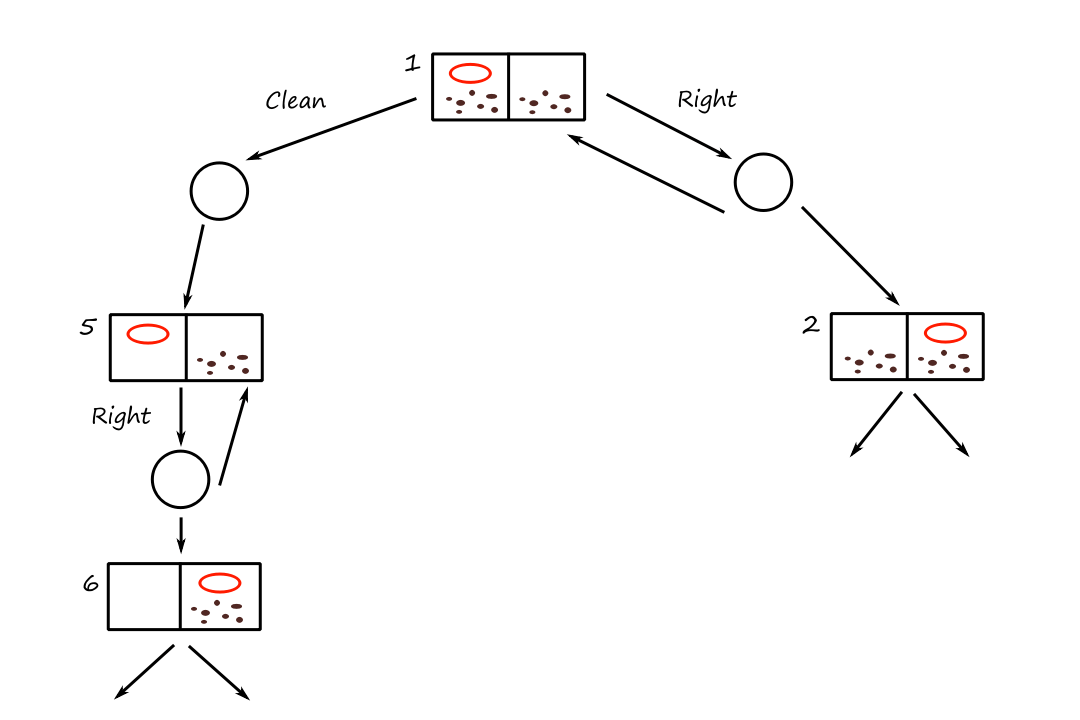

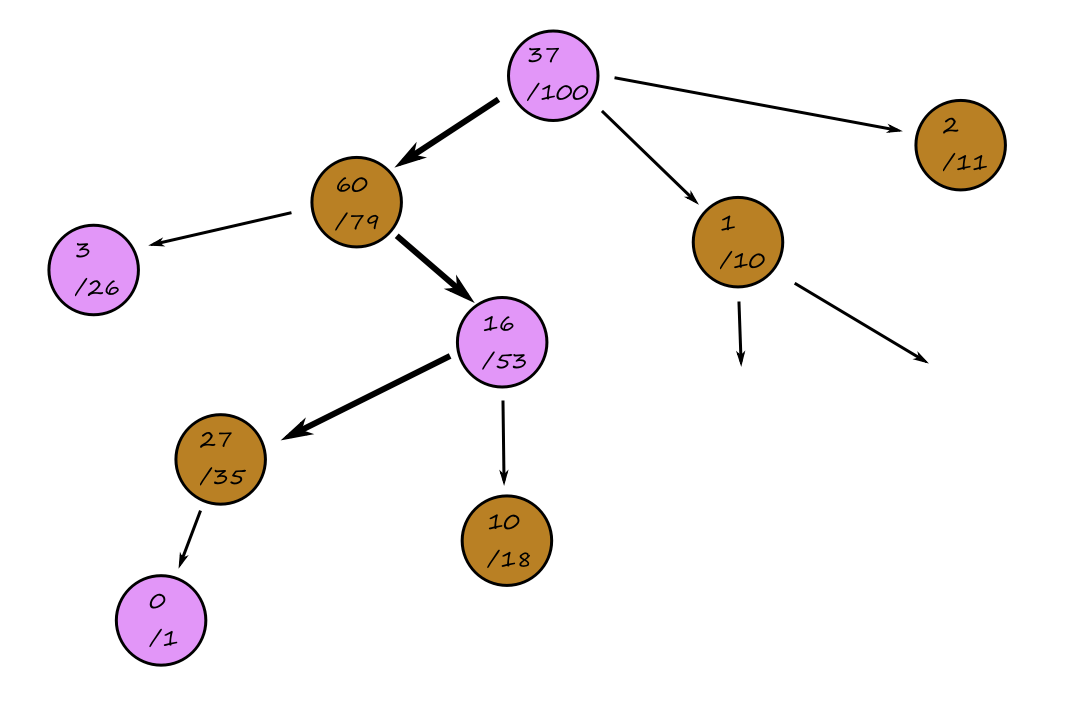

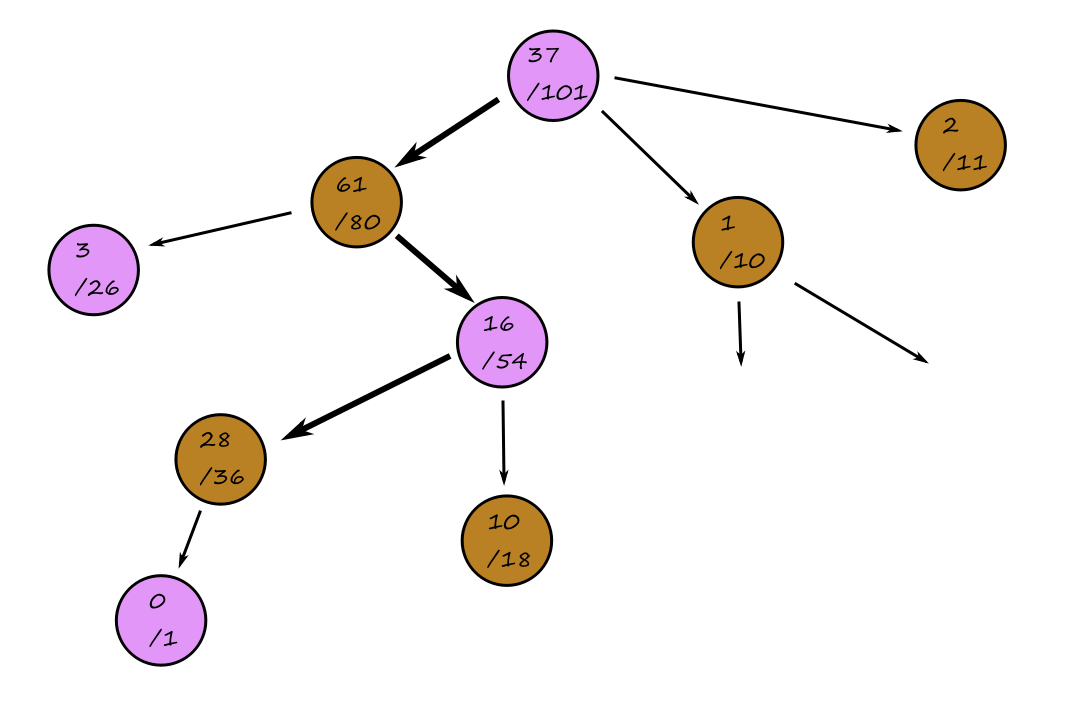

Search problems can be represented as a search tree, where a node represents a state and the edges represent the actions. For Knuth's conjecture:

The purple nodes are nodes that have been expanded. The green nodes are nodes that are on the frontier, i.e., they have not been expanded yet.

When we're at a node, we can expand the node to consider the available states that are possible (generate nodes). The nodes that have not been expanded yet make up the frontier of the search tree.

Best-first search

So how do we decide which path to take? A good general approach is to perform best-first search. In this type of search, we define an evaluation function, which, in a sense, is a measure of how close we are to reaching the goal state. When we apply the evaluation function to each frontier node, the node with the minimum value of the evaluation function should be the next node to go to.

One example of an evaluation function for Knuth's conjecture could be

`f(n) = abs(n-G)`

where `n` is the current node and `G` is the goal. This evaluation function is measuring the distance between where we are and where we want to be, so it makes sense to choose the node that minimizes this distance. Unfortunately, this isn't a very helpful evaluation function to use because it would tell us to keep applying the floor operation.

Search data structures

We can implement a node by storing:

- state: the state of the node

- parent: the node that generated this node

- action: the action that was applied to the parent to generate this node

- path-cost: the total cost of the path from the initial state to this node

We can store the frontier using a queue. A queue is good because it provides us with the operations to check if a frontier is empty, take the top node from the frontier, look at the top node of the frontier, and add a node to the frontier.

function BEST-FIRST-SEARCH(problem, f)

node = NODE(STATE = problem.INITIAL)

frontier = priority queue ordered by f

reached = a lookup table

while not IS-EMPTY(frontier) do

node = POP(frontier)

if problem.IS-GOAL(node.STATE) then return node

for each child in EXPAND(problem, node) do

s = child.STATE

if s is not in reached or child.PATH-COST < reached[s].PATH-COST then

reached[s] = child

add child to frontier

return failure

function EXPAND(problem, node)

s = node.STATE

for each action in problem.ACTIONS do

s' = problem.RESULT(s, action)

cost = node.PATH-COST + problem.ACTION-COST(s, action, s')

yield NODE(STATE = s', PARENT = node, ACTION = action, PATH-COST = cost)

Redundant paths

As we traversing the tree, it's possible to get stuck in a cycle (loopy path), i.e., go around in circles through repeated states. Here's an example of a cycle:

`4 ubrace(rarr)_(text(factorial)) 24 ubrace(rarr)_(text(square root)) 4.9 ubrace(rarr)_(text(floor)) 4`

A cycle is a type of redundant path, which is a path that has more steps than necessary. Here's an example of a redundant path:

`4 ubrace(rarr)_(text(floor)) 4 ubrace(rarr)_(text(square root)) 2`

(This is redundant because we could've just gone to `2` directly without needing the first floor operation.)

There are three ways to handle the possibility of running into redundant paths. The preferred way is to keep track of all the states we've been to. That way, if we see it again, then we know not to go that way. (The best-first search pseudocode does this.)

The bad part about doing this is that there might not be enough memory to keep track of all this info. So we can check for cycles instead of redundant paths. We can do this by following the chain of parent pointers ("going up the tree") to see if the state at the end has appeared earlier in the path.

Or we can choose to not even worry about choosing redundant paths if it's rare or impossible to have them. From this point on, we'll use graph search for search algorithms that check redundant paths and tree-like search for ones that don't.

Uninformed Search Strategies

The uninformed means that as the agent is performing the search algorithm, it doesn't know how close the current state is to the goal.

Breadth-first search

In breadth-first search, all the nodes on the same level are expanded first before going further down any paths. This is generally a good strategy to consider when all the actions have the same cost.

Breadth-first search can be implemented as a call to BEST-FIRST-SEARCH where the evaluation function is the depth of the node, i.e., the number of actions it takes to reach the node.

There are several optimizations we can take advantage of when using breadth-first search. Using a first-in-first-out (FIFO) queue is faster than using a priority queue because the nodes that get added to a FIFO queue will already be in the correct order (so there's no need to perform a sort, which we would need to do with a priority queue).

Best-first search waits until the node is popped off the queue before checking if it's a solution (late goal test). But with breadth-first search, we can check if a node is a solution as soon as it's generated (early goal test). This is because traversing breadth first explores all the possible paths above the generated node, guaranteeing that we've found the shortest path to it. (Think about it like this: because we're traversing breadth first, we can't say, "Would we have found a shorter way here if we had gone on that path instead?" because we already checked that path.)

Because of this, breadth-first search is cost-optimal for problems where all actions have the same cost.

function BREADTH-FIRST-SEARCH(problem)

node = NODE(problem.INITIAL)

if problem.IS-GOAL(node.STATE) then return node

frontier = a FIFO queue

reached = {problem.INITIAL}

while not IS-EMPTY(frontier) do

node = POP(frontier)

for each child in EXPAND(problem, node) do

s = child.STATE

if problem.IS-GOAL(s) then return child

if s is not in reached then

add s to reached

add child to frontier

return failure

Let `d` represent the depth of the search tree. Suppose each node generates `b` child nodes.

The root node generates `b` nodes. Each of those `b` nodes generates `b` nodes (total `b^2` nodes). And each of the `b^2` nodes generates `b` nodes (total `b^3` nodes). So the total number of nodes generated is

`1+b+b^2+b^3+...+b^d=O(b^d)`

Time and space complexity are exponential, which is bad. (All the generated nodes have to remain in memory.)

Dijkstra's algorithm or uniform-cost search

If actions have different costs, then uniform-cost search is a better consideration. The main idea behind this is that the least-cost paths are explored first. (I go into more step-by-step detail about how Dijkstra's algorithm works in my Computer Networking resource.)

Uniform-cost search will always find the optimal solution, but the main problem with it is that it's inefficient. It will expand a lot of nodes.

Uniform-cost search can be implemented as a call to BEST-FIRST-SEARCH where the evaluation function is the cost of the path from the root to the current node.

The worst-case time and space complexity is `O(b^(1+lfloorC^**//epsilonrfloor))` where `C^**` is the cost of the optimal solution and `epsilon` is a lower bound on the cost of all actions.

Since costs are positive (`gt 0`), going down one path by one node may be "deeper" than going down another path by one node, so we can't use the same `d` notation for depth. So `C^(**)/epsilon` is an upper bound on how deep the solution is, i.e., the max number of actions that are taken to reach the solution.

Depth-first search

In depth-first search, the deepest node in the frontier is expanded first. Basically, one path at a time is picked and followed until it ends. So if a goal state is found, then that path is chosen as the solution. This also means that the path chosen may not be the cheapest path available. (What if we had gone down that path instead?)

So why should this algorithm even be considered? It's because depth-first search doesn't require a lot of memory. There's no need for a reached table and the frontier is very small.

The frontier of breadth-first search can be thought of as the surface of an expanding sphere while the frontier of depth-first search can be thought of as the radius of that sphere.

The memory complexity is `O(bm)` where `b` is the branching factor (number of nodes on a branch) and `m` is the maximum depth of the tree. Whenever we expand a node, we add `b` generated children to the frontier. Then we move to one of the children, generate its `b` children, and add them to the frontier. And so on until the bottom. This happens `m` times. So at the bottom, there are `m` groups of `b` children in the frontier.

Depth-first search can be implemented as a call to BEST-FIRST-SEARCH where the evaluation function is the negative of the depth.

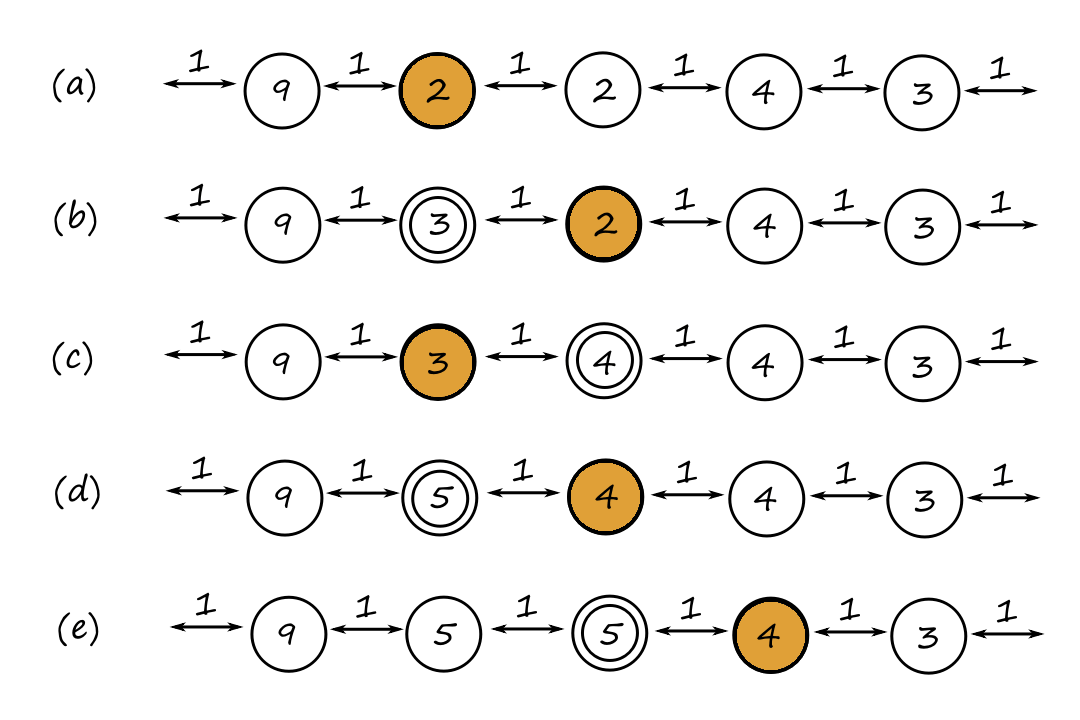

Depth-limited and iterative deepening search

Another problem with depth-first search is that it can keep going down one path infinitely. To prevent this, we can decide to stop going down a path once we reach a certain point. This is depth-limited search.

The time complexity is `O(b^l)` and the space complexity is `O(bl)` for the same reasons as depth-first search.

But how far is too far? If we stop too early, we may not find a solution and if we choose to stop very late, it will take a long time. Iterative deepening search keeps trying different stopping points until a solution is found or until no solution is found (if this is the case, then we have gone deep enough to the point where all paths can be fully explored).

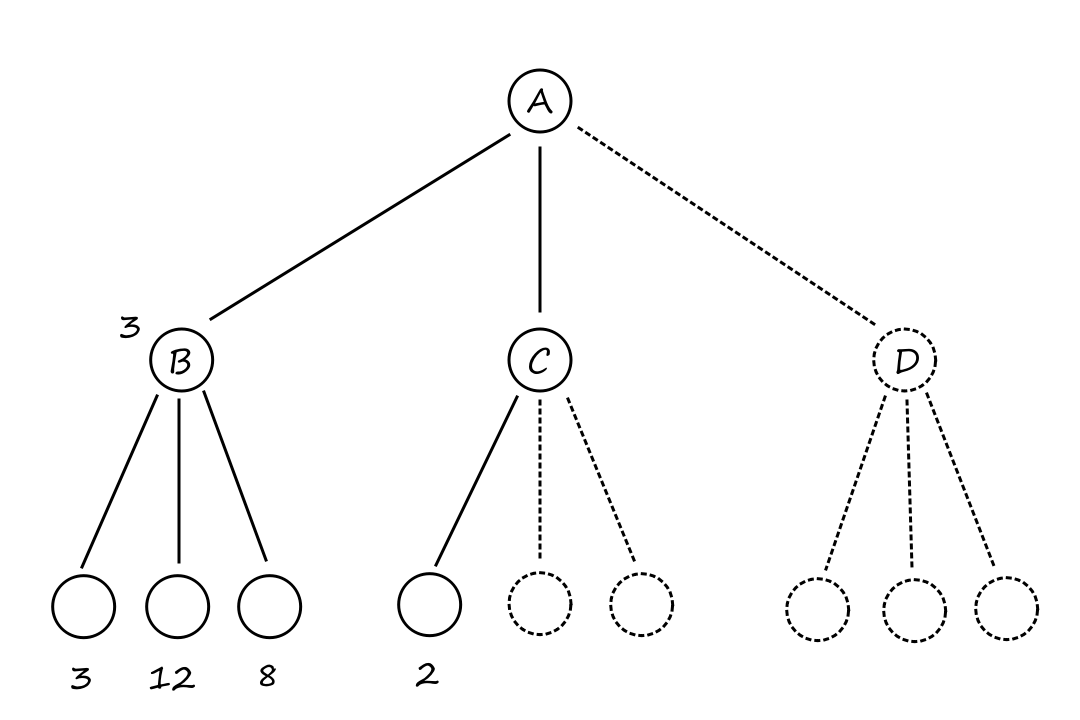

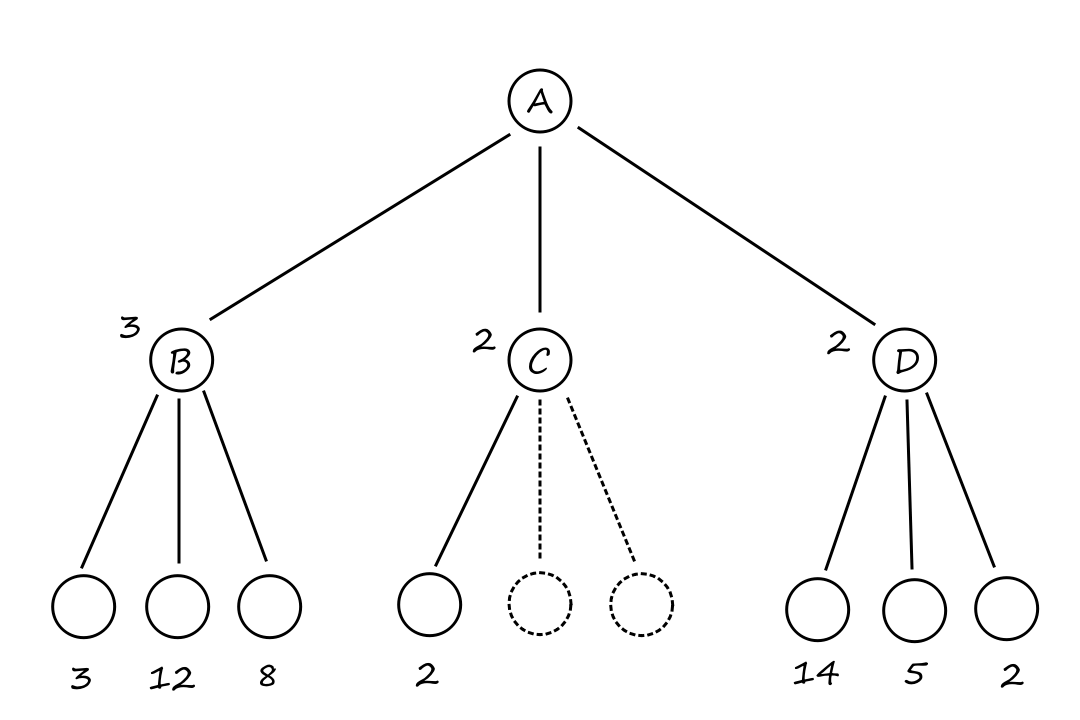

It may seem a bit repetitive (or wasteful) to keep generating the top-level nodes. For example, `B` and `C` are generated `3` times at limits `1`, `2`, and `3`. However, this isn't really that big of an issue since the majority of the nodes are at the bottom, and those are only generated a few times compared to the top-level nodes.

In general, the nodes at the bottom level are generated once, those on the next-to-bottom level are generated twice, ..., and the children of the root are generated `d` times. So the total number of nodes generated is

`(d)b^1+(d-1)b^2+(d-2)b^3+...+b^d=O(b^d)`

So the time complexity is `O(b^d)` when there's a solution (and `O(b^m)` when there isn't).

The memory complexity is `O(bd)` when there is a solution and `O(bm)` when there isn't (for finite state spaces).

function DEPTH-LIMITED-SEARCH(problem, l)

frontier = a LIFO queue

result = failure

while not IS-EMPTY(frontier) do

node = POP(frontier)

if problem.IS-GOAL(node.STATE) then return node

if DEPTH(node) > l then

result = cutoff

else if not IS-CYCLE(node) do

for each child in EXPAND(problem, node) do

add child to frontier

return result

function ITERATIVE-DEEPENING-SEARCH(problem)

for depth = 0 to infinity do

result = DEPTH-LIMITED-SEARCH(problem, depth)

if result != cutoff then return result

cutoff is a string used to denote that there might be a solution at a deeper level than `l`.

Iterative deepening is the preferred uninformed search method when the search state space is larger than can fit in memory and the depth of the solution is not known.

Bidirectional search

So far, all the algorithms started at the initial state and moved towards a goal state. But what we can also do is start from the initial state and the goal state(s) and hope that we meet somewhere in the middle. This is bidirectional search.

We need to keep track of two frontiers and two tables of reached states. There is a solution when the two frontiers meet.

Even though we have to keep track of two frontiers, each frontier is only half the size of the whole tree. So the time and space complexity is `O(b^(d//2))`, which is less than `O(b^d)`.

function BIBF-SEARCH(problem_F, f_F, problem_B, f_B)

node_F = NODE(problem_F.INITIAL)

node_B = NODE(problem_B.INITIAL)

frontier_F = a priority queue ordered by f_F

frontier_B = a priority queue ordered by f_B

reached_F = a lookup table

reached_B = a lookup table

solution = failure

while not TERMINATED(solution, frontier_F, frontier_B) do

if f_F(TOP(frontier_F)) < f_B(TOP(frontier_B)) then

solution = PROCEED(F, problem_F, frontier_F, reached_F, reached_B, solution)

else solution = PROCEED(B, problem_B, frontier_B, reached_B, reached_F, solution)

return solution

function PROCEED(dir, problem, frontier, reached, reached_2, solution)

node = POP(frontier)

for each child in EXPAND(problem, node) do

s = child.STATE

if s not in reached or PATH-COST(child) < PATH-COST(reached[s]) then

reached[s] = child

add child to frontier

if s is in reached_2 then

solution_2 = JOIN-NODES(dir, child, reached_2[s])

if PATH-COST(solution_2) < PATH-COST(solution) then

solution = solution_2

return solution

TERMINATED determines when to stop looking for solutions and JOIN-NODES joins the two paths.

Comparing uninformed search algorithms

An algorithm is complete if either a solution is guaranteed to be found if it exists or a failure is reported when it doesn't exist.

| Criterion | Breadth-First | Uniform-Cost | Depth-First | Depth-Limited | Iterative Deepening | Bidirectional |

|---|---|---|---|---|---|---|

| Complete? | Yes* | Yes*† | No | No | Yes* | Yes*§ |

| Optimal cost? | Yes‡ | Yes | No | No | Yes‡ | Yes‡§ |

| Time | `O(b^d)` | `O(b^(1+lfloorC^**//epsilonrfloor))` | `O(b^m)` | `O(b^l)` | `O(b^d)` | `O(b^(d//2))` |

| Space | `O(b^d)` | `O(b^(1+lfloorC^**//epsilonrfloor))` | `O(bm)` | `O(bl)` | `O(bd)` | `O(b^(d//2))` |

`b` is the branching factor. `m` is the maximum depth of the search tree. `d` is the depth of the shallowest solution, or is `m` when there is no solution. `l` is the depth limit.

*if `b` is finite

†if all action costs are `ge epsilon gt 0`

‡if all action costs are identical

§if both directions are breadth-first or uniform-cost

Informed (Heuristic) Search Strategies

Informed means that information is provided about how close the current state is to a goal state.

The information is referred to as "hints", which are given by a heuristic function, denoted as `h(n)`.

`h(n)=` estimated cost of the cheapest path from the state at node `n` to a goal state

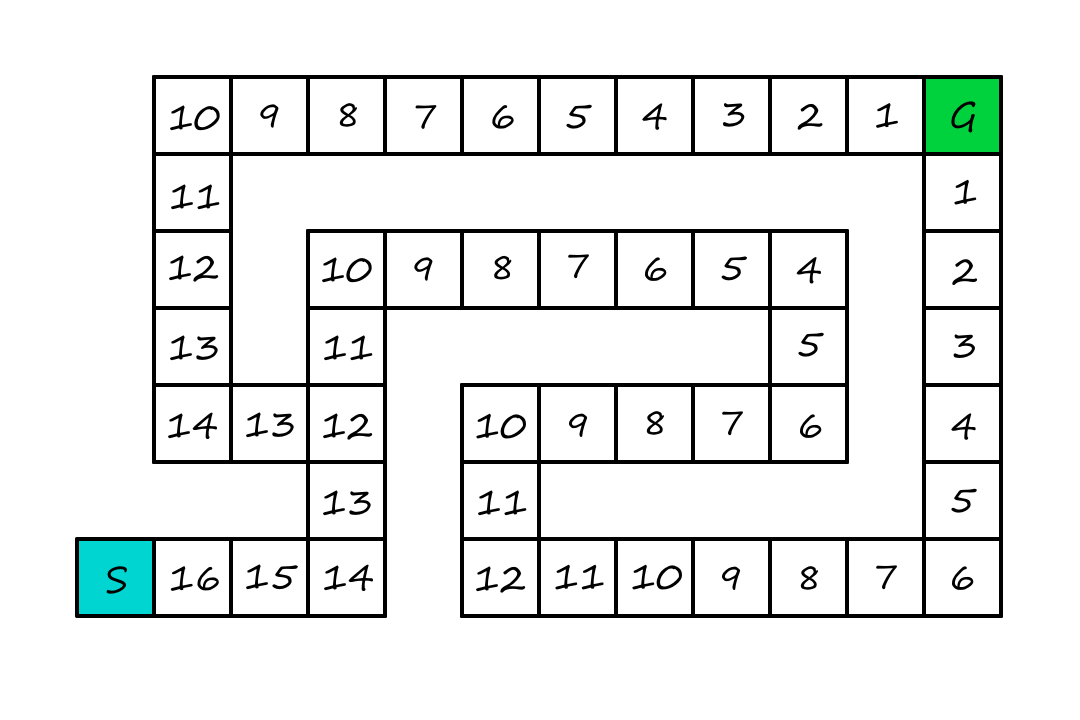

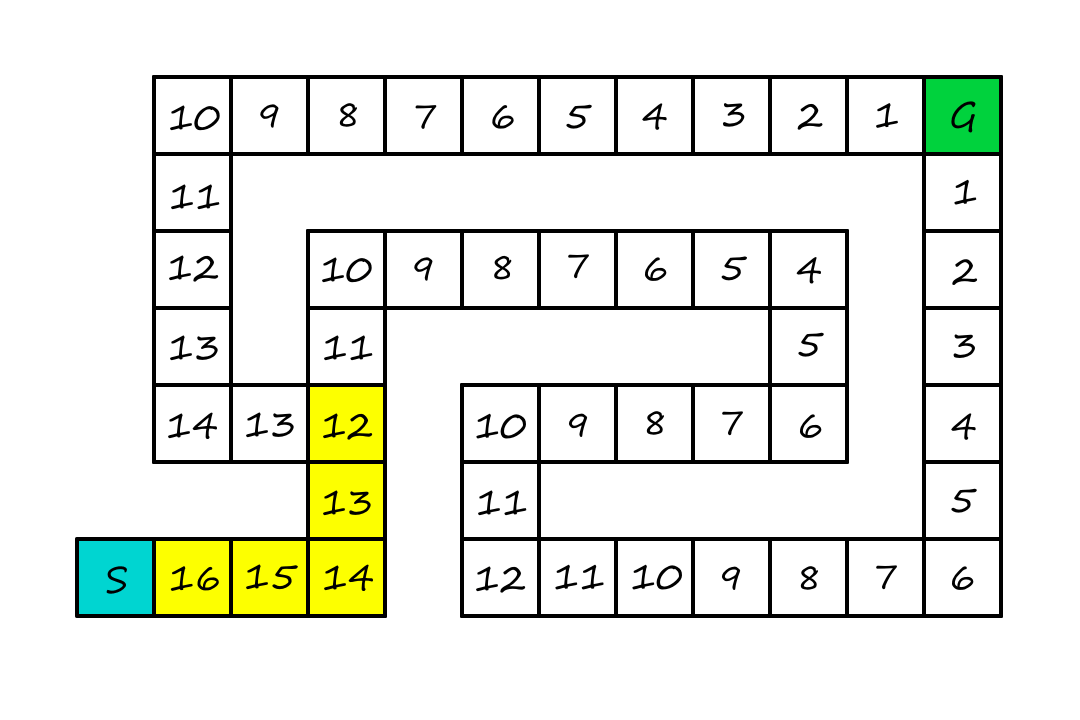

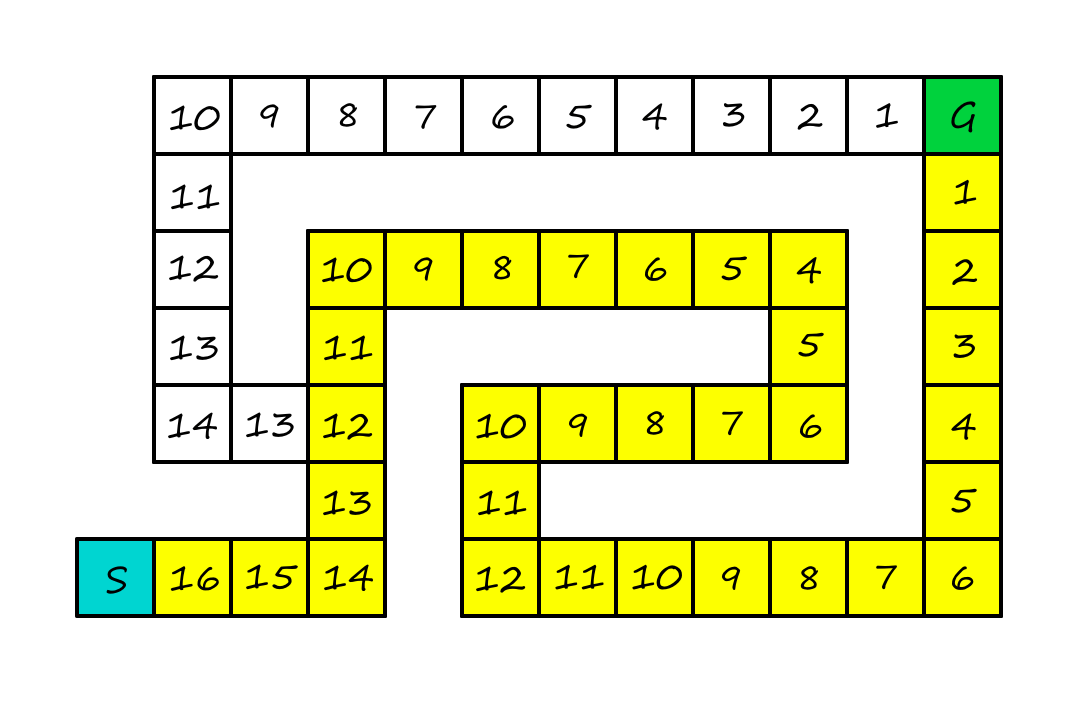

For the next few algorithms, we'll be using this maze, where the goal is to find the shortest path from `S` to `G`. The number in each square is the number of squares it is away from the goal (ignoring walls). That is the heuristic we'll be using.

Greedy best-first search

If we know how close each node is to a goal, then it seems to makes sense to choose the node that seems closest to the goal.

We expand first the node with the lowest `h(n)` value.

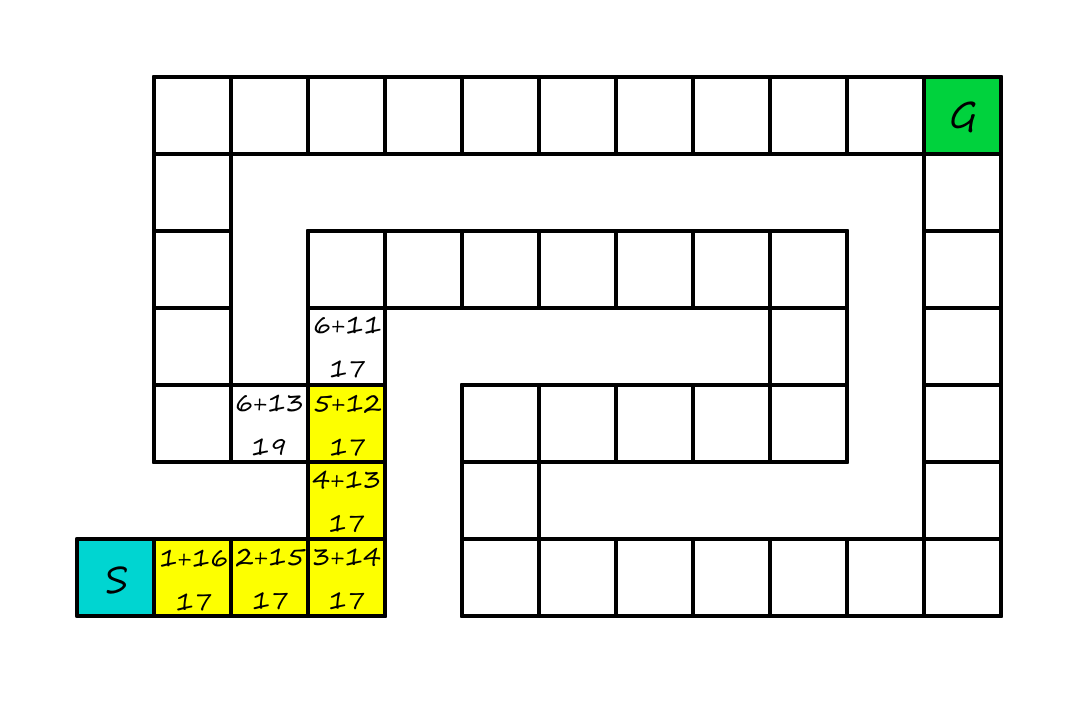

Since we're being greedy, we'll move to the square that looks like it's `11` squares away from the goal instead of the square that looks like it's `13` squares away from the goal.

Now obviously, this is not the optimal path. But being greedy is not always a good thing.

A* search

The reason greedy best-first search failed is because it didn't take into consideration the cost of going to each node -- it only looked at how far away each node was to the destination. Intuitively, if it takes a long time to get to a destination that is close to your goal, is it really worth going to that destination? A* search looks at both the cost to get to each node and the estimated cost from each node to the destination.

A* search can be implemented as a call to BEST-FIRST-SEARCH where the evaluation function is `g(n)+h(n)`. `g(n)` is the cost from the initial state to node `n`, and `h(n)` is the estimated cost of the shortest path from `n` to a goal state.

`f(n)=` estimated cost of the best path that continues from `n` to a goal

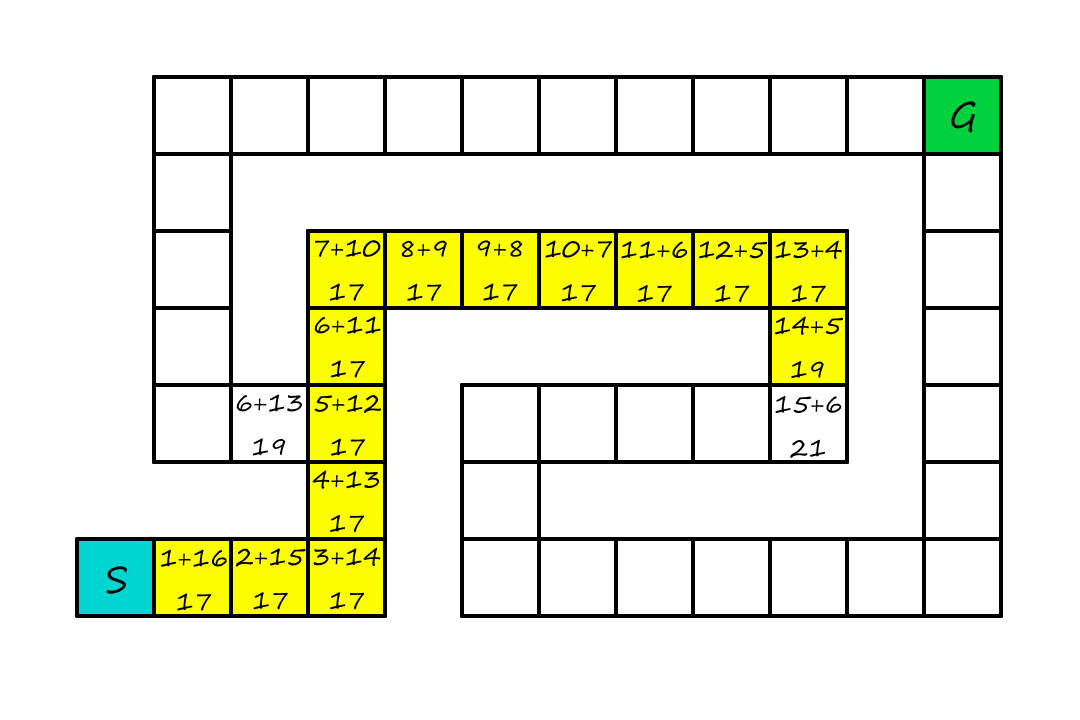

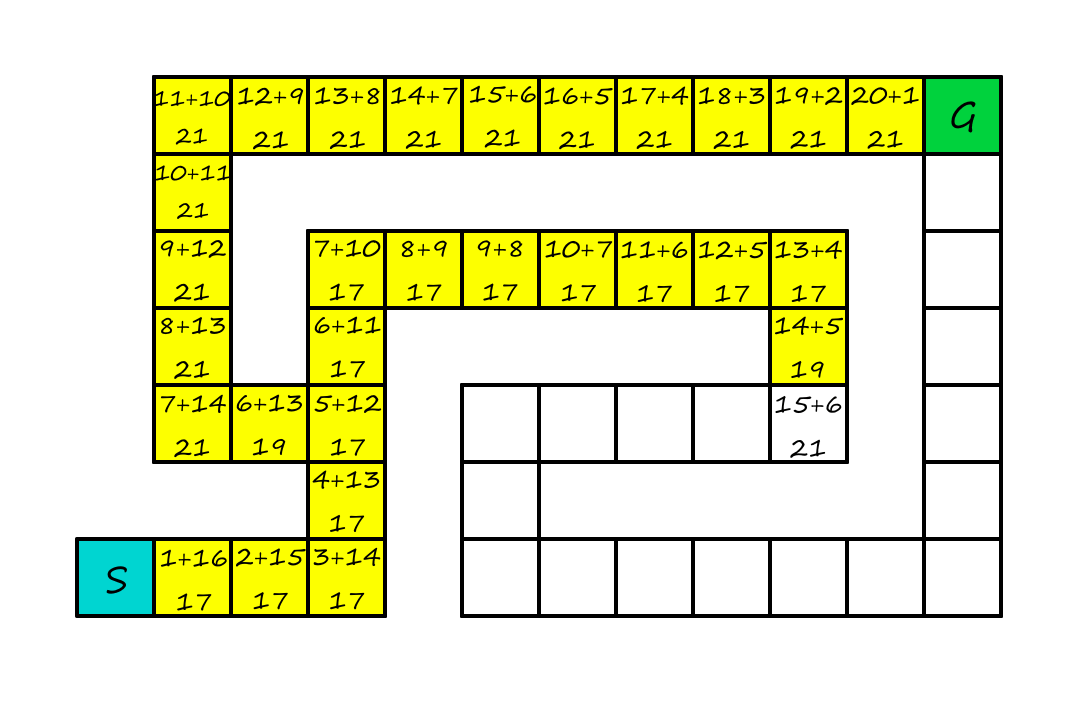

Now, the numbers in each square represent the cost of going to that square plus the estimated distance to the goal, i.e., the number of squares it is away from the goal (ignoring walls).

Just like in greedy search, we'll be going up again. But don't worry, things will be different this time.

At this point, we see that it's no longer worth continuing on this path because there is a cheaper option (`19`) at the fork. So we'll start going that way instead.

Even though we wasted some time going down the longer path, we still found the optimal solution.

Whether A* is cost-optimal depends on the heuristic. If the heuristic is admissible, then A* is cost-optimal. Admissible means that the heuristic never overestimates the cost to reach a goal, i.e., the estimated cost to reach the goal is `le` the actual cost to reach the goal. Admissible heuristics are referred to as being optimistic.

We can show A* is cost-optimal with an admissible heuristic by using a proof by contradiction.

Let `C^**` be the cost of the optimal path. Suppose for the sake of contradiction that the algorithm returns a path with cost `C gt C^**`. This means that there is some node `n` that is on the optimal path and is unexpanded (if we had expanded it, we would've found the optimal path and returned `C^**` instead).

Let `g^(ast)(n)` be the cost of the optimal path from the start to `n`, and let `h^(ast)(n)` be the cost of the optimal path from `n` to the nearest goal.

`f(n) gt C^**` (otherwise `n` would've been expanded)

`f(n) = g(n)+h(n)` (by definition)

`f(n) = g^(ast)(n)+h(n)` (because `n` is on the optimal path)

`f(n) le g^(ast)(n)+h^(ast)(n)` (because of admissibility, `h(n) le h^(ast)(n)`)

`f(n) le C^**` (by definition, `C^(ast)=g^(ast)(n)+h^(ast)(n)`)

The first and last lines form a contradiction. So it must be the case that the algorithm returns a path with cost `C le C^**`, i.e., A* is cost-optimal.

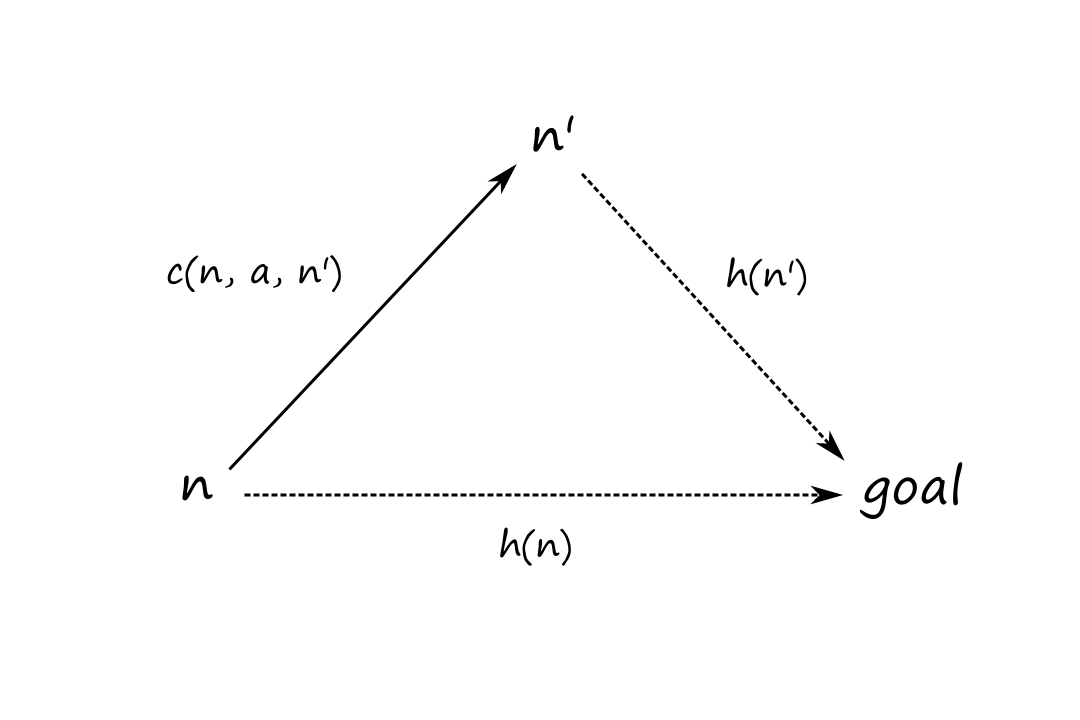

A heuristic can also be consistent, which is slightly stronger than being admissible (so every consistent heuristic is admissible). A heuristic `h(n)` is consistent if, for every node `n` and every successor `n'` of `n` generated by action `a`,

`h(n) le c(n,a,n') + h'(n)`

What this is saying is that the estimated cost to the goal from our current position should be less than (or equal to) the cost of going to another location plus the estimated cost of going to the goal from that location. In other words, adding a stop should make the trip longer unless that stop is on the way to the destination.

Consistency is a form of the triangle inequality, which says that a side of a triangle can't be longer than the sum of the other two sides.

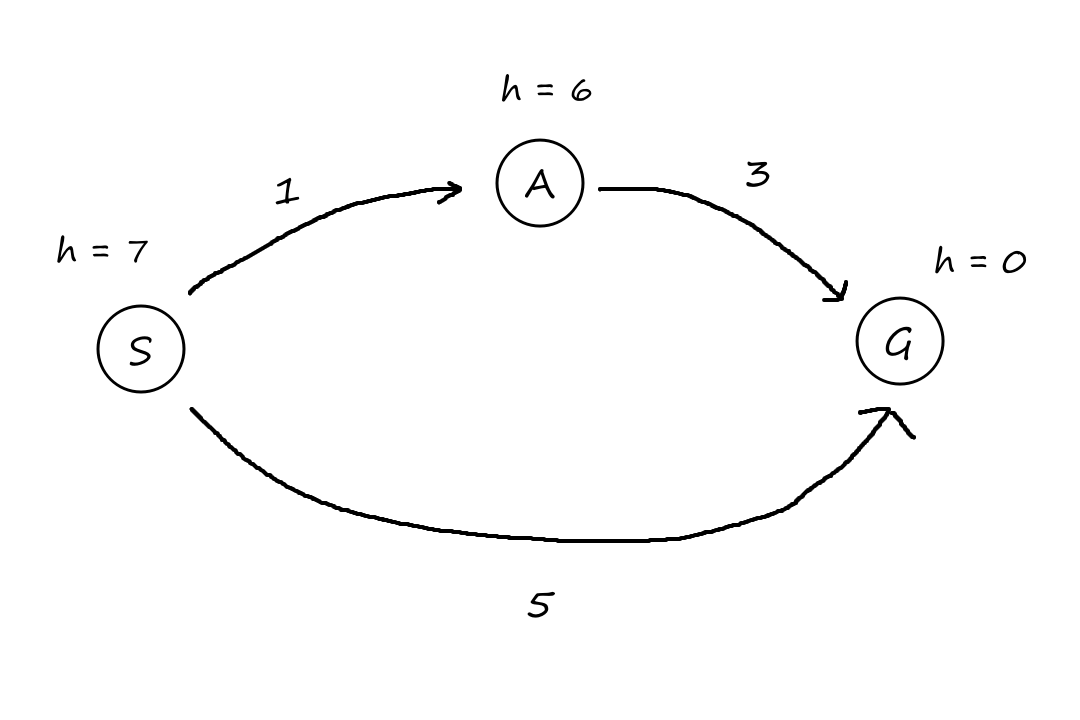

Here's an example of how things can be not optimal if the heuristic is not admissible/consistent.

When we're starting at `S`, we can either choose to go to `A` or to `G` directly. Since the estimated cost of `A rarr G` is `6`, it looks more expensive to go to `A` first, so we go to `G` directly instead (`f(A)=1+6=7 gt f(G)=5+0=5`).

However, `S rarr G` is not the optimal path! `S rarr G` costs `5` while `S rarr A rarr G` costs `4`.

This happened because we overestimated the distance from `A` to `G`.

As we go down a path, the `g` costs should be increasing because action costs are always positive. For our maze example, the closer we get to `G`, the farther we get from `S`. So the distance from `S` to our current position should always be increasing as we keep moving. The `g` costs are (strictly) monotonic.

So does this mean that `f=g+h` will also monotonically increase? As we move from `n` to `n'`, the cost goes from `g(n)+h(n)` to `g(n)+c(n,a,n')+h(n')`. Canceling out the `g(n)`, we see that the path's cost will be monotonically increasing if and only if `h(n) le c(n,a,n')+h(n')`, i.e., if and only if the heuristic is consistent.

So if A* search is using a consistent heuristic, then:

- all nodes that can be reached from the initial state and satisfy `f(n) lt C^(**)` will be expanded (surely expanded nodes)

- this is because `f` is monotonically increasing

- if `f` wasn't monotonically increasing, then if we expand a node, there could be a successor with a lower `f` value, which means there was a better path to get to that successor that we missed (i.e., a node with `f(n) lt C^(**)` wasn't expanded)

- but A* works by picking the nodes with the lowest `f`-values to expand, so we couldn't have missed that better path -- there's a contradiction

- if `f` wasn't monotonically increasing, then if we expand a node, there could be a successor with a lower `f` value, which means there was a better path to get to that successor that we missed (i.e., a node with `f(n) lt C^(**)` wasn't expanded)

- this means that all potential paths with lower costs are explored (ensuring cost-optimality and completeness I think)

- this is because `f` is monotonically increasing

- some nodes where `f(n)=C^(**)` might be expanded

- nodes where `f(n) gt C^(**)` are not expanded

These three things lead to A* with a consistent heuristic being referred to as optimally efficient.

Satisficing search: Inadmissible heuristics and weighted A*

Even though A* is complete, cost-optimal, and optimally efficient, the one big problem with A* is that it expands a lot of nodes, which takes time and uses up space. We can expand fewer nodes by using an inadmissible heuristic, which may overestimate the estimated cost to the goal. Intuitively, if the estimated costs of some of the nodes are higher, then there will be fewer nodes that look favorable to expand. Of course, if we overestimate, then there's a chance we might miss an optimal path, but this is the price to pay for expanding fewer nodes. We might end up with suboptimal, but "good enough" solutions (satisficing solutions).

Inadmissible heuristics are potentially more accurate. Consider a heuristic that uses a straight-line distance to estimate the distance of a road between two cities. The path between two cities is almost never a straight line, so the length of the actual path will be greater than the length of the straight line. Meaning that overestimating the straight-line distance by a little bit will make it closer to the length of the actual path.

We don't actually need an inadmissible heuristic to take advantage of the faster speed and lower memory usage. We can simulate an inadmissible heuristic by adding some weight to the heuristic.

`f(n)=g(n)+W*h(n)`

for some `W gt 1`

This is weighted A* search.

In general, if the optimal cost is `C^(**)`, weighted A* search will find a solution that costs between `C^(**)` and `W*C^(**)`. In practice, it's usually much closer to `C^(**)` than to `W*C^(**)`.

Weighted A* can be seen as a generalization of some of the other search algorithms:

| A* search | `g(n)+h(n)` | `W=1` |

| Uniform-cost search | `g(n)` | `W=0` |

| Greedy best-first search | `h(n)` | `W=oo` |

| Weighted A* search | `g(n)+W*h(n)` | `1 lt W lt oo` |

Memory-bounded search

Beam search keeps only `k` nodes with the best `f`-scores in the frontier. This means that we may be excluding cost-optimal paths (and even solutions), but it's the price to pay to limit the number of expanded nodes. Though for many problems, it can find near-optimal solutions.

Iterative-deepening A* search (IDA*) is a commonly used algorithm for problems with limited memory. Where iterative deepening search uses depth as the cutoff, iterative-deepening A* search uses `f`-cost as the cutoff. Specifically, the cutoff value is the smallest `f`-cost of any node that exceeded the cutoff on the previous iteration.

Once the cutoff is reached, IDA* starts over from the beginning. Recursive best-first search (RBFS) also uses `f`-values to determine how far down a path it should go, but once the cutoff is reached, it steps back and takes another path (the one with the second-lowest `f`-value) instead of starting over.

function RECURSIVE-BEST-FIRST-SEARCH(problem)

solution, fvalue = RBFS(problem, NODE(problem.INITIAL), infinity)

return solution

function RBFS(problem, node, f_limit)

if problem.IS-GOAL(node.STATE) then return node

successors = LIST(EXPAND(node))

if successors is empty then return failure, infinity

for each s in successors do

s.f = max(s.PATH-COST + h(s), node.f)

while true do

best = the node in successors with the lowest f-value

if best.f > f_limit then return failure, best.f

alternative = the second lowest f-value among successors

result, best.f = RBFS(problem, best, min(f_limit, alternative))

if result != failure then return result, best_f

Bidirectional heuristic search

Bidirectional search is sometimes more efficient than unidirectional search, sometimes not. If we're using a very good heuristic, then A* search will perform well and adding bidirectional search won't add any more value. If we're using an average heuristic, bidirectional search tends to expand fewer nodes. If we're using a bad heuristic, then both directional and unidirectional search will be equally bad.

If we want to use bidrectional search with a heuristic, it turns out that using `f(n)=g(n)+h(n)` as the evaluation function doesn't guarantee that we'll find an optimal-cost solution. Instead, we need two different evaluation functions, one for each direction:

`f_F(n)=g_F(n)+h_F(n)` for going in the forward direction (initial state to goal)

`f_B(n)=g_B(n)+h_B(n)` for going in the backward direction (goal to initial state)

This is a front-to-end search.

Suppose the heuristic is admissible. Let's say that we're in the middle of running the algorithm and the forward direction is currently at node `j` and the backward direction is currently at node `t`. We can define a lower bound on the cost of a solution path `text(initial) rarr ... rarr j rarr ... rarr t rarr ... rarr text(goal)` as

`lb(j,t)=max(g_F(j)+g_B(t), f_F(j), f_B(t))`

The optimal cost must be at least the cost of `text(initial) rarr ... rarr j` plus the cost of `text(goal) rarr ... rarr t`.

Since we're using an admissible heuristic, the estimated costs are underestimated. So the optimal cost must also be at least the cost of `text(initial) rarr ... rarr j` plus the estimated cost of `j rarr ... rarr text(goal)`. The same reasoning applies for `f_B(t)`.

So for any pair of nodes `j,t` with `lb(j,t) lt C^(**)`, either `j` or `t` must be expanded because the path that goes through them is a potential optimal solution. The problem is that we don't know for sure which node is better to expand -- that part's just up to luck.

Either way, this lower bound gives us an idea for an evaluation function we could use:

`f_2(n)=max(2g(n), g(n)+h(n))`

This function guarantees that a node with `g(n) gt C^(**)/2` will never be expanded. If `g(n) gt C^(**)/2`, then `2g(n) gt C^(**)`.

- if `2g(n) ge g(n)+h(n)`, then `f_2(n)=2g(n) gt C^(**)`

- if `2g(n) lt g(n)+h(n)`, then `f_2(n)=g(n)+h(n) gt 2g(n) gt C^(**)`

So `f_2(n) gt C^(**)` for nodes with `g(n) gt C^(**)/2`. But nodes on optimal paths will have `f_2(n) le C^(**)`, so those will be expanded first.

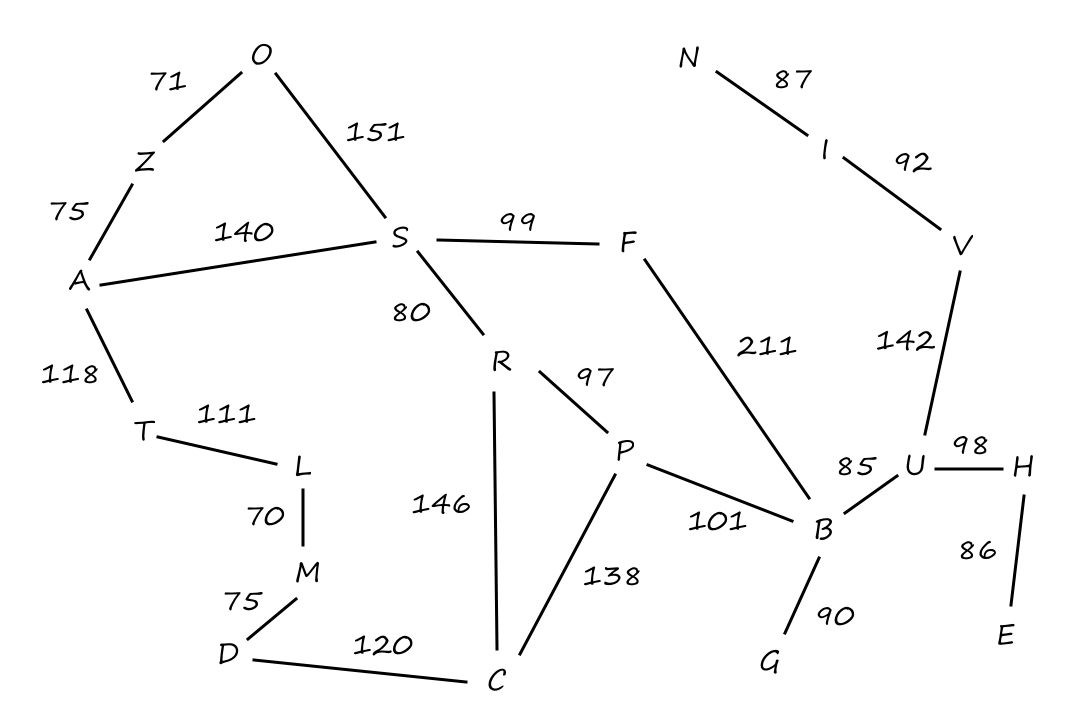

Here's another example of greedy search, A* search, and RBFS from the textbook.

The map uses straight lines, but it's just a drawing. The roads aren't necessarily straight lines.

| City | Straight-line distance to B |

|---|---|

| A | 366 |

| B | 0 |

| C | 160 |

| D | 242 |

| E | 161 |

| F | 176 |

| G | 77 |

| H | 151 |

| I | 226 |

| L | 244 |

| City | Straight-line distance to B |

|---|---|

| M | 241 |

| N | 234 |

| O | 380 |

| P | 100 |

| R | 193 |

| S | 253 |

| T | 329 |

| U | 80 |

| V | 199 |

| Z | 374 |

Greedy best-first search

Let's say we're starting at `A` and we want to go to `B`. If we use the straight-line distance heuristic, then we get the path `A ubrace(rarr)_(140) S ubrace(rarr)_(99) F ubrace(rarr)_(211) B`.

The numbers are the straight-line distances from that node to `B`.

The cost of that path is `450`, but that's not the path with the least cost. The path `A ubrace(rarr)_(140) S ubrace(rarr)_(80) R ubrace(rarr)_(97) P ubrace(rarr)_(101) B` has a lower cost with `418`.

A* Search

Notice that `B` is found when expanding `F`, but since there's a lower cost available to consider, we don't accept the `F rarr B` path as a solution yet.

A* is efficient because it prunes away nodes that are not necessary for finding an optimal solution. Here, `T` and `Z` are pruned away, but would've been expanded in uniform-cost or breadth-first search.

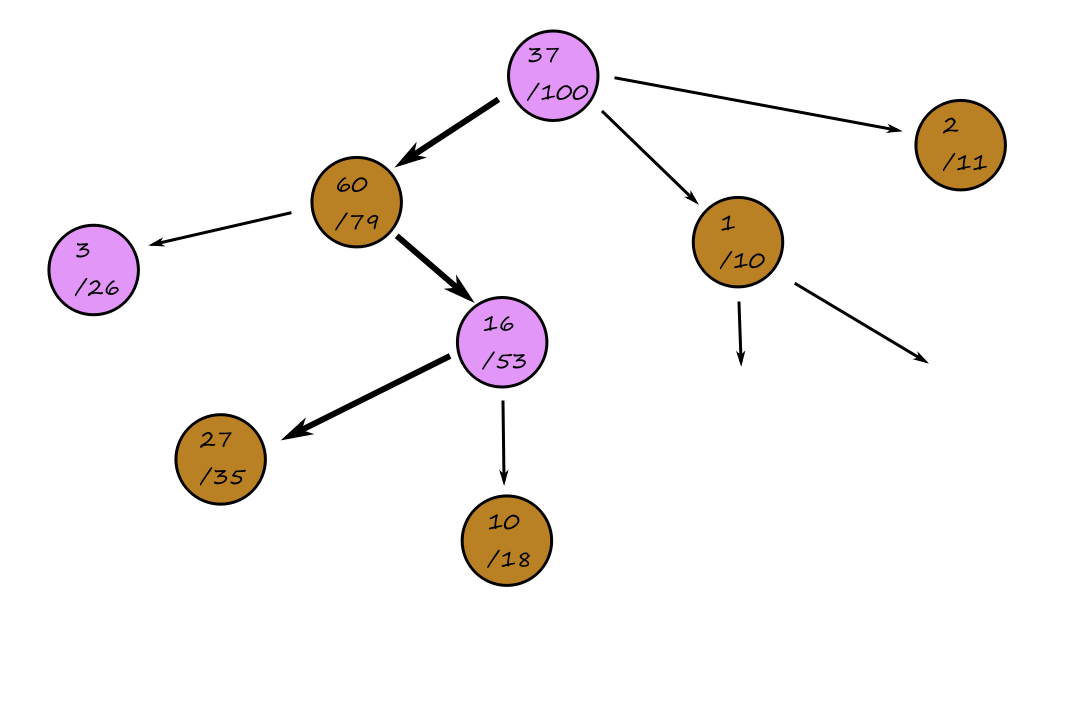

Recursive best-first search

Notice that when `P` is the successor with the lowest `f`-value, `P`'s `f`-value is higher than f_limit. So as we go back up to consider the alternative path, we update `R`'s `f`-value so we can check later if it's worth reexpanding `R`. (The same thing happens for `F`.) The `f`-value that is used as replacement is called the backed-up value, and it's the best `f`-value of the node's children.

RBFS looks similar to A* search in that it looks for the lowest `f`-value. The difference is that RBFS only looks at the `f`-values of the current node's successors while A* search looks at all the nodes in the frontier.

Heuristic Functions

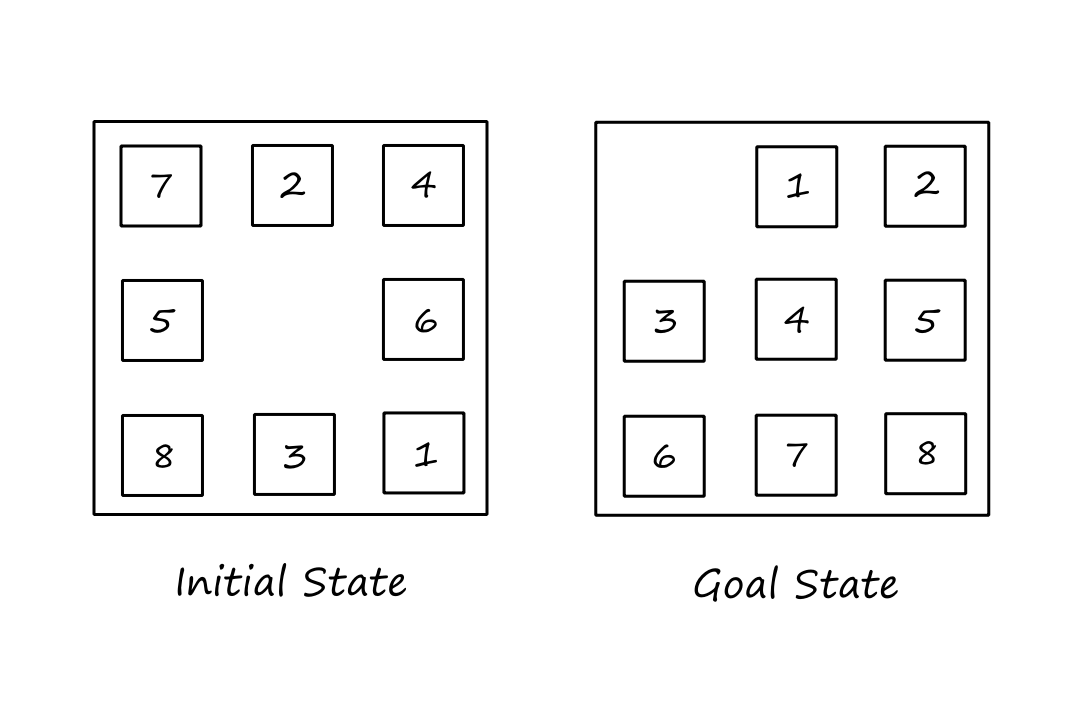

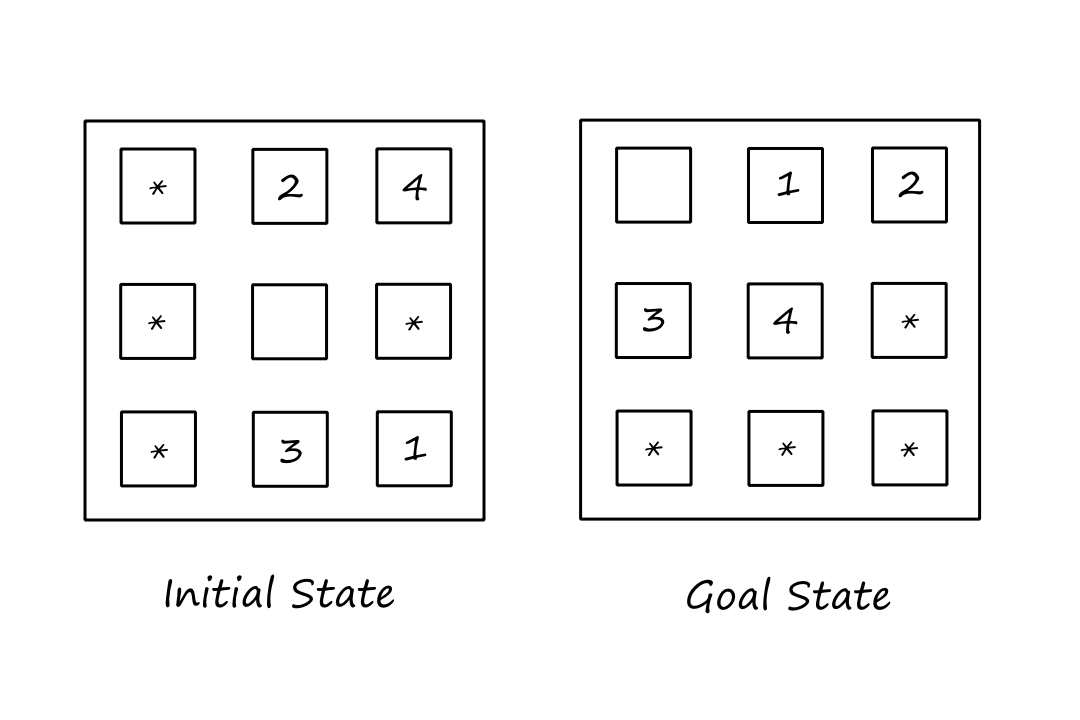

Here, we'll look at how the accuracy of a heuristic affects search performance and how we can come up with heuristics in the first place. We'll use the 8-puzzle as our main example. The 8-puzzle is a `3xx3` sliding-tile puzzle with `8` tiles and `1` blank space. We move tiles horizontally and vertically into the blank space until the goal state is reached (each move has a cost of `1`).

The shortest solution has `26` moves.

Two commonly-used heuristics are

- `h_1=` the number of misplaced tiles (blank space not included)

- for our example, `h_1=8` because all `8` tiles are misplaced

- this is admissible because the number of moves needed to reach a goal state will always be `ge` the number of misplaced tiles

- if all `8` misplaced tiles need just `1` move to get to the correct spot, then the estimated cost is still not overestimated

- `h_2=` the sum of the distances of the tiles from their goal positions

- for our example, `h_2=3+1+2+2+2+3+3+2=18`

- `1` is `3` squares away from its destination, `2` is `1` square away from its destination, `3` is `2` squares away from its destination, ...

- this is sometimes called the city-block distance or Manhattan distance

- this is admissible because the calculation of the distance doesn't take obstacles into account

- `8` is `2` squares away from its destination, but it may actually need `ge 2` moves

- for our example, `h_2=3+1+2+2+2+3+3+2=18`

The effect of heuristic accuracy on performance

One way to assess the quality of a heuristic is to look at the effective branching factor `b^(**)`. It can loosely be thought of as the average number of nodes that are generated at each depth. A good heuristic would have `b^(**)` close to `1`, which means that not a lot of nodes are being generated the farther we go down a path (we're fairly successful in finding good paths).

If A* finds a solution at depth `5` using `52` nodes, then the effective branching factor is `1.92`.

It turns out that `h_2` (the Manhattan distance) is always better than `h_1` (the number of misplaced tiles). This means that `h_2` dominates `h_1`. Because of this, A* search using `h_2` will never expand more nodes than A* using `h_1` (except for when there are ties).

Earlier we saw that every node with `f(n) lt C^(**)` will surely be expanded (assuming consistent heuristic).

`f(n) lt C^(**)`

`g(n)+h(n) lt C^(**)`

`h(n) lt C^(**)-g(n)`

So every node with `h(n) lt C^(**)-g(n)` will surely be expanded. By definition, `h_2(n) ge h_1(n)`, so every node that is surely expanded by A* with `h_2` is also surely expanded by A* with `h_1`. Mathematically, this is because `h_1(n) le h_2(n) lt C^(**)-g(n)`. But because `h_1` is small, there are more nodes that are `lt C^(**)-g(n)`, so A* with `h_1` will expand other nodes that A* with `h_2` wouldn't have expanded.

So it's generally better to use a heuristic function with higher values, assuming it's consistent and it's not too computationally intensive.

Generating heuristics from relaxed problems

So how do we come up with heuristics like `h_1` and `h_2` in the first place? (Perhaps more importantly, is it possible for a computer to come up with such a heuristic?)

Let's make the 8-puzzle simpler so that tiles can be moved anywhere, even if there's another tile occupying the spot. Notice now that `h_1` would give the exact length of the shortest solution.

Let's consider another simplified version where the tiles can still only move `1` square but, now, in any direction, even if the square is occupied. Notice that `h_2` would give the exact length of the shortest solution.

These simplified versions, where there are fewer restrictions on the actions, are relaxed problems. The state-space graph of a relaxed problem is a supergraph of the original state space because the removal of restrictions allows for more possible states, resulting in more edges being added. This means that an optimal solution in the original problem is also an optimal solution in the relaxed problem. But the relaxed problem may have better solutions (this happens when the added edges provide shortcuts).

That last part is how we can come up with heuristics. The cost of an optimal solution to a relaxed problem is an admissible heuristic for the original problem. (Also, if the heuristic is an exact cost for the relaxed problem, it must obey the triangle inequality, making it consistent.)

So how can we come up with relaxed problems systematically?

Let's describe the 8-puzzle actions as follows: a tile can move from square `X` to square `Y` if `X` is adjacent to `Y` and `Y` is blank. From this, we can generate three relaxed problems simply by removing one or both of the conditions:

- A tile can move from square `X` to square `Y` if `X` is adjacent to `Y`

- A tile can move from square `X` to square `Y` if `Y` is blank

- A tile can move from square `X` to square `Y`

From the first one, we can derive `h_2` (the Manhattan distance) and from the third one, we can derive `h_1` (the number of misplaced tiles).

Ideally, relaxed problems generated by this technique should be solveable without search.

If we generate a bunch of relaxed problems, we now also have a bunch of heuristics. If none of them are much better than the others, then which one should be used? One thing we can do is to pick the heuristic that gives the highest value:

`h(n)=max{h_1(n), ..., h_k(n)}`

But if it takes too long to compute all the heuristics, then we can also randomly pick one or use machine learning to predict which heuristic will be the best.

Generating heuristics from subproblems: Pattern databases

Let's consider a subproblem of the 8-puzzle, where the goal is to get only tiles `1,2,3,4,` and the blank space into the correct spots.

Since there are fewer tiles that have to be in a certain spot, the cost of the optimal solution of this subproblem is a lower bound on the cost of the complete problem, meaning that this could make a good admissible heuristic.

For every possible subproblem, we can store the exact costs of their solutions in a pattern database. So when we're trying to solve the complete problem, we could look in our database for the subproblem that matches our current state and use the exact cost of the subproblem as the heuristic for the complete problem.

We could have multiple databases for each subproblem type. For example, one database could be all the subproblem instances with tiles `1,2,3,4`. Another database could be all the subproblem instances with tiles `5,6,7,8`. Another database could be all the subproblem instances with tiles `2,4,6,8`. And so on. Then the heuristics from these databases can be combined by taking the maximum value. Doing this turns out to be more accurate than the Manhattan distance.

Generating heuristics with landmarks

This is kinda similar to using pattern databases, but kinda makes more sense when viewed in the context of route-finding problems.

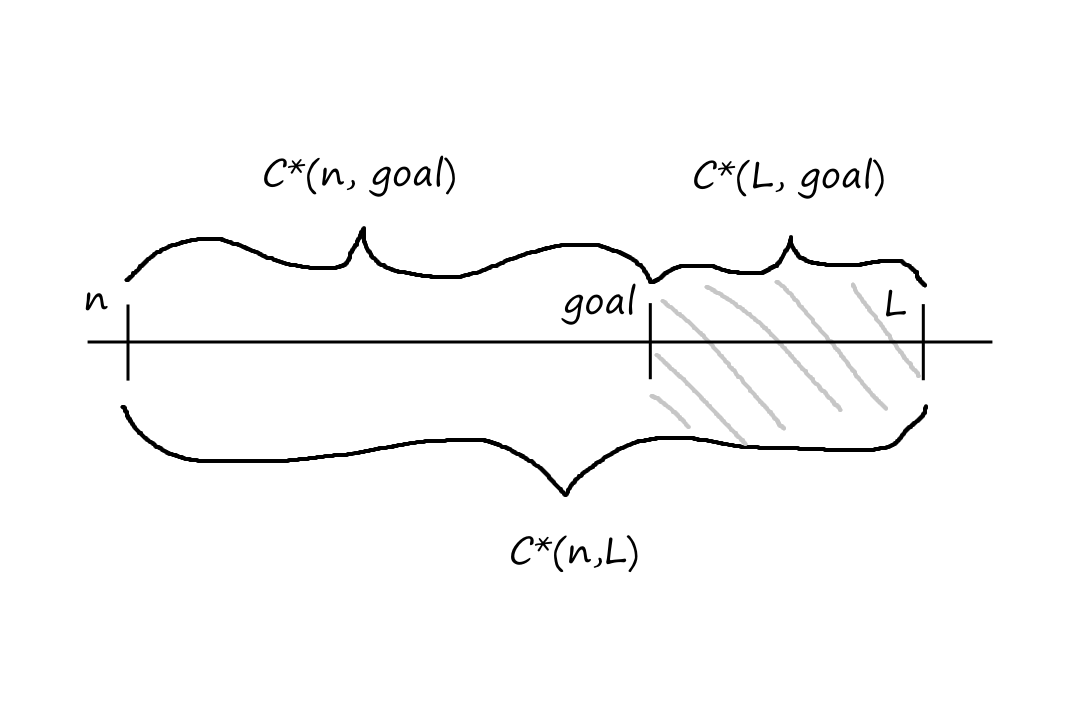

We can pick a few landmark points and precompute the optimal costs to these landmarks. More formally, for each landmark `L` and for each other vertex `v` in the graph, we compute and store `C^(**)(v,L)`. This may sound time-consuming, but it only needs to be done once and it will be infinitely useful.

So if we're trying to estimate the cost to the goal, we can look at the cost to get there from a landmark.

The heuristic would be

`h_L(n)=min_(L in text(landmarks)) C^(**)(n,L)+C^(**)(L,text(goal))`

This heuristic is inadmissible though. If the optimal path is through a landmark, then this heuristic will be exact. If it isn't, then it will overestimate the optimal cost (the exact cost of a non-optimal path is greater than the exact cost of an optimal path).

However, we can come up with an admissible heuristic:

`h_(DH)(n)=max_(L in text(landmarks)) abs(C^(**)(n,L)-C^(**)(L,text(goal)))`

This is a differential heuristic (differential because of the subtraction). To understand it intuitively, consider a landmark that is beyond the goal. If the goal happens to be on the optimal path to the landmark, then the path `n rarr text(goal) rarr L` minus the path `text(goal) rarr L` gives us the optimal path `n rarr text(goal)`.

If the goal is not on the optimal path to the landmark, then we would be subtracting by a larger value, resulting in a cost `lt` the optimal cost, i.e., the cost is underestimated.

Landmarks that are not beyond the goal will already underestimate the cost to the goal, but these landmarks are not likely to be considered since we're selecting the max.

A good way to decide landmark points are by looking at user requests for frequently requested landmarks.

Learning heuristics from experience

Sometimes, we can just develop an intuition for something if we do it many times. From the perspective of computers, they can pick up on patterns in past data and come up with a heuristic that seems to align with those patterns. A lot of this seems to depend on luck, so the heuristics are not expected to be admissible (much less consistent).

For example, the number of misplaced tiles might be helpful in predicting the actual cost. For further example, we might find that when there are `5` misplaced tiles, the average solution cost is around `14`.

Another feature that might be useful is the number of pairs of adjacent tiles that are not adjacent in the goal state.

If we have multiple features, we can combine them to generate a heuristic by using a linear combination of them:

`h(n)=c_1x_1(n)+c_2x_2(n)+...+c_kx_k(n)`

where each `x_i` is a feature

Machine learning would be used to figure out the best values for the constants.

Summary of Chapter 3

- A well-defined search problem consists of five parts: the initial state, a set of actions, a transition model that describes the results of those actions, a set of goal states, and an action cost function.

- The environment of the problem is represented by a state space graph. A path through the state space from the initial state to a goal state is a solution.

- Uninformed search methods have access only to the problem definition. Algorithms build a search tree to find a solution but differ based on which node they expand first.

- Best-first search expands the node with the lowest value given by an evaluation function.

- Breadth-first search expands the shallowest nodes first. It is complete, optimal for unit action costs, but has exponential space complexity.

- Uniform-cost search expands the node with the lowest path cost, `g(n)`, and is optimal for general action costs.

- Depth-first search expands the deepest unexpanded node first. It is neither complete nor optimal, but has linear space complexity. Depth-limited search adds a depth bound.

- Iterative deepening search calls depth-first search with increasing depth limits until a goal is found. It is complete when full cycle checking is done, optimal for unit action costs, has exponential time complexity, and has linear space complexity.

- Bidirectional search expands two frontiers, one around the initial state and one around the goal, stopping when the two frontiers meet.

- Informed search methods have access to a heuristic function `h(n)` that estimates the cost of a solution from `n`.

- Greedy best-first search expands the node with the lowest value of `h(n)`. It is not optimal but is often efficient.

- A* search expands the node with the lowest value of `f(n)=g(n)+h(n)`. It is complete and optimal (if `h(n)` is admissible), but uses up a lot of space.

- IDA* (iterative deepening A* search) is an iterative deepening version of A* that addresses the space complexity issue.

- RBFS (recursive best-first search) is a robust, optimal search algorithm that uses limited amounts of memory.

- Beam search puts a limit on the size of the frontier. This makes it incomplete and suboptimal, but it often finds reasonably good solutions and is generally faster than complete searches.

- Weighted A* search expands fewer nodes but sacrifices optimality.

- The performance of heuristic search algorithms depends on the quality of the heuristic function.

- Good heuristics can be obtained by relaxing the problem definition, by storing precomputed solution costs for subproblems in a pattern database, by defining landmarks, or by learning from experience.

Earlier, we assumed that the environments are episodic, single agent, fully observable, deterministic, static, discrete, and known. Now we'll start relaxing these constraints to get closer to the real world.

Local Search and Optimization Problems

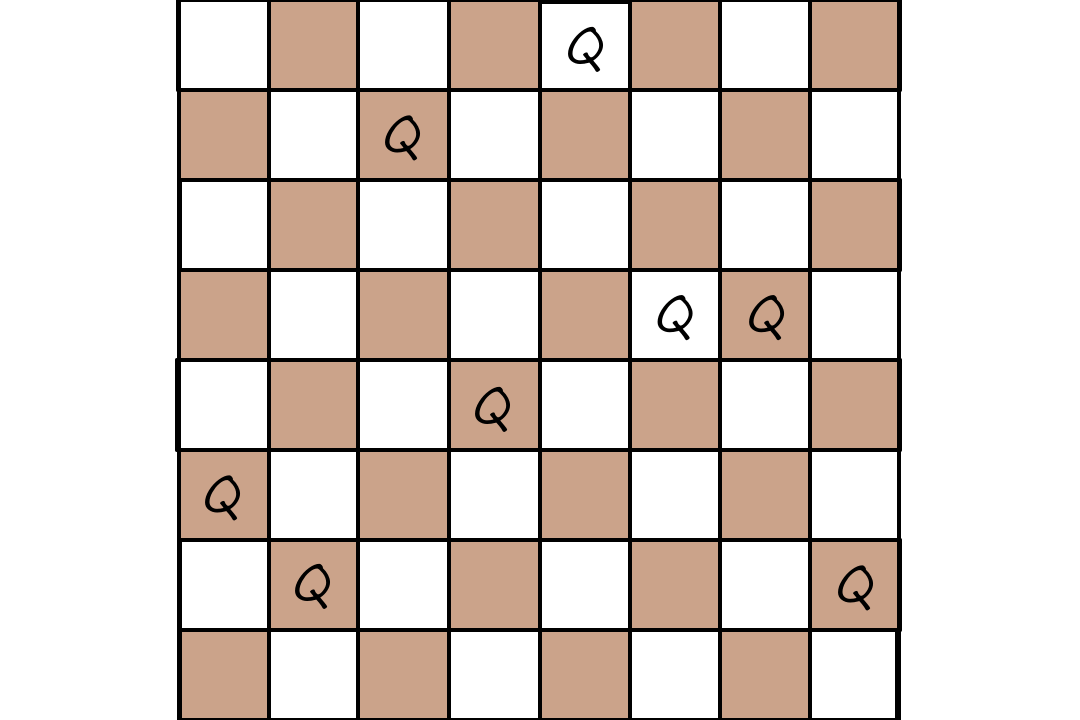

There are some search problems where we only care about what a goal state looks like (and we don't care what the path looks like). For example, the 8-queens problem is to find a valid placement of 8 queens such that no queens are attacking each other. Once we know what a solution looks like, it's trivial to reconstruct the steps to get there.

Local search algorithms work without keeping track of the paths or the states that have been reached. This means that they don't explore the whole search space systematically, i.e., they are not complete and may not find a solution. However, they use very little memory and can find reasonable solutions in problems with large or infinite state spaces.

Local search also works for optimization problems, where the goal is to find the best state according to some objective function. The objective function represents what we're trying to maximize/minimize.

Hill-climbing search

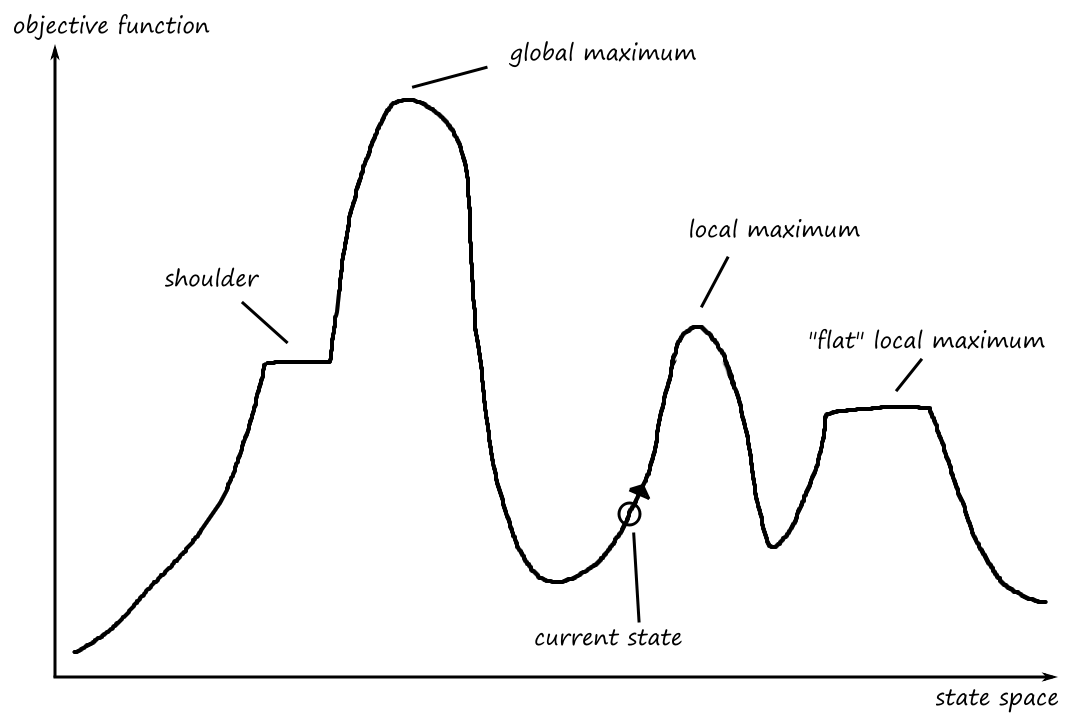

Let's take a look at a sample state-space landscape.

One goal might be to find the highest peak, which is the global maximum. This process is called hill climbing.

If the goal is to find the lowest valley (global minimum), then the process is called gradient descent.

The hill climbing search algorithm is pretty simple. It keeps moving forward until the next state it finds has a smaller value than the value of the current state.

function HILL-CLIMBING(problem)

current = problem.INITIAL

while true do

neighbor = a highest-valued successor state of current

if VALUE(neighbor) ≤ VALUE(current) then return current

current = neighbor

This particular version of hill climbing is steepest-ascent hill climbing because it heads in the direction of the steepest ascent.

Hill climbing is sometimes also called greedy local search because it gets a good neighbor state without thinking ahead about where to go next.

There are some situations where the hill climbing algorithm can get stuck before finding a solution. One situation is when a local maximum is reached. In this situation, every possible move results in a worser state.

Another situation is when a plateau is reached. A plateau can be a flat local maximum or a shoulder, where there is an uphill exit. In this situation, every possible move doesn't result in a better state (except if the plateau is a shoulder and we're right at the edge of the uphill exit).

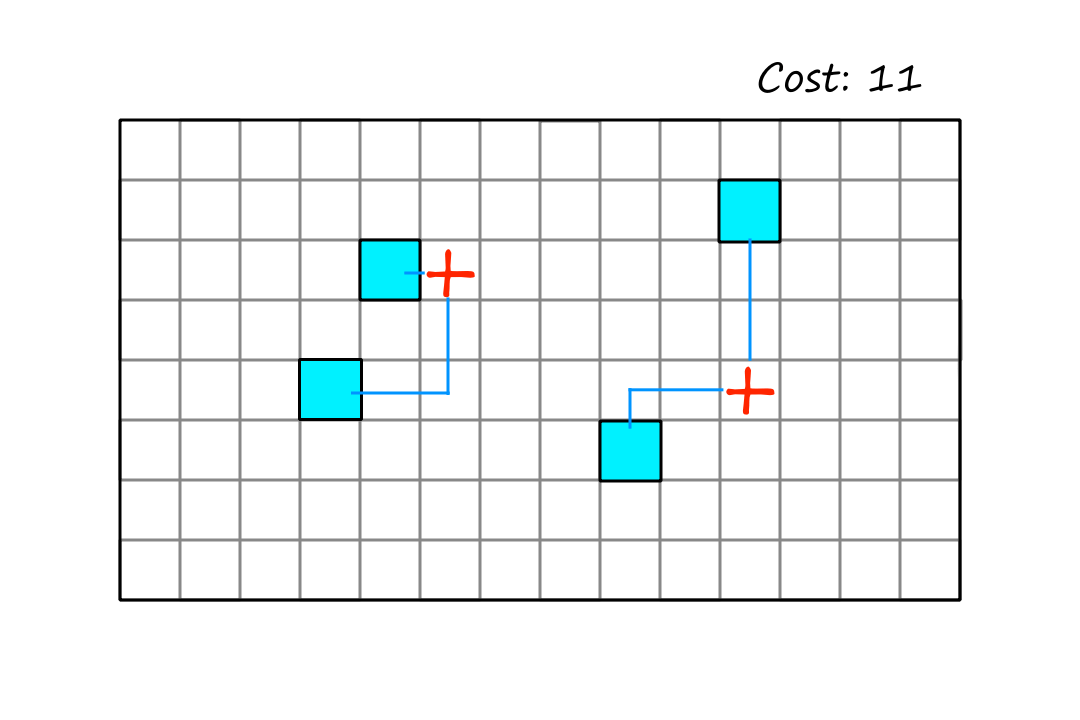

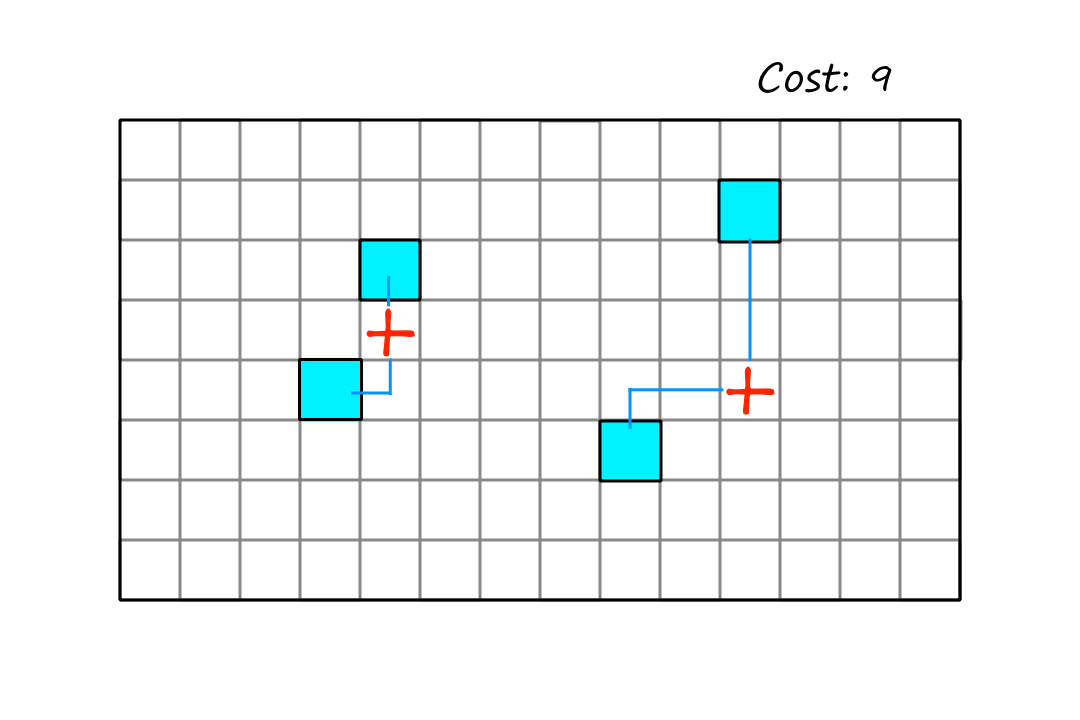

Let's look at the problem of positioning hospitals so that they are as close as possible to every house.

The cost is the sum of the distances from each house to the nearest hospital.

We can see that this is the best way to place the hospitals, but in order to achieve this position, the algorithm has to make the move below first.

Since this move doesn't result in a better state, the hill climbing algorithm won't consider that move. And as a result, the best configuration will never be reached. This is an example of a plateau.

One way to get unstuck is to allow sideways moves, i.e., to allow the algorithm to keep moving as long as the next state isn't worse. The idea is to hope that the plateau is a shoulder. This won't work if the plateau is a local maximum though, in which case we really are stuck.

Another way (kinda) is to just start over, but from a different starting point. This is random-restart hill climbing.

Interestingly, random-restart hill climbing is complete with probability `1` because it will eventually generate a goal state as the initial state.

Stochastic hill climbing chooses a random uphill move instead of the steepest uphill move.

First-choice hill climbing generates successors randomly until one of them is better than the current state.

Simulated annealing

Annealing is the process of hardening metals and glass by heating them to a high temperature and then gradually cooling them.

Sometimes, it's necessary to make worse moves that put us in a temporarily worse state so that we can get to a better position.

function SIMULATED-ANNEALING(problem, schedule) `e^(-DeltaE//T)`

current = problem.INITIAL

for t = 1 to infinity do

T = schedule(t)

if T = 0 then return current

next = a randomly selected successor of current

ΔE = VALUE(current) - VALUE(next)

if ΔE > 0 then current = next

else current = next only with probability

T represents the "temperature", corresponding to the temperature described in annealing.

In the simulated-annealing algorithm, we pick moves randomly instead of picking the best move. If the move puts us in a better situation, then we'll accept it. But if the move puts us in a worse position, then we'll consider it, depending on how bad of a move it is (measured by `DeltaE`). And as time goes on, we'll be less accepting of bad moves.

It's called simulated annealing because the algorithm starts with a high "temperature", meaning that there's a high level of randomness in exploring the search space. As time goes on, the "temperature" gets lower, reducing the random moves.

Local beam search

Local beam search starts with `k` randomly generated states, generates all their successors (if one of them is a goal, then we're done), selects the best `k` successors, and repeats.

Local beam search kinda looks like running `k` random-restart searches in parallel. However, it actually isn't. All random-restart searches are independent of each other, while each local beam search thread passes information to each other.

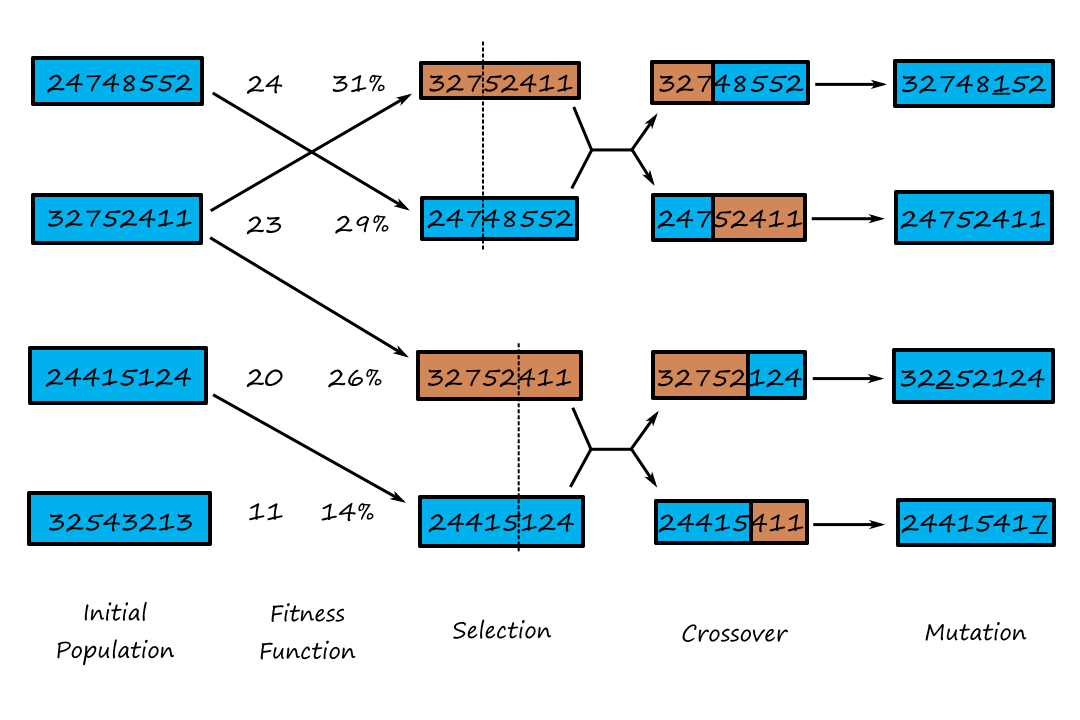

Evolutionary algorithms

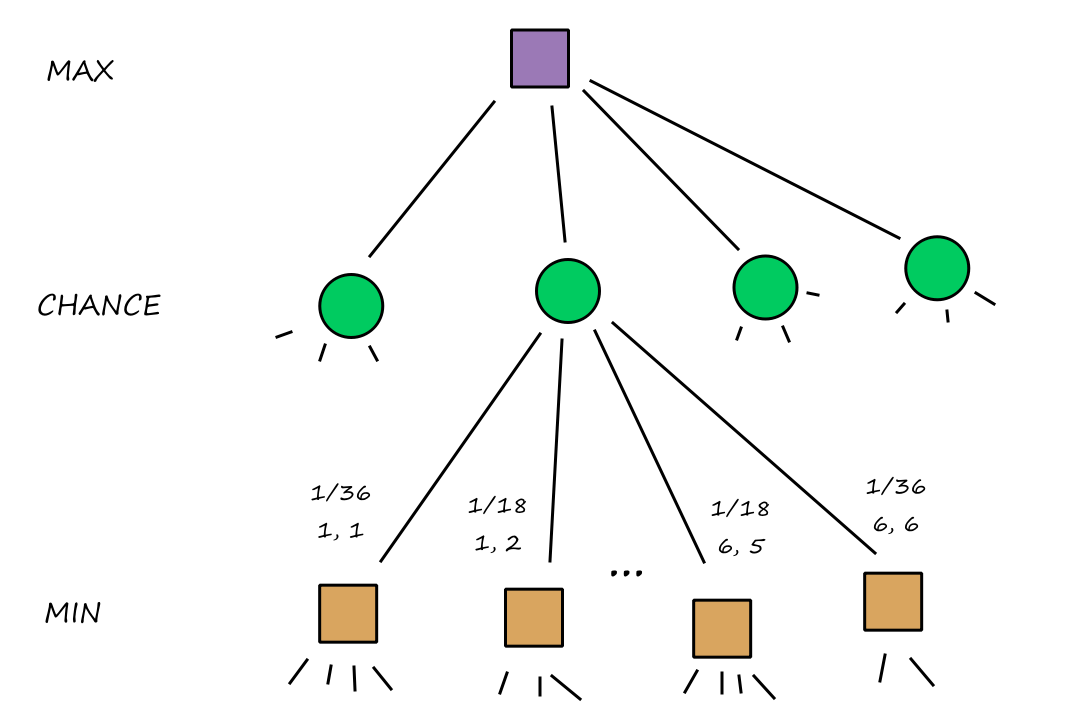

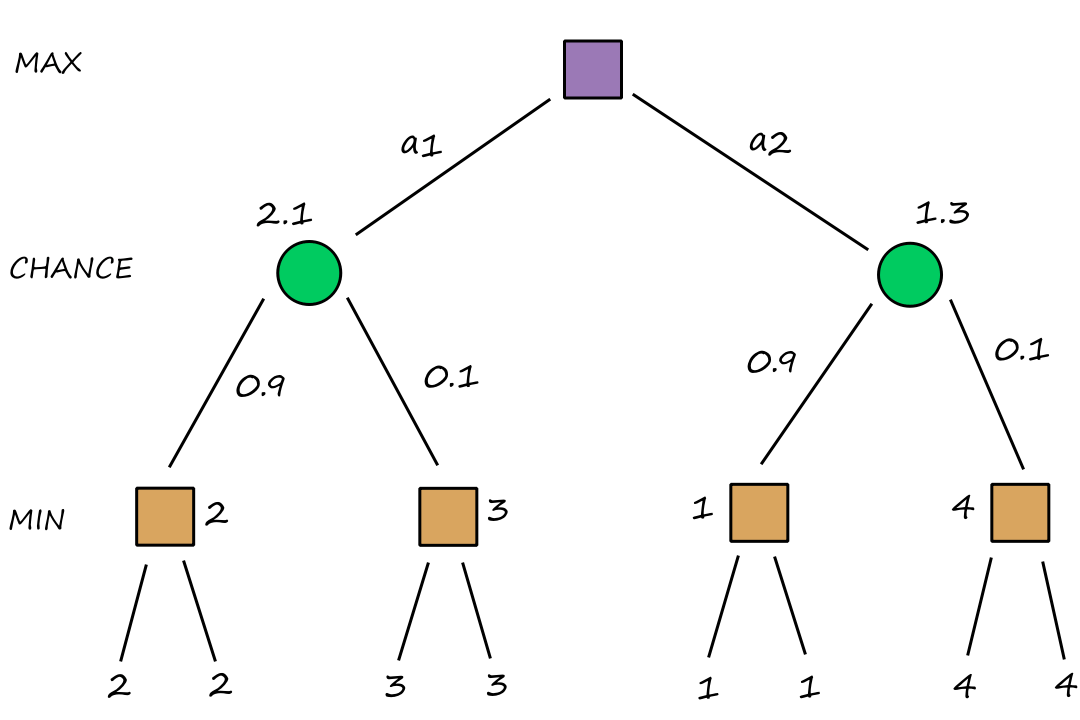

Evolutionary algorithms are based on the ideas of natural selection in biology. There is a population of individuals (states), and the fittest (highest valued) individuals produce offspring (successor states). And just like in real life, the successor states contain properties of the parent states that produced them.

The process of combining the parent states to produce successor states is called recombination. One common way of implementing this is to split each parent at a crossover point and use the four resulting pieces to form two children.

The numbers in the fitness function are sample fitness scores assigned by the fitness function to each state. The fitness scores are then converted to a probability.

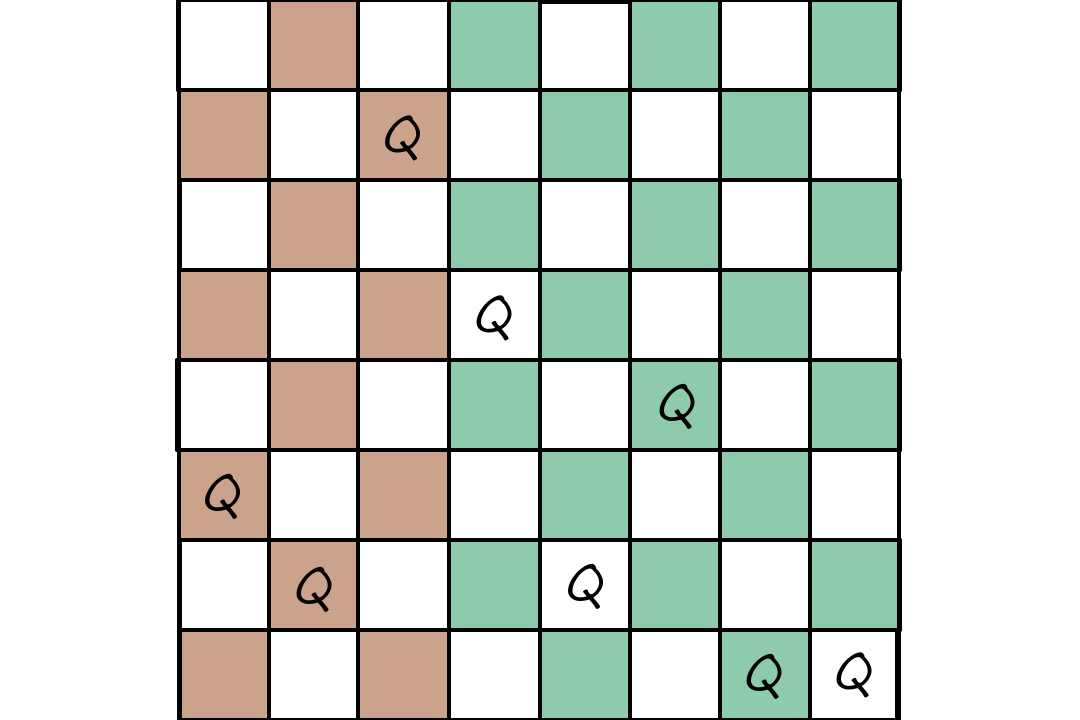

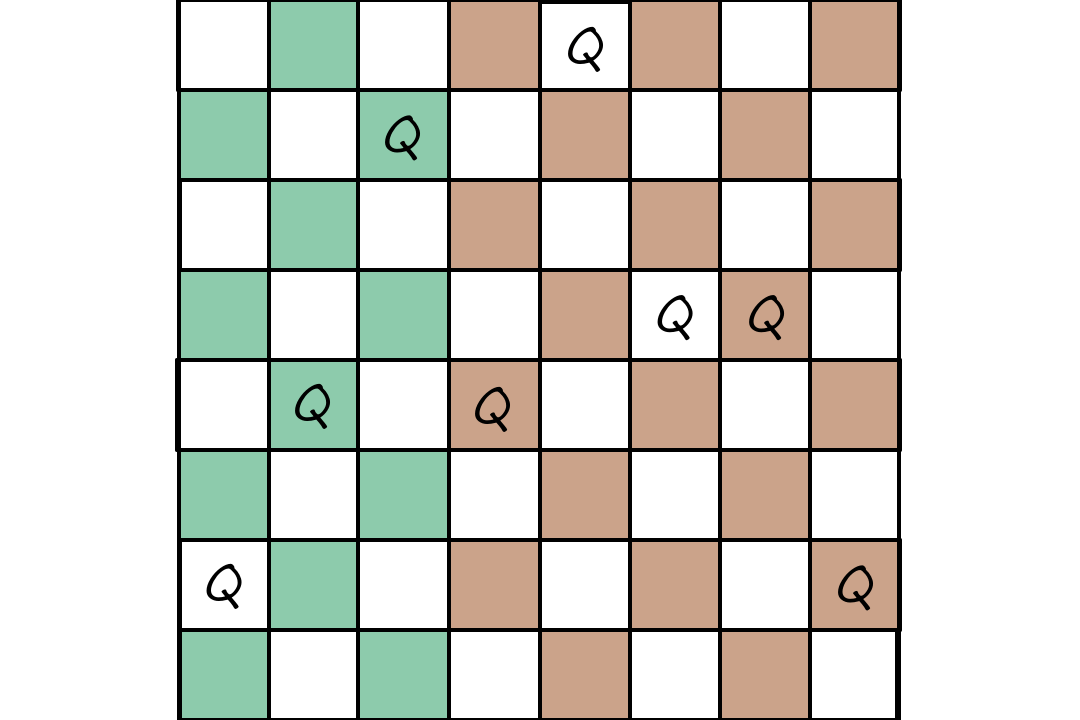

Below is an example of how an evolutionary algorithm can be used for the 8-queens problem. We can encode each queen's positions as a number from `1-8`.

`327|52411`

`247|48552`

`327|48552`

If we use nonattacking queens as the fitness function, then the sample fitness scores are the number of nonattacking pairs of queens.

Local Search in Continuous Spaces

Most real-world problems take place in a continuous environment, not in a discrete one like the examples we've seen so far. A continuous environment can have a continuous action space (e.g., a robotic arm moving anywhere between `0` and `180` degrees), a continuous state space (e.g., the current position of a robot in a room), or both.

A continuous action space has an infinite branching factor, so most of the algorithms we've seen so far won't work (except for first-choice hill climbing and simulated annealing).

One way to deal with a continuous state space is to discretize it.

For example, suppose we want to place three new airports in an area such that each city is as close as possible to its nearest airport. The state space is defined by the coordinates of the three airports: `(x_1, y_1),(x_2, y_2),(x_3, y_3)`. To discretize this, we could predefine fixed points on a grid and only allow the airports to be at those fixed points.