Numerical Analysis: A Nonlinear Story

Shortcut to this page: ntrllog.netlify.app/nonlinear

Notes provided by Professor Li-Tien Cheng (UCSD)

This is the nonlinear version of the linear algebra story. The linear story was looking at how to solve problems of the form Ax=b i.e. Ax-b=0, which is linear. This story looks at how to solve problems of the form f(x)=g(x) where f(x),g(x) are nonlinear. For example, they could be x2,x3,cos(x),ex,...

A Numbers Store Stores Numbers

How does a computer store numbers? Vaguely, we could describe it as putting each digit in a space somewhere in the computer's memory. So 100 would need 3 spaces*. This seems like an easy and obvious idea until we get to numbers like π, which has an infinite number of digits. Does this mean we need an infinite amount of memory to store π? But computers don't have infinite memory, so what happens then?

*Computers work in binary, so things are a little bit different than this.

Computers store a number by keeping as much of it as they can and then throwing away the rest. So if a computer can only store at most 8 digits, then it would store π as 3.1415926. But complications arise when we're dealing with really big or really small numbers. For example, 0.00000000000000045 would be stored as 0.0000000, which is just 0. This is a problem because 0.00000000000000045 isn't equal to 0, but it would be treated as such. A similar problem occurs for 1000000000000000, which would be stored as 10000000, which is much much smaller.

This suggests needing a smarter way to represent the numbers. Enter scientific notation. With scientific notation, we can represent 0.00000000000000045 as 0.45×10-16 and 1000000000000000 as 1×1015. Then we would only need to store the 45 and the -16 for 0.45×10-16. And the 1 and the 15 for 1×1015.

In these examples, the 45 and the 1 are called the "mantissa" and the -16 and the 15 are called the "exponents".

Single-precision computers use 32 bits (4 bytes) to store a number:

1 bit for the sign (to indicate whether the number is positive or negative)

8 bits for the exponent

23 bits for the mantissa

Double-precision computers use 64 bits (8 bytes) to store a number:

1 bit for the sign

11 bits for the exponent

52 bits for the mantissa

Scientific notation helps make it easier to store numbers, but some complications still pop up. Consider a computer that can only store at most 3 digits in the mantissa. Let's say we wanted to store the number 0.002146. In scientific notation, it would be 0.2146×10-2, which has 4 digits in the mantissa. Our computer can only store 3 digits in the mantissa, so how should it be stored? There are 2 options: chopping and rounding.

Under chopping, 0.2146×10-2 would become 0.214×10-2. As the name implies, the rest of the digits (after the 3rd) are "chopped off". Under rounding, 0.2146×10-2 would become 0.215×10-2.

From this, we can see that what the computer stores isn't always going to be exactly equal to the original number. The version of the number that the computer stores can be denoted by fl(x), where x is the original number.

So the difference between the original number and the stored number is going to be the error. There are 2 types of errors:

absolute error =|x-fl(x)|

relative error =|x-fl(x)||x|

Errors are bad, but unavoidable. So it would be helpful to know how bad the relative error could be for any number we want to store.

Let's say we want to store the number x (which is not 0) on an n-digit chopping computer (a computer that stores at most n digits and uses the chopping method). Written in scientific notation:

x=±0.d1 d2 d3 ...×10a

where di is the ith digit of the mantissa of x

Since this computer uses chopping, it would only store the first n digits of the mantissa.

fl(x)=±0.d1 d2 d3 ... dn×10a

We want to compute the relative error, so we need |x-fl(x)| and |x|.

|x-fl(x)|=0.00...0⏟n dn+1 dn+2 ...×10a

|x|=0.d1 d2 d3 ...×10a

relative error = |x-fl(x)||x|=0.n⏞00...0 dn+1 dn+2 ...×10a0.d1 d2 d3 ...×10a

=0.n⏞00...0 dn+1 dn+2 ...0.d1 d2 d3 ...

To find out the worst our error could be, we want to make it as large as possible. We do that by making the numerator as big as possible (by letting dn+1=dn+2=...=9) and making the denominator as small as possible (by letting d1=1 * and d2=d3=...=0).

*d1 cannot be 0 because of the way numbers are written in scientific notation. We wouldn't write something like 0.083×103; it would be 0.83×102.

≤0.n-1⏞00...01*0.100

=0.n-2⏞0...01

=10-(n-1)

=101-n

*0.999...=1

So the relative error — which we can denote by ε — can be at most 101-n. This is the largest error possible for an n-digit chopping computer.

For an n-digit rounding computer, ε=12⋅101-n

Let x=0.d1 d2 ...×10a be a real number. Suppose dn+1<5. Then fl(x)=0.d1 d2 ...dn×10a

relative error = |x-fl(x)||x|

=0.n⏞0...0 dn+1 dn+2 ...×10a0.d1 d2 ...×10a

=0.n⏞0...0 dn+1 dn+2 ...0.d1 d2 ...

≤0.n⏞0...049...0.10...

=0.n⏞0...050.1

=0.n-1⏞0...05

=0.5×10-(n-1)

=12×101-n

Now suppose 5≤dn+1≤9. Then fl(x)=0.d1 d2 ... dn×10a+0.n-1⏞0...01×10a

relative error = |x-fl(x)||x|

=|0.d1 d2 ... dn dn+1 ...×10a-0.d1 ... dn×10a-0.n-1⏞0...01×10a||0.d1 d2 ...×10a|

=|0.n⏞0...0 dn+1 ...-0.n-1⏞0...01||0.d1 d2 ...|*

≤0.n⏞0...050.1

=0.n-1⏞0...05

=0.5×10-(n-1)

=12×101-n

* Since 5≤dn+1≤9, we have:

|0.n⏞0...05-0.n-1⏞0...01|=0.n⏞0...05

|0.n⏞0...06-0.n-1⏞0...01|=0.n⏞0...04

|0.n⏞0...07-0.n-1⏞0...01|=0.n⏞0...03

|0.n⏞0...08-0.n-1⏞0...01|=0.n⏞0...02

|0.n⏞0...09-0.n-1⏞0...01|=0.n⏞0...01

So dn+1=5 (and dn+2=dn+3=...=0) gives us the biggest error possible.

The story thus far: When we're working with really small/large numbers, computers won't be able to store them exactly as they are. Computers get around this by storing them using scientific notation. But even then, some numbers have so many digits (e.g. π) that computers still can't store them exactly as they are. So computers either round the number or chop the number so there are less digits to store. This means that computers store a modified version of the number (denoted fl(x)).

Errors in Arithmetic Operations

Let's suppose we wanted to add 0.42312 and 0.63546 on a 3-digit rounding computer. First, the computer would store them as 0.423 and 0.635. Then, the computer would add those two to get 1.058*. Since this is a 3-digit rounding computer, it would say that the answer is 1.06. In math notation:

fl(fl(0.42312)+fl(0.63546))=fl(0.423+0.635)=fl(1.058)=1.06

Notice how this differs from the actual answer, which is 1.05858.

* This is a 4-digit number, but the computer can only store 3-digit numbers. It turns out that computers temporarily store results of arithmetic operations using more digits if needed.

For longer strings of operations, the computer performs the operations pairwise. For example:

x⋅y⋅z=(x⋅y)⋅z

the computer does x⋅y first, then multiplies that with z

fl(fl(fl(x)⋅fl(y))⋅fl(z))

We've established that there are errors in storing numbers. And performing operations with "wrong" numbers will lead to numbers that are "more wrong". But how much error could there be when doing arithmetic operations?

Let's suppose we wanted to multiply two numbers, x and y. In the computer, they're stored as fl(x) and fl(y). Well, we can write:

fl(x)=x(1+δ1)

fl(y)=y(1+δ2)

where |δ1| and |δ2| are the relative errors*

In words: fl(some number) is the actual value with some error attached to it.

* Recall that the formula for relative error is |fl(x)-x||x|

fl(x)=x(1+δ1)

fl(x)=x+xδ1

fl(x)-x=xδ1

fl(x)-xx=δ1

|fl(x)-x||x|=|δ1|

so |δ1| is the relative error (similarly for |δ2|)

So multiplying those two values would result in:

fl(fl(x)⋅fl(y))

fl(x(1+δ1)⋅y(1+δ2))

(x(1+δ1)⋅y(1+δ2))(1+δ3)

xy(1+δ)

where |δ| is the relative error

and (1+δ)=(1+δ1)(1+δ2)(1+δ3)

=1+δ1+δ2+δ3+δ1δ2+δ1δ3+δ2δ3+δ1δ2δ3

So how big is the relative error?

1+δ=1+δ1+δ2+δ3+δ1δ2+δ1δ3+δ2δ3+δ1δ2δ3

δ=δ1+δ2+δ3+δ1δ2+δ1δ3+δ2δ3+δ1δ2δ3

|δ|=|δ1+δ2+δ3+δ1δ2+δ1δ3+δ2δ3+δ1δ2δ3|

|δ|≤|δ1|+|δ2|+|δ3|+|δ1δ2|+|δ1δ3|+|δ2δ3|+|δ1δ2δ3| (by triangle inequality)

In the previous section, we defined ε to be the largest possible relative error. So all of these relative errors are smaller than ε, i.e. |δ1|,|δ2|,|δ3|≤ε

|δ|≤|δ1|+|δ2|+|δ3|+|δ1δ2|+|δ1δ3|+|δ2δ3|+|δ1δ2δ3|

|δ|≤ε+ε+ε+ε2+ε2+ε2+ε3

|δ|≤3ε+3ε2+ε3

|δ|≤3ε+O(ε2)=O(ε)

So multiplying two numbers will lead to error, but it will be at most 3 times as bad as the worst error in storing a number.

(x⋅y)⋅z gets relative error |δ|≤5ε+O(ε2)

((x1⋅x2)⋅x3)⋅x4⋅...⋅xm gets relative error |δ|≤(2m-1)ε+O(ε2)

Now, let's suppose we wanted to subtract two numbers x and y.

fl(fl(x)-fl(y))=(x(1+δ1)-y(1+δ2))(1+δ3)

(x-y+xδ1-yδ2)(1+δ3)

(x-y)(1+xδ1x-y-yδ2x-y)(1+δ3)

(x-y)(1+δ)

where (1+δ)=(1+xδ1x-y-yδ2x-y)(1+δ3)

⇒δ=δ3+xδ1x-y-yδ2x-y+δ3(xδ1x-y-yδ2x-y)

But |xx-y| and |yx-y| can be very large when x is close to y. When this happens, |δ| will be very large. This is called catastrophic cancellation.

Example: For n=5 (a 5-digit rounding computer), let's subtract x=1 and y=1.00001. (The exact solution is 1-1.00001=-0.00001)

fl(1)=1 and fl(1.00001)=1.0000=1

So fl(1)-fl(1.00001)=0

Relative error = |exact-approximate||exact|=|-0.00001-0||-0.00001|=1

This means there is 100% error.

1) Errors become slightly larger every arithmetic operation.

Because of this, we want to minimize the number of arithmetic operations whenever possible. For example, 1+x+x2+x3 takes 6 operations to do (3 additions, 3 multiplications). However, we could rewrite 1+x+x2+x3 as 1+x(1+x(1+x)), which only requires 5 operations (3 additions, 2 multiplications). The number of operations saved writing polynomials in nested form increases as the number of polynomials increases.

2) Catastrophic cancellation can occur when subtracting numbers.

Consider the situation of trying to find the roots of a quadratic equation ax2+bx+c=0. Let's suppose b is positive and a, c are small so that |-4ac| is small. Then x=-b+√b2-4ac2a≈-b+√b22a=-b+b2a=0*, which leads to catastrophic cancellation. We could avoid this by rewriting x by multiplying it by 1.

x=-b+√b2-4ac2a⋅-b-√b2-4ac-b-√b2-4ac=b2-(b2-4ac)2a(-b-√b2-4ac)=2c-b-√b2-4ac. In this case, catastrophic cancellation is avoided.

*It only equals 0 when the number of digits of b exceeds the number of digits the computer can store. After all, √b2-4ac≈√b2.

Catastrophic cancellation only occurs when the number of digits exceeds the number of digits of a number a computer can store. This is why everything is fine when doing something like 5-4.99999.

The story thus far: Computers store modified versions of numbers. This means that the number the computer stores is "wrong". When we work with "wrong" numbers (e.g. add, multiply them), we're gonna get an answer that is even "more wrong". For multiplication, things aren't too bad. But for subtraction, we can get undesired results when the numbers we're subtracting are really close to each other.

Solving Nonlinear Equations: Roots

How do we solve something like cos(x)=x? Let's say we didn't have a calculator, so we can't find the exact answer. How can we find a (good) approximation? In general, how do we find an approximation for f(x)=g(x)?

Getting to the Root of the Problem

One way is to change the equation to the form f(x)=0. Then, finding the roots of f(x) will be the solutions. Of course, it isn't going to be obvious what the roots are just from looking at something like cos(x)-x=0. But we can try to trap a root in a small interval (i.e. find a small interval between which a root lies).

This can be done with an iterative algorithm. We start with a root trapped in a large interval and reduce the size of the interval at every step. But how do we find an interval in which there is a root? Without knowing this, we can't start the algorithm!

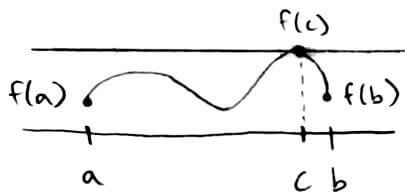

We do this by using the Intermediate Value Theorem, which says if f is a continuous function in [a,b], then f assumes every value between f(a) and f(b).

In this example, f achieves every value between the dotted lines between a and b.

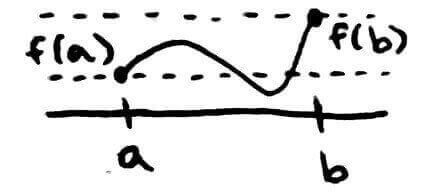

So how does this help us find roots? We can apply it to the situation where f(a) and f(b) have different signs (e.g. one is positive and the other is negative). If f(a) and f(b) have different signs, then f has a root in [a,b].

Again, f achieves every value between the dotted lines between a and b. Here, the x-axis lies between the dotted lines, so it is guaranteed that f will cross the x-axis, i.e. f(c)=0 at some point c between a and b.

This suggests that we start the algorithm by picking a0,b0 such that f(a0),f(b0) have different signs (e.g. f(a0) is positive and f(b0) is negative).

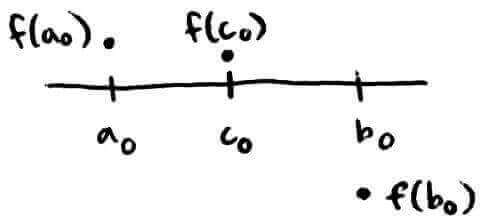

Now we have to reduce the size of the interval. We can split it in half; then, the root has to either be on the left half or the right half. So let's split it at c0 (the midpoint) and suppose that f(c0) is positive.

Now we have two choices for what our new interval [a1,b1] could be: [a0,c0] or [c0,b0]. The IVT doesn't tell us if there's a root between a0 and c0, but it does guarantee that there is a root between c0 and b0. So the new interval [a1,b1] should be [c0,b0].

And this process of dividing the interval in half repeats until the interval is small enough. This process is called the bisection method.

Error of the Bisection Method

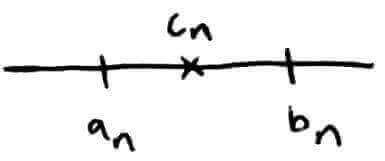

The thing is, we're looking for a number, but we end up with an interval (two numbers). So how do we get our answer? We could just pick some number between that interval. At this point, the interval is really small, so our approximation should be close to the actual solution. But how much error is there?

Let's pick some number dn and use that as our approximation. In this picture, there would be the most error if the actual solution was at bn. In general,

|exact-approximate|=|r-dn|≤|bn-an|

where r is the exact solution

This is because the worst error would occur when the approximation we pick is an and the actual solution is bn (or vice versa).

We usually pick as our approximation the midpoint of an and bn. That actually gives us a better approximation because the most error we could have then is half of the previous error. (This would be when the exact solution is either at an or at bn.)

|exact-approximate|=|r-cn|≤|bn-an|2

In general, |r-dn|≤max(|dn-an|,|dn-bn|).

So to summarize, the algorithm for finding a root of a polynomial is:

Start with an interval [a0,b0] where f(a0) and f(b0) have different signs

Cut the interval in half at c0, which is the midpoint of [a0,b0]

Pick a new (smaller) interval such that either f(a0) and f(c0) have different signs or f(c0) and f(b0) have different signs

Repeat the steps for that new (smaller) interval

Convergence of the Bisection Method

The bisection method generates a sequence of approximations:

{cn}∞n=0

where cn=an+bn2

If the actual solution is r, then what we want to say is that limn→∞cn=r. In words, the more times we do the algorithm, the more approximations we will get and the closer those approximations will be to the actual solution r.

Another way to say that is that the difference (i.e. error) between the approximation we get and the actual solution gets closer and closer to 0. Generally, it might not be guaranteed to happen, but for the bisection method, it is true that the more times we do the algorithm, the closer it will get to 0.

Previously, we found that:

|r-cn|≤|bn-an|2

Well that means:

|r-c0|≤|b0-a0|2.

and

|r-c1|≤|b1-a1|2.

But what is |b1-a1|? Thinking back to the steps of the algorithm, [a1,b1] represented the new interval after splitting [a0,b0] in half. So [a1,b1] is really half of [a0,b0]. Which means

|b1-a1|=|b0-a0|2.

Putting all that together:

|r-c1|≤|b1-a1|2=|b0-a0|22=122(b0-a0)

Generalizing that idea, we can see that:

|r-c0|≤121(b0-a0)

|r-c1|≤122(b0-a0)

|r-c2|≤123(b0-a0)

|r-c3|≤124(b0-a0)

⋮

|r-cn|≤12n+1(b0-a0)

Now, as n gets larger and larger (i.e. as we do more steps of the algorithm), the right-hand side gets smaller and smaller. When n goes to ∞, the right-hand side goes to 0.

This proves that what we want to say is true: limn→∞|r-cn|=0⇒limn→∞cn=r

When this happens, we say that the bisection method converges.

So in theory, the error gets really small when n is large. But how large should n be? How many times should we keep dividing intervals in half until we get a satisfactory answer?

If we know that the worst error is 12n+1(b0-a0), then we can decide how small the error could be.

Note that the error bound is dependent only on a0, b0, and n. We can already figure out how much error there will be without even knowing what f(x) is.

Ex: Let's say the starting interval is [-1,3] and that we want the error to be no bigger than 10-12.

3-(-1)2n+1≤10-12

42n+1≤11012

4⋅1012≤2n+1

log2(4⋅1012)≤n+1

n≥log2(4⋅1012)-1≈41

So if we do the bisection method for 41 steps, then the approximation we end up with will have an error of no more than 10-12.

There are other procedures for deciding when to stop without using error bounds.

We could stop when |cn-cn-1| is small enough. In this case, we would be getting approximations that are unchanging because the error is so small. For example, if we got {...,0.98757,0.98754,0.98751,0.98751,0.98751,0.98751}, then we could be pretty sure that 0.98751 is a good approximation.

We could stop when |f(cn)| is small enough. In this case, |f(cn)| would be really close to 0, which is what we wanted in the first place (because we're looking for roots).

We could stop when the algorithm takes too long or is not converging. This could happen for general algorithms (not the bisection method).

Matlab code for bisection method

function [c] = bisection(a,b,n) c = (a+b)/2; for step = 1:n % f is defined as another function in another .m file if f(a)*f(c) <= 0 b = c; else a = c; end c = (a+b)/2; end end

Speed of the Bisection Method

Since we're dealing with nonlinear equations, we can say that computing f(x) is relatively hard (i.e. time-consuming). Of course, a computer can do it almost instantly, but it takes more time to compute powers and roots than additions and divisions. So we can measure the speed of the bisection method by counting how many times we compute f(x).

In the code above, we're calcuating f(a) and f(c) every iteration. That's 2 costly operations. It turns out we can reduce it to only 1 costly operation per iteration if we store their values.

function [c] = bisectionOptimized(a,b,n) c = (a+b)/2; fa = f(a); for step = 1:n fc = f(c); if fa*fc <= 0 b = c; else a = c; fa = fc; end c = (a+b)/2; end end

We only need to calculate f(a) once at the beginning to use IVT. After that, a becomes c. At this point, we've also already calculated f(c), so when we're trying to find new intervals, we don't need to calculate f(c) again.

Let's look at the bisection method without the code. Let's assume that to find c0, we have to first determine whether f(a0) and f(b0) have opposite signs. Then to find c1 we have to compute f(c0) and so on.

c0: 2 total function evaluations so far (f(a0) and f(b0))

c1: 3 total function evaluations so far (f(c0) plus previous)

c2: 4 total function evaluations so far (f(c1) plus previous)

⋮

cn: n+2 function evaluations

The 2 function evaluations come from using the IVT to ensure that the root is in between the intervals.

Some details regarding the bisection method:

If f(a) and f(c) are both really large, then it's possible that multiplying them together results in a number that is too large for the computer to store. Then, we would get errors like we saw in the previous section. To avoid this, we could define a function called sign(f(x))={1iff(x)>0-1iff(x)<00iff(x)=0. Then we could check if sign(f(a))≠sign(f(c)) instead of multiplying them.

It might sound like a good idea to check if f(cn)=0 to stop the algorithm early. That would mean that in doing our algorithm, we hit on the exact root. The chances of that happening are virtually 0 (imagine marking √2 exactly on a a number line). The bisection method will converge anyway, so checking that is an extra costly operation.

Like with the first point, it's possible that when calculating c=a+b2, adding a and b results in a number too large to store. We can avoid this by calculating c=a+b-a2. Note that a+b-a2=a+b2.

The story thus far: Now we're looking at how to actually solve problems like f(x)=g(x). One way is to rearrange the equation so that we get f(x)-g(x)=0 (for simplicity, we generalize it to f(x)=0). Then the task is to find the roots/zeros of f(x). One way to find a root is to start with a big interval in which the root is guaranteed to lie. Then we repeatedly make the interval smaller and smaller until it's really close to the root.

Speed of bisection method: 1 function evaluation per iteration

Convergence of bisection method: en=12n+1(b0-a0)

Straight to the Point

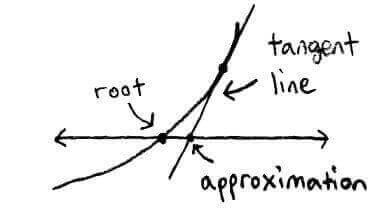

Another method we could use is Newton's method. In this method, we use tangent lines to approximate the root.

The idea is to start at an initial guess x0 and repeatedly draw tangent lines until we (hopefully) get close to the root.

Using the point-slope formula for the equation of a line, we can get the equation of the tangent line at x0:

y-f(x0)=f′(x0)(x-x0)

y=f′(x0)(x-x0)+f(x0)

Our goal is to get y=0, so we have:

0=f′(x0)(x-x0)+f(x0)

Solving for x, we get:

x=x0-f(x0)f′(x0)

This is the intuition behind the iterative formula for Newton's method:

xn+1=xn-f(xn)f′(xn)

There are some cases where Newton's method won't work.

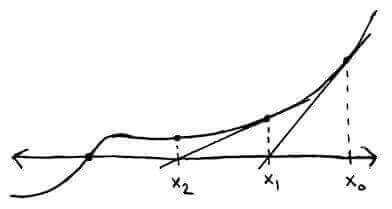

Here, each approximation results in a tangent line that just keeps going to the right.

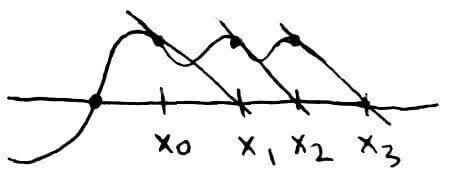

Here, the approximations will just keep bouncing back and forth between x0 and x1.

Here, the tangent line doesn't cross the x-axis, so we can't get an approximation.

So unlike the bisection method, Newton's method won't always work. However, it's still worth looking at Newton's method, because it turns out that it is generally faster than the bisection method.

Error of Newton's Method

A Taylor series represents a function as an infinite sum.

f(x)=f(a)+f′(a)1!(x-a)+f′′(a)2!(x-a)2+f′′′(a)3!(x-a)3+...

f(x)=∞∑n=0fn(a)n!(x-a)n

To look at the error, we need an equation with |xn+1-r|. Since xn+1=xn-f(xn)f′(xn), we need xn, f(xn), and f′(xn) to get xn+1. We can get those 3 parts by looking at the Taylor series expansion of f.

Notice that the first 2 terms of the Taylor series are the equation of a line for f(x) (and thus, are a linear approximation of f(x)). Since we're looking at approximations using lines, we'll consider the first 2 terms and collect the rest of the terms as some error.

f(x)=f(xn)+f′(xn)(x-xn)+f′′(ξn)2(x-xn)2

for some ξn between x and xn

Since we're interested in finding the root of f(x), we can use x=r where r is a root to get:

f(r)=f(xn)+f′(xn)(r-xn)+f′′(ξn)2(r-xn)2

0=f(xn)+f′(xn)(r-xn)+f′′(ξn)2(r-xn)2

r=xn-f(xn)f′(xn)-f′′(ξn)2f′(xn)(r-xn)2

Well, xn-f(xn)f′(xn)=xn+1 from the iterative formula for Newton's method, so we have:

r=xn+1-f′′(ξn)2f′(xn)(r-xn)2

xn+1-r=f′′(ξn)2f′(xn)(r-xn)2

Then, we can let en=xn-r be the error between the approximation and the root (so en+1=xn+1-r).

en+1=f′′(ξn)2f′(xn)(en)2

This means that the error between our (n+1)st approximation and the root is roughly equal to the square of the error between the nth approximation and the root. So if the error was small for the nth approximation, the error will be even smaller for the (n+1)st approximation.

If f′(xn)=0 (the slope of the tangent line is horizontal), then we get a 0 in the denominator. This explains one of the cases where Newton's method doesn't work mentioned earlier.

If f′′(ξn)=∞ (the second derivative of f doesn't exist), then we get really large error.

Speed of Newton's Method

The iterative formula for Newton's method is xn+1=xn-f(xn)f′(xn). From this, we can see that it requires 2 function evaluations per iteration. The bisection method required 2 function evaluations at the beginning, but then it only required 1 function evaluation per iteration. This means that Newton's method does roughly twice as many function evaluations per iteration as the bisection method. Doing more function evaluations means that Newton's method is slower than the bisection method.

Convergence of Newton's Method

But maybe Newton's method will converge faster than the bisection method will? Let's look back at the error equation en+1=f′′(ξn)2f′(xn)(en)2. If we start with an initial guess close enough to r, then f′′(ξn)2f′(xn)≈f′′(r)2f′(r), which is a constant, so we can call it c. Then the error for Newton's method can be represented as en+1≈c(en)2.

Let's say the error of the nth approximation was 0.001.

| Bisection Method | Newton's Method | |

| Error Formula | en+1=12en | en+1≈c(en)2 |

| en | 0.001 | 0.001 |

| en+1 | 0.0005 | c⋅0.000001 |

From this, we can see that Newton's method (generally) converges faster than the bisection method. The convergence of Newton's method is described as "quadratic convergence" because of the power 2.

But this doesn't mean that Newton's method always converges faster than the bisection method. It's possible that Newton's method won't converge at all (when f′=0 or f′′ doesn't exist). However, there are some theorems that guarantee the convergence of Newton's method.

Let's suppose f′(x),f′′(x) are continuous around x=r and f′(r)≠0. Then it turns out that if we start with an initial guess close enough to r, we can get xn→r (i.e. the nth approximation will approach r).

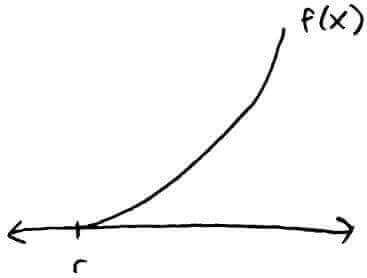

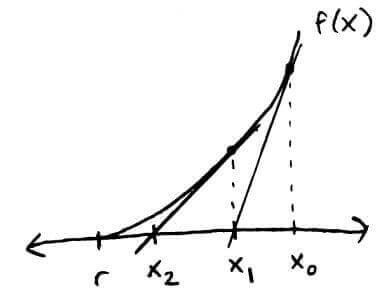

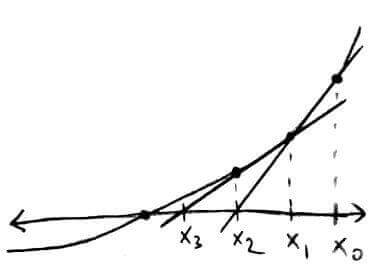

Another convergence result is that if f′′(x)>0 (f is concave up) in the interval [r,∞) and f′(x)>0 (f is increasing) in the interval [r,∞), then x0∈[r,∞) implies Newton's method will converge to r.

This is an example where f′′>0 and f′>0.

We can see that if we continue, we will eventually hit r. This is what the second convergence result is saying. It's important to note that we have to start at an x0 to the right of r, i.e. x0>r.

To prove the second convergence result, we have to show that:

xn is decreasing, i.e. xn<...<x3<x2<x1<x0

every approximation will be to the right of r, i.e. xn≥r for n≥1

a decreasing sequence bounded from below has to converge to s≥r

if xn→s then s is a root

s is actually r (the root is unique)

1. xn is decreasing

To show that xn is decreasing, we have to show that xn+1<xn, which means we need an equation with xn+1 and xn. So we look at the iterative formula.

We have xn+1=xn-f(xn)f′(xn)

Since f′>0, f(r)=0, and xn>r, we get that f(xn)>0

Also, since f′>0, we get that f′(xn)>0

So f(xn)f′(xn)>0

Rearranging terms, we get xn-xn+1=f(xn)f′(xn)>0

xn-xn+1>0⇒xn>xn+1

In words, xn+1 is obtained by getting xn and taking away a part of it. If you take away a part of the original amount, then the amount you end up with will always be less than the original amount.

2. every approximation will be to the right of r

To show that every approximation will be to the right of r, we have to show that xn≥r for n≥1. To get an equation with xn and r, we can look at the error equation.

We have xn-r=en=f′′(ξn-1)2f′(xn-1)(en-1)2

(en-1)2≥0 since it is a square

We also assumed f′′>0 & f′>0

So we have en=f′′(ξn-1)2f′(xn-1)(en-1)2≥0

But en also equals xn-r, so xn-r≥0⇒xn≥r

By assumption, x0>r. From 1., we have that xn is decreasing. (Note that x0≥xn because xn is decreasing.) But if we keep decreasing and xn≥r (xn is still to the right of r), then that must mean all the approximations between x0 and xn have to be to the right of r

3. a decreasing sequence bounded from below has to converge to s≥r

If every approximation is to the right of r, then we will never go below (to the left of) r (from 2.)

But we also can't be jumping randomly either because xn is decreasing (from 1.)

So we have to keep moving left but never past r

We have to stop at some point either because we're at r or because the amount xn is decreasing by is virtually 0

Note: the second option means that it could stop at a point s before r

4. if xn→s then s is a root

To prove that s is a root of f, we need to show that f(s)=0, which means we need an equation with f(xn). So we look at the iterative formula.

We have xn+1=xn-f(xn)f′(xn)

Since f and f′ are continuous, limn→∞xn+1=limn→∞xn-f(xn)f′(xn) (we can take limits of both sides because they're continuous functions)

Since we have xn→s, limn→∞xn+1=s and limn→∞xn=s

So we end up with s=s-f(s)f′(s) (we can also move limits inside functions since those functions are continuous)

⇒0=-f(s)f′(s)⇒f(s)=0, which means s is a root

5.s is actually r

What we want to show is that s=r. We can do this by showing s-r=0, which means we need an equation with s-r.

Using the Mean Value Theorem (described later in the story) on the interval between s and r we get:

f(s)=f(r)+f′(α)(s-r)

for some α between s and r

From 4., s is a root, so f(s)=0. Also, f(r) is a root by definition

0=0+f′(α)(s-r)

f′(α)≠0 since f′>0, so we can divide both sides by f′(α)

s-r=0⇒s=r

Note: this proves that Newton's method converges to a unique root

We proved that Newton's method converges on the interval [r,∞) when f′′>0 and f′>0. We can take a similar approach to prove that Newton's method converges:

on the interval [r,∞) when f′′<0 and f′<0

on the interval (-∞,r] when f′′>0 and f′<0

on the interval (-∞,r] when f′′<0 and f′>0

The story thus far: Newton's method approximates a root by repeatedly drawing tangent lines until the root of the tangent line matches the root of the function. It has to do more function evaluations per iteration than the bisection method, but it converges faster than the bisection method.

Speed of Newton's method: 2 function evaluations per iteration

Convergence of Newton's method: en+1=f′′(ξn)2f′(xn)(en)2 for ξn between xn and r

Iterative formula for Newton's method: xn+1=xn-f(xn)f′(xn)

Solving Systems with 2 variables:

Suppose we wanted to solve a system like this: {f1(x1,x2)=0f2(x1,x2)=0 where (r1,r2) is the root

Using Taylor series, we can represent f1 as:

f1(r1,r2)≈f1(x(0)1,r2)+∂f1∂x1(x(0)1,r2)(r1-x(0)1)

≈f1(x(0)1,x(0)2)+∂f1∂x2(x(0)1,x(0)2)(r2-x(0)2)+∂f1∂x1(x(0)1,x(0)2)(r1-x(0)1)

And similarly for f2:

f2(r1,r2)≈f2(x(0)1,x(0)2)+∂f2∂x1(x(0)1,x(0)2)(r1-x(0)1)+∂f2∂x2(x(0)1,x(0)2)(r2-x(0)2)

Then, with our initial guess (x(0)1,x(0)2), we would solve it by doing:

[x(1)1x(1)2]=[x(0)1x(0)2]-[∂f1∂x1(x(0)1,x(0)2)∂f1∂x2(x(0)1,x(0)2)∂f2∂x1(x(0)1,x(0)2)∂f2∂x2(x(0)1,x(0)2)]-1[f1(x(0)1,x(0)2)f2(x(0)1,x(0)2)]

Oh See Can't You See

So Newton's method converges faster than the bisection method does, but it requires more function evaluations. Is there a method that converges faster than the bisection method and requires less function evaluations than Newton's method does?

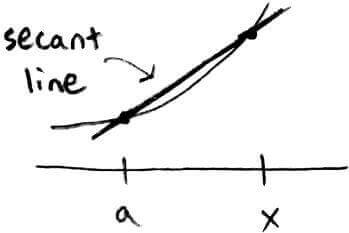

Newton's method looked at tangent lines. Let's look instead at secant lines.

The secant method is a variation of Newton's method. It still uses the iterative formula xn+1=xn-f(xn)f′(xn), but the difference is that f′(xn) will be the slope of the secant line, not the tangent line.

The derivative can be calculated by f′(a)=limx→af(x)-f(a)x-a. Without the limit, it can be approximated by f′(a)≈f(x)-f(a)x-a.

One way to get f′(xn) is to consider the slope of the secant line through xn-1 and xn. Then we have f′(xn)≈f(xn)-f(xn-1)xn-xn-1. This leads to the iterative formula for the secant method:

xn+1=xn-f(xn)f(xn)-f(xn-1)xn-xn-1

xn+1=xn-f(xn)(xn-xn-1)f(xn)-f(xn-1)

The idea is that we're getting our next approximation by drawing the secant line through our previous two approximations.

Notice how using the previous two approximations means we only need 1 function evaluation per iteration.

| all function evaluations needed | new function evaluations needed | |

| x0 | f(x0) | f(x0) |

| x1 | f(x1) | f(x1) |

| x2 | f(x0),f(x1) | none |

| x3 | f(x1),f(x2) | f(x2) |

| x4 | f(x2),f(x3) | f(x3) |

Error of Secant Method

To look at the error, we can start by rewriting the iterative formula:

xn+1=xn-f(xn)(xn-xn-1)f(xn)-f(xn-1)

xn+1=xn(f(xn)-f(xn-1))f(xn)-f(xn-1)-xnf(xn)-xn-1f(xn)f(xn)-f(xn-1)

xn+1=xnf(xn)-xnf(xn-1)-xnf(xn)+xn-1f(xn)f(xn)-f(xn-1)

xn+1=xn-1f(xn)-xnf(xn-1)f(xn)-f(xn-1)

Now we can look at en+1=xn+1-r:

xn+1-r=xn-1f(xn)-xnf(xn-1)f(xn)-f(xn-1)-r

xn+1-r=xn-1f(xn)-xnf(xn-1)f(xn)-f(xn-1)-r(f(xn)-f(xn-1))f(xn)-f(xn-1)

xn+1-r=xn-1f(xn)-xnf(xn-1)-rf(xn)+rf(xn-1)f(xn)-f(xn-1)

Using the fact that en-1=xn-1-r and en=xn-r:

xn+1-r=en-1f(xn)-enf(xn-1)f(xn)-f(xn-1)

xn+1-r=(f(xn)en-f(xn-1)en-1f(xn)-f(xn-1))(en-1en)

by factoring out (en-1en)

Multiplying by 1=xn-xn-1xn-xn-1:

xn+1-r=(xn-xn-1f(xn)-f(xn-1)⋅f(xn)en-f(xn-1)en-1xn-xn-1)en-1en

xn-xn-1f(xn)-f(xn-1) is a constant

Since we're looking at subtracting 2 functions, maybe it would be helpful to write those functions as Taylor series expansions and hope that terms cancel out through subtraction.

Taylor series of f centered at r:

f(xn)=f(r)+f′(r)(xn-r)+f′′(r)2(xn-r)2+...=f(r)+f′(r)en+f′′(r)2(en)2+...

Similarly, f(xn-1)=f(r)+f′(r)en-1+f′′(r)2(en-1)2+...

Subtracting f(xn-1) and f(xn) gives us:

f(xn)-f(xn-1)=f′(r)(en-en-1)+...

Well, en-en-1=(xn-r)-(xn-1-r)=xn-xn-1

So f(xn)-f(xn-1)≈f′(r)(xn-xn-1)

Which means xn-xn-1f(xn)-f(xn-1)≈xn-xn-1f′(r)(xn-xn-1)=1f′(r), which is a constant

f(xn)en-f(xn-1)en-1xn-xn-1 is a constant

f(xn)en=f′(r)+f′′(r)2en+...

f(xn-1)en-1=f′(r)+f′′(r)2en-1+...

Subtracting f(xn-1)en-1 and f(xn)en gives us:

f(xn)en-f(xn-1)en-1=f′′(r)2(en-en-1)+...

Well, en-en-1=(xn-r)-(xn-1-r)=xn-xn-1

So f(xn)en-f(xn-1)en-1≈f′′(r)2(xn-xn-1)

Which means f(xn)en-f(xn-1)en-1xn-xn-1≈f′′(r)2(xn-xn-1)xn-xn-1=f′′(r)2, which is a constant

Combining the results above, we get that xn+1-r=en+1≈Cen-1en where C=f′′(r)2f′(r). If we want to compare this error to the error in Newton's method (which was en+1=c(en)2), then we should rewrite en+1=Cen-1en in the form of A(en)α and find out what α is. If α<2, then the secant method converges slower than Newton's method. If α>2, then the secant method converges faster than Newton's method.

Suppose en+1=Cen-1en=A(en)α.

From en+1=A(en)α, we get:

en=A(en-1)α

Rearranging terms, we get:

en-1=(en)1αA1α

Plugging en-1 and en into Cen-1en=A(en)α:

Cen-1en=C(en)1αA1αA(en-1)α

Using en=A(en-1)α, we can find (en-1)α:

en=A(en-1)α⇒(en-1)α=enA

Now we substitute enA for (en-1)α in C(en)1αA1αA(en-1)α:

C(en)1αA1αA(enA)

C(en)1αA1αen

CA1α(en)1+1α

So Cen-1en=CA1α(en)1+1α=A(en)α, which means α=1+1α

α=1+1α⇒α2=α+1⇒α2-α-1=0

Using the quadratic formula, we get:

α=1+√52≈1.62

So xn+1-r=en+1=A(en)1.62, which means the secant method converges slower than Newton's method.

Speed of Secant Method

Recall that the secant method only requires 1 function evaluation per iteration, and that Newton's method requires 2 function evaluations per iteration. This means that we can perform 2 steps of the secant method in the time it takes to perform 1 step of Newton's method. So to compare them on a level playing field, we can compare the error when performing 2 steps of the secant method vs. the error in performing 1 step of Newton's method.

For the secant method:

| xn+1 | |en+1|≈C|en|1.62 |

| xn+2 | |en+2|≈C|en+1|1.62≈C(C|en|1.62)1.62≈C1.62|en|1.622≈C|en|2.62 |

Comparing C|en|2.62 to Newton's c|en|2, we can see that the secant method can be considered faster.

The story thus far: The secant method is a variation of Newton's method where we use secant lines instead of tangent lines to approximate roots. It requires fewer function evaluations per iteration than Newton's method, but it may be faster or slower than Newton's method depending on how we study it.

Speed of secant method: 1 function evaluation per iteration

Convergence of secant method: en+1=f′′(r)2f′(r)en-1en

Iterative formula for secant method: xn+1=xn-f(xn)(xn-xn-1)f(xn)-f(xn-1)

Solving Nonlinear Equations: Fixed Point Method

Switching gears, let's look at ways to solve nonlinear equations without finding roots.

A Fixed Point of View

With Newton's method, we had: xn+1=xn-f(xn)f′(xn). Well, we could consider the right hand side as just one big function of xn, call it F(xn). Then we have xn+1=F(xn). So we plug in xn and get out xn+1. Let's suppose that we do this enough times so that xn→s. Then:

limn→∞xn+1=limn→∞F(xn)

s=F(limn→∞xn) (since F is continuous)

s=F(s)

This is saying that if we plug in a value, we get back that same value. This leads to another way of solving nonlinear equations: solving x=F(x) (as opposed to solving f(x)=0).

The points x that satisfy F(x)=x are called fixed points. The idea is that if we apply F to x and we get back the same x, then that x is not changing, i.e. fixed.

cosx=x

root finding: f(x)=cosx-x

fixed point: F(x)=cosx

x2=2

root finding: f(x)=x2-2

fixed point: x2-2+x⏟F(x)=x

fixed point: 2x⏟F(x)=x

fixed point: x2+1x⏟F(x)*=x

* this comes from Newton's method: x-x2-22x

There are many options for solving nonlinear equations using the fixed point method. As long as the equation is of the form "x equals a function of x".

For this method to work, we need F to be contractive. F is contractive if there exists a positive λ<1 such that |F(x)-F(y)|≤λ|x-y| for all x,y. It's "contractive" because the distance between x and y (represented by |x-y|) is getting smaller after applying F (because λ<1).

Note: If F is contractive, then F is continuous.

Fixed Point Theorem: Let F:[a,b]→[a,b] be a contractive map. Then F has a unique fixed point in [a,b]. Also, xn+1=F(xn) with x0∈[a,b] will converge to that fixed point.

Suppose x and y are fixed points in [a,b]. We want to show that x=y

|F(x)-F(y)|≤λ|x-y|

|x-y|≤λ|x-y| (since x is a fixed point, F(x)=x)

There are 2 cases: either |x-y|≠0 or |x-y|=0

Suppose |x-y|≠0. Then we can divide both sides by |x-y| to get 1≤λ, which is a contradiction because λ<1

This means that |x-y|=0, which means that x=y

We want to show that xn→p for some fixed point p

Rewrite xn as x0+(x1-x0)+(x2-x1)+...+(xn-xn-1):

xn=x0+(x1-x0)+(x2-x1)+...+(xn-xn-1)

xn=x0+∞∑n=1(xn-xn-1)

Showing that xn converges is the same as showing that ∞∑n=1(xn-xn-1) converges, so let's look at that sum

Showing that ∞∑n=1(xn-xn-1) converges is the same as showing that ∞∑n=1|xn-xn-1| converges. We can do that by repeatedly applying xn+1=F(xn) and |F(x)-F(y)|≤λ|x-y|

|xn-xn-1|=|F(xn-1)-F(xn-2)|

|F(xn-1)-F(xn-2)|≤λ|xn-1-xn-2|

=λ(F(xn-2)-F(xn-3))

≤λ2|xn-2-xn-3|

≤...≤λn-1|x1-x0|

So ∞∑n=1|xn-xn-1|≤∞∑n=1λn-1|x1-x0|

∞∑n=1λn-1|x1-x0|=|x1-x0|∞∑n=1λn-1

=|x1-x0|∞∑n=0λn

=|x1-x0|11-λ*, which is a number

So xn converges. Let's say it converges to p. At the beginning of this section, we showed that s=F(s) if xn→s. Here xn→p so p=F(p), i.e. xn converges to the fixed point.

*This comes from the closed form of a geometric series. Note that λ<1.

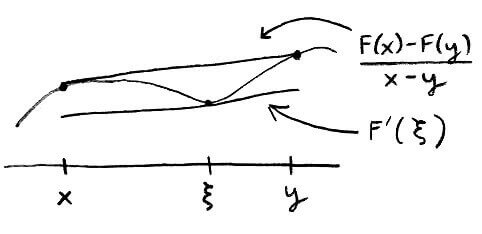

So how do we know what to use for λ? It turns out that we can answer this by looking at the Mean Value Theorem, which says that if f is continuous on [a,b] and differentiable on (a,b), then there exists a point c between a and b such that f′(c)=f(b)-f(a)b-a.

For our situation, we can adjust that equation a little bit to get:

F′(ξ)=F(x)-F(y)x-y

F(x)-F(y)=F′(ξ)(x-y)

Notice how similar this is to the contractive property: |F(x)-F(y)|≤λ|x-y|. This means we can use F′(ξ) for λ.

|F(x)-F(y)|≤(maxz∈[a,b]|F′(z)|)*|x-y|

*If this is less than 1, then * serves as λ.

Also, maxz∈[a,b]|F′(z)|<1⇔f is a contractive map.

This is the graphical interpretation of looking at fixed points. Notice that the point is on the line y=x and y=F(x). So x=F(x).

Convergence of the Fixed Point Method

Let's suppose the fixed point iterations converge to s. To look at the convergence, let's look at how the error compares to the error of Newton's method.

So let's start with en+1=xn+1-s. From the formula xn+1=F(xn) and the definition of fixed point, we get:

xn+1-s

F(xn)-F(s)

To introduce xn-s=en, we can look at the Taylor series expansion of F(xn):

F(xn)-F(s)

F(s)+F′(s)(xn-s)+F′′(s)2(xn-s)2+...-F(s)

F′(s)(xn-s)+F′′(s)2(xn-s)2+...

F′(s)en+F′′(s)2(en)2+...

So en+1=F′(s)(en)+F′′(s)2(en)2+... Notice that the error depends on the differentiability of F.

F′(s)≠0⇒en+1≈F′(s)en

F′(s)=0 and F′′(s)≠0⇒en+1≈F′′(s)2(en)2

F′(s)=F′′(s)=0 and F′′′(s)≠0⇒en+1≈F′′′(s)3!(en)3

Recall that the error for Newton's method is en+1=c(en)2 where c is a constant.

Fixed point method vs. Newton's method

en+1≈F′(s)en vs. en+1=c(en)2

Fixed point converges slower than Newton's method does

en+1≈F′′(s)2(en)2 vs. en+1=c(en)2

Fixed point converges at the same rate as Newton's method does

en+1≈F′′′(s)3!(en)3 vs. en+1=c(en)2

Fixed point converges faster than Newton's method does

We say a sequence has order of convergence q if |en+1||en|q converges to some nonzero number. (Sometimes the nonzero number is referred to as the "asymptotic error constant".) Higher orders of convergence means the sequence converges faster.

The story thus far: Instead of looking at solving problems of the form f(x)=0, we look at solving problems of the form f(x)=x. Finding an x such that f(x)=x is done with the fixed point method. In order for the fixed point method to converge, F has to be contractive, which means that there exists a positive λ<1 such that |F(x)-F(y)|≤λ|x-y|. From the MVT, we can use maxx|F′(x)| for λ.

Speed of fixed point method: 1 function evaluation per iteration

Convergence of fixed point method: en+1=F′(s)en+F′′(s)2(en)2+...

Iterative formula for fixed point method: xn+1=F(xn)

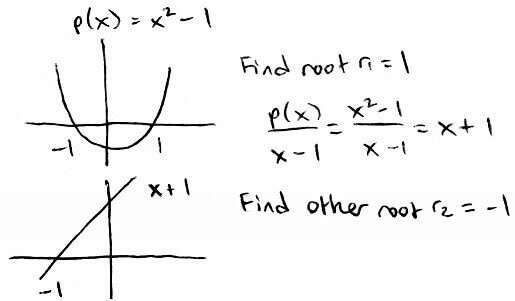

Solving Nonlinear Equations: Polynomial Interpolation

Going back to the root-finding problem, we've been able to find just one root. However, it would be nice to be able to use the algorithms to find all the roots. It turns out that we actually can do this with polynomials because of their special properties.

Polynomial Theorems

p(x)=a0+a1x+a2x2+...+anxn (with an≠0) is a polynomial. The degree of a polynomial is the highest power, which in this case is n, denoted as deg p(x)=n.

The Fundamental Theorem of Algebra says that every nonconstant polynomial has at least one root in the complex field. In fact, an extension of this theorem states something more powerful: if the degree of a polynomial is n, then there are exactly n roots in the complex field. What we would like is to use our algorithms to find all these (real) roots.

When performing operations with polynomials, the degree can only stay the same or go down.

x2+x2=2x2

5x3-3x3=2x3

x3⋅x2=x5 (note the degree of the left-hand side is 5, not 3 or 2)

x6x2=x4

We can divide polynomials just like we can divide numbers. If we divide a polynomial p(x) (which has degree of at least 1) by a linear polynomial (x-s), then the result is a quotient q(x) (which is a polynomial) and a remainder r.

p(x)=(x-s)q(x)+r

Using real numbers, this is like saying 9=2⋅4+1.

The Remainder Theorem says that if we divide a polynomial p(x) by a linear polynomial (x-s), then p(s) is the remainder r.

p(s)=(s-s)q(s)+r

p(s)=0⋅q(s)+r

p(s)=r

In fact, if s is a root, then p(x)=(x-s)q(x) (notice there's no remainder.)

p(s)=0 since s is a root

p(s)=(s-s)q(s)+r

0=0⋅q(s)+r

r=0

A special case of the Remainder Theorem — called the Factor Theorem — combines the two points above: p(s)=0⇔p(x)=(x-s)q(x). In words, if s is a root of p(x), then p(x) can be divided evenly by (x-s), which means p(x)=(x-s)q(x) (and if p(x) can be divided evenly by (x-s), then p(s)=0).

Divide, Rinse, & Repeat (To a Certain Degree)

So we can use the algorithms to find one root of p(x), call it r1, and then divide p(x) by (x-r1) to get p(x)=(x-r1)q(x). Then we can use the algorithms to find the root of q(x), call it r2, and divide q(x) by (x-r2) to get p(x)=(x-r1)(x-r2)u(x). Wait, it seems like we can just keep on dividing forever. There's actually a limit to the number of times we can divide polynomials, and it involves looking at the degrees of the polynomials.

Recall that when we multiply like terms with exponents, we add the exponents. Like x2⋅x3=x5.

If p(x) has degree n, then (x-s)q(x) also needs to have degree n (since they're equal to each other). Well, (x-s) has degree 1, which means q(x) needs to have degree n-1 (notice that gives us 1+(n-1)=n). Also notice that the degree of q(x) is 1 less than the degree of p(x). In a very rough sense, the degree is decreasing by 1 every time we divide.

We can only divide polynomials p(x) by linear polynomials (x-s) as long as p(x) has degree greater than 0. Since the degree is "decreasing by 1 every time we divide", if p(x) has degree n, then we can only divide it n times. The result is that:

p(x)=(x-r1)(x-r2)...(x-rn)an

where an is some constant

Giving us the n roots stated in the extension of the Fundamental Theorem of Algebra. So to find all the roots of p(x) we:

Find one root of p(x)

Remove the root by dividing it out (setting p(x) equal to p(x)x-root)

Repeat

Since the algorithms only handle real roots, we will only find all the real roots and not the complex roots.

Getting the Points

So we've seen that it's possible to find all the roots of polynomials. But what about finding roots for complicated functions that aren't polynomials? It turns out that complicated functions can be replaced or approximated by polynomials.

Let's suppose we had a table of n+1 data points (xi,yi) for i=0,...,n obtained from some complicated function f(x). Then there exists a unique polynomial with degree less than or equal to n that passes through (interpolates) the points. The polynomial interpolating the data points is called the interpolation polynomial. Each xi is called a node.

Suppose p(x),q(x) both interpolate the data points and deg p(x),deg q(x)≤n

Let r(x)=p(x)-q(x) (note that deg r≤n)

Then r(xi)=p(xi)-q(xi) for all i=0,...,n

Since p,q both interpolate the data, p(xi)=q(xi)=yi

So r(xi)=p(xi)-q(xi)=yi-yi=0 for all i=0,...,n

This means r has n+1 roots. But the degree of r is n.

So by the extension of the Fundamental Theorem of Algebra*, r must be a constant polynomial

Since r(xi)=0 for all i=0,...,n, r(x)=0

So r(x)=0=p(x)-q(x)⇒p(x)=q(x)

*The extension of the Fundamental Theorem of Algebra says that nonconstant polynomials with degree n have exactly n roots. Here r has n+1 roots, but the degree of r is n, which means r has more roots than it's degree. This means that r cannot be a nonconstant polynomial, i.e. it must be a constant polynomial.

In the case when n=1, there are 2 points. And there is only one unique line (polynomial of degree 1) passing through 2 points.

The polynomial that interpolates the data points is p(x)=n∑i=0yin∏j=0,j≠ix-xjxi-xj

Let's start with the table of data points

| x | x0 | x1 | ... | xn |

| y | y0 | y1 | ... | yn |

We could represent y by a vector as follows:

y=[y0y1⋮yn]=y0[100⋮0]+y1[010⋮0]+...+yn[00⋮01]

Then we take each vector and make a table for each one. Taking y0[100⋮0] as an example:

| x | x0 | x1 | ... | xn |

| y | 1 | 0 | ... | 0 |

Now we can find* a polynomial of degree less than or equal to n interpolating these points. Let's call the polynomial l0(x)

Doing this for each vector, we get p(x)=y0l0(x)+y1l1(x)+...+ynln(x) (note that deg p≤n)

By the way we set things up, for x=[x0x1⋮xn],p(xi)=n∑j=0yjlj(xi) where lj(xi)={0 if i≠j1 if i=j

This means p(xi)=yi, so p(x)=[y0y1⋮yn]=y

*By the way we set things up, l0(x) has roots at x1,...,xn (and not at x0)

So l0(x)=(x-x1)(x-x2)...(x-xn)q(x) where q(x) is a constant

We can find what the constant is by looking at l0(x0)=1

1=l0(x0)=(x0-x1)(x0-x2)...(x0-xn)q(x)

q(x)=1(x0-x1)(x0-x2)...(x0-xn)

So l0(x)=(x-x1)(x-x2)...(x-xn)(x0-x1)(x0-x2)...(x0-xn)

Generally,

li(x)=(x-x0)...(x-xi-1)(x-xi+1)...(x-xn)(xi-x0)...(xi-xi-1)(xi-xi+1)...(xi-xn)

The polynomial obtained using this formula is called the the Lagrange form of the interpolation polynomial.

Let's suppose we have these data points:

| x | 1 | 2 | 3 |

| y | -3 | 5 | -9 |

Then p(x)=-3((x-2)(x-3)(1-2)(1-3))+5((x-1)(x-3)(2-1)(2-3))-9((x-1)(x-2)(3-1)(3-2))

The idea is that if we plug in x=1, we should get p(1)=-3. We make sure that happens by making sure the numerator is 0 for the second and third terms when we plug in x=1. We also make sure that everything inside the parentheses of the first term cancels out when we plug in x=1.

To easily see uniqueness, let's suppose we have a smaller table:

| x | 1 | 2 |

| y | -3 | 5 |

Using the point-slope formula, the line passing through these 2 points is y+3=5-(-3)2-1(x-1)⇒y=8x-11

Then p(x)=-3(x-21-2)+5(x-12-1)=3(x-2)+5(x-1)=3x-6+5x-5=8x-11

Since we defined a polynomial to be p(x)=a0+a1x+...+anxn it seems natural to find a polynomial of that form that interpolates the data. Meaning we find a0,a1,...,an. It turns out that it's not so easy (nor is it really a good idea).

Since p(xi)=yi=a0+a1xi+...+anxni for i=0,...,n, we're solving a system of n equations with n unknowns. Ax=b

[1x0...xn01x1...xn1⋮⋮⋱⋮1xn...xnn][a0a1⋮an]=[y0y1⋮yn]

A is known as a Vandermonde matrix, which is not well conditioned. This is why it's not a good idea (besides having to solve a matrix, which is time consuming).

Initially, it also seems that this won't work if A is not invertible. But actually we can show that A is invertible by showing the null space of A is only {0}, i.e. Ax=0⇒x={0}.

Ax=0 is the same as having the data (xi,0) for i=0,...,n

Well, the 0 polynomial interpolates the data, has degree ≤n, and is unique.

So there can be no other x=[a0⋮an] that makes Ax=0

So the null space is only {0}

Let's say we have a polynomial that interpolates the data. However, we later find out that there are more data points we now need to consider. (Maybe we missed some the first time? Nah, let's say someone handed us more data.) Our current interpolation polynomial won't work with these new points, so we have to change our polynomial. How hard is it to change the interpolation polynomial to fit these new data points along with the old data points?

| x | x0 | x1 | x2 |

| y | y0 | y1 | y2 |

| x3 |

| y3 |

Let's suppose q(x) was our original polynomial interpolating the old data. What we want is to get a new polynomial p(x)=q(x)+r(x) where r(x) is a polynomial interpolating just the new data. So let's see what r(x) is.

y0=p(x0)=q(x0)+r(x0)

Since q(x) interpolates the data, q(x0)=y0

y0=y0+r(x0)

r(x0)=0

Similarly, r(x1)=r(x2)=0. So r(x)=C(x-x0)(x-x1)(x-x2). Let's find out what C is.

y3=p(x3)=q(x3)+r(x3)

y3=q(x3)+C(x3-x0)(x3-x1)(x3-x2)

C=y3-q(x3)(x3-x0)(x3-x1)(x3-x2)

So p(x)=q(x)+C(x-x0)(x-x1)(x-x2) interpolates all of the data. Polynomials obtained using this method are called the Newton form of the interpolation polynomial.

We can use Newton polynomials to interpolate data in general, not just in situations where we add new data. However, in constructing the polynomial, we act as if we were adding new data.

| x | x0 | ... | xn |

| y | y0 | ... | yn |

Let's find the interpolation polynomial for the data points above.

| x | x0 |

| y | y0 |

So first, we pretend we only have 1 data point (x0,y0). That gives us the constant polynomial a0=y0.

| x | x0 | x1 |

| y | y0 | y1 |

Then we add (x1,y1) to get a0+a1(x-x0).

| x | x0 | x1 | x2 |

| y | y0 | y1 | y2 |

Then we add (x2,y2) to get a0+a1(x-x0)+a2(x-x0)(x-x1).

At the (n+1)st step, we get a0+a1(x-x0)+a2(x-x0)(x-x1)+...+an(x-x0)(x-x1)...(x-xn-1)

=n∑i=0aii-1∏j=0(x-xj)

Note: Each ai isn't equal to yi (except for a0=y0). Finding ai is similar to finding C mentioned above.

Error of Polynomial Interpolation

So given a set of points (xi,f(xi)), it's possible to find a polynomial (of lowest possible degree) that interpolates those points. However, the polynomial is just an approximation, so there's going to be some error. How well does an interpolation polynomial approximate f(x)?

"Interpolation der Runge-Funktion (n=5)" by Cuvwb is licensed under CC BY-SA 4.0

The blue line is the actual function f(x)=11+25x2 (known as the Runge function), and the red line is the degree 5 interpolation polynomial. Notice that while they have the same y-values at x=-5,-3,-1,1,3,5, the y-values differ in between those x-values.

Suppose f(x)∈Cn+1[a,b]* for x∈[a,b]. Suppose p(x) is the interpolation polynomial of degree ≤n. Then:

f(x)-p(x)=f(n+1)(ξx)(n+1)!n∏i=0(x-xi)

for some ξx∈(a,b)

* The (n+1)st derivative of f exists and is continuous on [a,b].

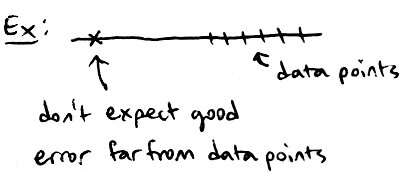

Note that if x is far from the data points xi then |x-xi| is large, and n∏i=0(x-xi) is large, making the error large.

If we have a polynomial interpolating the points on the right, then it's not very likely that it will fit well with the point all the way on the left.

Rolle's Theorem: If f∈C1[a,b] and f(a)=f(b), then there exists a c∈(a,b) such that f′(c)=0

Notice that if a and b are roots of f, then there is a root of f′ between the roots of f. This generalizes for higher-order derivatives. Between any two roots of f′, there is a root of f′′. And so on. This idea is used in the proof of the error.

f(x)-p(x)=f(n+1)(ξx)(n+1)!n∏i=0(x-xi) for some ξx∈(a,b)

Let ϕ(t)=f(t)-p(t)-λw(t) where λ is chosen to be the number that makes ϕ(x)=0 (for a fixed x) and w(t)=n∏i=0(t-xi)

So λ=f(x)-p(x)w(x)

Notice that

ϕ(xi)=f(xi)-p(xi)-λw(xi)

ϕ(xi)=0-0=0

0=f(xi)-p(xi)-0

f(xi)=p(xi)

This means we have ϕ(xi)=0

By the way we constructed it, ϕ has roots at x0,...,xn and x. So it has n+2 roots

Then by Rolle's Theorem, ϕ′ has n+1 roots

Continuing, ϕ′′ has n roots, ϕ′′′ has n-1 roots, ..., ϕ(n+1) has 1 root

This means there exists a ξx∈(a,b) such that ϕ(n+1)(ξx)=0

Well,

ϕ(n+1)(ξx)=f(n+1)(ξx)-p(n+1)(ξx)-λw(n+1)(ξx)

Since p(x) has degree ≤n, taking n+1 derivatives makes p(n+1)=0

So,

ϕ(n+1)(ξx)=f(n+1)(ξx)-λw(n+1)(ξx)

Since w(t) is the product of n+1 linear polynomials, w(t) has degree n+1. Which means when we take n+1 derivatives, it will equal (n+1)(n)...1=(n+1)!

So,

ϕ(n+1)(ξx)=f(n+1)(ξx)-λ(n+1)!

Remember that λ=f(x)-p(x)w(x) and ϕ(n+1)(ξx)=0

So,

0=f(n+1)(ξx)-(f(x)-p(x)w(x))(n+1)!

f(x)-p(x)=f(n+1)(ξx)(n+1)!w(x)

Remember that w(x)=n∏i=0(x-xi)

So,

f(x)-p(x)=f(n+1)(ξx)(n+1)!n∏i=0(x-xi)

There are several ways to get better error. One way is to connect all the data points with straight lines. Then use only the data points we need instead of all the points. This concept is known as piecewise linear interpolation.

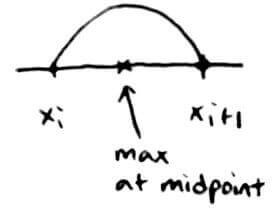

Since we're looking at only 2 data points (n=1), the error of piecewise linear interpolation is:

|f(x)-p(x)|=|f′′(ξx)|2!|x-xi||x-xi+1|

≤12(maxz∈[xi,xi+1]|f′′(z)|)|xi+1-xi|2|xi+1-xi|2

=12(maxz∈[xi,xi+1]|f′′(z)|)(xi+1-xi)24

Ex: Consider f(x)=e2x in the interval [-1,1] with the n+1 data points evenly spaced and x0=-1, xn=1. Let h be the stepsize =xi+1-xi=1-(-1)n=2n. Fix x∈[-1,1], so x∈[xi,xi+1] for some i. Let p be the piecewise linear interpolant. Then the error at x is:

f(x)-p(x)=f′′(ξx)2(x-xi)(x-xi+1) for some ξx∈[xi,xi+1]

Putting an upper bound on the error, we get:

|f(x)-p(x)|≤maxz∈[xi,xi+1]|4e2z|2|(x-xi)(x-xi+1)|

So for x∈[-1,1]:

|f(x)-p(x)|≤maxz∈[-1,1]|4e2z|2|(x+1)(x-1)|

=4e22|(x+1)(x-1)|

We can look at the error of |(x+1)(x-1)| graphically. |(x-xi)(x-xi+1)| is a degree 2 polynomial, so it is a parabola with roots at xi and xi+1.

So |(x-xi)(x-xi+1)|≤(h2)(h2)=h24, which means:

4e22|(x+1)(x-1)|≤4e22⋅h24

=e2h22

Notice that the error goes to 0 as n→∞ or h→0. In words, we can get better error if we use more data points or decrease the spacing between each data point.

Chebyshev Polynomials

Let's say instead of working with a table of data points, we're given the function f(x). It turns out that there's a way to optimally choose our nodes so the error is small. (We're essentially creating our own table of data points.) Determining which nodes to choose involves something called Chebyshev polynomials.

There are 2 definitions of Chebyshev polynomials. The first one is a recursive definition:

{T0(x)=1T1(x)=xTn+1(x)=2xTn(x)-Tn-1(x)

So a Chebyshev polynomial of degree 2 would look like:

T2(x)=2x⋅T1(x)-T0(x)=2x⋅x-1=2x2-1

And one of degree 3:

T3(x)=2x⋅T2(x)-T1(x)=2x⋅(2x2-1)-x=4x3-3x

Note: Tn(x)=a0+a1x+...+anxn where an=2n-1 for n≥1.

For n=2: a2=22-1=21=2 (which appears in the 2x2)

For n=3: a3=23-1=22=4 (which appears in the 4x3)

The second definition of a Chebyshev polynomial is:

Tn(x)=cos(ncos-1(x))

T0(x)=cos(0⋅cos-1(x))=cos(0)=1

T1(x)=cos(1⋅cos-1(x))=cos(cos-1(x))=x

The second definition tells us a lot about the properties of Chebyshev polynomials.

cos(x) ranges from -1 to 1, so |Tn(x)|≤1

Tn(cos(2j-12nπ))=0 for j=1,...,n

— this tells us about the roots of Tn(x)

Tn(cos(jπn))=(-1)j for j=0,...,n

— this tells us about the min and max of Tn(x)

A monic polynomial of degree n has the form xn+an-1xn-1+...+a0

The coefficient in front of the highest degree term is 1

The monic version of the Chebyshev polynomial is Tn(x)2n-1 for n≥1 (this comes from the note mentioned earlier)

That means |Tn(x)2n-1|≤12n-1

Recall that the error of polynomial interpolation is f(n+1)(ξx)(n+1)!n∏i=0(x-xi) for some ξx∈(a,b). We can make the error small by making n∏i=0(x-xi)=(x-x0)...(x-xn) small. Well, (x-x0)...(x-xn) is a monic polynomial, and there's a property of monic polynomials that allows us to put a bound on the error of them.

If p is a monic polynomial of degree n, then maxx∈[-1,1]|p(x)|≥12n-1

To achieve a contradiction, suppose p is a monic polynomial of degree n such that maxx∈[-1,1]|p(x)|<12n-1

Let's consider r(x)=p(x)-Tn(x)2n-1

Recall that Tn(cos(jπn))=(-1)j for j=0,...,n

So at x=cos(jπn), the sign of Tn(x) alternates n+1 times

When Tn(x) is negative, r(x)=p(x)+12n-1 is positive

When Tn(x) is positive, r(x)=p(x)-12n-1 is negative since |p(x)|<12n-1

So the sign of r(x) also alternates n+1 times

By the Intermediate Value Theorem, r(x) has n roots

But r(x) has degree n-1*, which contradicts the extension of the Fundamental Theorem of Algebra

This means r(x) must be a constant polynomial

In fact, since r(x) has roots, r(x)=0, i.e. r(x) is the zero polynomial

So 0=p(x)-Tn(x)2n-1⇒p(x)=Tn(x)2n-1⇒maxx∈[-1,1]|p(x)|=maxx∈[-1,1]Tn(x)2n-1

⇒maxx∈[-1,1]p(x)=12n-1, which is a contradiction

So maxx∈[-1,1]|p(x)|≥12n-1

*p(x) and Tn(x)2n-1 are monic polynomials, which means that the highest degree terms cancel out when doing p(x)-Tn(x)2n-1. After subtraction, the term with the highest degree has degree n-1

Notice that |Tn(x)2n-1|≤12n-1⇒maxx∈[-1,1]|Tn(x)2n-1|=12n-1. Combining this with the result above means that

maxx∈[-1,1]|p(x)|≥maxx∈[-1,1]|Tn(x)2n-1|

In words, the monic degree n Chebyshev polynomial minimizes maxx∈[-1,1]|p(x)| among all monic polynomials p(x) of degree n.

Also notice that |p(x)| (a monic polynomial of degree n) is smallest when p(x)=Tn(x)2n-1. So (x-x0)...(x-xn) (a monic polynomial of degree n+1) is smallest when (x-x0)...(x-xn)=Tn+1(x)2n.

The nodes x0, ..., xn make the left-hand side equal to 0. This means the nodes x0, ..., xn are the roots of the Chebyshev polynomial. This suggests that the minimum error is achieved when we pick nodes to be the roots of the Chebyshev polynomial, i.e. xi=cos(2i+12n+2π) for i=0,...,n.

Newton's Form, Revisited

The process for generating the interpolation polynomial of Newton's form involved generating the interpolation polynomial for 1 data point, and then adding more data points.

| x | x0 |

| f(x) | f(x0) |

Let's say the interpolation polynomial is q0(x)=c0

| x | x0 | x1 |

| f(x) | f(x0) | f(x1) |

q1(x)=q0(x)+c1(x-x0)

| x | x0 | x1 | x2 |

| f(x) | f(x0) | f(x1) | f(x2) |

q2(x)=q1(x)+c2(x-x0)(x-x1)

So we can say that ci is the coefficient of xi for qi(x) of

| x | x0 | ... | xi |

| f(x) | f(x0) | ... | f(xi) |

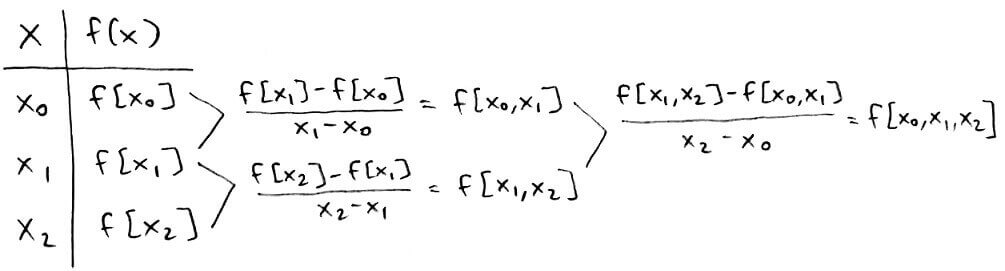

The problem right now is that ci is pretty hard to calculate (refer back to first mention of Newton's form). It turns out that there's an easier way to find out what the coefficients are without having to generate the interpolation polynomial piece by piece.

Since ci depends on the values of f(x) at x0,...,xi, there is a notation for ci:

ci=f[x0,...,xi]

f[x0,...,xi] is called a divided difference of order i.

f[x0,...,xi] is the coefficient of the ith degree term. Also, f[xi]=f(xi).

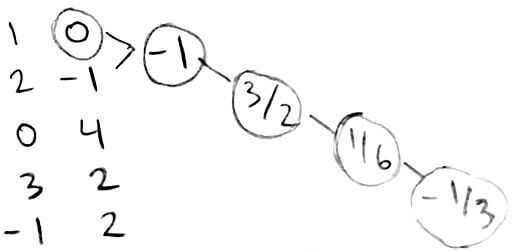

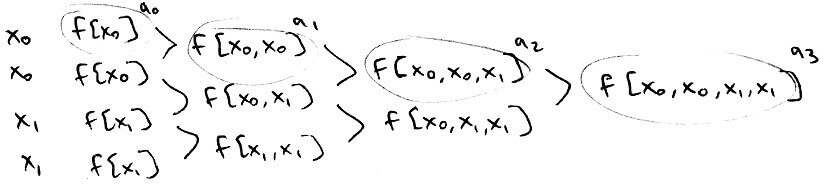

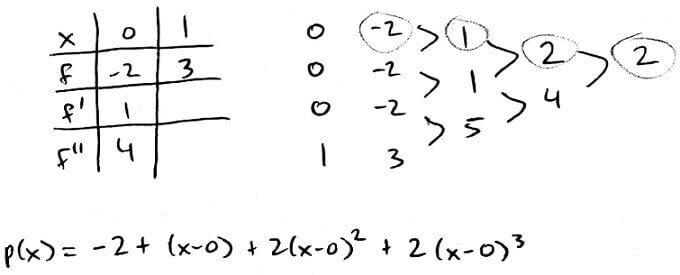

From this, we can see that the coefficients of xi are going to lie along the top-most path.

We can even construct the interpolation polynomial at just some of the points. (From here on, the leftmost column contains the x-values and the column immediately to the right of it contains the y-values.)

The interpolation polynomial for the points 0,1,2 is p(x)=4-4(x-0)+32(x-1)(x-0)

Properties of Divided Differences

Switching the order of the values in the table doesn't change what the divided differences are. Let's say we had a table of values and the interpolation polynomial p(x)=a0+a1x+...+a3x3.

| x | x0 | x1 | x2 | x3 |

| f(x) | f(x0) | f(x1) | f(x2) | f(x3) |

Now let's form another table with the order of the values changed and have an interpolation polynomial q for this table.

| x | x1 | x0 | x3 | x2 |

| f(x) | f(x1) | f(x0) | f(x3) | f(x2) |

Since q interpolates the same points as p, q(x)=a0+a1x+...+a3x3=p(x). This means the divided differences of q are the same as the divided differences of p. So f[any permutation of x0,...,xn]=f[x0,...,xn].

Earlier, we said that the coefficients lie along the top-most path. However, because of this result about switching order, we can go along any path and still get the interpolation polynomial.

Here, the interpolation polynomial is p(x)=0-1(x-1)+32(x-1)(x-2)+16(x-1)(x-2)(x-0)-13(x-1)(x-2)(x-0)(x-3)

This is more easily seen after rearranging the table:

We can also look at the error of polynomial interpolation in terms of divided differences.

Suppose we have an interpolation polynomial p interpolating the data points (xi,f(xi)) for i=0,...,n. Now, let's add a new data point (t,f(t)) and look at the error at that point. To interpolate that new point, we would do

p(x)+f[x0,...,xn,t]n∏i=0(t-xi).

(Therefore, we have f(x)=p(x)+f[x0,...,xn,t]n∏i=0(x-xi).)

At x=t,

f(t)=p(t)+f[x0,...,xn,t]n∏i=0(t-xi).

f(t)-p(t)=f[x0,...,xn,t]n∏i=0(t-xi).

Recall that the error of polynomial interpolation is (at x=t)

f(t)-p(t)=f(n+1)(ξt)(n+1)!n∏i=0(t-xi)

Comparing the two equations, we see that

f[x0,...,xn,t]=f(n+1)(ξt)(n+1)!

The story thus far: The bisection method, Newton's method, secant method, and fixed-point method allows us to find one root of a function. But we're often interested in finding all the roots of a function. For complicated functions, it's a bit tricky to do so, but it is possible for polynomials. This is convenient because we can approximate complicated functions with polynomials. Two methods for doing this are finding the Lagrange form and Newton form of the interpolation polynomial. Of course, it is only an approximation, so there will be some error. But we can reduce the error by using Chebyshev polynomials.

Lagrange form: p(x)=n∑i=0f(xi)n∏j=0,j≠ix-xjxi-xj

Newton form: p(x)=f[x0]+n∑i=1(f[x0,...,xi]i-1∏j=0(x-xj))

Error of polynomial interpolation: f(x)-p(x)=f(n+1)(ξx)(n+1)!n∏i=0(x-xi) for some ξx∈(a,b)

Hermite Interpolation

It's possible that we're given more information about our data points than just their values. We could know something about their first derivatives, second derivatives, and so on. Finding the interpolation polynomial for these situations is called Hermite interpolation.

Hermite interpolation will only work if enough information is given. Formally, for x0,...,xn, we need to be given (along with their values f(xi)) f(j)(xi) for j=0,...,ki-1. For example, we could do Hermite interpolation for a table that looks like this:

| x0 | x1 | x2 | |

| f(x) | f(x0) | f(x1) | f(x2) |

| f′(x) | f′(x1) | f′(x2) | |

| f′′(x) | f′′(x1) |

Here, k0=1, k1=3, k2=2

ki is the number of information given for each xi

And we can't do Hermite interpolation for a table that looks like this:

| x0 | x1 | x2 | |

| f(x) | f(x0) | f(x1) | f(x2) |

| f′(x) | f′(x0) | ||

| f′′(x) | f′′(x1) | f′′(x2) |

because we have second derivatives, but the first derivatives are skipped.

We can't have gaps in the table that skip information about lower derivatives.

For Hermite interpolation, there exists a unique polynomial of degree ≤m that interpolates the data where m=(total amount of information given)-1=n∑i=0ki-1

Let p(x)=a0+a1x+...+amxm

We want to show that it is possible to find unique a0,...,am that satisfies all the given information

We can represent that equation with a linear system A→u=→c where A=[1x0x20...xm01x1x21...xm1⋮⋮⋮⋱⋮1xnx2n...xmn], →u=[a0⋮am], and →c contains the information given to us

If A is invertible, then there exists a unique →u, which proves what we want to show

So the goal is to show A is invertible by showing that →u=0 is the only solution to A→u=0

A→u=0 corresponds to finding the coefficients a0,...,am of the polynomial that interpolates the data f(j)(xi)=0, i.e. all values and derivatives are equal to 0

So p(x) has roots of multiplicity ki at xi*

This means p(x)=(x-x0)k0(x-x1)k1...(x-xn)knq(x)

If q≠0, then deg p≥n∑i=0ki=m+1

But then the degree of p would be too high

Therefore, it must be the case that q=0, which means p=0

So the only polynomial interpolating the data f(j)(xi)=0 is the zero polynomial

Which means →u=0 is the only solution to A→u=0

So A is invertible

*Suppose we have, for x0=1, f(1)=f′(1)=f′′(1)=0. In this case, k0=3. So we have (x-1)3.

Well, at x=1, it equals 0, so that's satisfied

Taking the first derivative, we get 3(x-1)2, which also equals 0 at x=1

Taking the second derivative, we get 6(x-1), which also equals 0 at x=1

This is why p has a root of multiplicity ki at xi

Error of Hermite Interpolation

Suppose f∈C2n+2[a,b] and p is the interpolation polynomial of degree ≤2n+1 such that p(xi)=f(xi) and p′(xi)=f′(xi) for i=0,...,n. Then

f(x)-p(x)=f(2n+2)(ξx)(2n+2)!(x-x0)2...(x-xn)2

for some ξx∈(a,b)

Let ϕ(t)=f(t)-p(t)-λw(t) where w(t)=n∏i=0(t-xi)2 and λ is chosen so that ϕ(x)=0

So λ=f(x)-p(x)w(x)

Notice that

ϕ(xi)=f(xi)-p(xi)-λw(xi)

ϕ(xi)=0-0=0

0=f(xi)-p(xi)-0

f(xi)=p(xi)

Also,

ϕ′(xi)=f′(xi)-p′(xi)-λw′(xi)

ϕ′(xi)=0-0=0

0=f′(xi)-p′(xi)-0

f′(xi)=p′(xi)

This means we have ϕ(xi)=0 and ϕ′(xi)=0

By this construction, ϕ has n+2 zeros (at x0,...,xn,x)

By Rolle's Theorem, ϕ′ has n+1 zeros. But ϕ′(xi)=0, which is another n+1 zeros. So ϕ′ has a total of 2n+2 zeros

Generalizing Rolle's Theorem,

ϕ′′ has 2n+1 zeros

ϕ′′′ has 2n zeros

ϕ(4) has 2n-1 zeros

⋮

ϕ(2n+2) has 1 zero, call it ξx∈(a,b)

So ϕ(2n+2)(ξx)=0

Also,

ϕ(2n+2)(ξx)=f(2n+2)(ξx)-p(2n+2)(ξx)-λw(2n+2)(ξx)

Since p has degree ≤2n+1, taking 2n+2 derivatives results in it being 0

0=f(2n+2)(ξx)-0-λw(2n+2)(ξx)

w(t) has degree 2(n+1)=2n+2, so taking 2n+2 derivatives results in it being (2n+2)!

0=f(2n+2)(ξx)-λ(2n+2)!

λ=f(x)-p(x)w(x), so

0=f(2n+2)(ξx)-f(x)-p(x)w(x)(2n+2)!

Moving terms around, we get

f(x)-p(x)=f(2n+2)(ξx)(2n+2)!(x-x0)2...(x-xn)2

Just like for regular polynomial interpolation we could use piecewise interpolation and Chebyshev polynomials to reduce the error. For piecewise interpolation, instead of piecewise linear interpolation, it would be piecewise cubic interpolation because of the extra information given through the derivatives.

The (least possible) degree of the interpolation polynomial is always less than the number of data points given. For piecewise interpolation, we only consider 2 nodes. So the degree of the interpolation polynomial is 1, hence the term piecewise linear interpolation. However, for Hermite interpolation, we also need to take into account the information about the derivatives. For 2 nodes xi, xi+1, we have f(xi), f(xi+1), f′(xi), and f′(xi+1), which is a total of 4 pieces of information. So the degree of the interpolation polynomial is at most 3, hence the term piecewise cubic interpolation.

To actually construct the interpolation polynomial, we follow a process similar to constructing the polynomial using the Newton form (this will actually be considered the Newton form for constructing a Hermite polynomial.) We suppose we have an interpolation polynomial q interpolating f and f′ at x0,...,xn-1 and add new data f and f′ at xn.

So we have p(x)=q(x)+r(x) where q(x) interpolates the old data at x0,...,xn-1 and r(x) interpolates just the new data at xn. This is achieved by doing:

p(x)=q(x)+C(x-x0)2...(x-xn-1)2

But this polynomial only gives us the value of f(xn). We also need the polynomial to give us the value of f′(xn). So we end up with the final interpolation polynomial:

p(x)=q(x)+C(x-x0)2...(x-xn-1)2+D(x-x0)2...(x-xn-1)2(x-xn)

In fact,

p(x)=a0+a1(x-x0)+a2(x-x0)2+a3(x-x0)2(x-x1)+a4(x-x0)2(x-x1)2+...+a2n+1(x-x0)2...(x-xn-1)2+a2n+2(x-x0)2...(x-xn-1)2(x-xn)

To find the values of a0,...,a2n+2, we use divided differences, except we repeat each xi twice (because we have two pieces of information on each xi).

However, notice that f[x0,x0]=f[x0]-f[x0]x0-x0=00. To avoid this, we use a property of divided differences we showed earlier:

f[x0,...,xn]=f(n)(ξ)n!

for some ξ between x0 and xn

So f[x0,x0]=f′(ξ)1! (for some ξ between x0 and x0) =f′(x0).

Another way to see this is lim_(y -> x_0) f[x_0,y] = lim_(y -> x_0) (f[x_0]-f(y))/(x_0-y) = f'(x_0)

Notice that we repeated 0 three times because we had three pieces of information on 0.

Also notice that f[0,0,0] = (1-1)/(0-0) = 0/0. Like we did earlier, we use f[0,0,0] = (f''(xi))/(2!) (for some xi between [0,0]) = (f''(0))/2 = 4/2 = 2

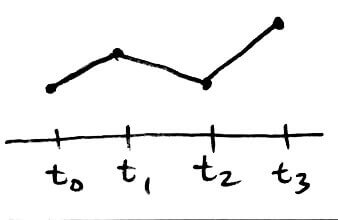

Spline Interpolation

Interpolation polynomials are smooth (have continuous derivatives), but they sometimes have bad error. To get better error, we said that using piecewise interpolation would help, but they are not smooth. Splines try to get the best of both: smoothness and better error.

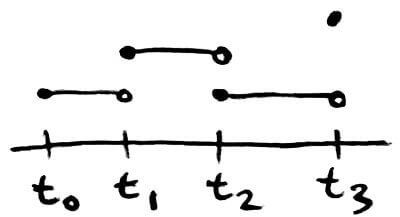

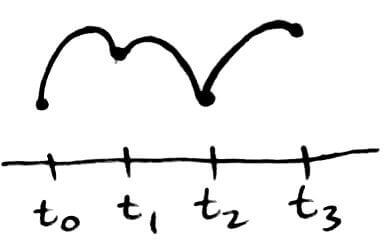

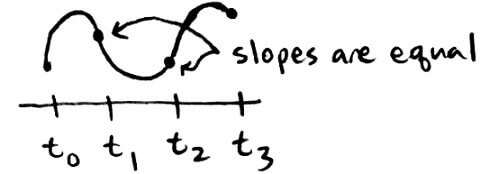

A spline function is a piecewise function of polynomials on subintervals from i = 0, ..., n. The subintervals lie between t_0, ..., t_n, which are called knots. The knots t_1, ..., t_(n-1) are called junctions. A spline function of degree k means that

all polynomial pieces have degree le k

all polynomial pieces have a continuous (k-1)^(st) derivative

S(x) = {(S_0(x), x in [t_0,t_1)),(S_1(x),x in [t_1,t_2)),(vdots,vdots),(S_(n-1)(x),x in [t_(n-1),t_n]):}

Usually we choose the knots t_0, ..., t_n to be the nodes x_0, ..., x_n.

A spline of degree 0 consists of n constant polynomials. It is also known as a step function.

A spline of degree 1 consists of n linear polynomials. It is the classic piecewise linear interpolation polynomial.

Splines of degree 0 usually aren't continuous and splines of degree 1 aren't smooth. So the splines of interest are splines of degree ge 2.

A linear system can either have no solutions, one solution, or infinitely many solutions. In general:

If there are more equations than unknowns, then there are no solutions

{(x+y=7),(2x+2y=8),(3x+3y=6):} we can't find x,y such that all 3 equations are true

If there are an equal number of equations and unknowns, then there is one unique solution

{(2x+3y=5),(5x+8y=13):} has the solution x = 1, y = 1

If there are more unknowns than equations, then there are infinitely many solutions

{(2x+3y+4z=5),(5x+8y+z=13):} has infinitely many solutions (one being x = 1, y = 1, z = 0)

Splines of a certain degree need to have a specific form and need to satisfy certain conditions (e.g. continuity, continuity of first derivatives, ...). Because of this, there are going to be a number of equations and unknowns. What we would like to know is if it is possible to set up a spline of degree k that interpolates the data and has continuous k-1 derivatives.

Splines of degree 2 have this form:

S(x) = {(Ax^2 + Bx + C, x in [t_0,t_1)),(Dx^2 + Ex + F, x in [t_1, t_2)),(vdots, vdots),(Gx^2 + Hx + I, x in [t_(n-1),t_n]):}

From this, we have 3 unknowns per equation. There are a total of n equations from t_0 to t_n. So the total number of unknowns is 3n.

The number of equations comes from the conditions on interpolation and continuity. For each subinterval [t_i,t_(i+1)], we need S(t_i) = y_i and S(t_(i+1)) = y_(i+1). That's 2 equations per subinterval, and there are n subintervals. So the total number of equations is 2n.

We also need continuous 1^(st) derivatives, i.e. S'_i(t_i) = S'_(i+1)(t_i) for i = 1, ..., n-1. So that's another n-1 equations.

The total number of unknowns is 3n and the total number of equations is 2n + n-1 = 3n-1. There are more unknowns than equations, so it is possible to set up a quadratic spline that satisfies interpolation and continuity. More formally, quadratic splines interpolate and are C^1.

Splines of degree 3 have this form:

S(x) = {(Ax^3 + Bx^2 + Cx + D, x in [t_0,t_1)),(vdots, vdots),(Ex^3 + Fx^2 + Gx + H, x in [t_(n-1),t_n]):}

From this, we have 4 unknowns per equation, and n equations total. So the total number of unknowns is 4n.

For the number of equations, the same reasoning for the degree 2 case applies to the degree 3 case. The only difference is we now need to consider the continuity of 2^(nd) derivatives, which is another n-1 equations (S''_i(t_i) = S''_(i+1)(t_i) for i = 1, ..., n-1).

So there are more unknowns (4n) than equations (4n-2), which means it is possible to set up a cubic spline. More formally, cubic splines interpolate and are C^2.

Cubic splines are most often used in practice.

There are 2 more unknowns than equations. This means we can find 2 more equations and still have a cubic spline. The 2 equations that are most often used are S''(t_0) = S''(t_n) = 0. Cubic splines with this condition are known as natural cubic splines.

Existence and Uniqueness of Natural Cubic Splines

Let h_i = t_(i+1)-t_i and S''_i(t_i) = z_i

Let S''_i(x) be the line satisfying S''_i(t_i) = z_i and S_i''(t_(i+1)) = z_(i+1)

Therefore,

S''_i(x) = z_i/h_i (t_(i+1)-x) + z_(i+1)/h_i (x-t_i)

Integrating S''_i(x) twice gives us:

S_i(x) = z_i/(6h_i) (t_(i+1)-x)^3 + z_(i+1)/(6h_i) (x-t_i)^3 + C(x-t_i) + D(t_(i+1)-x)

where C and D are integration constants

Using S_i(t_i) = y_i and S_i(t_(i+1)) = y_(i+1), we can find C and D:

C = y_(i+1)/h_i - (z_(i+1)h_i)/6

D = y_i/h_i - (z_ih_i)/6

To find z_i, we can use S'_i(t_i) = S'_(i-1)(t_i)

S'_i(t_i) = (-h_i)/3z_i - h_i/6z_(i+1) - y_i/h_i + y_(i+1)/h_i

S'_(i-1)(t_i) = h_(i-1)/6z_(i-1)+h_(i-1)/3z_i - y_(i-1)/h_(i-1) + y_i/h_(i-1)

(-h_i)/3z_i - h_i/6z_(i+1) - y_i/h_i + y_(i+1)/h_i = h_(i-1)/6z_(i-1)+h_(i-1)/3z_i - y_(i-1)/h_(i-1) + y_i/h_(i-1)

h_(i-1)z_(i-1) + 2(h_i+h_(i-1))z_i + h_iz_(i+1) = 6/h_i(y_(i+1)-y_i)-6/(h_(i-1))(y_i-y_(i-1))

With z_0 = z_n = 0 (making it a natural cubic spline), this is a linear system of the form:

[[u_1,h_1,,,,],[h_1,u_2,h_2,,,],[,h_2,u_3,h_3,,],[,,ddots,ddots,ddots,],[,,,h_(n-3),u_(n-2),h_(n-2)],[,,,,h_(n-2),u_(n-1)]] [[z_1],[z_2],[z_3],[vdots],[z_(n-2)],[z_(n-1)]] = [[b_1],[b_2],[b_3],[vdots],[b_(n-2)],[b_(n-1)]]

where

u_i = 2(h_i+h_(i-1))

b_i = 6/h_i(y_(i+1)-y_i)-6/h_(i-1)(y_i-y_(i-1))

The matrix is positive definite and tridiagonal, which means it is invertible.

Natural cubic splines are the smoothest possible interpolating function. Let a = t_0 lt t_1 lt ... lt t_n = b be the nodes. Let f in C^2[a,b]. Let S be the natural cubic spline. Then int_a^b(S''(x))^2dx le int_a^b (f''(x))^2dx

Let g = f-S

Then g(t_i) = f(t_i)-S(t_i) = 0 for all i

int_a^b(f''(x))^2dx

= int_a^b(g''(x)+S''(x))^2dx

= int_a^b(g''(x))^2dx + int_a^b(S''(x))^2 + int_a^b2g''(x)S''(x)dx

Since (g''(x))^2 is ge 0, int_a^b(g''(x))^2dx ge 0

So we need to show that int_a^b2g''(x)S''(x)dx ge 0

int_a^bg''(x)S''(x)dx

= sum_(i=1)^n int_(t_(i-1))^(t_i)g''(x)S''(x)dx (since S is a spline)

= sum_(i=1)^n g'(x)S''(x)]_(t_(i-1))^(t_i)-int_(t_(i-1))^(t_i) g'(x)S'''(x)dx (integration by parts)

sum_(i=1)^n g'(x)S''(x)]_(t_(i-1))^(t_i) is a telescoping series, so that equals g'(t_n)S''(t_n)-g'(t_0)S''(t_0)

S'''(x) is a constant, call it c_i, since S has degree le 3

Altogether, we have: